Big Blue gets Cool Blue

IT PRO takes a look at IBM's Cool Blue project, one of several attempts in the industry to tackle the growing problem of heat generation and power consumption.

There can be few IT and facility management executives who are not concerned about the cost of running their data centres. Power costs have risen significantly, while demand for IT has shown no signs of slowing down. In the US, we have even had instances of data centres being told by their local utility company that there is no more power available. In California, businesses have been dealing with rolling brownouts for some time now, and the problem is spreading across the country.

To add to the pressure on the IT department and its thirst for electricity, Luiz André Barroso, a distinguished engineer at Google, recently said: "If performance per watt is to remain constant over the next few years, power costs could easily overtake hardware costs, possibly by a large margin". With IDC also estimating that it would cost almost $400,000 a year to power a data centre of 1,000 volume servers, power, not hardware, has become the real problem.
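
To put the IDC estimate in per-server terms, the short Python sketch below simply divides the annual bill by the number of servers. It assumes the cost is spread evenly across all 1,000 machines and uses the $400,000 upper bound; the constant and function names are illustrative and do not come from IDC.

```python
# Figures from the IDC estimate quoted above. Splitting the bill per server
# assumes the cost is shared evenly across every machine.
ANNUAL_POWER_COST_USD = 400_000  # "almost $400,000" a year (upper bound)
SERVER_COUNT = 1_000             # a data centre of 1,000 volume servers


def annual_cost_per_server(total_cost: float, servers: int) -> float:
    """Average yearly power bill attributable to a single server."""
    if servers <= 0:
        raise ValueError("servers must be positive")
    return total_cost / servers


per_server = annual_cost_per_server(ANNUAL_POWER_COST_USD, SERVER_COUNT)
print(f"About ${per_server:,.0f} per server per year")
```

On those assumptions the script prints "About $400 per server per year".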

Data centre overload

As we cram our data centres with switches, servers, storage systems and other hardware, we not only need power to drive it but also need to cool it, which means even more power consumption overall. Processor vendors are working hard to reduce the amount of power their chips use: the next generation of quad-core processors will consume the same amount of power as the current dual-core processors. The same cannot be said for other components, and with networks and storage constantly growing, data centres are getting hotter and hotter.

IBM claims this is causing a change in how companies view IT. Until now, IT has been seen as a strategic investment, one that is required for operational efficiency and competitive advantage. With the high costs of power and infrastructure, IBM sees Facilities Management (FM) taking control of how IT behaves by placing controls on power and therefore costs.

This is all under a project IBM calls Cool Blue. At present, Cool Blue consists of a piece of hardware that contains a small thermometer and needs to be built onto the motherboard of the computer. It is controlled through an interface called Power Executive. Between them, they monitor the amount of power that is being consumed by the device - it could be a server, a switch, a hub - anything in your data centre.

The Power Executive interface gives the user an accurate reading of how much power the device is actually using. From there, you can map a particular device's power usage over time. Once you have determined its maximum and minimum power requirements, you can write rules to prevent it from exceeding that maximum.
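
The article does not describe Power Executive's programming interface, so the following Python sketch is purely hypothetical: `PowerProfile`, `build_profile` and `cap_request` are invented names, and the readings are made-up sample data. It shows the general idea of summarising readings taken over time into a minimum, maximum and average, then applying a simple rule that refuses to let a device draw more than its observed maximum.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class PowerProfile:
    """Summary of one device's observed power draw, in watts (hypothetical type)."""
    minimum: float
    maximum: float
    average: float


def build_profile(readings_watts: List[float]) -> PowerProfile:
    """Summarise power readings sampled over time into min, max and average."""
    if not readings_watts:
        raise ValueError("need at least one reading")
    return PowerProfile(
        minimum=min(readings_watts),
        maximum=max(readings_watts),
        average=mean(readings_watts),
    )


def cap_request(requested_watts: float, profile: PowerProfile) -> float:
    """Hypothetical rule: never allow a draw above the observed maximum."""
    return min(requested_watts, profile.maximum)


# Made-up readings for one server, e.g. one sample per hour.
readings = [212.0, 230.5, 251.0, 244.0, 219.5]
profile = build_profile(readings)
print(profile)
print(cap_request(275.0, profile))  # above the maximum, so capped
print(cap_request(225.0, profile))  # within the maximum, so unchanged
```

Here a request for 275 W is cut back to 251 W, the highest reading seen, while a 225 W request passes through unchanged.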


Asset management

One of the areas where IBM believes this will be useful is in deciding on the best use of assets. For example, should you be trying to get processor usage up to an average of 75 per cent? How much power does a server draw at a given level of processor utilisation? One thing that is difficult to understand today is the relationship between processor, I/O, disk, memory and power usage. IBM argues that Power Executive will allow both IT managers and FM to get a real understanding of that relationship.
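
One common first-order way to reason about the processor/power question is a linear model, in which a server draws a fixed idle power plus a share of the gap to its peak power in proportion to CPU utilisation. This is an assumption for illustration, not something IBM states: the 150 W idle and 300 W peak figures are hypothetical, and real machines also spend power on I/O, disk and memory, which this model ignores. Measured readings, of the kind Power Executive is meant to provide, would replace the guessed parameters.

```python
def estimated_power_watts(utilisation: float, idle_watts: float, peak_watts: float) -> float:
    """Linear model: power rises from idle to peak with CPU utilisation (0.0 to 1.0).

    Assumption for illustration only; it ignores I/O, disk and memory load.
    """
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    return idle_watts + (peak_watts - idle_watts) * utilisation


# Hypothetical server: 150 W when idle, 300 W at full load.
for u in (0.10, 0.50, 0.75):
    print(f"{u:.0%} busy -> {estimated_power_watts(u, 150.0, 300.0):.1f} W")
```

With these made-up figures the loop prints 165.0 W, 225.0 W and 262.5 W for 10, 50 and 75 per cent utilisation.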

This suggests that we may have to rethink the approach of the last few years, because virtualisation and "sweating" assets might not be the best solution. Virtualisation is still part of the solution with Cool Blue, because it allows you to move an application or server instance between physical servers. Sweating assets, however, might use more power than running multiple servers at low utilisation.

The key area for this is blade servers. Here the received wisdom is to create virtual instances of applications and simply move them onto a blade to make the best use of resources. Cool Blue adds another variable to the mix: power consumption.
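
As a rough illustration of power becoming an extra placement variable, the Python sketch below picks a blade for a new virtual instance by checking each blade's power headroom (its cap minus its current draw) and choosing the one with the most room. The greedy "most headroom first" rule, the `Blade` class and all the wattages are hypothetical; IBM has not described how Cool Blue would make this decision, and a real scheduler would weigh CPU, memory and network alongside power.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Blade:
    """A blade server with a hypothetical power cap and a measured draw, in watts."""
    name: str
    cap_watts: float
    current_watts: float

    @property
    def headroom(self) -> float:
        # Power still available before the blade hits its cap.
        return self.cap_watts - self.current_watts


def place_instance(blades: List[Blade], extra_watts: float) -> Optional[Blade]:
    """Greedy rule: put the new load on the blade with the most power headroom."""
    candidates = [b for b in blades if b.headroom >= extra_watts]
    if not candidates:
        return None  # no blade can take the load without breaching its cap
    best = max(candidates, key=lambda b: b.headroom)
    best.current_watts += extra_watts  # record the extra draw on the chosen blade
    return best


blades = [
    Blade("blade-1", cap_watts=300.0, current_watts=260.0),
    Blade("blade-2", cap_watts=300.0, current_watts=190.0),
    Blade("blade-3", cap_watts=250.0, current_watts=200.0),
]
chosen = place_instance(blades, 60.0)
print(chosen.name if chosen else "no capacity")
print(place_instance(blades, 100.0))
```

The first 60 W instance lands on blade-2, which had 110 W of headroom; after that no blade has 100 W spare, so the second call returns `None`.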

One obvious concern about using Power Executive to "cap" the power usage of a server is who will be doing it. IBM is taking control away from the IT department and giving it to FM, a move that few would see as a good one. However, while IT has failed to get consistent representation on the main board, FM has always been represented. With power costs and green taxes a board-level issue, this is a move that will resonate with those who control the purse strings.

IBM admits that it is a long way from getting other technology companies to buy into the hardware and so widen the use of Power Executive across the entire data centre. That isn't going to stop IBM from spreading Cool Blue throughout all of its own hardware over the next refresh cycles, according to Kwasi Asare, brand manager for Cool Blue at IBM.

What remains to be seen is how effective Power Executive will be in managing power usage. IBM has no figures at present, and no customer studies are likely to be available to validate the savings or explain how the change of server control was handled.

Cooling techniques

Another part of the Cool Blue initiative is the Rear Door Heat eXchanger (see picture). This is a move back to old and trusted technology: using water to cool the data centre. IBM claims that the water is recycled through the data centre with no loss and no drain on other water resources. There is also a project looking at how to use seawater in cooling systems, but nothing concrete as yet. The challenge there is removing the salt before it corrodes the pipes.

All of this is part of the more complex problem of reducing overall heat in the data centre, which includes building hot and cold aisles. IBM admits that there is no easy solution to reducing heat. However, it does cite Georgia Tech, which installed 12 Rear Door Heat eXchangers on its new supercomputer. The claimed savings, compared with upgrading the air-conditioning units, are $9,000 per rack per year. That is a considerable reduction in cost and is worth serious consideration.
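
The Georgia Tech claim can be scaled up with simple arithmetic. The Python sketch below assumes one Rear Door Heat eXchanger per rack, so 12 racks in total, and that the claimed $9,000 saving holds steady each year; the three-year horizon is an arbitrary choice for illustration.

```python
SAVING_PER_RACK_PER_YEAR_USD = 9_000  # claimed saving vs upgrading the AC units
RACKS_WITH_EXCHANGERS = 12            # assumes one Rear Door Heat eXchanger per rack


def total_saving(per_rack: int, racks: int, years: int = 1) -> int:
    """Scale a per-rack annual saving to a whole installation over several years."""
    return per_rack * racks * years


print(f"Per year: ${total_saving(SAVING_PER_RACK_PER_YEAR_USD, RACKS_WITH_EXCHANGERS):,.0f}")
print(f"Over 3 years: ${total_saving(SAVING_PER_RACK_PER_YEAR_USD, RACKS_WITH_EXCHANGERS, 3):,.0f}")
```

On those assumptions it prints "Per year: $108,000" and "Over 3 years: $324,000".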

Like its competitors, IBM is busy playing the power reduction and cooling card. Unlike many of its competitors, IBM has actual hardware and monitoring systems. Whether it can persuade the industry to take them forward or get them adopted by any of the green computing initiatives, only time will tell.

-

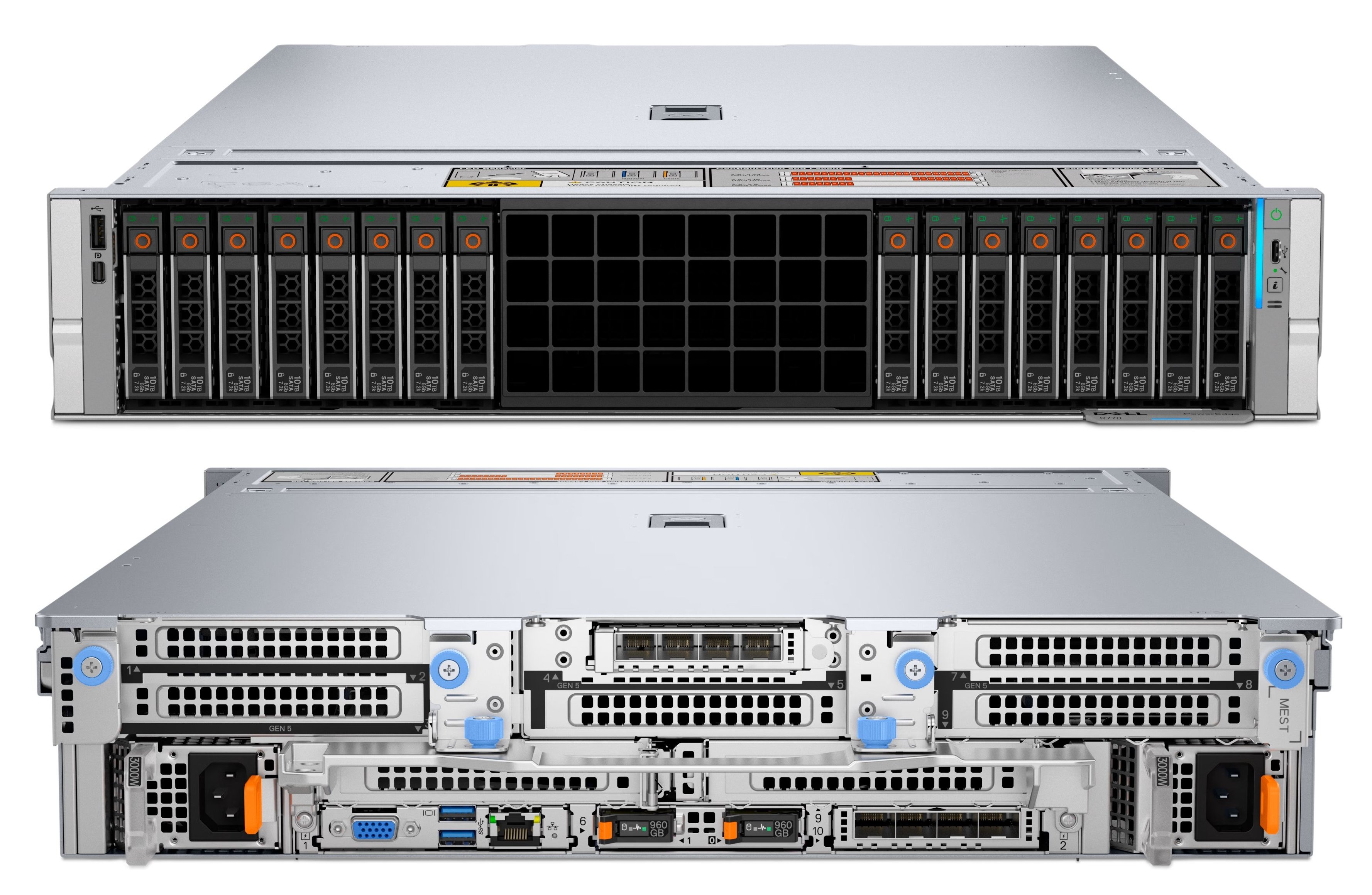

Why Dell PowerEdge is the right fit for any data center need

Why Dell PowerEdge is the right fit for any data center needAs demand rises for RAG, HPC, and analytics, Dell PowerEdge servers provide the broadest, most powerful options for the enterprise

-

Oracle's huge AI spending has some investors worried

Oracle's huge AI spending has some investors worriedNews Oracle says in quarterly results call that it will spend $15bn more than expected next quarter