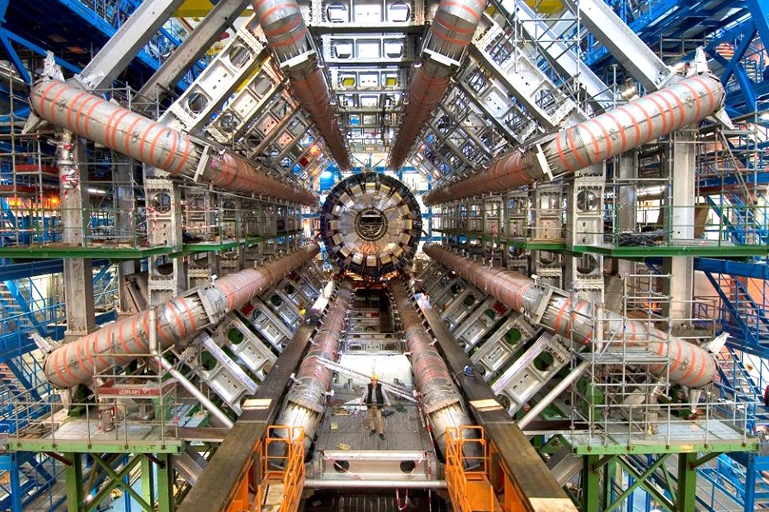

Big IT for CERN's particle smashing experiment

CERN's Large Hadron Collider isn't just the largest experiment in history, it's also a massive IT project linking hundreds of thousands of computers - and could change how we share and analyse data.

Nestled in the Alps near lovely Geneva is a feat of engineering and technological ingenuity on par with few others created in the name of science, and it's about to awaken.

When the Large Hadron Collider (LHC) goes live at CERN along the Swiss-French border in just a few days, it will produce an awesome amount of data, which will be fed to thousands of scientists around the world - assuming the thing doesn't create a massive black hole and kill us all in a horribly cool sci-fi sort of way.

CERN's big baby isn't just an overwhelming science experiment; it's a grand IT project, too. And the last time CERN's band of boffins had tech trouble, some guy named Tim invented the web.

This time, CERN's tech team is focusing on distributed computing, also known as grid computing, leaving some to wonder if the LHC could lead to innovation on the scale of the web project pioneered by Sir Tim Berners-Lee. Given the scale of this project, it's hard not to think something new and shiny will come out of it.

Indeed, trying to describe the project - both the IT and the physics - in numbers is mind-boggling. They're all too huge to get your head around: 100,000 CPUs crunching 15 petabytes of data at hundreds of sites around the world - what does that even mean?

Simply smashing

For the past few years, engineers at CERN - the French acronym for the European Organisation for Nuclear Research - have been putting together a 16.77-mile tunnel, lined with sensors and 1,600 superconducting magnets across eight sectors, which are held at an operating temperature of some 271 degrees Celsius below zero. The accelerator, which is accurate to a nanosecond, will be used to slam particles into each other to try to recreate the conditions at the beginning of time - as in, the Big Bang.

While cynics have suggested this will create a black hole that will destroy the universe, CERN's (hopefully-not-evil) geniuses have said that's just not very likely.

Even without the peril of death, it's pretty exciting. CERN's geeks are looking for the so-called "god particle", the Higgs boson, which will offer clues about dark matter and other missing links of physics.

This week, despite 10 September being pushed as the official start-up, no collisions will take place. That date simply marks the first time a beam will be sent all the way around the track; the smashing is set to start up on 21 October. As the wee particles are slammed into each other, sensors will take all that data, filter it for useful bits, and then start spitting it out to scientists to work with.

"It's not the flip of a switch, but a long-planned process," said David Britton, project manager of GridPP, the UK side of the distributed computing system which will allow all this data to move around and be analysed. The collider itself has been cooling for some six weeks, and the data testing has preceded this week, too. "We have been turning on the grid all this year," he said, adding that it's been tested with fake data to ensure success.

"It's an ongoing process, and next week, we will start to see a trickle of real data," he said. While that data will be more interesting than the fake stuff, it doesn't start to become really important until the beams collide, he said.

An avalanche of data

And when the real data does start coming from the Alpine lab, it will be an avalanche. The data output - about 15 petabytes a year - will require some 100,000 CPUs across 50 countries, with some tens of thousands of those in the UK, which will also hold 700TB of disk storage across 17 sites.
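To get a feel for those numbers, here is a rough back-of-envelope sketch in Python. It assumes decimal units (one petabyte as 10^15 bytes), a 365-day year and data flowing evenly all year round, none of which the figures above actually promise; the function names are made up for illustration.

```python
# Back-of-envelope arithmetic on the figures quoted in the article.
# Assumptions (not from the article): decimal units, a 365-day year,
# and a perfectly even data flow with work split equally across CPUs.

PETABYTE = 10**15                      # bytes, decimal definition
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000


def average_rate_mb_per_sec(petabytes_per_year: float) -> float:
    """Average sustained output in megabytes per second."""
    return petabytes_per_year * PETABYTE / SECONDS_PER_YEAR / 10**6


def share_per_cpu_gb(petabytes_per_year: float, cpus: int) -> float:
    """Yearly data per CPU in gigabytes, if split evenly."""
    return petabytes_per_year * PETABYTE / cpus / 10**9


if __name__ == "__main__":
    print(f"{average_rate_mb_per_sec(15):.1f} MB/s on average")
    print(f"{share_per_cpu_gb(15, 100_000):.0f} GB per CPU per year")
```

Under those assumptions, 15 petabytes a year works out to a little under half a gigabyte every second, around the clock, and roughly 150GB a year for each of the 100,000 CPUs. Real traffic would be far burstier than this average suggests.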

The data sharing for the project is tiered and spread out over a grid managed by the LHC Computing Grid (LCG) project. The LHC's detectors will stream data straight to "tier zero", which is CERN's own computing centre. The "farm" features processing power equal to 30,000 computers, along with five million gigabytes of spinning disk storage and 16 million gigabytes on tape.

Raw and processed data will be sent from there over 10Gbit/sec lines to "tier one" academic bodies, which will act as back-up to CERN and also help disseminate the data to 150 "tier two" organisations and "tier three" computers - which could be a basic PC sitting on a scientist's desk.
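The tiered layout can be pictured as a simple tree. The Python sketch below only illustrates the hierarchy described above and is not how the LCG software is built: the `Site` class, the `distribution_paths` helper and every site name other than CERN are hypothetical placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Site:
    """One node in the grid: a name, its tier, and the sites it feeds."""
    name: str
    tier: int
    children: List["Site"] = field(default_factory=list)


def distribution_paths(site: Site, prefix: Tuple[str, ...] = ()) -> List[Tuple[str, ...]]:
    """Return every route data can take from this site down to a leaf."""
    path = prefix + (site.name,)
    if not site.children:
        return [path]
    paths: List[Tuple[str, ...]] = []
    for child in site.children:
        paths.extend(distribution_paths(child, path))
    return paths


# Hypothetical example grid: only "CERN" comes from the article.
desktop = Site("scientist-desktop-pc", tier=3)
tier_two_a = Site("tier-two-site-A", tier=2, children=[desktop])
tier_two_b = Site("tier-two-site-B", tier=2)
tier_one = Site("tier-one-centre", tier=1, children=[tier_two_a, tier_two_b])
cern = Site("CERN", tier=0, children=[tier_one])

if __name__ == "__main__":
    for route in distribution_paths(cern):
        print(" -> ".join(route))
```

Each route starts at CERN's tier zero and fans out through a tier one centre to the smaller sites below it, which is the basic shape of the grid; the real system also has tier one sites keeping back-up copies, which this sketch leaves out.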

Freelance journalist Nicole Kobie first started writing for ITPro in 2007, with bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.
