Researchers develop a robot that can lie

The digital age of innocence is truly over.

A team at Georgia Tech Research Institute in the US is teaching robots how to deceive other machines and humans.

It may sound like a plot for a movie but the researchers have a serious aim. Robots are being used increasingly for search and rescue missions.

In a scenario that echoes the Terminator movies, two robots from opposing armies could be involved in the same mission.

One robot might be clearing mines while the other is sent to prevent it from completing its task. For the first robot, the ability to recognise the situation and then hide would be essential.

In the researchers' terms, circumstances for deception had to satisfy two main criteria. First, there must be a sense of conflict between the robots. Second, there must be a benefit in performing the deception.

Ronald Arkin, a professor in the Georgia Tech School of Interactive Computing, and his team developed software for the robots and set up a "playground" for a hi-tech game of hide and seek.

Coloured marker pins were set up pointing to three possible hiding places from which the first robot could choose. The markers stopped short of the actual safe areas.


The twisted intelligence incorporated in the programming allowed the lying robot to select a hiding place and then devise a path that would fool the chasing robot.

As the robot moved, it knocked over the coloured pins, leaving a track of its movements. The machine headed directly for a particular hiding place and, when it reached the unmarked ground, quickly changed direction to outfox the chasing robot.
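The false-trail idea can be illustrated with a toy model. The Python sketch below is not the Georgia Tech team's software: the hiding-place labels, the function names and the assumption that the chasing robot simply follows the toppled markers are all hypothetical, chosen only to show the shape of the trick. Because it is idealised, the hider always wins here, and it makes no attempt to reproduce the results of the real trials.

```python
import random

# Hypothetical labels for the three hiding places in the "playground".
HIDING_PLACES = ["left", "centre", "right"]


def plan_deception(true_spot, places=HIDING_PLACES, rng=random):
    """Pick a decoy hiding place that differs from the real one.

    Returns (decoy, true_spot): the hider first heads for the decoy,
    toppling markers on the way, then turns towards true_spot once it
    reaches ground with no markers.
    """
    decoys = [p for p in places if p != true_spot]
    return rng.choice(decoys), true_spot


def toppled_markers(decoy):
    """Markers knocked over: only those along the decoy route (assumption)."""
    return {decoy}


def seeker_guess(markers, places=HIDING_PLACES):
    """A naive seeker (assumption): go wherever the toppled markers point."""
    for place in places:
        if place in markers:
            return place
    return places[0]


def run_trial(true_spot, rng=random):
    """Return True if the seeker is fooled, i.e. it searches the wrong place."""
    decoy, actual = plan_deception(true_spot, rng=rng)
    return seeker_guess(toppled_markers(decoy)) != actual


if __name__ == "__main__":
    fooled = sum(run_trial(random.choice(HIDING_PLACES)) for _ in range(40))
    print(f"Seeker fooled in {fooled} of 40 idealised trials")
```

In a physical trial the markers may not fall as planned and the seeker may not read them correctly, so a real success rate would be expected to fall short of this idealised one.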

In 40 trials, the lying robot successfully fooled its pursuer 75 per cent of the time.

It is a small beginning, and the robot did not earn full marks, but Arkin said that it demonstrated that machines could learn and use deceptive signals.

He said, "The results were also a preliminary indication that the techniques and algorithms described in the paper could be used to successfully produce deceptive behaviour in a robot."

The team envisage extending their research to create more sophisticated robots. In the future, a rescue robot may need to deceive a human in order to calm them down in a dangerous situation.

Arkin said that he sees the work of his team as an important development but recognises the ethical issues that surround the concept of deceitful robots.

-

Should AI PCs be part of your next hardware refresh?

Should AI PCs be part of your next hardware refresh?AI PCs are fast becoming a business staple and a surefire way to future-proof your business

By Bobby Hellard Published

-

Westcon-Comstor and Vectra AI launch brace of new channel initiatives

Westcon-Comstor and Vectra AI launch brace of new channel initiativesNews Westcon-Comstor and Vectra AI have announced the launch of two new channel growth initiatives focused on the managed security service provider (MSSP) space and AWS Marketplace.

By Daniel Todd Published

-

‘Not if, but when’: Where are the autonomous robots?

‘Not if, but when’: Where are the autonomous robots?In-depth Robots are already common in manufacturing, but where are the autonomous robots of the future and what form will they take?

By Steve Ranger Published

-

The Forrester Wave™: Robotic Process Automation Services

The Forrester Wave™: Robotic Process Automation ServicesWhitepaper The 15 providers that matter most and how they stack up

By ITPro Published

-

MOV.AI’s robotics engine platform aids AMR production

MOV.AI’s robotics engine platform aids AMR productionNews New platform automates autonomous mobile robot development with visual IDE, open API framework, 3D physics simulator, and more

By Praharsha Anand Published

-

ANYbotics taps Velodyne to enhance autonomous robots’ navigation

ANYbotics taps Velodyne to enhance autonomous robots’ navigationNews ANYbotics will incorporate Velodyne’s Puck Lidar sensors into its mobile robots

By Praharsha Anand Published

-

Infostretch and Automation Anywhere join forces to deliver hyperautomation

Infostretch and Automation Anywhere join forces to deliver hyperautomationNews New deal will help organizations establish RPA centers of excellence

By Praharsha Anand Published

-

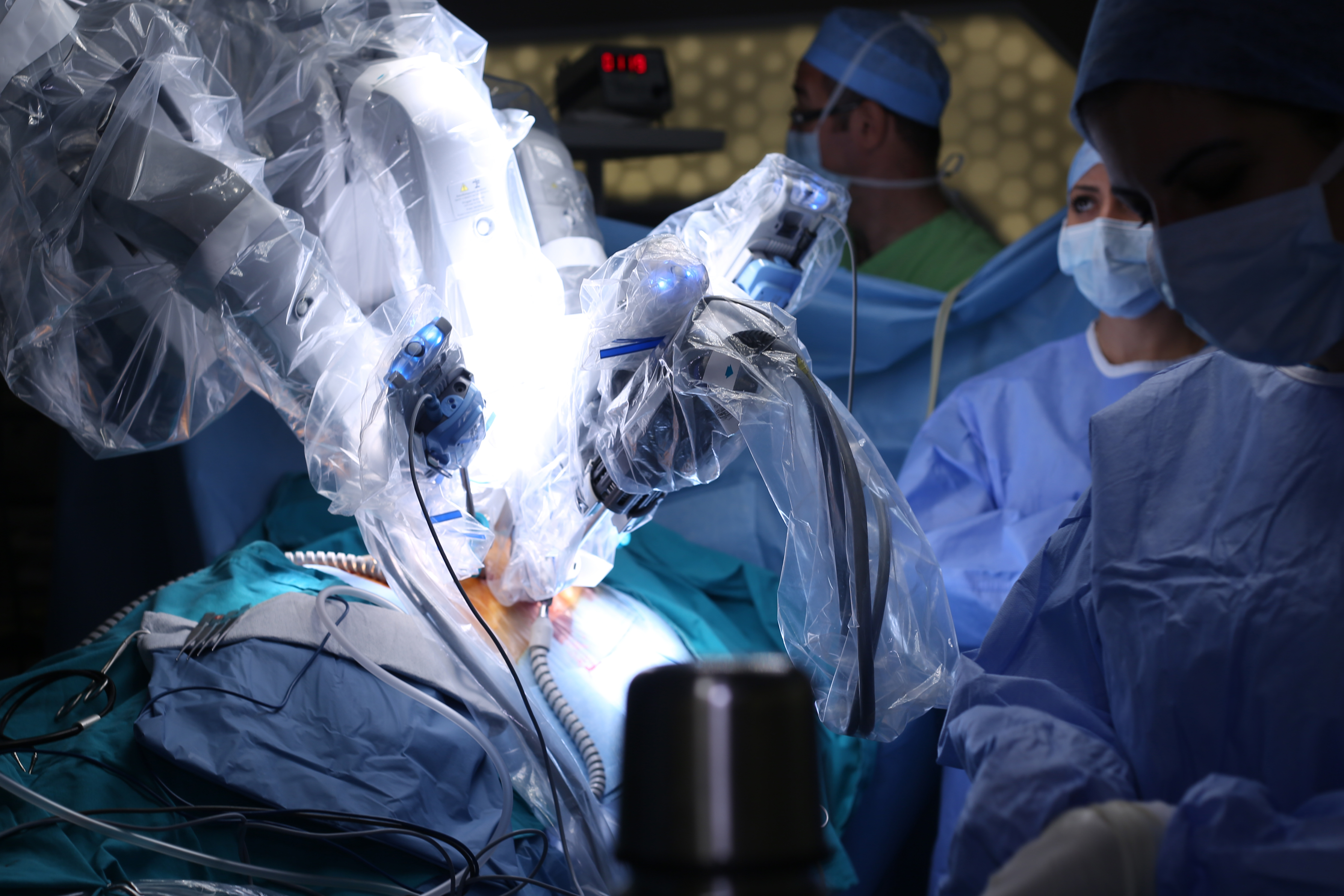

Telepresence medical robot market set to reach $159.5 million by 2028

Telepresence medical robot market set to reach $159.5 million by 2028News Increasing adoption of robots in health care settings and product innovations are contributing to the market's growth

By Rene Millman Published

-

Boston Dynamics announces commercial sales of Spot Robot

Boston Dynamics announces commercial sales of Spot RobotNews Boston Dynamics launches its first online sales offering with Spot Robot

By Sarah Brennan Published

-

Changing the Games: Why Tokyo 2020 will be the most technologically advanced Olympics yet

Changing the Games: Why Tokyo 2020 will be the most technologically advanced Olympics yetIn-depth Japan is hoping to amaze athletes and fans alike at the upcoming Tokyo 2020 Olympics, with a host of cutting-edge innovations. Can it deliver on its promise?

By Lindsay James Published