Combining & refining data for added insight

To gain real business insight, companies need to combine and analyse both internal and external data streams.

Data scientists are in great demand. Research from e-skills UK recently indicated demand for big data skills will rise by 28 per cent per annum in coming years, and by 2017 there will be 28,000 openings for big data staff.

Why such demand? In today's world, such database wizards and the IT teams around them wield a lot of power to give organisations an edge over competitors. They are the ones who can combine data from all over the place and turn it into something useful for those teams making business decisions.

For those who want to reap benefits from the vast fields of information out there in the universe, big decisions need to be made: how to store and mesh in-house data with those potentially lucrative yet untapped external resources, what kind of mining tools to use with all that information and, most importantly, how this can bring about positive business change.

Simply smart

Online business insurance broker Simply Business based its data combine and refine project on Microsoft SQL Server 2012 Enterprise. Primarily, it was looking at metrics and interactions on its website to improve its products and find new customers.

It recently started using its Microsoft-based BI stack for search term acquisition, said Chris Slater, chief operating officer at Simply Business (pictured). It took website metrics from its Snowplow analytics tool, fed that into a MongoDB system, before running data through SQL Server Integration Services into its data warehouse. The IT team even built its own code to import data from MongoDB into SQL Server called Mongosis, which it made freely available online.

"Once in the data warehouse, the data can be joined to our sales and acquisition costs that we collect on a daily basis from a variety of different sources," Slater said.

"For our internal business users we have created, using SQL Server Analysis Services (SSAS), a tabular model that contains all the information needed and can be easily accessed through Excel."

Get the ITPro daily newsletter

Sign up today and you will receive a free copy of our Future Focus 2025 report - the leading guidance on AI, cybersecurity and other IT challenges as per 700+ senior executives

"This means that the final user will be able to create a pivot table that contains acquisition cost and revenue for every campaign, feeding into the decision making for our ongoing acquisition strategy." Then the search terms are bought, meaning more customers are introduced to Simply Business when looking around the Internet on the likes of Bing and Google.

Simply Business has also been using the powerful, and free, PowerPivot solution for Excel, allowing the company's analysts to throw data "in its rawest form" from MongoDB, amounting to billions of rows of data, into Excel to try out different queries, looking at things like drop-off rates from its application forms. "It's a capability that lets us get quick insight," said Slater.

He wants his team to draw even more information from unstructured data streams into the Microsoft BI stack in the future, including geo-mapping and social data. "The challenge is to use old systems and marry them with new data," Slater added.

Clouds coming together

Combining cloud and in-house data streams can also bring great rewards. Aviva did just that when it built the Aviva Drive app, with the Microsoft Visual Studio 2010 Professional development system and the Microsoft .NET Framework 4.

Data from the app, including braking, cornering, acceleration behaviour and GPS information, was fed into two linked databases: a Windows Azure SQL Database and the Windows Azure Table storage service, a NoSQL datastore that accepts calls from inside and outside the Azure cloud. This telemetry data was tied to the information sitting on Aviva servers about customers' age, gender and driving history to give them more personalised quotes.

Those combined data streams would be used to give the driver a score, delivered on the company website thanks to integration between the Azure cloud and the on-premises quote system using the Open Database Connectivity (ODBC) API.

The app's Facebook and Twitter integration lets users spread the good word about the software's benefits too, while providing the insurance giant with further understanding of its customers and gaining new ones thanks to this innovative approach. For Aviva, this is all good news.

EMI intelligence

Back when EMI Music was a standalone entity it too made use of SQL Server to draw in various data sources, but it had quite a few more to deal with than Aviva and Simply Business, and the information was far more unstructured in many cases. It included geographical, consumer and partner data from 200 external bodies. Without ways to effectively manage and store all those data feeds, it wasn't marketing artists in different countries as well as it could have, nor performing quality analysis across countries and its allies.

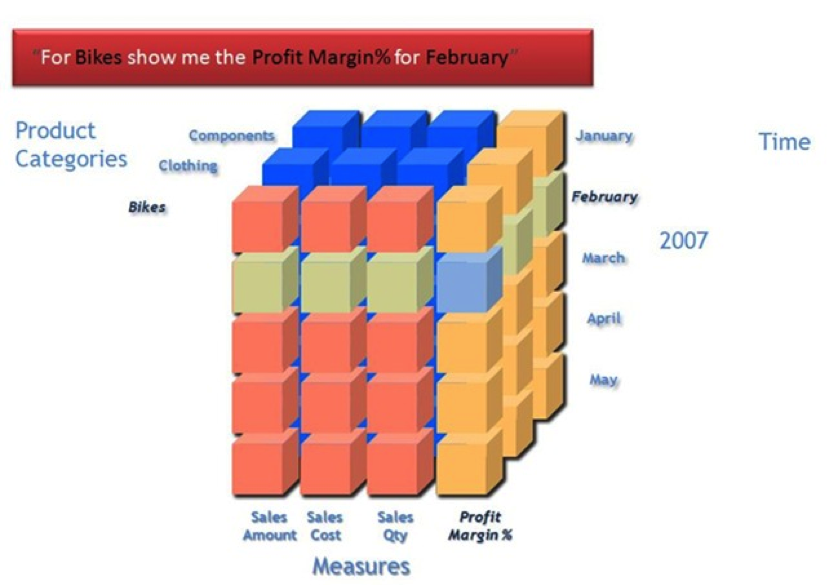

EMI needed one consistent area for its rock star data analysts to attack the information and give it some order. Like Simply Business, its BI operation was based on SQL Server Integration Services and SQL Server Analysis Services, but it wasn't just looking at tabular models, it was looking at multi-dimensional cubes too.

The company's insight teams used a Microsoft SQL Server 2012 Fast Track Data Warehouse that could scale to 80 terabytes. Towards the end of last year, its partner insight work with Spotify was using 8-billion rows of data, rising at the rate of 20-million rows a day. It was a lot, but the back end and the BI tools could handle it.

Thanks to this fine data science at the backend, the frontend workers got friendly tools for digging into how individual artists' albums, tracks, ringtones and merchandise sold across different regions.

Heading towards Hadoop

While SQL databases are still the norm, and evidently were used to great effect at Aviva, Simply Business and EMI, a new world is emerging in information management, bringing streams together to find value. It is built around Hadoop.

The simplest way to think about Hadoop is looking at it as two parts that make a powerful whole. First, there is the Hadoop Distributed File System (HDFS). It allows administrators to separate data across servers, meaning it can be mined with massively parallel processing, making computation far quicker and more effective. Then comes the MapReduce part. It maps the data and reduces it, bringing the patterns hidden inside the data to life.

It requires a different mindset from traditional SQL psyches, however. Before diving in then, IT should look at the size and nature of their data streams to decide whether Hadoop is the right option, said Dan Scarfe, CEO of DotNetSolutions.

"Many big data solutions ingest data in a very simplistic format - something as simple as text files on a hard drive, as to import it into anything can take as long as analysing it. Microsoft has a range of solutions to help with this such as PowerBI and PowerPivot for moderate sized datasets," Scarfe added.

"These should probably be the first port of call for an IT team. At bigger scales, though, you'd be looking at something like Hadoop, but that will get very complicated, very quickly."

Hadoop for Halo

Microsoft itself has shown how to use Hadoop for the benefit of customers. The team behind Halo for Xbox, one of the most popular games on the planet, knew they were going to have some problems with massive online competitions, whether through capacity issues, gamers trying to cheat the system, or gameplay glitches. Deep analysis of telemetry data provided many of the answers to these problems.

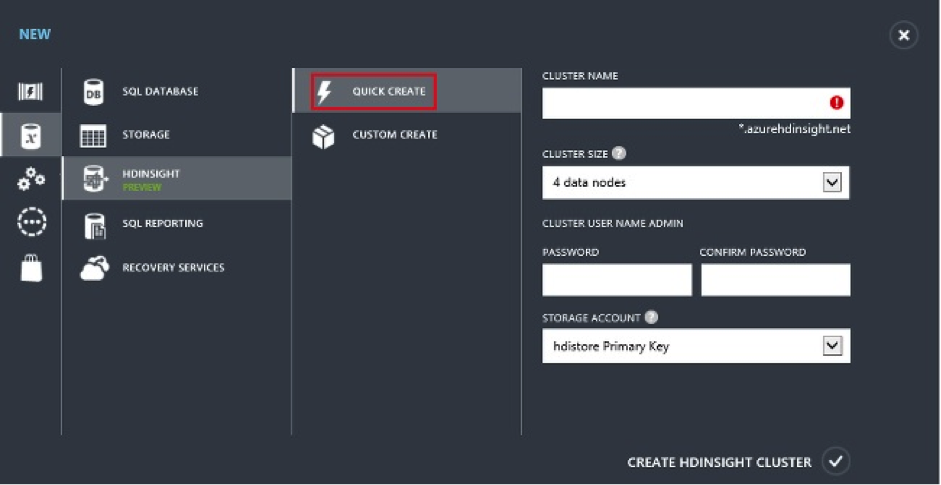

To capture this information, the Halo 4 development team at 343 Industries has been using HDInsight, which took the raw gaming data sitting on Azure, where multiplayer games are hosted, and stored it using the HDFS. Think of HDInsight as a 100 per cent Apache Hadoop solution running in the cloud, taking in and structuring any kind of data at any volume.

At the front end, the developers used Microsoft SQL Server Power Pivot for SharePoint to make sense of the Azure BLOBs (binary large objects). By applying PowerPivot to the HDInsight data, the Halo guys pulled it into Excel, carried out queries, the results of which were used to create workbooks that would be transferred to SharePoint. This allowed for collaboration with the game coders, who could make necessary tweaks to ensure player satisfaction.

"In one case, somebody had done a hack or a mod where they made the characters' heads disappear so you couldn't kill them, but they can see these things now before it degrades play for others," said Dave Campbell, Microsoft CTO for cloud.

"They don't have to fix the code either, they can post a hint in the forums."

There was a marketing benefit too. By combining in-house transactional information, in this case the Halo 4 sales numbers, with HDInsight data on how people were actually playing the game, the Halo marketing team could create and automate tailored emails. Messages would recommend what types of games to play, based on player preferences during a week of gaming, all determined by the HDInsight system.

Tools like Power View can also be combined with HDInsight to take the information, mash it up and create visualisations for further analysis.

Picking the right tool

In all these projects, the basic aims were the same: to locate or even create new data streams, draw in terabytes of information, analyse it, make business decisions based on that work and benefit the customer. The tools are now widely available for anyone to do this. It's just about picking the right ones.

"If you're a Facebook, Twitter or British Gas, only something like Hadoop can deal with the volumes (i.e. terabytes or petabytes)," Scarfe added.

"The vast majority of big data projects are looking at data sets in the gigabytes, though, which can easily be handled by traditional database technologies. In fact, traditional database technologies can often be a much better fit as they can be used by IT folk, whereas things like Hadoop typically need developers and data scientists to get any useful insights."

And that last point is vital for organisations starting up BI projects. As we continue to move into a world where data is increasingly analysable and useful, the data scientists are not just the beacons of light in the IT department, they are the future stars of the entire business.

To register for a Microsoft Business Intelligence Experience Workship click here.

-

AI is helping bad bots take over the internet

AI is helping bad bots take over the internetNews Automated bot traffic has surpassed human activity for the first time in a decade, according to Imperva

By Bobby Hellard

-

Two years on from its Series B round, Hack the Box is targeting further growth

Two years on from its Series B round, Hack the Box is targeting further growthNews Hack the Box has grown significantly in the last two years, and it shows no signs of slowing down

By Ross Kelly

-

Put AI to work for talent management

Put AI to work for talent managementWhitepaper Change the way we define jobs and the skills required to support business and employee needs

By ITPro

-

More than a number: Your risk score explained

More than a number: Your risk score explainedWhitepaper Understanding risk score calculations

By ITPro

-

Four data challenges holding back your video business

Four data challenges holding back your video businesswhitepaper Data-driven insights are key to making strategic business decisions that chart a winning route

By ITPro

-

Creating a proactive, risk-aware defence in today's dynamic risk environment

Creating a proactive, risk-aware defence in today's dynamic risk environmentWhitepaper Agile risk management starts with a common language

By ITPro

-

How to choose an HR system

How to choose an HR systemWhitepaper What IT leaders need to know

By ITPro

-

Sustainability and TCO: Building a more power-efficient business

Sustainability and TCO: Building a more power-efficient businessWhitepaper Sustainable thinking is good for the planet and society, and your brand

By ITPro

-

What is small data and why is it important?

What is small data and why is it important?In-depth Amid a deepening ocean of corporate information and business intelligence, it’s important to keep things manageable with small data

By Steve Cassidy

-

Microsoft's stellar cloud performance bolsters growth amid revenue slump

Microsoft's stellar cloud performance bolsters growth amid revenue slumpNews The tech giant partly blames unstable exchange rates and increased energy costs for the slowdown

By Rory Bathgate