What is Apache Kafka?

Learn about the distributed stream processing engine powering thousands of well-known companies

Over the past few years, organisations across many industries have discovered an increasingly important gap in their data infrastructure. Traditionally, organisations have focused on providing a place to store data. But in order to make use of data, they have realised they're missing a method of sending it to destinations like applications. This gap is being filled by streaming platforms like Apache Kafka.

Apache Kafka was created at LinkedIn and open sourced in 2011. Thousands of well-known companies now build on it, including Airbnb, Netflix and Goldman Sachs. It is a distributed stream processing engine for building real-time data pipelines and streaming applications.

How it works

At a basic level, Apache Kafka is a central hub of data streams. It transforms an ever-increasing number of new data producers and consumers into a simple, unified streaming platform at the centre of an organisation. It allows any team to join the platform, while central teams manage the service, and it can scale to trillions of messages per day while delivering messages in real time.

Central to Kafka is a log that behaves, in many ways, like a traditional messaging system. It is a broker-based technology, accepting messages and placing them into topics. Any service can subscribe to a topic and listen for the messages sent to it. But as a distributed log itself, Kafka differs from a traditional messaging system by providing improved properties for scalability, availability and data retention.
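To make the log idea concrete, here is a minimal in-memory sketch in Python. It is an illustration only and not Kafka's implementation: the `TopicLog` class and its methods are hypothetical names. It shows the property that separates a log from a traditional queue: reading does not remove messages, so each subscriber can read from its own position.

```python
class TopicLog:
    """Hypothetical append-only log for a single topic (illustration only)."""

    def __init__(self):
        self._records = []

    def append(self, message):
        """Store a message and return its offset (position in the log)."""
        self._records.append(message)
        return len(self._records) - 1

    def read_from(self, offset):
        """Return every message at or after the offset, without removing any."""
        return self._records[offset:]


log = TopicLog()
log.append("order created")
log.append("order paid")

# Two independent subscribers read from different positions in the same log.
billing_view = log.read_from(0)
audit_view = log.read_from(1)
```

Because messages stay in the log after they are read, a new subscriber can start from offset 0 and replay the full history.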

The overall architecture for an Apache Kafka environment includes producing services, Kafka itself and consuming services. What differentiates this architecture is that it's completely free of bottlenecks in all three layers. Kafka receives messages and shards them across a set of servers inside the Kafka cluster.

Each shard is modelled as an individual queue. The user can specify a key which controls which shard data is routed to, thus ensuring strong ordering for messages that have the same key.
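Key-based routing can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the shard count, the function names and the use of CRC32 as the hash are all illustrative choices, not what a real Kafka client does internally.

```python
import zlib

NUM_SHARDS = 4  # hypothetical shard count for one topic


def shard_for(key, num_shards=NUM_SHARDS):
    """Map a key to a shard deterministically, so equal keys share a shard."""
    # CRC32 is a stand-in hash chosen for this sketch (assumption).
    return zlib.crc32(key.encode("utf-8")) % num_shards


def route(messages, num_shards=NUM_SHARDS):
    """Append each (key, value) pair to the queue of the shard its key maps to."""
    shards = [[] for _ in range(num_shards)]
    for key, value in messages:
        shards[shard_for(key, num_shards)].append((key, value))
    return shards


events = [
    ("user-1", "login"),
    ("user-2", "login"),
    ("user-1", "click"),
    ("user-1", "logout"),
]
shards = route(events)
```

Every `user-1` event lands in the same shard in the order it was sent, which is what gives per-key ordering. Events with different keys may land in different shards, so no ordering is implied between them.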

On the consumption side, Kafka can balance data from a single topic across a set of consuming services, greatly increasing the processing throughput for that topic.
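One way to picture this balancing is a round-robin assignment of shards to consuming services. The sketch below is a simplification with hypothetical names; Kafka's actual assignment strategies are configurable and more involved.

```python
def assign_shards(shards, consumers):
    """Spread shards across consumers round-robin (illustrative strategy only)."""
    if not consumers:
        raise ValueError("at least one consumer is required")
    assignment = {consumer: [] for consumer in consumers}
    for index, shard in enumerate(shards):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(shard)
    return assignment


# Six shards of one topic shared between three hypothetical services.
assignment = assign_shards(list(range(6)), ["svc-a", "svc-b", "svc-c"])
```

Each shard has exactly one owner, so the services process the topic in parallel without reading the same message twice.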

The result of these two architectural elements is a linearly scalable cluster, both from the perspective of incoming and outgoing datasets. This is often difficult to achieve with conventional message-based approaches.

Apache Kafka also offers high availability. If one of the services fails, the environment will detect the fault and re-route shards to another service, ensuring that processing continues uninterrupted by the fault.
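The re-routing step can be sketched as follows. This is a toy model under assumptions: the function name, the service names and the least-loaded placement rule are hypothetical, and fault detection itself is out of scope here.

```python
def reassign_on_failure(assignment, failed):
    """Hand the failed service's shards to the least-loaded surviving services."""
    survivors = {
        service: list(shards)
        for service, shards in assignment.items()
        if service != failed
    }
    if not survivors:
        raise RuntimeError("no surviving services to take over")
    for shard in assignment.get(failed, []):
        # Pick whichever survivor currently owns the fewest shards.
        target = min(survivors, key=lambda service: len(survivors[service]))
        survivors[target].append(shard)
    return survivors


before = {"svc-a": [0, 3], "svc-b": [1, 4], "svc-c": [2, 5]}
after = reassign_on_failure(before, "svc-b")
```

After the failure of `svc-b`, every shard still has an owner, so processing of the topic can continue on the remaining services.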

Uses

Kafka takes on legacy technology across many different areas, including ETL, data warehouses, Hadoop, messaging middleware and data integration technologies, to substantially simplify an organisation's infrastructure. In many cases, Kafka can replace or augment an existing system to make data more consistently available, faster and less costly to deliver.

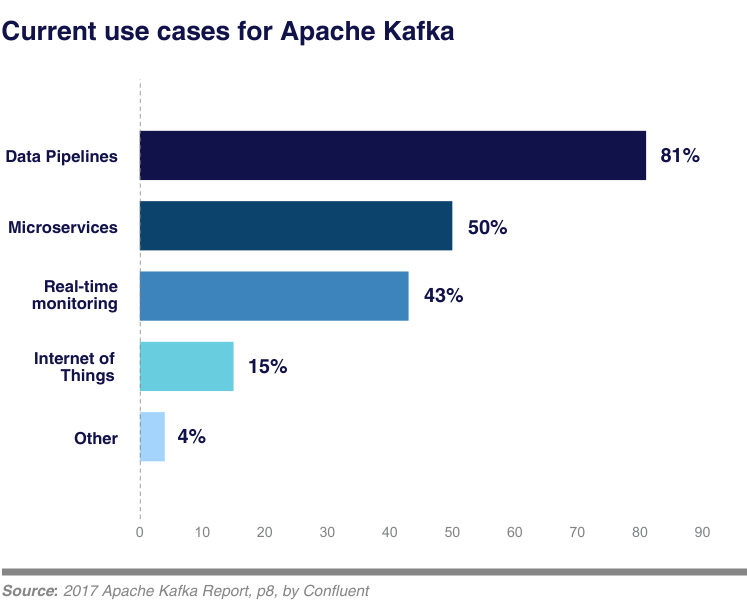

The use of Apache Kafka is on the rise. A recent survey by Confluent revealed that 52% of organisations have at least six systems running Kafka, with over a fifth having more than 20.

Kafka is widely used in the cloud, most commonly across some combination of virtual private clouds, public clouds and on-premises environments.

Apache Kafka can be applied to many different use cases. The past few years have seen a surge in the number of companies adopting streaming platforms. With this approach, they are able to build mission-critical, real-time applications that power their core business, from small deployments to large-scale use cases that handle millions of events per second.

Many organisations are seeing significant benefits from their use of Kafka. Because data is available, shared and immediate, companies can create new products and significantly transform existing ones to take advantage of new market opportunities.

In addition to creating new opportunities, companies are using Kafka to become more efficient and transform existing processes. It simplifies building data-driven applications and managing complex back-end systems. Other business benefits include reduced operating costs (reported by 47% of organisations surveyed by Confluent), improved customer experience and reduced risk.

Esther is a freelance media analyst, podcaster, and one-third of Media Voices. She has previously worked as a content marketing lead for Dennis Publishing and the Media Briefing. She writes frequently on topics such as subscriptions and tech developments for industry sites such as Digital Content Next and What’s New in Publishing. She is co-founder of the Publisher Podcast Awards and Publisher Podcast Summit, the first conference and awards dedicated to celebrating and elevating publisher podcasts.
