Google to change AI forever with open source 'Parsey McParseface'

The SyntaxNet system understands human language with an incredible degree of accuracy

Google has open sourced its language parsing model, SyntaxNet, calling the English version Parsey McParseface.

The system understands human language with an incredible degree of accuracy, but attention has centred on its name, a nod to the public vote to call a science research ship Boaty McBoatface. The ship was in fact named after Sir David Attenborough.

Open-sourcing Google's parsing model means the broader community can use the tool to advance artificial intelligence (AI). In practice, it means machines could understand sentences from a standard dataset of English-language news journalism, the first step in their journey to take over the world.

"At Google, we spend a lot of time thinking about how computer systems can read and understand human language in order to process it in intelligent ways," explained Google senior staff research scientist, Slav Petrov.

"Today, we are excited to share the fruits of our research with the broader community by releasing SyntaxNet, an open source neural network framework implemented in TensorFlow that provides a foundation for Natural Language Understanding systems."

SyntaxNet is built on powerful machine learning algorithms that learn to analyse the linguistic structure of language, and that can explain the functional role of each word in a given sentence.

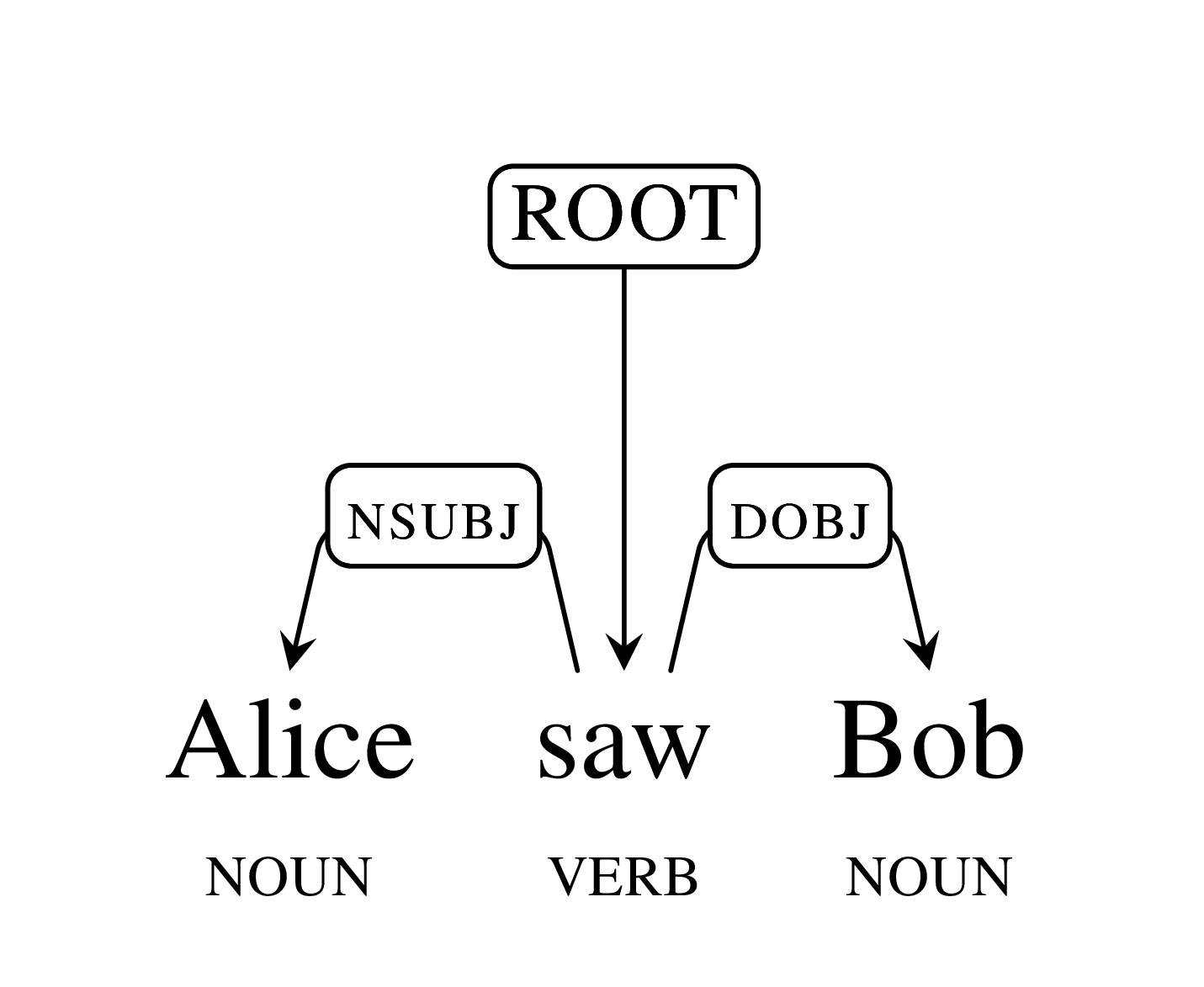

But how does it work? The system essentially identifies the subject and object of a sentence and makes sense of what they mean by determining the syntactic relationships between the words in the sentence, which are represented in a dependency parse tree.


To explain this visually, consider a simple dependency tree for the sentence "Alice saw Bob".

The structure encodes that Alice and Bob are nouns and that saw is a verb. The main verb, saw, is the root of the sentence; Alice is its subject (nsubj) and Bob is its direct object (dobj). Building this kind of tree is what lets Parsey McParseface work out the meaning of the sentence and analyse it correctly.
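To make the tree concrete, here is a minimal Python sketch of how the "Alice saw Bob" analysis could be stored and queried. It is not SyntaxNet's output format: the `Token` class, the helper functions and the convention that head 0 means an artificial ROOT are illustrative assumptions, while the nsubj and dobj labels follow the article.

```python
from dataclasses import dataclass


@dataclass
class Token:
    index: int   # 1-based position in the sentence
    word: str
    pos: str     # part-of-speech tag (illustrative tag names)
    head: int    # index of the head word; 0 is an artificial ROOT (assumption)
    label: str   # dependency relation to the head


# Hand-written parse of "Alice saw Bob", not produced by SyntaxNet
sentence = [
    Token(1, "Alice", "NOUN", 2, "nsubj"),
    Token(2, "saw", "VERB", 0, "ROOT"),
    Token(3, "Bob", "NOUN", 2, "dobj"),
]


def find_root(tokens):
    """Return the token attached directly to the artificial ROOT."""
    return next(t for t in tokens if t.head == 0)


def dependents(tokens, head_index, label):
    """Return the words attached to head_index with the given relation."""
    return [t.word for t in tokens if t.head == head_index and t.label == label]


root = find_root(sentence)
print(root.word, dependents(sentence, root.index, "nsubj"), dependents(sentence, root.index, "dobj"))
```

Storing one head index per word is enough to recover the whole tree, which is why a question like "who is the subject of saw?" reduces to a simple lookup.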

"Our release includes all the code needed to train new SyntaxNet models on your own data, as well as Parsey McParseface," added Petrov. "SyntaxNet applies neural networks to the ambiguity problem. An input sentence is processed from left to right, with dependencies between words being incrementally added as each word in the sentence is considered."
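Petrov's description, reading a sentence left to right and adding dependencies incrementally, matches the general shape of a transition-based parser. The Python sketch below assumes the arc-standard transition system, a common textbook choice; the action names SHIFT, LEFT and RIGHT and the `parse` function are hypothetical. In SyntaxNet a neural network chooses each action; here the actions are scripted by hand so the mechanics are visible.

```python
# Sketch of left-to-right, incremental dependency parsing using the
# arc-standard transition system (an assumption, not SyntaxNet's code).
# Word positions are 1-based; position 0 is an artificial ROOT.

def parse(words, actions):
    """Apply (action, label) transitions and return {dependent: (head, label)}."""
    stack = [0]                               # ROOT starts on the stack
    buffer = list(range(1, len(words) + 1))   # words not yet read, left to right
    arcs = {}
    for action, label in actions:
        if action == "SHIFT":
            if not buffer:
                raise ValueError("SHIFT with an empty buffer")
            stack.append(buffer.pop(0))       # read the next word
        elif action == "LEFT":
            if len(stack) < 3:
                raise ValueError("LEFT needs two words on the stack")
            dep = stack.pop(-2)               # second item becomes a dependent of the top
            arcs[dep] = (stack[-1], label)
        elif action == "RIGHT":
            if len(stack) < 2:
                raise ValueError("RIGHT needs a word above ROOT")
            dep = stack.pop()                 # top item becomes a dependent of the new top
            arcs[dep] = (stack[-1], label)
        else:
            raise ValueError(f"unknown action {action!r}")
    return arcs


words = ["Alice", "saw", "Bob"]
# Hand-scripted actions; a trained model would predict these one at a time.
actions = [
    ("SHIFT", None),     # stack: ROOT Alice
    ("SHIFT", None),     # stack: ROOT Alice saw
    ("LEFT", "nsubj"),   # saw becomes the head of Alice
    ("SHIFT", None),     # stack: ROOT saw Bob
    ("RIGHT", "dobj"),   # saw becomes the head of Bob
    ("RIGHT", "ROOT"),   # ROOT becomes the head of saw
]
arcs = parse(words, actions)
for dep, (head, label) in sorted(arcs.items()):
    head_word = "ROOT" if head == 0 else words[head - 1]
    print(f"{words[dep - 1]} --{label}--> {head_word}")
```

The ambiguity Petrov mentions shows up as the choice of action at each step: several actions are often legal, and picking the wrong one early can lead to a wrong tree, which is where a learned scoring model earns its keep.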

According to Google, Parsey McParseface achieves 94 per cent accuracy on news text, compared with about 96 to 97 per cent for human linguists. It does less well on random sentences from the web, where its accuracy is about 90 per cent.
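The article does not say exactly how accuracy is measured. Dependency parsers are commonly scored per word: the unlabeled attachment score (UAS) is the share of words given the correct head, and the labeled attachment score (LAS) also requires the correct relation label. The Python sketch below assumes that kind of metric; the `attachment_scores` function and the "predicted" parse are hypothetical.

```python
def attachment_scores(gold, predicted):
    """Return (UAS, LAS) for one sentence.

    gold and predicted map each word position to a (head, label) pair.
    UAS counts words with the right head; LAS also requires the right label.
    Assumes predicted contains an entry for every word in gold.
    """
    total = len(gold)
    uas = sum(predicted[i][0] == head for i, (head, _) in gold.items()) / total
    las = sum(predicted[i] == arc for i, arc in gold.items()) / total
    return uas, las


gold = {1: (2, "nsubj"), 2: (0, "ROOT"), 3: (2, "dobj")}
# Hypothetical parser output: correct head for "Bob", but the wrong label
predicted = {1: (2, "nsubj"), 2: (0, "ROOT"), 3: (2, "iobj")}
uas, las = attachment_scores(gold, predicted)
print(f"UAS {uas:.0%}, LAS {las:.0%}")
```

Over a full test set the correct-word counts are summed across all sentences before dividing, so long sentences weigh more than short ones.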
