What is Delta Lake in Databricks?

With Delta Lake version 3.0, Databricks has created a new universal format to resolve compatibility issues

Delta Lake is an open source framework designed by Databricks that allows businesses to manage and analyze the data they generate in real-time.

Taking the form of a transactional storage layer, it provides a foundation for storing data and tables on Data Lakehouses – which Databricks refers to as an open data management architecture. This allows for data warehousing and machine learning operations to be applied directly onto a data lake.

It’s the default storage format for all Databricks operations and is an open protocol; Databricks open sourced Delta Lake in its entirety with the launch of Delta Lake 2.0 in June 2022.

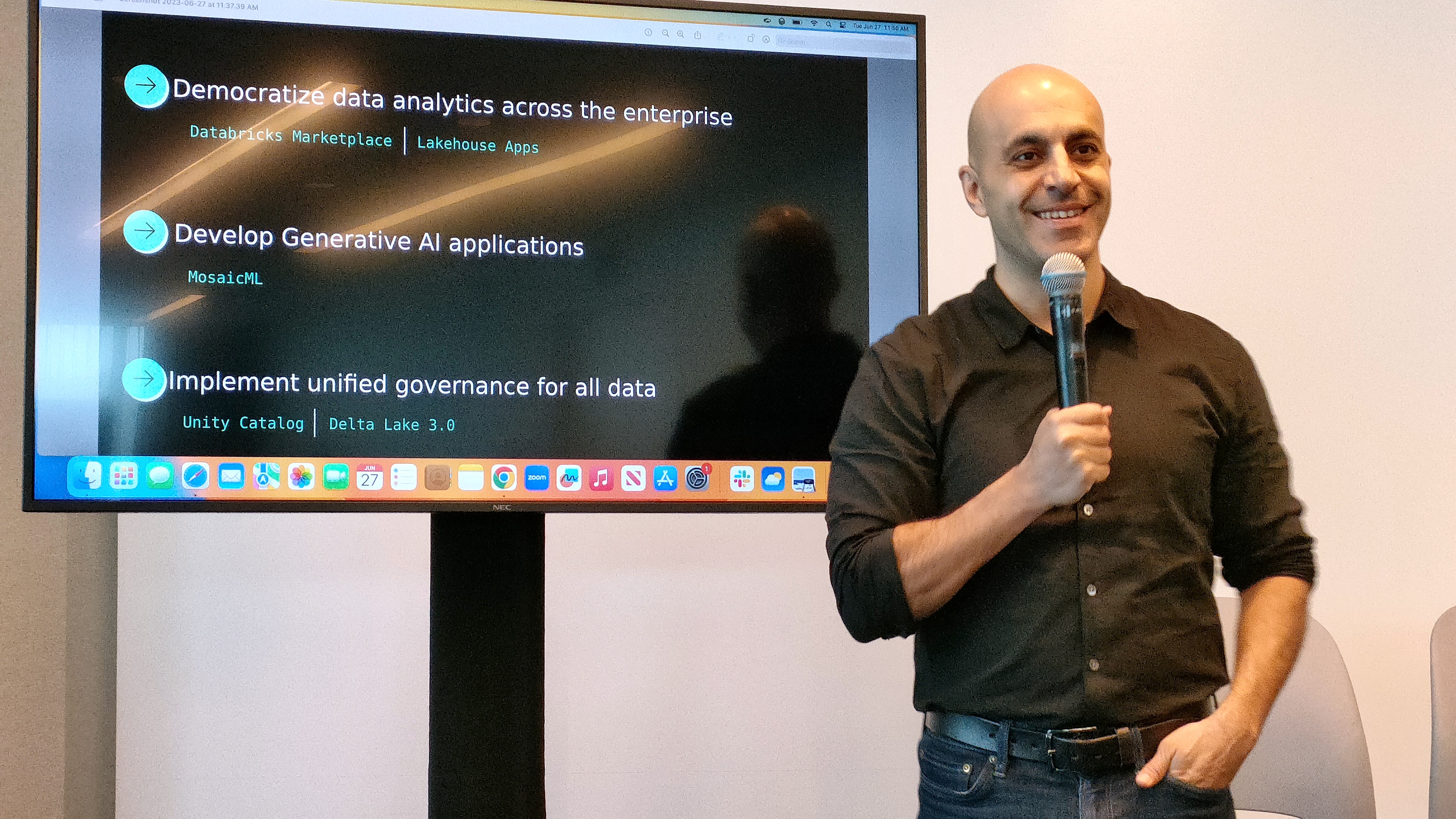

Delta Lake 3.0, unveiled at the Databricks Data + AI Summit 2023, introduces a new unified file format – UniForm – that solves a longstanding compatibility issue in the way businesses store metadata in their data lakes. It arrives alongside performance improvements and a bid to remove data silos by addressing connector fragmentation.

What is Delta Lake?

Introduced as an open source project in 2019, Delta Lake was created to ensure that the data powering the real-time insights organizations crave is genuinely reliable. It was billed at the time as Databricks’ most important innovation, ahead of even the Apache Spark engine for data processing.

The need for Delta Lake has emerged from the way businesses have traditionally managed data in massive and unrefined data lakes. Because these repositories contain both structured and unstructured data, at different scales, any operations running on top of this data – such as data analytics or machine learning – might not be optimized.

Databricks designed Delta Lake to transform these messy data lakes into cleaner ‘Delta Lakes’ with higher-quality data. This ultimately ensures any additional processes or operations performed on top generate much better insights, even if performed in real-time.

“Nearly every company has a data lake they are trying to gain insights from, but data lakes have proven to lack data reliability,” said Databricks cofounder and CEO, Ali Ghodsi. “Delta Lake has eliminated these challenges for hundreds of enterprises. By making Delta Lake open source, developers will be able to easily build reliable data lakes and turn them into ‘Delta Lakes’.”

Delta Lake competes with a number of other services on the market that perform similar functions, including Apache Hudi, Azure Data Lake, and Snowflake.

How does Delta Lake work?

Based in the cloud, Delta Lake is an open source extension to Spark that allows organizations to create Delta tables by default, whether they’re using Apache Spark or SQL. It extends the classic Parquet data file format with a file-based transaction log, which allows for additional functions.
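
To make the idea of a file-based transaction log concrete, here is a minimal sketch in plain Python. It is a toy model rather than Delta Lake's actual implementation: the `_delta_log` directory name, the zero-padded file names and the `add`/`remove` action shapes are simplified assumptions loosely based on the published protocol, and the helper names `commit`, `live_files` and `demo` are hypothetical. Each commit is a small JSON file, and a reader replays the commits in order to work out which Parquet files currently make up the table.

```python
import json
import tempfile
from pathlib import Path


def commit(log_dir: Path, version: int, actions: list) -> Path:
    """Write one commit as a zero-padded, newline-delimited JSON file."""
    path = log_dir / f"{version:020d}.json"
    path.write_text("\n".join(json.dumps(a) for a in actions) + "\n", encoding="utf-8")
    return path


def live_files(log_dir: Path) -> set:
    """Replay every commit in version order to find the table's current data files."""
    files = set()
    # Zero-padded names mean a plain lexical sort is also version order.
    for commit_file in sorted(log_dir.glob("*.json")):
        for line in commit_file.read_text(encoding="utf-8").splitlines():
            action = json.loads(line)
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


def demo() -> set:
    """Build a three-commit log in a temporary directory and replay it."""
    with tempfile.TemporaryDirectory() as tmp:
        log_dir = Path(tmp) / "_delta_log"  # assumed directory name
        log_dir.mkdir()
        commit(log_dir, 0, [{"add": {"path": "part-0000.parquet"}}])
        commit(log_dir, 1, [{"add": {"path": "part-0001.parquet"}}])
        # A rewrite swaps one file for another within a single commit.
        commit(log_dir, 2, [
            {"remove": {"path": "part-0000.parquet"}},
            {"add": {"path": "part-0002.parquet"}},
        ])
        return live_files(log_dir)


if __name__ == "__main__":
    print(sorted(demo()))
```

The data files themselves are never edited in place in this model; a change is expressed purely as new log entries, which is what makes the later features such as audit trails possible.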

Delta Lake features include providing atomicity, consistency, isolation and durability (ACID) transactions on Spark, as well as scalable metadata handling. The former ensures there’s never inconsistent data, while the latter takes advantage of Spark’s distributed processing power to handle masses of metadata at once.
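
One way to picture how a transaction log can give writers all-or-nothing commits is optimistic concurrency: each writer tries to claim the next version number, and only one can succeed. The sketch below is an illustration under stated assumptions, not Databricks' code. It uses Python's exclusive-create file mode on a local disk as a stand-in for whatever put-if-absent guarantee the real storage layer offers, and the function names are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def try_commit(log_dir: Path, version: int, actions: list) -> bool:
    """Attempt to claim `version`; return False if another writer got there first."""
    path = log_dir / f"{version:020d}.json"
    try:
        # Mode "x" creates the file exclusively and raises if it already exists.
        with open(path, "x", encoding="utf-8") as fh:
            for action in actions:
                fh.write(json.dumps(action) + "\n")
    except FileExistsError:
        return False
    return True


def next_version(log_dir: Path) -> int:
    """Next free version number, assuming versions are contiguous from zero."""
    return len(list(log_dir.glob("*.json")))


def commit_with_retry(log_dir: Path, actions: list, max_attempts: int = 5) -> int:
    """Keep trying the next free version until a commit lands."""
    for _ in range(max_attempts):
        version = next_version(log_dir)
        # Simplification: a real system would first check whether the commits
        # that won the race actually conflict with this writer's changes.
        if try_commit(log_dir, version, actions):
            return version
    raise RuntimeError("could not commit after retries")


def demo_conflict() -> tuple:
    """Two writers race for version 0; the loser retries and lands on version 1."""
    with tempfile.TemporaryDirectory() as tmp:
        log_dir = Path(tmp)
        first = try_commit(log_dir, 0, [{"add": {"path": "writer-a.parquet"}}])
        second = try_commit(log_dir, 0, [{"add": {"path": "writer-b.parquet"}}])
        retried = commit_with_retry(log_dir, [{"add": {"path": "writer-b.parquet"}}])
        return first, second, retried


if __name__ == "__main__":
    print(demo_conflict())
```

Because a version file either exists or does not, readers in this toy model never see half of a commit's actions applied. A production system would also need to stop readers seeing a file that is still being written, for example by writing elsewhere and renaming.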

Organizations can also take advantage of streaming and batch unification – by allowing both types of data to land in the same sink. This comes alongside some quality-of-life features such as rollbacks and historical audit trails, as well as the capability to reproduce machine learning experiments.
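
Rollbacks and audit trails follow naturally from keeping an ordered log of changes. The following sketch, again a simplified model with hypothetical names (`snapshot`, `rollback`, `build_log`) rather than the Delta Lake API, holds the log in memory as a list of commits. Reading the table "as of" an earlier version simply means replaying fewer commits, and a rollback is recorded as a new commit so that history is preserved.

```python
from typing import List, Optional, Set, Tuple

Commit = List[Tuple[str, str]]  # each entry is (action, file path)


def snapshot(log: List[Commit], as_of: Optional[int] = None) -> Set[str]:
    """Return the data files visible at version `as_of` (latest if omitted)."""
    last = len(log) - 1 if as_of is None else as_of
    if as_of is not None and not 0 <= as_of < len(log):
        raise ValueError(f"unknown version {as_of}")
    files: Set[str] = set()
    for entries in log[: last + 1]:
        for action, path in entries:
            if action == "add":
                files.add(path)
            elif action == "remove":
                files.discard(path)
            else:
                raise ValueError(f"unknown action {action!r}")
    return files


def rollback(log: List[Commit], to_version: int) -> int:
    """Append a commit that restores an earlier snapshot; return its version."""
    current = snapshot(log)
    target = snapshot(log, to_version)
    undo = [("remove", p) for p in sorted(current - target)]
    undo += [("add", p) for p in sorted(target - current)]
    log.append(undo)
    return len(log) - 1


def build_log() -> List[Commit]:
    """A three-commit example history."""
    return [
        [("add", "a.parquet")],
        [("add", "b.parquet")],
        [("remove", "a.parquet"), ("add", "c.parquet")],
    ]


if __name__ == "__main__":
    log = build_log()
    print(sorted(snapshot(log, 1)))   # the table as it looked at version 1
    print(rollback(log, 1))           # version number of the restoring commit
    print(sorted(snapshot(log)))      # latest state after the rollback
```

The same replay-to-a-version idea is what would let a team re-run a machine learning experiment against exactly the data it originally saw.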

It comes with a variety of open source connectors, including Apache Flink, Presto and Trino, alongside standalone readers/writers that let clients for Python, Ruby and Rust write directly into Delta Lake without needing a massive data engine like Apache Spark.

In 2021, Databricks launched Delta Sharing, a protocol that lets different companies share massive data sets securely and in real time. This aims to break the notion of vendor lock-in, as well as break down data silos due to proprietary data formats and computing resources that are required to read data. Users can access shared data through Pandas, Tableau, Presto, and other platforms without the need to use proprietary systems.

What’s new in Delta Lake 3.0?

With the latest iteration of Delta Lake, Databricks is hoping to end the ‘format wars’ that have long posed challenges for storing and managing data in data lakes.

Most data (99%) is stored in data lakes using the Parquet format, but organizations must choose from one of several competing standards to store all metadata: Delta Lake, Apache Iceberg, and Apache Hudi. Each format handles metadata differently, and they are fundamentally incompatible with one another. Once enterprises choose a format, they’re stuck using it for everything.

With Delta Lake 3.0, Databricks has created the universal format (UniForm), which serves as a unification of the three standards. Customers who use a Databricks data lake will find their data stored in the Parquet format, as usual, alongside three different versions of the metadata. It means the Delta Lake data can also be read as if it’s stored in either Iceberg or Hudi.

“The metadata is very important, and if you get the metadata wrong, you can’t actually access this stuff,” says Databricks CEO Ali Ghodsi, speaking at Databricks Data + AI Summit 2023. “Since all three projects are open source, we just went and understood exactly how to do it in each of them, and now inside Databricks, when we create data, we create data for all three.”

Also new in the latest iteration of Delta Lake is the Delta Kernel, which addresses connector fragmentation by ensuring connectors are built against a core library that implements Delta specifications. This alleviates the burden of having to update Delta connectors with each new version or protocol change. With one API, developers can keep connectors up-to-date and ensure the latest innovations are pushed out as soon as they’re ready.

The final new addition is Delta Liquid Clustering, which boosts the performance of reads and writes. With a flexible data layout technique, Liquid Clustering marks a departure from the decades-old Hive-style table partitioning system, which uses a fixed layout. It means organizations can take advantage of cost-efficient data clustering as their data lake grows with time.

Keumars Afifi-Sabet is a writer and editor who specialises in public sector, cyber security, and cloud computing. He first joined ITPro as a staff writer in April 2018 and eventually became its Features Editor. Although a regular contributor to other tech sites in the past, these days you will find Keumars on LiveScience, where he runs its Technology section.
