Bletchley Declaration draws cautious approval

AI safety agreement garners international support, but some believe it needs more specificity

The UK, US, India and China are among the 28 countries that, along with the European Union (EU), have signed up to the Bletchley Declaration on AI safety, announced on 1 November at the UK's AI Safety Summit.

The agreement recognises that so-called frontier AI technology, such as generative AI chatbots and image generators, has “potential for serious, even catastrophic, harm, either deliberate or unintentional”. It outlines three key areas of risk: cybersecurity, biotechnology, and disinformation.

It says risks are best addressed through international cooperation, with participating nations agreeing to work together to support a network of scientific research on AI safety.

"This is a landmark achievement that sees the world’s greatest AI powers agree on the urgency behind understanding the risks of AI – helping ensure the long-term future of our children and grandchildren," said UK prime minister Rishi Sunak.

"The UK is once again leading the world at the forefront of this new technological frontier by kickstarting this conversation, which will see us work together to make AI safe and realise all its benefits for generations to come."

The agreement has received tentative approval from the UK tech sector, with Rashik Parmar, CEO of BCS, saying that it takes a more positive view of the potential of AI than expected.

"I’m also pleased to see a focus on AI issues that are a problem today – particularly disinformation, which could result in personalised fake news during the next election – we believe this is more pressing than speculation about existential risk," he added.

"The emphasis on global cooperation is vital to minimise differences in how countries regulate AI."

Amanda Brock, CEO of OpenUK, said the agreement will need hard practicalities baked in, and is calling for the open source software and open data business communities to be included.

RELATED RESOURCE

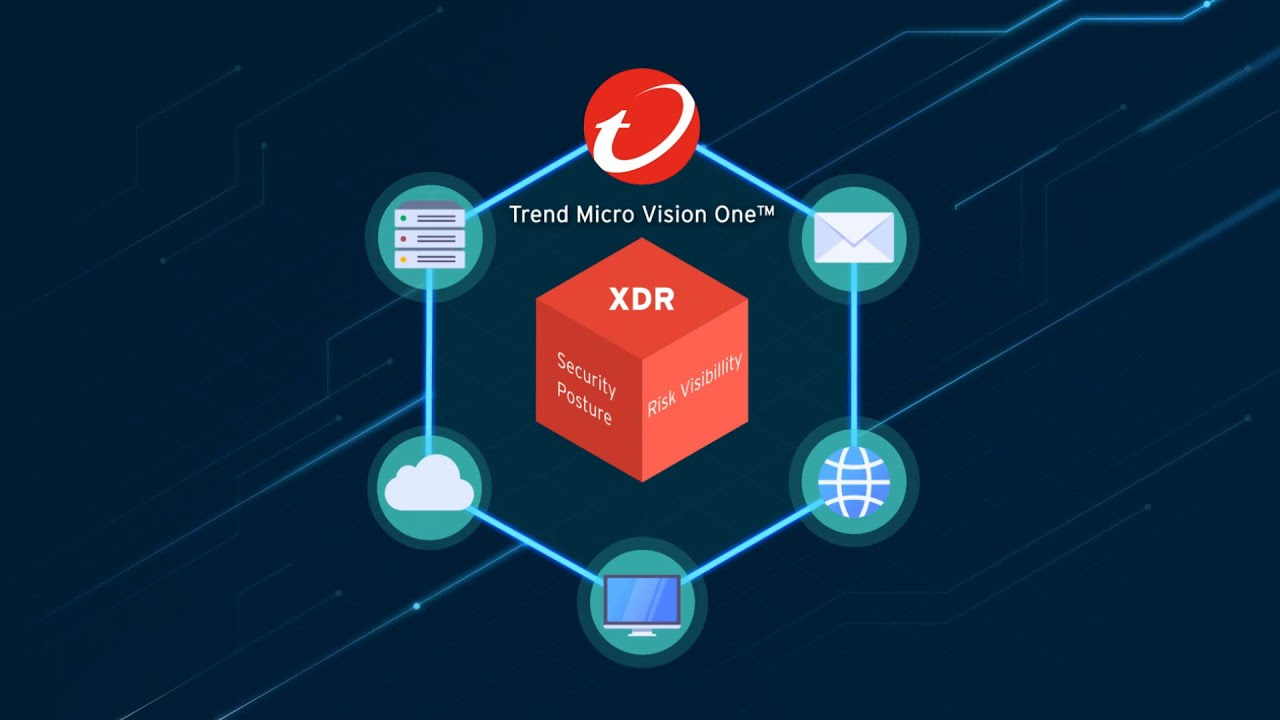

Analyzing the economic benefits of Trend Micro Vision One

Discover how the XDR Workbench enables alert prioritization based on security scores

DOWNLOAD NOW

"Their input will help to appropriately shape the transparency called for in the Declaration alongside the internationally inclusive, collaborative cross border research and innovation and international co-operation in an open manner," she said.

Joseph Thacker, researcher at SaaS security firm AppOmni, was less confident, however.

"The biggest challenge is that the open source ecosystem is really close to enterprises when it comes to making frontier AI," he said.

"And the open source ecosystem isn't going to adhere to these guidelines – developers in their basement aren't going to be fully transparent with their respective governments."

The Bletchley Declaration isn't the only game in town, though. US secretary of commerce Gina Raimondo used the summit to announce a new US AI Safety Institute.

Just two days before the conference kicked off, on 30 October, US president Joe Biden signed an executive order requiring AI firms to report the risks their technology could pose. The White House described the order as containing “the most significant actions ever taken by any government to advance the field of AI safety”.

The EU is already in the process of passing its AI Act and, on the same day Biden signed his executive order, the G7 agreed a set of guiding principles for AI and a voluntary code of conduct for AI developers.

The challenge, most agree, will be to ensure that future international efforts are focused on practical measures.

"The test will be seeing cooperation on the concrete governance steps that need to follow," said Seán Ó hÉigeartaigh, programme director of AI: futures and responsibility at the University of Cambridge.

"I hope to see the next summit in South Korea forge a consensus around international standards and monitoring, including establishing a shared understanding of which standards must be international, and which can be left to national discretion," he added.

Emma Woollacott is a freelance journalist writing for publications including the BBC, Private Eye, Forbes, Raconteur and specialist technology titles.