House Democrats seek to reform Section 230 protections

Platforms would be liable for knowingly promoting harmful content under bill

A group of House Democrats has introduced a bill to reform Section 230 of the Communications Decency Act. The bill would render online platforms liable for knowingly using algorithms that promote harmful content online.

The Justice Against Malicious Algorithms Act would target algorithms that promote content contributing to physical or severe emotional injury. If passed, it would be a landmark bill for those unhappy with the blanket protection of online platforms from content liability.

Passed in 1996 as part of the Telecommunications Act, Section 230 removes online platforms' liability for content that their users post. However, critics argue that the internet has evolved since then, rendering the original legislation obsolete.

The bill targets algorithms that personalize information for users but does not include search features. It excludes smaller platforms with fewer than 5 million users.

The bill's authors are Energy and Commerce Committee Chairman Frank Pallone (D-NJ), Communications and Technology Subcommittee Chairman Mike Doyle (D-PA), Consumer Protection and Commerce Subcommittee Chair Jan Schakowsky (D-IL), and Health Subcommittee Chair Anna Eshoo (D-CA). Rep. Eshoo had previously introduced the Protecting Americans from Dangerous Algorithms Act, which also sought to curb platforms' use of algorithms by modifying Section 230.

The lawmakers accused Facebook in particular of amplifying content that promotes conspiracy theories and incites extremism. They referred to Facebook whistleblower Frances Haugen, a former product manager at Facebook who revealed her identity in an interview with TV show 60 Minutes.

The 37-year-old Haugen has also worked at Google and Pinterest. She worked with the Wall Street Journal to produce the Facebook Files, a series of reports making damaging allegations about the social media company. These include accusations that Facebook knew its Instagram social network could harm teenagers' health. Facebook has denied these claims.

Haugen also said during her 60 Minutes interview that a 2018 change to the social media company's news feed contributed to more divisiveness on its platform but also helped the company sell more advertising.

Facebook has also drawn recent criticism for suspending academic accounts used to research its advertising algorithm.

Others expressed support for the bill, including Dr. Hany Farid, a UC Berkeley professor and senior advisor to the Counter Extremism Project.

"By hiding behind a distorted interpretation of a three-decade old regulation crafted at the dawn of the modern internet, the titans of tech have escaped responsibility for their dangerous and deadly products," he said. "This modest bill takes an important and critical step to holding Silicon Valley responsible for their reckless disregard of allowing their services to be weaponized against children, individuals, societies, and democracies."

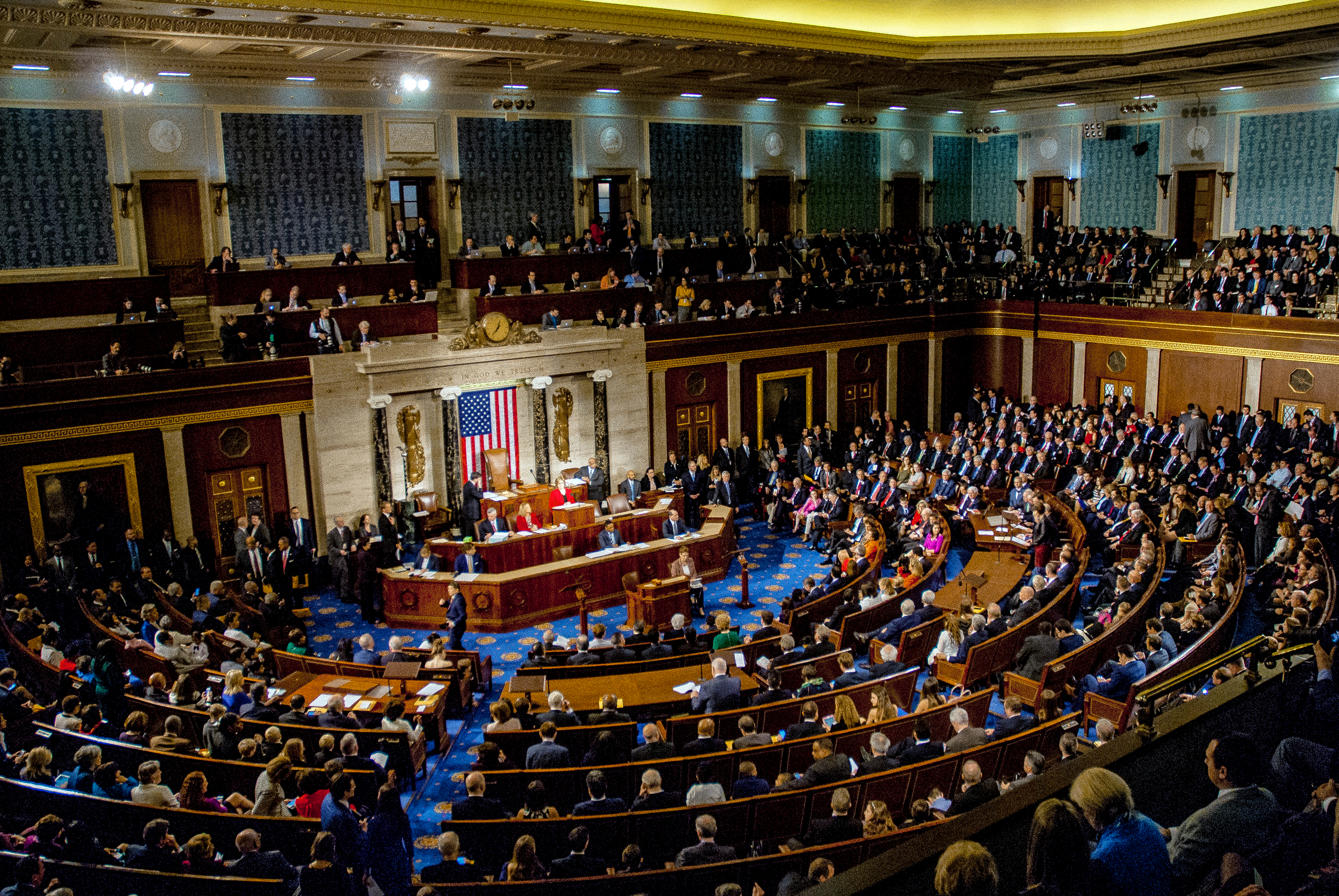

The bill follows a Congressional hearing in March that quizzed big tech platforms on misinformation.

Danny Bradbury has been a print journalist specialising in technology since 1989 and a freelance writer since 1994. He has written for national publications on both sides of the Atlantic and has won awards for his investigative cybersecurity journalism work and his arts and culture writing.

Danny writes about many different technology issues for audiences ranging from consumers through to software developers and CIOs. He also ghostwrites articles for many C-suite business executives in the technology sector and has worked as a presenter for multiple webinars and podcasts.

-

Cleo attack victim list grows as Hertz confirms customer data stolen

Cleo attack victim list grows as Hertz confirms customer data stolenNews Hertz has confirmed it suffered a data breach as a result of the Cleo zero-day vulnerability in late 2024, with the car rental giant warning that customer data was stolen.

By Ross Kelly

-

Lateral moves in tech: Why leaders should support employee mobility

Lateral moves in tech: Why leaders should support employee mobilityIn-depth Encouraging staff to switch roles can have long-term benefits for skills in the tech sector

By Keri Allan

-

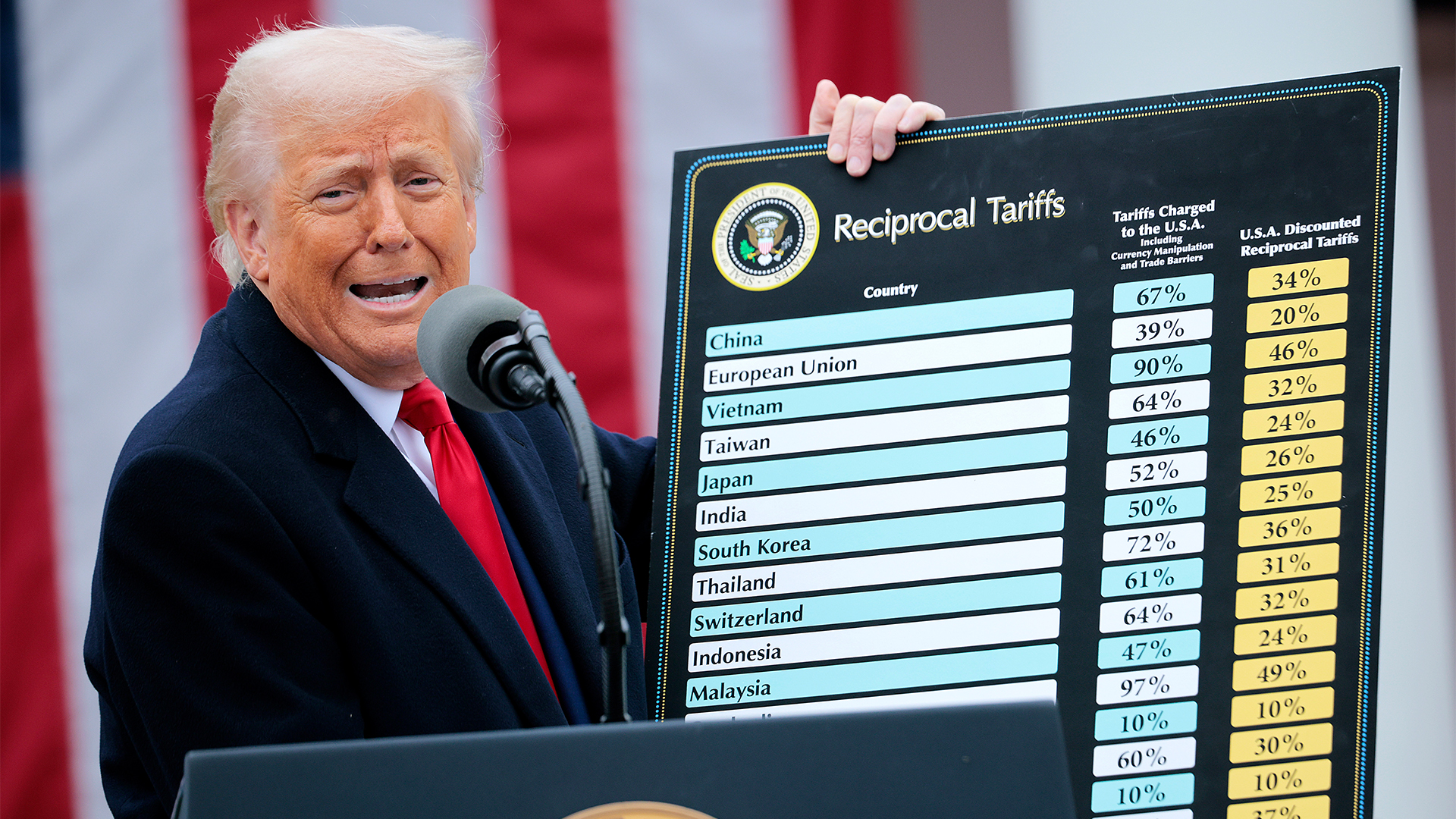

IDC warns US tariffs will impact tech sector spending

IDC warns US tariffs will impact tech sector spendingNews IDC has warned that the US government's sweeping tariffs could cut global IT spending in half over the next six months.

By Bobby Hellard

-

Starmer bets big on AI to unlock public sector savings

Starmer bets big on AI to unlock public sector savingsNews AI adoption could be a major boon for the UK and save taxpayers billions, according to prime minister Keir Starmer.

By George Fitzmaurice

-

UK government targets ‘startup’ mindset in AI funding overhaul

UK government targets ‘startup’ mindset in AI funding overhaulNews Public sector AI funding will be overhauled in the UK in a bid to simplify processes and push more projects into development.

By George Fitzmaurice

-

UK government signs up Anthropic to improve public services

UK government signs up Anthropic to improve public servicesNews The UK government has signed a memorandum of understanding with Anthropic to explore how the company's Claude AI assistant could be used to improve access to public services.

By Emma Woollacott

-

The UK’s AI ambitions face one major hurdle – finding enough home-grown talent

The UK’s AI ambitions face one major hurdle – finding enough home-grown talentNews Research shows UK enterprises are struggling to fill AI roles, raising concerns over the country's ability to meet expectations in the global AI race.

By Emma Woollacott

-

US government urged to overhaul outdated technology

US government urged to overhaul outdated technologyNews A review from the US Government Accountability Office (GAO) has found legacy technology and outdated IT systems are negatively impacting efficiency.

By George Fitzmaurice

-

Government urged to improve tech procurement practices

Government urged to improve tech procurement practicesNews The National Audit Office highlighted wasted money and a lack of progress on major digital transformation programmes

By Emma Woollacott

-

Government says new data bill will free up millions of hours of public sector time

Government says new data bill will free up millions of hours of public sector timeNews The UK government is proposing new data laws it says could free up millions of hours of police and NHS time every year and boost the UK economy by £10 billion.

By Emma Woollacott