AWS blames human error and S3's gargantuan scale for outage

Engineer pressed the wrong button, cloud giant reveals

Human error was responsible for an Amazon Web Services (AWS) outage that affected millions of customers earlier this week, the cloud giant has admitted.

Apologising to users affected by the S3 cloud storage crash (an event that AWS's 99.9% uptime SLA puts at a 0.1% chance of happening), the firm set out the steps it is taking to ensure lightning won't strike twice.

"We are making several changes as a result of this operational event," AWS confirmed in a postmortem of the incident. "We want to apologize for the impact this event caused for our customers.

"While we are proud of our long track record of availability with Amazon S3, we know how critical this service is to our customers, their applications and end users, and their businesses. We will do everything we can to learn from this event and use it to improve our availability even further."

Engineers scrambled to get AWS's Virginia data centre up and running after its S3 cloud storage service, and related services including AWS Lambda, EC2, RDS and Redshift, fell offline for 4.5 hours on Tuesday.

Explaining what happened, AWS said an engineer debugging an issue that was causing S3's billing system to run more slowly than expected effectively pressed the wrong button while trying to take a small number of servers offline.

"Unfortunately, one of the inputs to the command was entered incorrectly and a larger set of servers was removed than intended," AWS admitted. "The servers that were inadvertently removed supported two other S3 subsystems. One of these subsystems, the index subsystem, manages the metadata and location information of all S3 objects in the region."


Avoiding the word 'outage' anywhere in its 900-word explanation, AWS went on to say that the sheer scale of S3 also made restarting the index subsystem more difficult.

"While this is an operation that we have relied on to maintain our systems since the launch of S3, we have not completely restarted the index subsystem or the placement subsystem in our larger regions for many years," AWS said.

"S3 has experienced massive growth over the last several years and the process of restarting these services and running the necessary safety checks to validate the integrity of the metadata took longer than expected."

By 12.26pm PST, the index subsystem had recovered enough capacity to handle customers' requests to retrieve, list and delete data in their S3 buckets, and by 1.54pm both the index subsystem and the placement subsystem were back online. The other affected services then began to recover and work through their backlogs of customer requests.

AWS also explained that its health dashboard, which tells customers how services are performing, was itself dependent on S3 in the affected region. As a result, the dashboard could not show a problem until 11.37am PST, leading many to believe the outage had lasted two and a half hours rather than four and a half.

The cloud giant said that, in future, the tool its engineers use to take servers offline will be limited so that it cannot remove too much capacity too quickly.

"We have modified this tool to remove capacity more slowly and added safeguards to prevent capacity from being removed when it will take any subsystem below its minimum required capacity level. This will prevent an incorrect input from triggering a similar event in the future," said AWS.

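AWS has not published the tool itself. As a rough illustration only, the two safeguards it describes (draining capacity in small steps, and refusing any removal that would take a subsystem below its minimum) could look something like the Python sketch below. The `Subsystem` class, `plan_removal` function, `max_step` parameter and all server counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Subsystem:
    """A hypothetical subsystem: its current server count and a capacity floor."""
    name: str
    servers: int
    min_servers: int


class CapacityGuardError(Exception):
    """Raised when a removal request would breach the capacity floor."""


def plan_removal(subsystem: Subsystem, requested: int, max_step: int) -> list[int]:
    """Check a removal request against the floor, then split it into batches.

    Safeguard 1: refuse any request that would leave fewer than
    `min_servers` servers. Safeguard 2: drain capacity gradually, at most
    `max_step` servers per batch, instead of all at once.
    """
    if requested < 0 or max_step <= 0:
        raise ValueError("requested must be >= 0 and max_step must be > 0")
    remaining = subsystem.servers - requested
    if remaining < subsystem.min_servers:
        raise CapacityGuardError(
            f"removing {requested} servers would leave {subsystem.name} with "
            f"{remaining}, below its minimum of {subsystem.min_servers}"
        )
    batches = []
    left = requested
    while left > 0:
        step = min(max_step, left)
        batches.append(step)
        left -= step
    return batches


# Example: a hypothetical index fleet of 100 servers that must keep at least 80.
index = Subsystem("index", servers=100, min_servers=80)
print(plan_removal(index, 10, max_step=4))  # [4, 4, 2]

try:
    plan_removal(index, 50, max_step=4)  # a mistyped input: far too many servers
except CapacityGuardError as err:
    print("blocked:", err)
```

In this sketch a mistyped input is rejected outright rather than partially applied, and even a valid request is spread over several small batches, which would give an operator time to notice a mistake.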
It added that it is also addressing S3's scale to make recovery quicker, by partitioning S3's index subsystem into smaller "cells".
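AWS hasn't said how its cells are defined. One common way to split a large keyspace into cells is to hash each key to a fixed cell number, so restarting one cell only touches that cell's share of the metadata. The Python sketch below shows that idea; the function names, bucket name and cell count are hypothetical, and it uses simple modulo hashing (not consistent hashing), so changing `num_cells` would reassign most keys.

```python
import hashlib


def cell_for_key(key: str, num_cells: int) -> int:
    """Map an object key to one of `num_cells` cells using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an unsigned integer, then bucket it.
    return int.from_bytes(digest[:8], "big") % num_cells


def keys_in_cell(keys: list[str], cell: int, num_cells: int) -> list[str]:
    """Return the keys whose metadata would live in (and be restarted with) `cell`."""
    return [k for k in keys if cell_for_key(k, num_cells) == cell]


keys = [f"example-bucket/object-{i}" for i in range(10_000)]
affected = keys_in_cell(keys, cell=3, num_cells=16)
# Restarting cell 3 touches roughly 1/16 of the keys, not all of them.
print(len(affected), "of", len(keys), "keys affected")
```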

01/03/2017: AWS S3 cloud storage crash takes thousands of sites offline

Websites relying on Amazon Web Services (AWS) felt the impact of a "0.1% chance" outage last night, as the cloud giant's S3 storage service went offline for many customers.

The two-and-a-half-hour issue started at 11.35am PST (7.35pm GMT) yesterday and lasted until 2.08pm PST (10.08pm GMT), affecting AWS services running out of its Northern Virginia data centre in the US.

AWS's health service dashboard said at the time of the incident: "We continue to experience high error rates with S3 in US-EAST-1, which is impacting various AWS services." Those services included its on-premises-to-cloud connection mechanism, Storage Gateway; relational database Amazon RDS; Data Pipeline; Elastic Beanstalk; Redshift; Kinesis; and Elastic MapReduce.

On Twitter, AWS said: "S3 is experiencing high error rates. We are working hard on recovering."

Cloud comparison site CloudHarmony showed that only the US-EAST-1 facility was experiencing downtime. S3 usually offers 99.9% uptime, but yesterday's outage saw its monthly average in the Virginia region drop to 99.59%.
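To put those percentages in context, an uptime figure can be read as the share of minutes in a period during which a service was available. The Python sketch below converts between the two; it is a back-of-the-envelope illustration only. The function names are hypothetical, CloudHarmony's figure comes from its own monitoring, and AWS's S3 SLA is calculated from request error rates rather than simple minutes of downtime, so real figures need not match this arithmetic.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(uptime_pct: float, days: int) -> float:
    """Minutes of downtime an uptime percentage permits over `days` days."""
    return days * MINUTES_PER_DAY * (1 - uptime_pct / 100)


def availability_pct(downtime_minutes: float, days: int) -> float:
    """Availability over `days` days, given total minutes of downtime."""
    return 100 * (1 - downtime_minutes / (days * MINUTES_PER_DAY))


print(round(allowed_downtime_minutes(99.9, 30), 1))  # 43.2
print(round(availability_pct(270, 30), 3))  # 99.375 (4.5 hours down in 30 days)
```

On this simple measure, a 99.9% target leaves room for only about 43 minutes of downtime in a 30-day month.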

At first, AWS's health dashboard didn't reflect any of the trouble at the Virginia facility, but AWS later explained this was itself caused by the S3 problem, and it got the dashboard back up and running before the service itself had recovered.

According to web technology tracker SimilarTech, 148,213 sites, spanning 121,761 unique domains and including Amazon's own websites, rely on S3, although it's unclear how many rely solely on the US facility in question.

By 9.12pm GMT, S3 object retrieval, listing and deletion had fully recovered, and at 9.49pm GMT AWS confirmed it had restored the ability to add new objects to S3, the last operation still showing a "high error rate".

"As of 1:49 PM PST, we are fully recovered for operations for adding new objects in S3, which was our last operation showing a high error rate. The Amazon S3 service is operating normally," the dashboard said.

AWS tweeted: "For S3, we believe we understand root cause and are working hard at repairing. Future updates across all services will be on dashboard."

It is not yet clear what the root cause is.

The downtime spurred a wave of comment from industry experts and rivals, including file-sharing firm Egnyte, which focuses on a hybrid cloud proposition.

CEO Vineet Jain said: "Whether you are a small business whose ability to complete transactions is halted, or you are a large enterprise whose international operations are disrupted, if you rely solely on the cloud it can do significant damage to your business.

"While the outage has shown the massive footprint that AWS has, it also showed how badly they need a hybrid piece to their solution. Hybrid remains the best pragmatic approach for businesses who are working in the cloud, protecting them from downtime, money lost, and a number of other problems caused by outages like today."

With AWS running 42 availability zones (each consisting of one or more data centres) in 16 geographic regions including the UK, many customers will have been unaffected by the US facility issue.

Shawn Moore, CTO of web experience platform Solodev, said: "There are many businesses hosted on Amazon that are not having these issues. The difference is that the ones who have fully embraced Amazon's design philosophy to have their website data distributed across multiple regions were prepared.

"This is a wake-up call for those hosted on AWS and other providers to take a deeper look at how their infrastructure is set up and emphasises the need for redundancy - a capability that AWS offers, but it's now being revealed how few were actually using."

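The redundancy Moore describes can be as simple as keeping a copy of the data in a second region and reading from it when the first one fails. The Python sketch below shows that fallback pattern under stated assumptions: the data is assumed to have already been copied to the other region (for example with S3 cross-region replication), and `fetch_with_fallback`, `fake_fetch` and `AllRegionsFailed` are hypothetical names. In practice `fetch` would wrap a real storage client configured for each region.

```python
from typing import Callable, Sequence


class AllRegionsFailed(Exception):
    """Raised when no region could return the requested object."""


def fetch_with_fallback(
    key: str,
    regions: Sequence[str],
    fetch: Callable[[str, str], bytes],
) -> tuple[str, bytes]:
    """Try each region in order and return (region, data) from the first success.

    `fetch(region, key)` stands in for whatever client call reads an object
    from a given region; it is expected to raise an exception on failure.
    """
    errors: dict[str, Exception] = {}
    for region in regions:
        try:
            return region, fetch(region, key)
        except Exception as exc:  # note the failure, then try the next region
            errors[region] = exc
    raise AllRegionsFailed(f"could not fetch {key!r} from any region: {errors}")


# Hypothetical fetcher simulating US-EAST-1 returning errors.
def fake_fetch(region: str, key: str) -> bytes:
    if region == "us-east-1":
        raise ConnectionError("high error rates")
    return f"{key} served from {region}".encode()


print(fetch_with_fallback("index.html", ["us-east-1", "us-west-2"], fake_fetch))
# ('us-west-2', b'index.html served from us-west-2')
```

Trying regions in a fixed order keeps reads predictable; the trade-off is that a replicated copy may lag slightly behind the primary.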
-

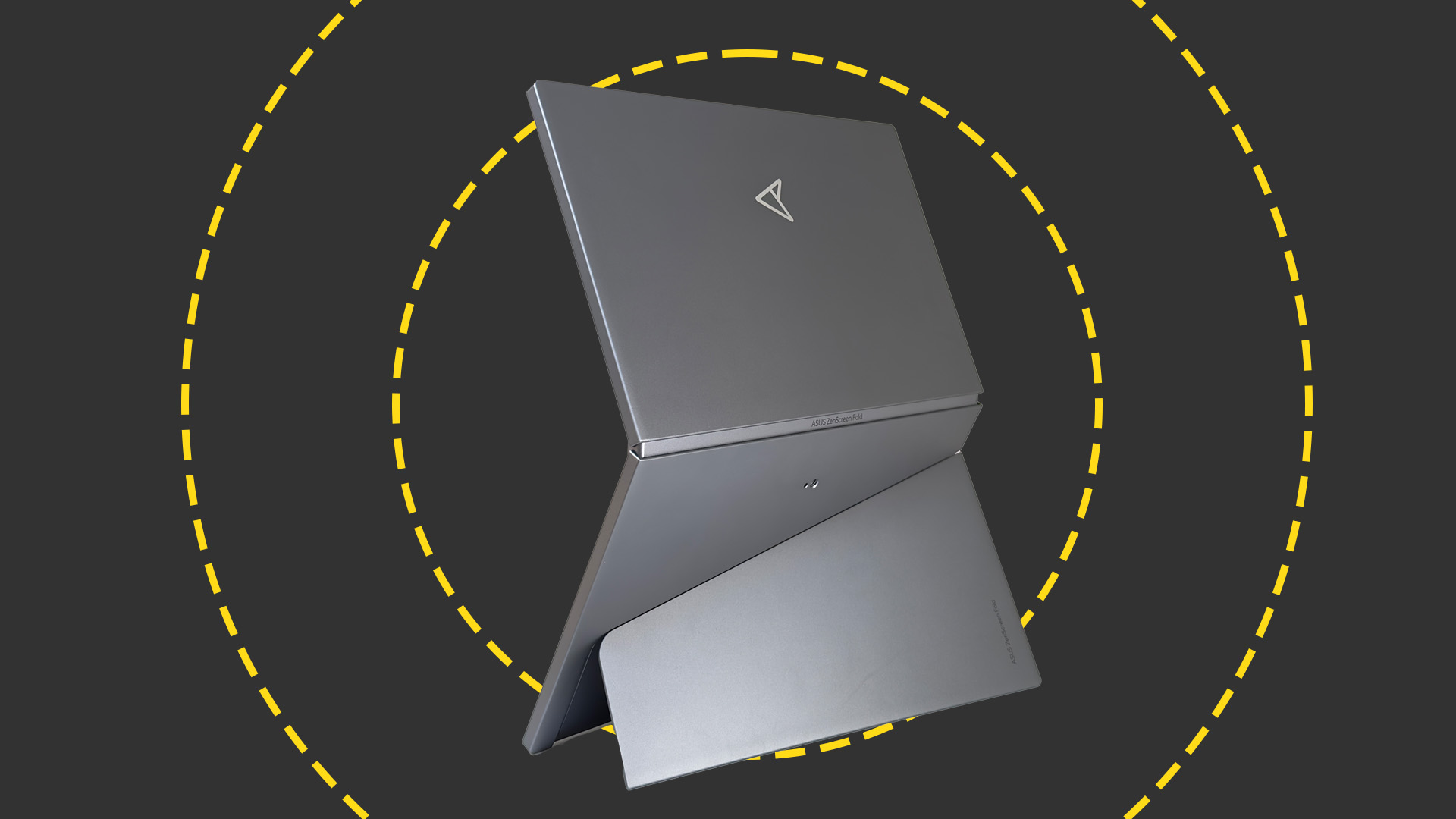

Asus ZenScreen Fold OLED MQ17QH review

Asus ZenScreen Fold OLED MQ17QH reviewReviews A stunning foldable 17.3in OLED display – but it's too expensive to be anything more than a thrilling tech demo

By Sasha Muller

-

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto Networks

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto NetworksCase study Adopting zero trust is a necessity when your own users are trying to launch cyber attacks

By Rory Bathgate