Red Hat teams up with US Department of Energy Laboratories

Series of collaborations will establish best practices for running next-generation HPC workloads

Open source giant Red Hat has announced a collaboration with multiple US Department of Energy (DOE) laboratories to improve cloud-native standards and practices in high-performance computing (HPC).

The labs involved are Lawrence Berkeley National Laboratory, Lawrence Livermore National Laboratory and Sandia National Laboratories.

As adoption of HPC expands beyond traditional use cases, the aim is to bridge the gap between HPC and cloud environments and lay the foundations for the arrival of exascale supercomputers.

The collaboration will combine Red Hat’s expertise in cloud-native innovation with the laboratories’ knowledge of massive-scale HPC deployments. By establishing a common foundation of technology best practices, the partners will look to use standardized container platforms to link HPC and cloud computing footprints.

Ultimately, that will help to fill potential gaps in building cloud-friendly HPC applications, while also creating common usage patterns for industry, enterprise and HPC deployments, Red Hat said.

“The HPC community has served as the proving ground for compute-intensive applications, embracing containers early on to help deal with a new set of scientific challenges and problems,” explained Chris Wright, senior vice president and chief technology officer at Red Hat.

“That led to the lack of standardization across various HPC sites, creating barriers to building and deploying containerized applications that can effectively span large-scale HPC, commercial and cloud environments, while also taking advantage of emerging hardware accelerators.

“Through our collaboration with leading laboratories, we are working to remove these barriers, opening the door to liberating next-generation HPC workloads.”

Red Hat says it will do this by focusing on advancing four key areas that address current gaps: standardization, scale, cloud-native application development, and container storage.

Among the planned collaborative projects, Red Hat and the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory will work together on bringing standard container technologies to HPC. The pair will work on enhancements to Podman, the daemonless container engine for Linux, to enable it to replace Shifter, NERSC’s custom-developed container runtime.

Red Hat is also expanding its collaboration with Sandia National Laboratories to explore deployment scenarios of Kubernetes-based infrastructure at extreme scale, as well as teaming up with the Lawrence Livermore National Laboratory to bring HPC job schedulers, such as Flux, to Kubernetes through a standardized programmatic interface.

Additionally, Red Hat and the three DOE labs said they are aiming to reimagine storage for containers and develop a set of standard interfaces to manage various container image formats and provide access to distributed file systems.

As a result, HPC sites will be able to abstract away the “immense complexities their environments can present”, and the work will benefit the range of US exascale machines being deployed by the DOE, Red Hat said.

“High-performance computing infrastructure must adapt to the requirements of today's heterogeneous workloads, including workloads that use containers,” commented Earl Joseph, PhD, chief executive officer at Hyperion Research.

“Red Hat’s partnership with the DOE labs is designed to allow the new generation of HPC applications to run in containers at exascale while utilizing distributed file system storage, providing a strong example of collaboration between industry and research leaders.”

Dan is a freelance writer and regular contributor to ChannelPro, covering the latest news stories across the IT, technology, and channel landscapes. Topics regularly cover cloud technologies, cyber security, software and operating system guides, and the latest mergers and acquisitions.

A journalism graduate from Leeds Beckett University, he combines a passion for the written word with a keen interest in the latest technology and its influence in an increasingly connected world.

He started writing for ChannelPro back in 2016, focusing on a mixture of news and technology guides, before becoming a regular contributor to ITPro. Elsewhere, he has previously written news and features across a range of other topics, including sport, music, and general news.

-

Should AI PCs be part of your next hardware refresh?

Should AI PCs be part of your next hardware refresh?AI PCs are fast becoming a business staple and a surefire way to future-proof your business

By Bobby Hellard

-

Westcon-Comstor and Vectra AI launch brace of new channel initiatives

Westcon-Comstor and Vectra AI launch brace of new channel initiativesNews Westcon-Comstor and Vectra AI have announced the launch of two new channel growth initiatives focused on the managed security service provider (MSSP) space and AWS Marketplace.

By Daniel Todd

-

Google claims its AI chips are ‘faster, greener’ than Nvidia’s

Google claims its AI chips are ‘faster, greener’ than Nvidia’sNews Google's TPU has already been used to train AI and run data centres, but hasn't lined up against Nvidia's H100

By Rory Bathgate

-

£30 million IBM-linked supercomputer centre coming to North West England

£30 million IBM-linked supercomputer centre coming to North West EnglandNews Once operational, the Hartree supercomputer will be available to businesses “of all sizes”

By Ross Kelly

-

How quantum computing can fight climate change

How quantum computing can fight climate changeIn-depth Quantum computers could help unpick the challenges of climate change and offer solutions with real impact – but we can’t wait for their arrival

By Nicole Kobie

-

“Botched government procurement” leads to £24 million Atos settlement

“Botched government procurement” leads to £24 million Atos settlementNews Labour has accused the Conservative government of using taxpayers’ money to pay for their own mistakes

By Zach Marzouk

-

Dell unveils four new PowerEdge servers with AMD EPYC processors

Dell unveils four new PowerEdge servers with AMD EPYC processorsNews The company claimed that customers can expect a 121% performance improvement

By Zach Marzouk

-

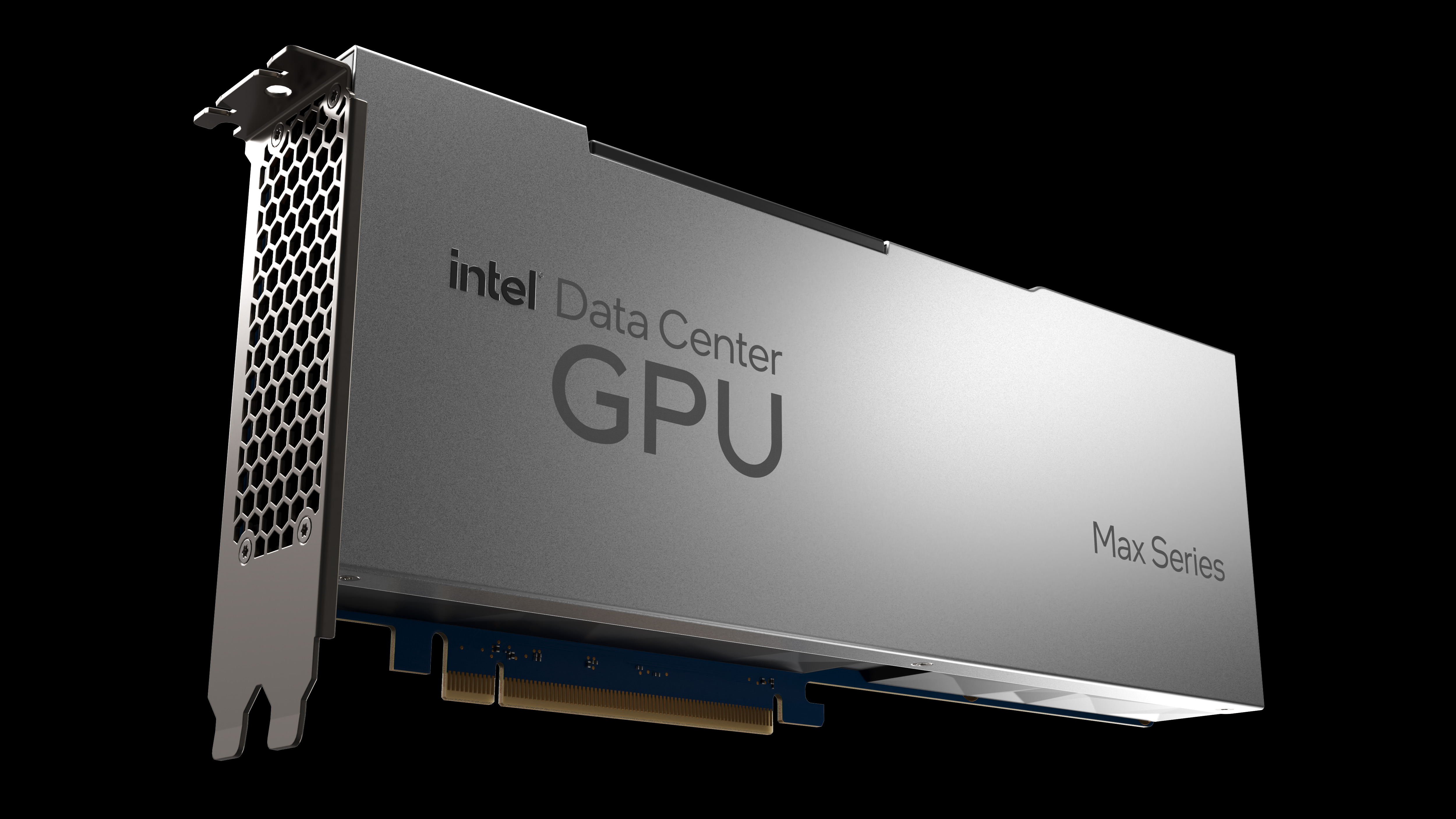

Intel unveils Max Series chip family designed for high performance computing

Intel unveils Max Series chip family designed for high performance computingNews The chip company claims its new CPU offers 4.8x better performance on HPC workloads

By Zach Marzouk

-

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversary

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversaryNews Chinese computing giant launches more than 50 new products for ThinkSystem server portfolio

By Bobby Hellard

-

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processor

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processorNews The new processor will cater to both space missions and Earth-based applications

By Praharsha Anand