Google Cloud doubles down on AI Hypercomputer amid sweeping compute upgrades

Chips to tackle next-generation AI and an open approach are part of Google Cloud’s comprehensive AI offer

Google Cloud has announced a widespread expansion across its cloud infrastructure, promising a varied and powerful approach to AI training, inference, and data processing.

At Google Cloud Next 2024, its annual conference held at Mandalay Bay in Las Vegas, Google Cloud has laid out a range of new advancements for its ‘AI Hypercomputer’ architecture, aimed at helping customers unlock the full potential of AI models.

To serve the ever-growing demand of its customers, the AI Hypercomputer brings together Google Cloud’s TPUs and GPUs as well as its AI software to offer a wide portfolio of generative AI training options.

One of the central pillars of the AI Hypercomputer architecture is Google Cloud’s tensor processing units (TPUs), circuits specifically tailored for neural networks and AI acceleration. Google Cloud has announced that the latest iteration, TPU v5p, is now generally available.

Google Cloud first announced the TPU v5p in December 2023, claiming it can train large language models three times as fast as the preceding generation. Each TPU v5p pod contains 8,960 chips, with memory bandwidth improvements of 300% per chip.

Google Cloud’s A3 virtual machines (VMs), announced in May 2023, will be joined by a new ‘A3 Mega’ VM. Featuring an array of Nvidia’s H100 GPUs in each VM, A3 Mega will offer twice the GPU-to-GPU networking bandwidth, making it a strong fit for running and training the largest AI workloads on the market.

A new service called Hyperdisk ML will help enterprises leverage block storage to improve data access for AI and machine learning (ML) purposes. It follows the 2023 announcement of Google Cloud Hyperdisk, a block storage service that helps businesses connect durable storage devices to individual VM instances.

Hyperdisk ML was made with AI in mind, with the ability to cache data across servers so that it can serve thousands of concurrent inference workloads if necessary. In a briefing, Google Cloud stated Hyperdisk ML can load models up to 12 times faster than alternative solutions.

In its sprint for greater power to support workloads, Google Cloud will also soon play host to Nvidia’s Blackwell family of chips. This pushes Google Cloud VMs to the frontier of performance, as the Nvidia HGX B200 is capable of more than tenfold improvements over Nvidia’s Hopper chips for AI use cases.

The GB200 NVL72 brings even more power to the table, combining 36 Grace CPUs and 72 Blackwell GPUs in a system that could handle the trillion-parameter LLMs of the near future. With liquid cooling and its high-density, low-latency design, the data center solution can deliver 25 times the performance of an Nvidia H100 while using the same power and less water.

This puts it more in line with green data centers, a key selling point for Google as it balances AI energy demand with its own sustainability goals.
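A back-of-the-envelope calculation gives a rough sense of why trillion-parameter models call for rack-scale systems such as the GB200 NVL72. The Python sketch below is illustrative only and does not come from Google Cloud or Nvidia. The function names are hypothetical, and it counts model weights alone, ignoring activations, optimizer state, and caches, all of which add to the real footprint.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weights_terabytes(num_params: int, precision: str) -> float:
    """Memory for model weights alone, in terabytes (1 TB = 1e12 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e12


def gpus_per_cpu(gpus: int, cpus: int) -> float:
    """Ratio of GPUs to CPUs in a system."""
    return gpus / cpus


if __name__ == "__main__":
    trillion = 10**12
    for precision in BYTES_PER_PARAM:
        size = weights_terabytes(trillion, precision)
        print(f"{precision}: {size:.1f} TB of weights")
    # Figures from the article: 72 Blackwell GPUs and 36 Grace CPUs.
    print(f"GPUs per CPU: {gpus_per_cpu(72, 36):.0f}")
```

Even at 16-bit precision, the weights of a one-trillion-parameter model run to terabytes, which is why such models have to be spread across many tightly connected GPUs.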

AWS also recently announced it is bringing Blackwell to its platform, pitting the two hyperscalers against each other in terms of performance potential. The key differentiator here is that the partnership between Google Cloud and Nvidia runs parallel to the former’s wider investment in competitive hardware for business use cases.

“Our strategy here is really centered around designing and optimizing at a system level, across compute, storage, networking, hardware, and software to meet the unique needs of each and every workload,” said Mark Lohmeyer, VP/GM Compute & ML Infrastructure at Google Cloud.

Leaning into open, general purpose compute

Against the backdrop of specific AI needs, Google Cloud has also taken the decision to ramp up its line of general-purpose cloud compute offerings. With this in mind, the firm has announced a general-purpose CPU for data centers named Google Axion.

Axion is Google Cloud’s first Arm-based CPU and is already being used to power Google services such as Bigtable, BigQuery, and Google Earth Engine. The firm said Axion is capable of performance improvements of as much as 50% compared to current generation x86 instances and a 30% improvement over other Arm-based instances.

The chip is also expected to deliver up to 60% better energy efficiency than comparable x86 instances, which businesses could exploit to lower running costs and hit environmental targets more easily.
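Percentage claims like these are easy to misread, so it can help to convert them into the time and energy needed for a fixed amount of work. The Python sketch below is illustrative only. It assumes “performance” means throughput and “energy efficiency” means work done per unit of energy, which Google Cloud’s announcement may define differently, and the function names are hypothetical.

```python
def relative_runtime(perf_gain: float) -> float:
    """Runtime for a fixed workload relative to the baseline.

    perf_gain is the fractional throughput improvement, e.g. 0.5 for 50%.
    """
    if perf_gain <= -1.0:
        raise ValueError("perf_gain must be greater than -1")
    return 1.0 / (1.0 + perf_gain)


def relative_energy(efficiency_gain: float) -> float:
    """Energy for a fixed workload relative to the baseline.

    efficiency_gain is the fractional gain in work per unit of energy,
    e.g. 0.6 for 60%.
    """
    if efficiency_gain <= -1.0:
        raise ValueError("efficiency_gain must be greater than -1")
    return 1.0 / (1.0 + efficiency_gain)


if __name__ == "__main__":
    # Headline figures from the article, treated as best-case values.
    runtime = relative_runtime(0.5)
    energy = relative_energy(0.6)
    print(f"Runtime vs x86 baseline: {runtime:.1%}")
    print(f"Energy vs x86 baseline: {energy:.1%}")
```

Under these assumptions, a 50% performance gain cuts runtime by about a third rather than a half, and 60% better energy efficiency means the same work uses 37.5% less energy rather than 60% less.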

Axion is built on an open foundation, with the aim of making it as interoperable as possible so that customers don’t need to rewrite any code to get the best out of the CPU.

Google Cloud has also announced two new VMs, C4 and N4, which will be the first in the industry to boast Intel’s 5th Gen Xeon CPUs. Google Cloud stated that N4 excels at workloads that don’t need to be run flat-out for long periods of time, giving the examples of microservices, virtual desktops, and data analytics.

In contrast, C4 is intended to bolster mission-critical workloads, boasting a 25% performance improvement over the C3 VM and ‘hitless’ updates to ensure critical services can run uninterrupted.

Across its new infrastructure offerings, Google Cloud has emphasized the importance of meeting customer demands for performance without compromising on price or energy efficiency. In casting a wide net, the hyperscaler has shown concrete improvements across all compute demands rather than simply doubling down on the most high-end workloads.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

Bigger salaries, more burnout: Is the CISO role in crisis?

Bigger salaries, more burnout: Is the CISO role in crisis?In-depth CISOs are more stressed than ever before – but why is this and what can be done?

By Kate O'Flaherty Published

-

Cheap cyber crime kits can be bought on the dark web for less than $25

Cheap cyber crime kits can be bought on the dark web for less than $25News Research from NordVPN shows phishing kits are now widely available on the dark web and via messaging apps like Telegram, and are often selling for less than $25.

By Emma Woollacott Published

-

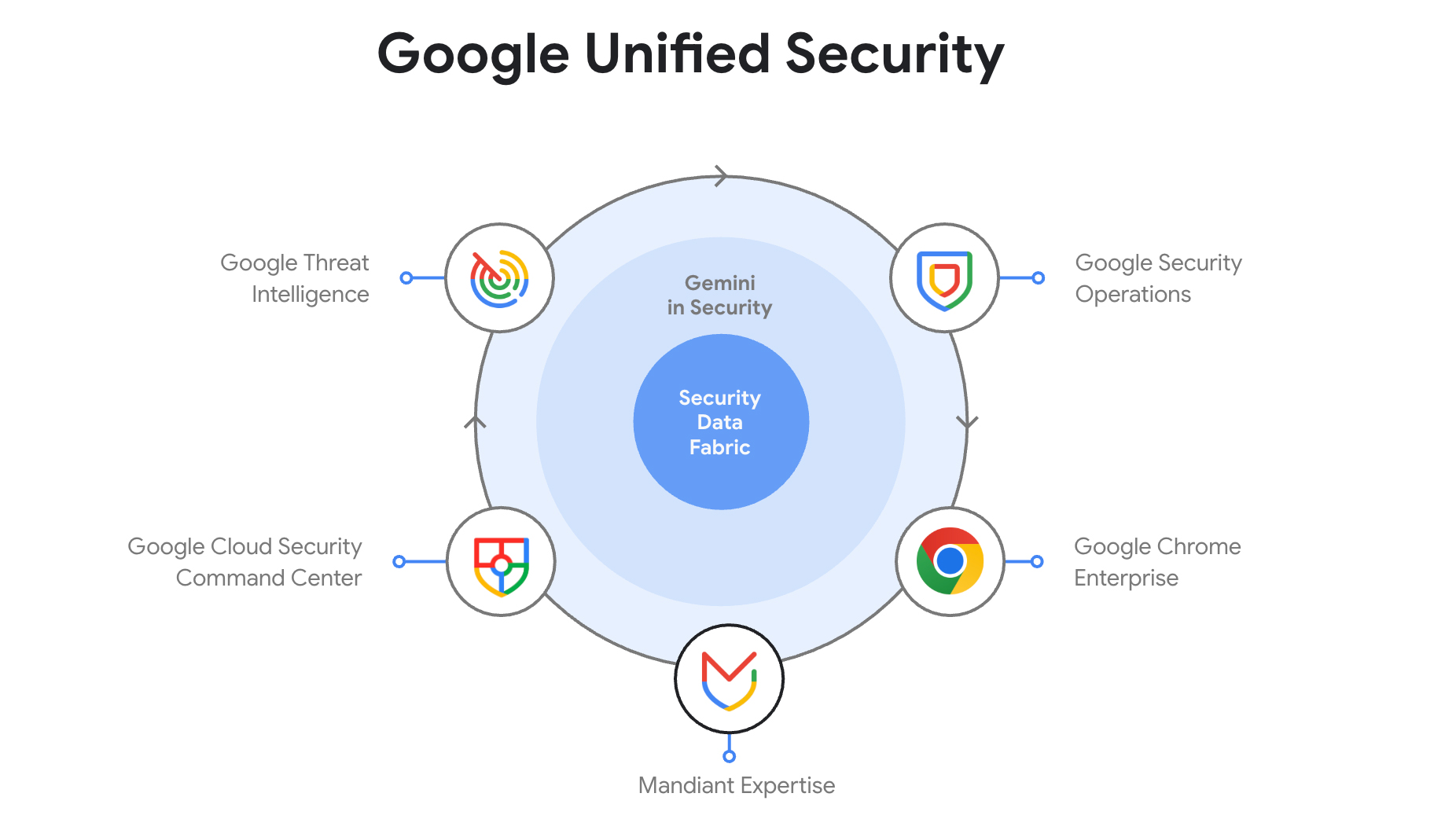

Google Cloud wants to tackle cyber complexity – here's how it plans to do it

Google Cloud wants to tackle cyber complexity – here's how it plans to do itNews Google Unified Security will combine all the security services under Google’s umbrella in one combined cloud platform

By Rory Bathgate Published

-

Google Cloud Next 2025: All the live updates as they happened

Google Cloud Next 2025: All the live updates as they happenedLive Blog Google Cloud Next 2025 is officially over – here's everything that was announced and shown off in Las Vegas

By Rory Bathgate Last updated

-

Google Cloud Next 2025 is the hyperscaler’s chance to sell itself as the all-in-one AI platform for enterprises

Google Cloud Next 2025 is the hyperscaler’s chance to sell itself as the all-in-one AI platform for enterprisesAnalysis With a focus on the benefits of a unified approach to AI in the cloud, the ‘AI first’ cloud giant can build on last year’s successes

By Rory Bathgate Published

-

The Wiz acquisition stakes Google's claim as the go-to hyperscaler for cloud security – now it’s up to AWS and industry vendors to react

The Wiz acquisition stakes Google's claim as the go-to hyperscaler for cloud security – now it’s up to AWS and industry vendors to reactAnalysis The Wiz acquisition could have monumental implications for the cloud security sector, with Google raising the stakes for competitors and industry vendors.

By Ross Kelly Published

-

Google confirms Wiz acquisition in record-breaking $32 billion deal

Google confirms Wiz acquisition in record-breaking $32 billion dealNews Google has confirmed plans to acquire cloud security firm Wiz in a deal worth $32 billion.

By Nicole Kobie Published

-

Microsoft hit with £1 billion lawsuit over claims it’s “punishing UK businesses” for using competitor cloud services

Microsoft hit with £1 billion lawsuit over claims it’s “punishing UK businesses” for using competitor cloud servicesNews Customers using rival cloud services are paying too much for Windows Server, the complaint alleges

By Emma Woollacott Published

-

Microsoft's Azure growth isn't cause for concern, analysts say

Microsoft's Azure growth isn't cause for concern, analysts sayAnalysis Azure growth has slowed slightly, but Microsoft faces bigger problems with expanding infrastructure

By George Fitzmaurice Published

-

The Open Cloud Coalition wants to promote a more competitive European cloud market – but is there more to the group than meets the eye?

The Open Cloud Coalition wants to promote a more competitive European cloud market – but is there more to the group than meets the eye?Analysis The launch of the Open Cloud Coalition is the latest blow in a war of words between Microsoft and Google over European cloud

By Nicole Kobie Published