Nvidia’s new Ethernet networking platform promises unrivaled efficiency gains for multi-tenant AI clouds

The hardware giant is building an Israeli supercomputer to help deliver the platform

Nvidia has announced a new Ethernet networking platform, designed to deliver extremely fast, highly efficient connectivity for AI workloads across cloud environments.

Spectrum-X promises to enhance the development of multi-tenant, hyperscale AI clouds by improving the efficiency of Ethernet networks and their ability to move data.

Nvidia said the solution will provide cloud service providers with more effective ways to train and operate generative AI in the cloud and could allow data scientists to uncover insights more quickly.

Multi-tenant cloud data centers offer businesses affordable compute for their workloads, but rely on Ethernet connectivity, which is widely considered inferior to the InfiniBand interconnects used in many high-performance computing (HPC) setups.

Nvidia’s new platform aims to improve the speed and efficiency with which cloud data centers process data, allowing them to run AI workloads effectively without sacrificing the benefits of the Ethernet-based, multi-tenant model.

Due to its interoperability with Ethernet-based stacks, Spectrum-X could be an attractive proposition for enterprises looking to plug AI workloads into existing cloud infrastructure.

Nvidia cited Dell Technologies, Lenovo, and Supermicro as companies already making use of the platform.

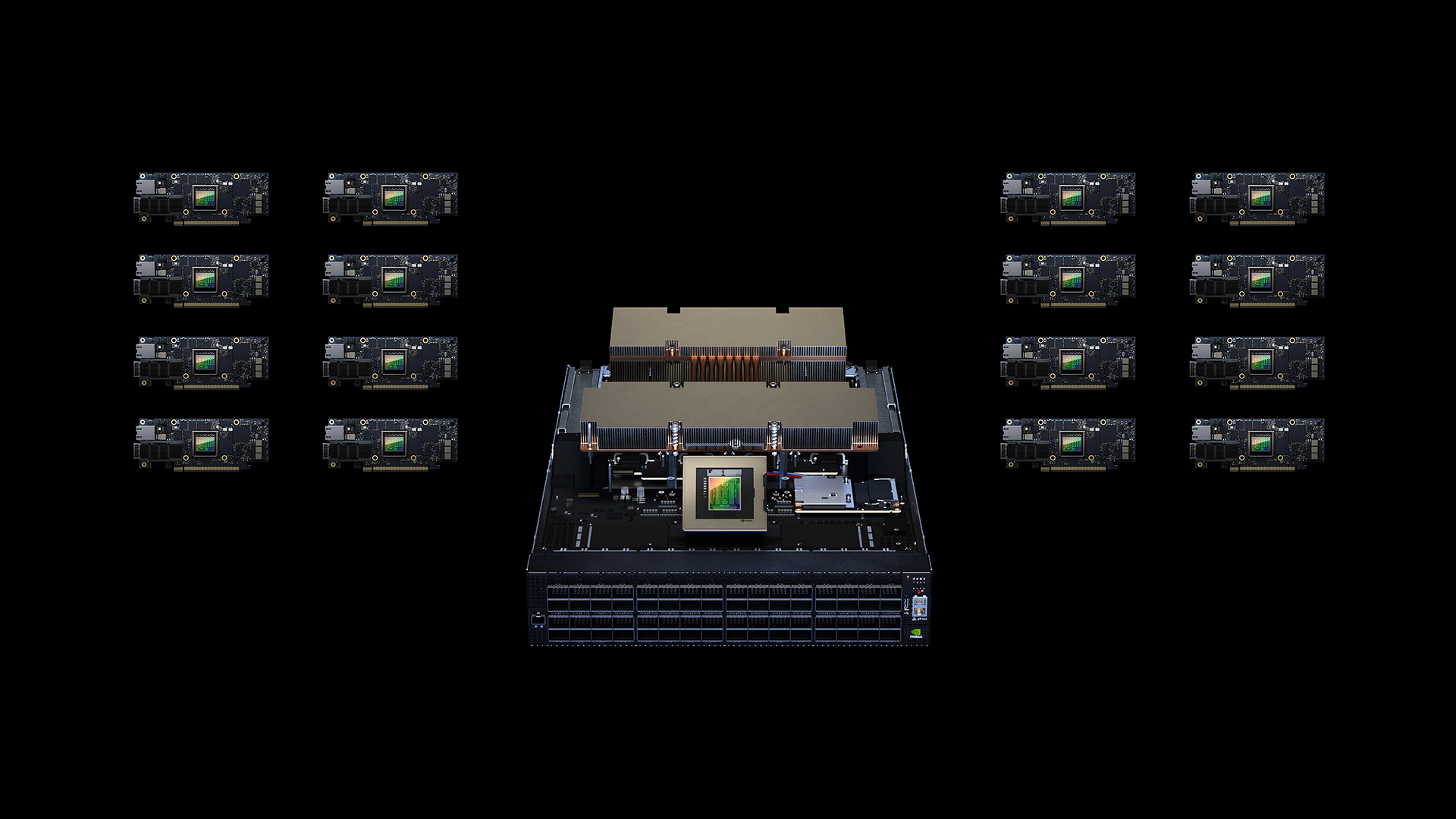

The platform combines Nvidia’s Spectrum Ethernet switches with its dedicated data processing units (DPUs) and, according to Nvidia, can boost overall AI performance and power efficiency by 1.7x.

The platform’s Spectrum-4 switches can be configured with up to 64 ports of 800Gb/s Ethernet or 128 ports of 400Gb/s Ethernet, delivering 51.2Tb/s of switching and routing capacity.
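The 51.2Tb/s figure follows directly from the port configurations: both options multiply out to the same aggregate capacity. The short Python sketch below is a back-of-the-envelope check only, assuming the headline number is simply port count multiplied by per-port line rate; the `aggregate_tbps` helper and `CONFIGS` table are illustrative names, not anything published by Nvidia.

```python
# Back-of-the-envelope check: aggregate capacity = port count x per-port line rate.
# Assumption: the 51.2Tb/s headline figure counts each port's line rate once.

# Hypothetical table of the two Spectrum-4 port configurations quoted above.
CONFIGS = {
    "64 x 800GbE": (64, 800),
    "128 x 400GbE": (128, 400),
}


def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Return total capacity in terabits per second (1Tb/s = 1,000Gb/s)."""
    return ports * gbps_per_port / 1000


if __name__ == "__main__":
    for name, (ports, rate) in CONFIGS.items():
        print(f"{name}: {aggregate_tbps(ports, rate):.1f} Tb/s")
```

Both lines print 51.2 Tb/s, which is why the switch can trade port count against per-port speed without changing its overall capacity.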

On Monday, the firm also announced that it is building a supercomputer at its Israeli data center, which will act as a testbed for Spectrum-X designs.

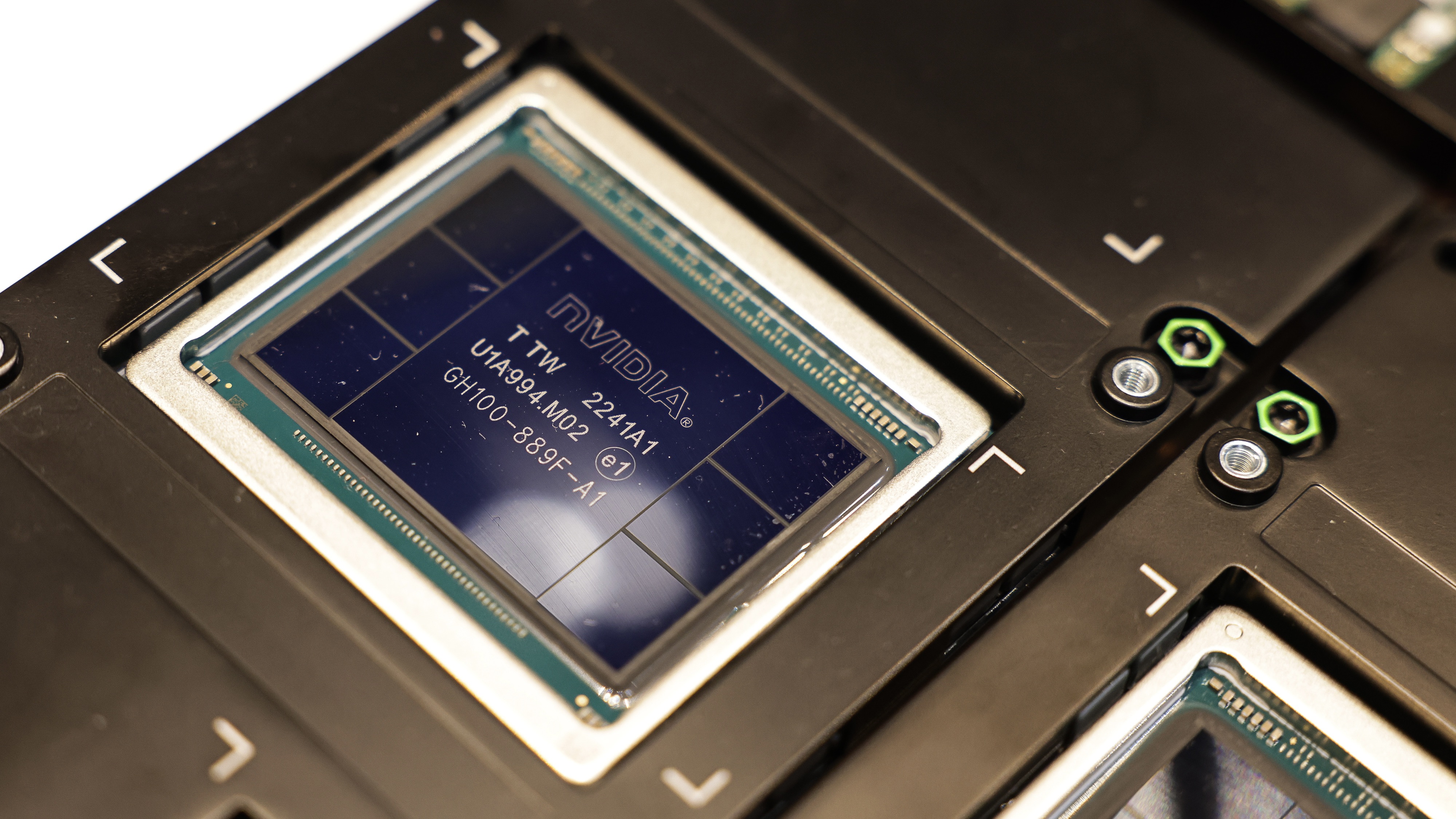

Israel-1 will be built on Dell PowerEdge XE9680 servers, each containing eight Nvidia H100 GPUs, and will deliver eight exaflops of AI performance and 130 petaflops for scientific computing workloads when it comes online in late 2023.

The project builds on work carried out by staff in Nvidia’s networking division, formerly part of Mellanox, the Israeli chip firm Nvidia agreed to acquire for $6.9 billion (£5.5 billion) in 2019.

“Transformative technologies such as generative AI are forcing every enterprise to push the boundaries of data center performance in pursuit of competitive advantage,” said Gilad Shainer, senior vice president of networking at Nvidia, in a blog post.

“Nvidia Spectrum-X is a new class of Ethernet networking that removes barriers for next-generation AI workloads that have the potential to transform entire industries.”

Analysis of Nvidia's Spectrum-X

Nvidia has been working in the AI space for years, but with the advent of commercial generative AI, it has truly cemented its place as a core supplier of hardware and networking solutions in the field.

Big tech has flocked to the firm for its A100 and H100 GPUs to meet the scaling demands of AI workloads, with Microsoft having built a supercomputer using Nvidia chips to train OpenAI’s GPT-4.

Recent months have brought rumors of Microsoft developing its own AI chip, codenamed ‘Athena’, as it continues to put AI at the center of its strategy through products such as the AI-powered Bing and Edge, and Microsoft 365 Copilot.

While Nvidia cannot corner the AI hardware market entirely, it has positioned itself as an invaluable player in AI through a broad portfolio spanning training and accelerator architectures, as well as partnerships such as its work with Dell Technologies and on Microsoft’s supercomputer project.

Notably, its acquisition of Mellanox has enabled it to deliver advanced networking solutions like Spectrum-X, demand for which will only grow as firms look to scale AI workloads in the cloud with as little latency as possible.

Developers also benefit from Nvidia’s mature CUDA software framework, which can be used to build software that runs across platforms and successive hardware generations.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
