The benefits of workload optimisation

Optimisation can boost performance and save money – and it doesn’t have to be difficult, either

Once your organisation moves core workloads to the cloud, the cost, availability and performance of those cloud resources have a direct impact on productivity and the bottom line.

Go too far one way and overprovision, and you can drive up costs to no real benefit. Respondents to Flexera’s 2021 State of the Cloud report estimated that 30% of their cloud spend was being wasted – and that’s probably understated. A lack of visibility and control of costs plays its part, but underutilisation of cloud resources is a major contributor.

Go too far the other way, or place workloads in the wrong cloud infrastructure, and you’ll find that poor performance, latency or bandwidth-related bottlenecks render any other benefits or efficiencies from moving to the cloud near irrelevant. A 2019 IDC survey showed that, after security and cost, performance was the third biggest driver for firms repatriating applications from the cloud.

With this in mind, how can enterprises optimise their cloud workloads and ensure that they’re using the best possible mix of cloud resources? The easiest answer would be to get things right in the provisioning, design and implementation of cloud services. When organisations take the time to work out their requirements and select the right cloud resources for each workload, it’s easier to ensure that the workload will run as productively, efficiently and cost-effectively as possible.

This involves getting to grips with the application’s requirements in terms of storage, bandwidth, data and compute resources, and finding the platform most appropriate to those needs. It means taking latency, data flows and interdependencies with other applications into account. It also means understanding business requirements – accessibility, productivity, compliance and time to market – and balancing these with security, cost and the more technical requirements around performance and access to data.

This balance will be different for each application and will depend on how mission critical it is to existing operations and forward-looking plans. Every business has different priorities, whether those are driving cost efficiencies or adding agility and accelerating time to market. These need to be reflected in how and where their workloads run.

The right workload, the right cloud

Assigning workloads to the most appropriate infrastructure is hard. It takes experience and expertise to know what will fly in public cloud and what would be better suited to on premises. This is one reason why HPE’s GreenLake Hybrid Cloud services are supported by the HPE Right Mix Advisor – a service which looks at the applications a business plans to migrate to the cloud, then uses automation to collect data about how they operate, in order to deliver recommendations on the best cloud destination and the right cloud-migration approach. Right Mix Advisor tracks how applications are used, their performance requirements and data dependencies, to give enterprises confidence that, when they move them to the cloud, they’ve moved them to the right hybrid cloud platform.

But here’s the thing about cloud: the landscape is always changing. New services appear with new performance characteristics, costs rise and fall, and new requirements emerge as enterprises explore new opportunities in machine learning, IoT, analytics or edge computing. What’s more, many organisations have moved to hybrid cloud in a somewhat ad-hoc manner, with different teams buying into different IaaS or PaaS services as the need arose. Sometimes they will have overprovisioned, resulting in unnecessary expense. Sometimes they will have underestimated the compute power or bandwidth needed by a workload or failed to take peaks of demand into account, resulting in poor performance. Often, the way an application is used, or even the number of people using it, will change over time.

How to optimise

So what can enterprises do to optimise those workloads once they’re in place? For starters, they need to adopt a data-driven approach, monitoring, auditing and analysing to capture as much data as possible about key workloads – how they’re used, which resources they are utilising and how intensively, how they’re performing and how they’re affected by storage or data-transfer bottlenecks or latency. It’s vital here to use all logs and tools at your disposal, particularly those built into your cloud platforms, and work to eliminate estimates and guesswork.

With that information in hand, it’s easier to see which workloads are well optimised, which are struggling for resources, and which are over-resourced and wasting money. Once you know that, you can look at adjusting your cloud services or moving applications: right-sizing workloads to make sure they have appropriate resources, or moving them to a different cloud – or back on premises – if the current environment doesn’t meet that workload’s needs. While there’s a tendency to see cloud repatriation as cloud failure, it’s actually smarter to see it as an act of rebalancing, moving the workload to the best platform in terms of latency, performance or data sovereignty right now.
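As a rough illustration of that sorting step, the Python sketch below places each workload into one of the three groups described above, using peak CPU utilisation and 95th-percentile latency against a target. The field names, the thresholds and the sample workloads are all hypothetical, chosen only for illustration; in practice the figures would come from the monitoring tools built into your cloud platforms, and the cut-offs would be tuned per workload.

```python
from dataclasses import dataclass


@dataclass
class WorkloadMetrics:
    """Hypothetical summary of one workload over a monitoring window."""
    name: str
    peak_cpu_pct: float       # highest CPU utilisation seen in the window
    p95_latency_ms: float     # 95th-percentile response time
    latency_target_ms: float  # what the business expects


# Illustrative thresholds only (assumptions, not recommendations).
OVER_RESOURCED_PEAK_PCT = 40.0
STRUGGLING_PEAK_PCT = 90.0


def classify(m: WorkloadMetrics) -> str:
    """Sort a workload into one of three right-sizing groups."""
    # Saturated CPU at peak, or missing the latency target: needs more resources.
    if m.peak_cpu_pct >= STRUGGLING_PEAK_PCT or m.p95_latency_ms > m.latency_target_ms:
        return "struggling"
    # Never gets busy, even at peak: probably paying for unused capacity.
    if m.peak_cpu_pct < OVER_RESOURCED_PEAK_PCT:
        return "over-resourced"
    return "well optimised"


if __name__ == "__main__":
    # Made-up sample data for demonstration.
    fleet = [
        WorkloadMetrics("billing-api", 95.0, 180.0, 200.0),
        WorkloadMetrics("reporting-batch", 22.0, 90.0, 500.0),
        WorkloadMetrics("web-frontend", 65.0, 120.0, 200.0),
    ]
    for m in fleet:
        print(f"{m.name}: {classify(m)}")
```

Peak rather than average utilisation is used here so that a workload with occasional spikes of demand is not mistaken for an over-resourced one. A fuller version would also weigh memory, storage and data-transfer figures.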

Making optimisation easier

Three things can really help here. The first is having a modern, software-defined, composable hybrid-cloud architecture that allows you to treat on-premises, private cloud and public cloud infrastructure as one seamless environment, using the same management tools and consoles. With HPE GreenLake and HPE GreenLake Central, this approach makes it easier not only to deploy and scale up new cloud resources, but also to optimise resources for different workloads and move those workloads around where necessary.

The second is modernising and virtualising or containerising workloads, which helps simply by making them more portable. This isn’t always possible, or the right thing to do, with some legacy workloads, but making the investment and effort where it is possible can make operations more cost-efficient in the future.

The third is having tools and services built into the cloud platform that can reduce the time and effort involved in optimisation, using automation, analytics and – increasingly – AI. For example, HPE GreenLake Lighthouse uses HPE Ezmeral software to continuously and autonomously optimise cloud services and workloads. It does so by looking at the relevant usage and performance data, then composing resources to provide the best possible balance of performance and cost, depending on the needs of the business.

As time goes on, it’s likely that optimisation will involve more automation and more AI, meaning enterprise IT teams can spend less time tinkering and shifting workloads, and more time focusing on projects with more direct business value. Optimisation can be hard, but it doesn’t have to be – and with HPE GreenLake it’s getting much, much easier.

Find out more about HPE GreenLake and how it can help you optimise your workloads


ITPro is a global business technology website providing the latest news, analysis, and business insight for IT decision-makers. Whether it's cyber security, cloud computing, IT infrastructure, or business strategy, we aim to equip leaders with the data they need to make informed IT investments.

For regular updates delivered to your inbox and social feeds, be sure to sign up to our daily newsletter and follow on us LinkedIn and Twitter.

-

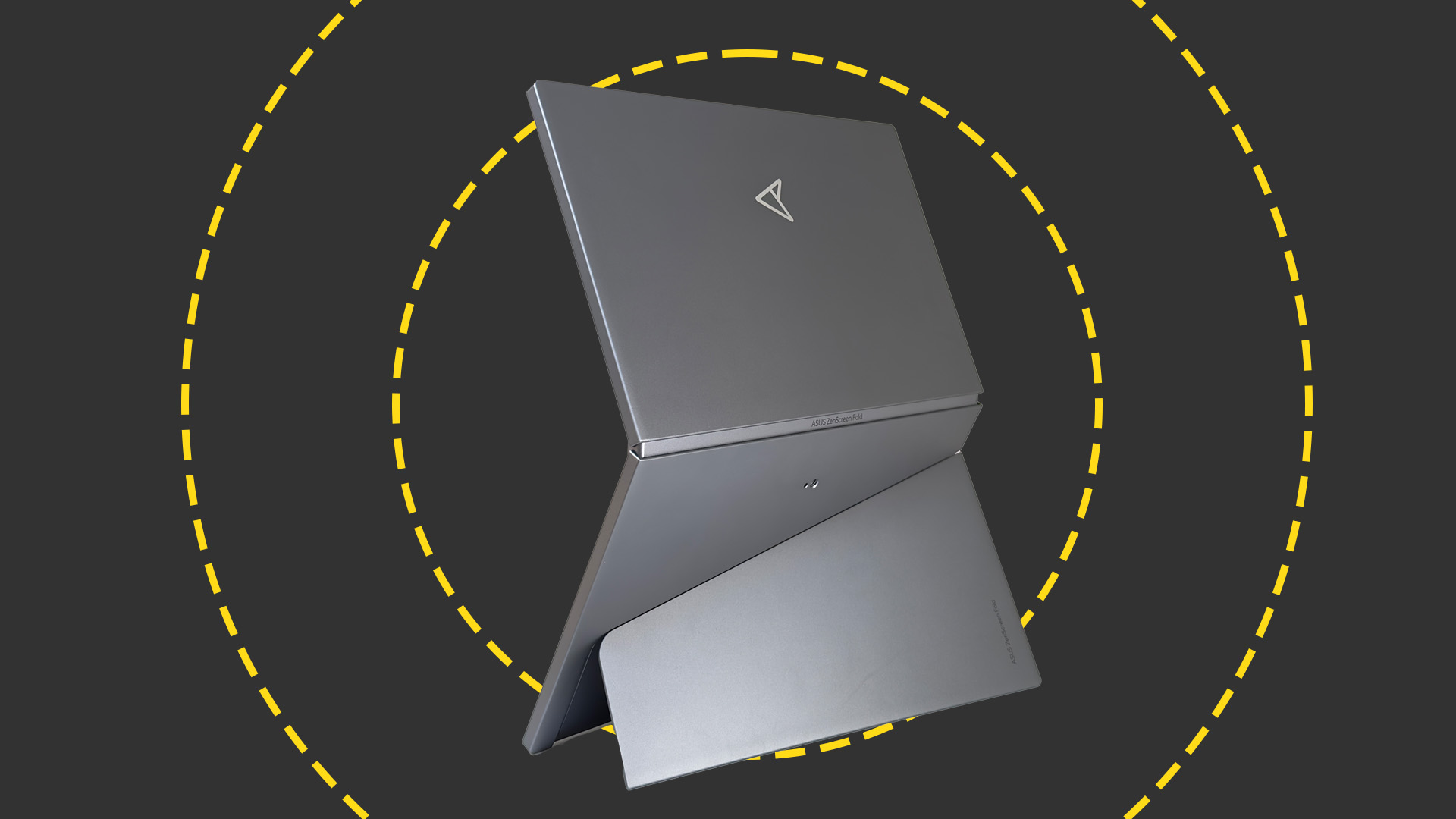

Asus ZenScreen Fold OLED MQ17QH review

Asus ZenScreen Fold OLED MQ17QH reviewReviews A stunning foldable 17.3in OLED display – but it's too expensive to be anything more than a thrilling tech demo

By Sasha Muller

-

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto Networks

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto NetworksCase study Adopting zero trust is a necessity when your own users are trying to launch cyber attacks

By Rory Bathgate

-

HPE announces VM Essentials – the VMWare competitor that isn’t

HPE announces VM Essentials – the VMWare competitor that isn’tNews Execs at HPE Discover acknowledge Broadcom issues, but deny they’re in competition

By Jane McCallion

-

Barclays extends HPE GreenLake contract amid “significant acceleration” of hybrid cloud strategy

Barclays extends HPE GreenLake contract amid “significant acceleration” of hybrid cloud strategyNews The pair will step up their collaboration to drive private cloud efficiencies using AI and other new technologies

By Daniel Todd

-

'Catastrophic' cloud outages are keeping IT leaders up at night – is it time for businesses to rethink dependence?

'Catastrophic' cloud outages are keeping IT leaders up at night – is it time for businesses to rethink dependence?News The costs associated with cloud outages are rising steadily, prompting a major rethink on cloud strategies at enterprises globally

By Solomon Klappholz

-

Scottish data center provider teams up with HPE to unveil National Cloud – a UK sovereign cloud service for large enterprises, tech startups, and public sector organizations

Scottish data center provider teams up with HPE to unveil National Cloud – a UK sovereign cloud service for large enterprises, tech startups, and public sector organizationsNews The DataVita National Cloud service is aimed at customers with complex workloads, addressing compliance and security concerns for public services and regulated industries

By Emma Woollacott

-

HPE eyes ‘major leap’ for GreenLake with Morpheus Data acquisition

HPE eyes ‘major leap’ for GreenLake with Morpheus Data acquisitionNews HPE will integrate Morpheus’ hybrid cloud management technology to ‘future-proof’ its GreenLake platform

By Daniel Todd

-

HPE Discover 2024 live: All the news and updates as they happened

HPE Discover 2024 live: All the news and updates as they happenedLive Blog HPE Discover 2024 is a wrap – here's everything we learned in Las Vegas this year

By Jane McCallion

-

Four things to look out for at HPE Discover 2024

Four things to look out for at HPE Discover 2024Analysis HPE Discover 2024 is taking place at the Venetian in Las Vegas from 18-19 June. Here are some ideas of what we can expect to see at the show

By Jane McCallion

-

HPE GreenLake gets a slew of new storage features

HPE GreenLake gets a slew of new storage featuresNews The additions to HPE GreenLake will help businesses simplify how they optimize storage, data, and workloads, the company suggests

By Jane McCallion