Welcome back to ITPro's live coverage of AWS re:Invent 2025. It's day two here in Las Vegas, and we've got another action-packed morning ahead of us.

Leading the opening keynote today is Swami Sivasubramanian, Vice President of Agentic AI at AWS. He'll be running us through all the developer-focused service and product announcements, and we're expecting another rapid-fire session, so buckle up.

CEO Matt Garman kicked things off yesterday with updates across all of AWS' key product lines, including Amazon S3 and Bedrock. Naturally, the key focus was new agentic AI capabilities for customers.

AWS customers will be able to get their hands on powerful new "frontier agents" aimed at streamlining software development practices, as well as the ability to build their own frontier AI models using Amazon Nova Forge.

IT modernization was another key talking point, again with new agentic AI capabilities for AWS Transform.

You can read more about all these announcements below.

• Now anyone can build their own frontier AI model

• Amazon S3 just got a big performance boost

• AWS targets IT modernization gains with new agentic AI features in Transform

• 'Frontier agents' are here, and AWS thinks they're going to transform software development

While most of the big announcements from AWS are yet to be revealed this morning, we have had some big updates rolled out for the company's Transform service. AWS first announced the IT modernization platform earlier this year, and it has now doubled down with an array of new agentic AI capabilities.

As part of the move, enterprises will be given access to powerful new AI features aimed at supercharging legacy code modernization spanning a range of programming languages, with additional tools for mainframe modernization also announced.

You can read more about the latest update to AWS Transform in our coverage below.

• https://www.itpro.com/technology/artificial-intelligence/aws-targets-it-modernization-gains-with-new-agentic-ai-features-in-transform

It wouldn't be a tech conference without some music absolutely blasting out at 7.30am. The keynote theater is filling up now with a torrent of people making their way through the Venetian Hotel.

We're just a couple of minutes out from Matt Garman's keynote now and the excitement is building. A packed conference hall here.

Matt Garman has taken to the stage, hailing the company's significant growth over the last year.

"Amazon Bedrock is now powering AI inference for more than 100,000 companies around the world," he says, giving a shout-out to the AgentCore service launched earlier this year. That's huge growth within just a few months, with thousands of companies flocking to the agentic AI service.

Infrastructure expansion continues at pace, Garman says. In the last year alone the company has added 3.8 gigawatts of data center capacity, more than any other company on earth.

Networking infrastructure is also expanding rapidly, he adds.

"None of what we do at AWS happens without builders, and specifically developers."

"AWS has always been passionate about developers," he adds. This is a key tenet of the company, and with developers fueling the company's sharp AI focus, they've never been more important.

Developers face significant challenges, however. They're spending too much time dealing with bottlenecks. Freeing up time for developers is the key to building successful products, Garman notes.

Naturally, AI is helping solve this problem, and with the advent of AI agents the potential here is huge.

"This change is going to have as much impact on your business as much as the internet or the cloud," he says.

So what do businesses need to drive agentic AI adoption? According to Garman, it takes a multi-pronged approach, starting at the foundational infrastructure level and running through data storage to the tools and solutions needed to build and deploy agents.

Of course, AWS has been focusing heavily on all these fronts.

"You have to have a highly scalable and secure cloud that deliver the absolute best performance for your workloads."

And with that we have our first big announcement here at re:Invent, the launch of AWS AI Factories.

“With this launch, we’re enabling customers to deploy dedicated AI infrastructure for AWS in their own data centers for exclusive use for them," Garman says.

“We give them access to leading AWS infrastructure and servers, including the very latest Trainium and UltraServers.”

We’ve moved on to Trainium now, with Garman touting its potential for inference - a big talking point we expected to see here at re:Invent, given the growing focus on Google’s TPUs and its recent deal with Anthropic.

“We’ve deployed over one million Trainium chips to date,” he says. And AWS isn’t stopping there. “We’re selling those as fast as we can make them.”

Anthropic is also using Trainium chips for AI training and inference through Project Rainier.

And we have another big product announcement today - Trainium3 UltraServers general availability.

"Trainium3 offer the industry's best price performance for large scale AI," Garman says. Big performance gains here.

That's 4.4x more compute and 3.9x more memory bandwidth than the previous generation. With UltraServers, customers have 144 Trainium3 chips at their disposal - that's 362 PFLOPS and 706 TB/s of bandwidth.
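For a rough sense of what each chip contributes, here is a back-of-the-envelope split of the UltraServer totals. It assumes the 362 PFLOPS and 706 TB/s figures are simple sums spread evenly across all 144 chips; the keynote quoted only system-level numbers, so treat these as approximations rather than official per-chip specs.

```latex
\frac{362\ \text{PFLOPS}}{144\ \text{chips}} \approx 2.51\ \text{PFLOPS per chip},
\qquad
\frac{706\ \text{TB/s}}{144\ \text{chips}} \approx 4.90\ \text{TB/s per chip}
```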

AWS is well along in Trainium4 development, Garman reveals. This upcoming range will offer 6x the performance, 4x the memory bandwidth, and 2x the memory capacity of Trainium3. Those are huge performance gains, and customers can expect to see them next year.

Infrastructure is only one part of the story, Garman says, as we move on to explore inference, another key focus for the company through its Bedrock service.

More than 100,000 customers are using Bedrock, according to Garman. But it’s the “volume of the usage that’s astounding”, he says.

“We now have more than 50 customers who’ve processed more than 1 trillion tokens through Bedrock. Incredible scale and momentum.”

So how does Bedrock work? It’s essentially a marketplace for in-house and third-party AI models, with customers able to pick and choose based on their own needs.

“We never believed there was going to be one model to rule them all,” Garman says, and Bedrock certainly shows that. It’s doubled the number of models hosted on the service in the last year, underlining the varied demand from enterprise customers.

And with that we’ve got another big announcement for Bedrock: four new open weight models, including Nvidia's Nemotron and Google's Gemma.

Mistral Large 3 and Ministral 3 are also coming to Bedrock, Garman reveals.

AWS has its own in-house models, the Nova range, which is getting a big update with the Nova 2 series.

“Nova has grown to be used by tens of thousands of customers today,” he says.

The series includes three models: Lite for cost-effective reasoning; Pro, the “most intelligent” model for complex workloads; and Sonic, a speech-to-speech model. Amazon Nova 2 Omni is also coming soon, Garman reveals. This is a multimodal option that excels in reasoning and image generation, perfect for marketers and creatives.

Nova 2 Pro is a key focus here, particularly given its use in underpinning agents, according to Garman. I get the feeling we’re building towards a big agentic AI announcement.

So, you’re an enterprise and want to build your own AI model - seems simple, right? It’s far from it.

Training from scratch is a time-consuming, expensive process and not a realistic expectation for most enterprises.

Building with open weight models helps take the edge off, but you can only go so far, Garman says.

“You just don’t have a great way to get a great frontier model,” he says. AWS wants to solve that.

Amazon Nova Forge is a new service that “introduces the concept of open training models”. Essentially, you can build your own frontier AI model by combining internal enterprise data with AWS's own Nova models, which pick up the slack.

“We’ve already been working with a few customers to test out Nova Forge,” Garman says. This includes Reddit, which has built its own frontier model using the service.

“We think this idea of open training models is going to completely transform what companies can invent with AI,” Garman adds.

We've officially moved on to agents, one of the "biggest opportunities that are going to change everyone's business," according to Garman. The company has already taken steps toward ramping up customer adoption of agentic AI.

The Bedrock AgentCore service, launched earlier this year, gives customers access to a range of custom-built agents as well as the ability to build and deploy their own.

"AgentCore is truly unique in what it enables for building of agents," he says, adding that flexibility and choice is a key focus of the service.

"You only have to use the building blocks you need. We don't force you as builders to go down a single fixed path."

AgentCore is a source of immense excitement at AWS, it seems.

"The momentum is really accelerating," Garman says, with enterprise customers flocking to the platform at a rapid pace since its launch earlier this year.

Security is a recurring talking point with agentic AI, Garman says. Identity-related security considerations and guardrails are causing headaches for security teams as agents weave their way through data sources behind the scenes.

"You can't with certainty control what your agent does and does not go," he says. Giving enterprises tools to set clear boundaries for agents is critical.

With that in mind, the company announces Policy in AgentCore, which allows users to set strict rules for agents to help them "stay in bounds".

Garman is running us through an example here involving an agent operating in a customer service capacity. Users can set a limit on the size of refunds issued by an agent. Hoping to chance your luck and get a refund on something over $1,000? That won't work.
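To make the refund example concrete, here is a minimal, hypothetical Python sketch of the kind of check a policy layer performs before an agent's action is allowed to run. It is not the AgentCore Policy API; `RefundRequest`, `enforce_refund_policy`, `issue_refund`, and the `MAX_REFUND_USD` constant are illustrative assumptions that mirror the $1,000 limit from the keynote.

```python
from dataclasses import dataclass

# Hypothetical limit mirroring the keynote example; not an AgentCore setting.
MAX_REFUND_USD = 1_000.00


@dataclass(frozen=True)
class RefundRequest:
    """A refund the agent wants to issue (hypothetical shape)."""
    order_id: str
    amount_usd: float


class PolicyViolation(Exception):
    """Raised when an agent action falls outside its allowed bounds."""


def enforce_refund_policy(request: RefundRequest, limit: float = MAX_REFUND_USD) -> RefundRequest:
    """Allow the refund only if it is positive and no larger than the limit."""
    if request.amount_usd <= 0:
        raise PolicyViolation(f"Refund for {request.order_id} must be positive")
    if request.amount_usd > limit:
        raise PolicyViolation(
            f"Refund of ${request.amount_usd:,.2f} for {request.order_id} "
            f"exceeds the ${limit:,.2f} limit"
        )
    return request


def issue_refund(request: RefundRequest) -> str:
    """Stand-in for the real refund tool; only reached if the policy check passes."""
    approved = enforce_refund_policy(request)
    return f"Refunded ${approved.amount_usd:,.2f} on {approved.order_id}"


if __name__ == "__main__":
    print(issue_refund(RefundRequest("A-100", 250.0)))
    try:
        issue_refund(RefundRequest("A-101", 1_500.0))
    except PolicyViolation as err:
        print(f"Blocked: {err}")
```

The key design point is that the check sits outside the agent's own reasoning: whatever the model decides, the tool call is rejected before any money moves.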

So now that you've secured your agents, how do you track performance? You've invested a lot of money in these shiny new tools, but are they actually delivering a return on investment or even helping customers?

Another new service unveiled today, AgentCore Evaluations, helps enterprises keep track of agent activities and performance based on real-world interactions with customers.

"Evaluations helps developers continuously inspect the quality of their agent based on real world behavior," Garman explains. "Evaluations can help you analyze agent behavior for specific criteria like correctness, helpfulness, harmfulness."

AWS has been quietly working away building its own range of agents in recent months, Garman says. Amazon Quick, for example, assists users in visualizing and analyzing data or automating workflows.

Employees across the company are using Amazon Quick and recording marked benefits so far.

"Today we already have hundreds of thousands of users inside the company," he says. "Teams are telling us they're completing tasks in one-tenth of the time it used to take."

Amazon is also using agents in customer service roles, with Amazon Connect already used by major customers such as Toyota, Capital One, and National Australia Bank.

"The Connect business passed the one billion annualized run rate mark," Garman says.

Moving onto developers now, Garman says agents have great potential here to help reduce workloads and speed up operations.

This is where platforms such as AWS Transform come in, helping modernize legacy and mainframe code. Thomson Reuters, for example, is modernizing more than 1.5 million lines of code a month during the process of moving from Windows to Linux.

Elsewhere, Kiro, AWS' AI coding tool, is helping drive developer productivity.

Kiro is one of a growing array of AI coding tools out there on the market in 2025, but Garman says the reception has been huge, with "hundreds of thousands of developers" using the platform globally since launch.

Kiro has been a huge success internally at Amazon so far. Last week, the company decided to make it the official development tool for teams across Amazon.

With agentic AI gains over the last year, there's huge potential for developers, Garman says, especially with "frontier agents".

These aren't your bog-standard AI agents. They're more intuitive, autonomous, and powerful, and for developers, the Kiro autonomous agent could be a game changer.

This AI agent essentially acts like "another member of your team", according to Garman, learning the team's processes and practices to continually improve.

"We think this will help you move much more quickly," Garman says. The agent should help teams ship more code, faster.

But there's more to software development than just writing code; there are key security considerations at play too. The new AWS Security Agent will help underpin safe, secure software development.

"This agent will help you build applications that are secure from the very beginning," Garman says. "It embeds security expertise upstream and enables you to secure your systems more often."

Security Agent also helps with penetration testing, turning what was traditionally a laborious process for development teams into an on-demand one. That's a big improvement for broader software security, and it speeds up the development lifecycle too.

Want to learn more about the new frontier agents from AWS? Check out our coverage and interview on the announcement below.

• AWS says ‘frontier agents’ are here – and they’re going to transform software development

With faster programming and improved security processes, there's only one part of the equation missing: software deployment. With this in mind, the new AWS DevOps Agent helps investigate incidents and works proactively to improve application deployments.

We've still got a bunch of launches expected here. Garman says he's going to rattle through the next few announcements: 25 in 10 minutes.

"Buckle up everybody," he says. We're going to cut to the chase here and distill things into simple bullet points covering key areas, starting with Amazon S3.

• S3's maximum object size is getting a 10x boost, from 5TB to 50TB

• That's not all though, Amazon S3 Batch Operations are also now 10x faster

• Elsewhere, Amazon S3 Tables are also getting new intelligent tiering to help with cost optimization

• General availability of S3 Vectors

Elsewhere, we have some big database announcements.

• Increased storage capacities for RDS for SQL Server and RDS for Oracle are coming

• Optimized CPUs for RDS for SQL Server

• A big new database savings plan for customers - savings of up to 35%, according to Garman

And with that, the keynote session is over. We'll be back shortly with a roundup of everything we learned in the opening session. Thanks for following, and remember to keep tabs on our socials and newsletter for all our content from AWS re:Invent across the week.

A steady stream of people entering the keynote theater now with just over 30 minutes until Swami kicks things off on day two.

Swami Sivasubramanian has taken to the stage now, asking the audience to think back to the first programme they built. Feeling rather left out at this point...

Good news though, Swami says "who can build is changing" as a result of generative AI and agentic AI. New AI-powered tools are lowering the bar for non-technical individuals.

So how is an agent different from a generative AI chatbot? Swami asks us to imagine that traffic to a website drops sharply over a matter of days.

Using a chatbot, the response would be rather basic, but with an agent you'd get a response with deeper context and a concrete plan to solve the problem.

"The chatbot tells you what to investigate, the agent investigates, diagnoses the problem, and initiates the solution," Swami says.

Agents are the next big thing in the tech industry, and as we've seen already this week, AWS appears all-in. But building agents is a tricky process fraught with challenges and potentially disastrous pitfalls.

AWS wants to make that easier for customers, simplifying the process and enabling developers to build their own agents.
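Swami's website-traffic example boils down to a difference in behaviour: a chatbot returns advice, while an agent runs checks and acts on what it finds. The plain-Python sketch below illustrates that investigate, diagnose, act loop under stated assumptions; `chatbot_reply`, `agent_run`, and the check and fix functions are hypothetical stubs, not AWS tooling.

```python
from typing import Callable


def chatbot_reply(problem: str) -> str:
    """A chatbot suggests what to investigate but takes no action itself."""
    return f"For '{problem}', you could check DNS, recent deploys, and analytics tagging."


def agent_run(
    checks: dict[str, Callable[[], bool]],
    fixes: dict[str, Callable[[], str]],
) -> list[str]:
    """Investigate (run every check), diagnose (flag failures), then act (apply a fix)."""
    log: list[str] = []
    for name, passed in checks.items():
        if passed():
            log.append(f"{name}: ok")
        elif name in fixes:
            # A known remediation exists, so the agent applies it directly.
            log.append(f"{name}: failed, {fixes[name]()}")
        else:
            # No safe automatic fix: hand the diagnosis to a person.
            log.append(f"{name}: failed, escalating to a human")
    return log


if __name__ == "__main__":
    # Stub tools standing in for real monitoring and deployment APIs (hypothetical).
    checks = {
        "dns_resolves": lambda: True,
        "analytics_tag_present": lambda: False,
        "latest_deploy_healthy": lambda: True,
    }
    fixes = {"analytics_tag_present": lambda: "restored tag from previous release"}
    print(chatbot_reply("site traffic dropped sharply this week"))
    for line in agent_run(checks, fixes):
        print(line)
```

Escalating when no fix is registered is a deliberate choice here: it keeps the agent from improvising actions it hasn't been given.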

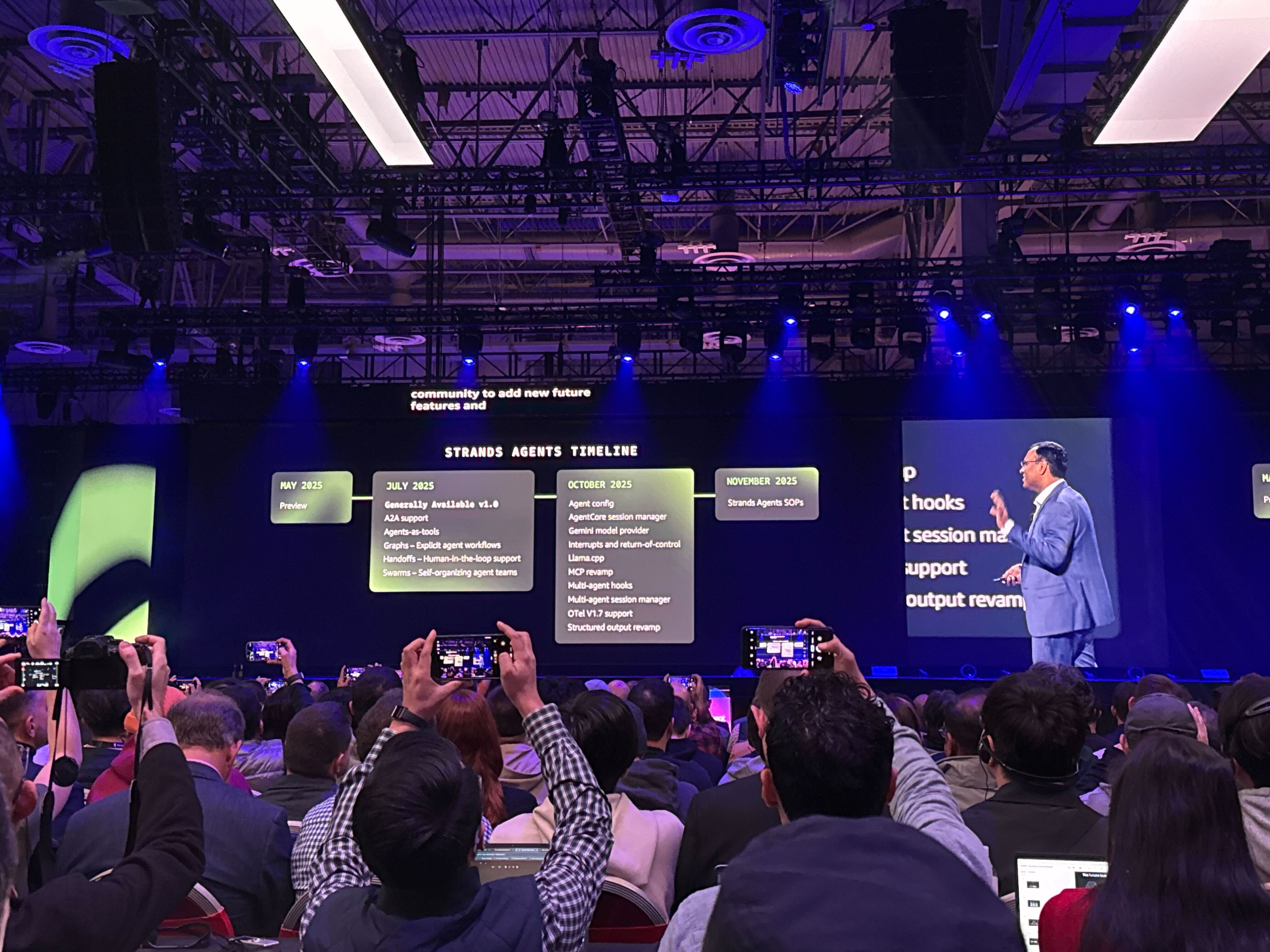

Strands SDK, for example, is helping developers build agents more quickly. The SDK was made generally available in July this year and has already seen strong uptake.

Now AWS is doubling down, offering support for TypeScript, one of the world's most popular programming languages. Elsewhere, Strands SDK will offer support for running agents at the edge - big potential in robotics here, Swami says.

Complexity is the biggest issue in building agents, Swami says. It's a long, difficult process in many cases. Ultimately, this slows down innovation.

Bedrock AgentCore seeks to address this, he adds, by allowing customers to build custom agents. The service has proved highly popular since its launch earlier this year and already boasts thousands of customers, Swami says.

"People love it because it works with any agentic framework and any model, giving you the freedom to use the tools that work best on your use case," he tells attendees.

"It's modular, so that you can mix and match using just the pieces you need for your solution," Swami adds. "AgentCore does the heavy work so you can focus on what matters most, creating these breakthrough experiences that solve real-world business problems."

And with that, we have two new capabilities for AgentCore. The first is AgentCore Policy, which helps enterprises set more robust controls for agent interactions and behaviour.

Elsewhere, AgentCore Evaluations will help users keep tabs on how agents are performing, allowing them to fine-tune and tweak based on end-user feedback.

Another quick-fire announcement here with the launch of episodic memory capabilities for agents through AgentCore.

This, Swami says, will essentially give agents longer-term memory capabilities, helping them to remember and learn from past experiences.

Swami gives the example of air travel as an area where this capability could help. Travelling solo? Get an agent to book a flight with a fairly quick turnaround at the airport. But if you're travelling with family six months later, that same booking is going to be a disaster. Further down the line, using episodic memory, the agent would realize this and compensate.

Call me cynical, but this is just common sense. Why do you need an agent to remember a previous airport-related farce?

A tangible example of this being used in a business context would be great.
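The mechanism behind Swami's travel example can be sketched in plain Python: store past episodes with their outcomes, then consult similar failures before acting again. This is a hypothetical illustration of the general idea, not AgentCore's memory API; `Episode`, `EpisodicMemory`, `layover_minutes`, the "party" context key, and the 45-minute default are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    """One past task, the action taken, and how it turned out (hypothetical schema)."""
    context: dict
    action: str
    outcome: str  # "ok" or a short failure label


@dataclass
class EpisodicMemory:
    """An append-only log of past episodes the agent can consult before acting."""
    episodes: list[Episode] = field(default_factory=list)

    def record(self, episode: Episode) -> None:
        self.episodes.append(episode)

    def failures_like(self, context: dict, keys: tuple[str, ...]) -> list[Episode]:
        """Return past failures whose context matches the new one on every key."""
        return [
            e for e in self.episodes
            if e.outcome != "ok" and all(e.context.get(k) == context.get(k) for k in keys)
        ]


def layover_minutes(memory: EpisodicMemory, context: dict) -> int:
    """Choose a connection buffer, widening it if similar trips went badly before."""
    base = 45  # hypothetical default for a quick airport turnaround
    if memory.failures_like(context, keys=("party",)):
        return base * 2  # learned from a past episode: this kind of trip needs slack
    return base


if __name__ == "__main__":
    memory = EpisodicMemory()
    memory.record(Episode({"party": "solo"}, "book 45-minute layover", "ok"))
    memory.record(Episode({"party": "family"}, "book 45-minute layover", "missed_connection"))
    print(layover_minutes(memory, {"party": "family"}))  # 90
    print(layover_minutes(memory, {"party": "solo"}))  # 45
```

Matching only on failures keeps the lookup cheap: successful solo trips don't change behaviour, but a single bad family trip does.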

So far we've discussed how AWS is helping customers to build agents. Now we're moving on to look at making them more efficient.

Most agents are focused on basic functions right now, working away in the background, analyzing search results, writing code, or solving basic problems.

Scaling the capability of agents is the next step, giving them the ability to tackle more complex problems.

"Think of it as teaching your AI agent to be a specialist instead of a generalist. Like turning a family doctor into a cardiologist who is laser-focused on exactly what you need," he says.

Starting with pre-trained models makes sense here, and Swami says this is how most teams begin scaling agent capabilities. These are trained on curated agent-specific datasets.

"This creates permanent behaviour changes," he explains. "They don't require lengthy prompts, can dramatically improve performance on specific tasks."

But there's an issue here. Sometimes models can become too narrowly focused on specific tasks due to overtraining.

So what's the solution? Quality over quantity, essentially. Reinforcement learning, Swami says, helps build stronger, more intuitive agents.

"When an agent is troubleshooting a complex issue, you don't just want a final answer. You want the logical diagnostic steps," he says.

This is easier said than done, though. It's a costly process that can take six to twelve months and requires deep in-house technical capabilities.

AWS has the solution here, who would've thought? Reinforcement fine-tuning is now available in Bedrock, Swami reveals. This will help markedly improve model accuracy and customization of AI agents.

All told, Swami says this delivers 66% accuracy gains. "That is how powerful these techniques are," he says.
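Reinforcement learning depends on a reward signal, and one common way to capture "you want the logical diagnostic steps" is to credit intermediate steps as well as the final outcome. The sketch below is a hypothetical grader written in plain Python for illustration; it is not how Bedrock's reinforcement fine-tuning is configured, and `trajectory_reward`, the step names, and the 50/50 weighting are assumptions.

```python
def trajectory_reward(
    steps: list[str],
    final_answer: str,
    expected_steps: list[str],
    expected_answer: str,
    step_weight: float = 0.5,
) -> float:
    """Score an agent run on its diagnostic steps as well as its final answer.

    Each expected step found anywhere in the agent's steps earns equal credit,
    so a run that guesses the right answer without the reasoning scores lower
    than one that shows its work.
    """
    if expected_steps:
        found = sum(1 for step in expected_steps if step in steps)
        step_score = found / len(expected_steps)
    else:
        step_score = 1.0
    answer_score = 1.0 if final_answer == expected_answer else 0.0
    return step_weight * step_score + (1 - step_weight) * answer_score


if __name__ == "__main__":
    expected = ["check_traffic_logs", "check_recent_deploys", "check_dns"]
    full = trajectory_reward(expected, "dns_misconfigured", expected, "dns_misconfigured")
    guess = trajectory_reward([], "dns_misconfigured", expected, "dns_misconfigured")
    print(full, guess)  # 1.0 0.5
```

Raising `step_weight` pushes training further toward rewarding the reasoning process; lowering it rewards outcomes alone.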

Drilling down into deeper levels of customization, Amazon SageMaker AI is a big focus for AWS and customers. A new model customization feature, launching in preview, allows customers to tweak and fine-tune in-house or third-party models.

"With this release, you can customize popular models such as Amazon Nova, Llama, DeepSeek, and deploy them in just a few steps," he explains.

"This comes with two experiences, and you can choose the right approach based on your comfort level - a self-guided approach for those who like to be in the driver's seat, and an agent-driven experience that uses an AI expert for folks who like to turn on autopilot in their cars."

Tweaking and fine-tuning AI models using AI agents. We're heading down the rabbit hole here.

We're joined now by Byron Cook, VP and distinguished scientist for automated reasoning at AWS, to discuss "neurosymbolic AI" and how the hyperscaler is working to make AI agents more trustworthy.

LLMs, as we know, can be tricked by bad actors, and that has downstream implications for agents. As a result, we introduce guardrails and essentially keep agents on a short leash.

But that limits their ability to deliver positive gains for users.

AWS uses automated reasoning to verify the outputs of the underlying LLM, which often provide instructions for the agents.

Automated reasoning is being used across a range of areas at the company, including storage and virtualization. Essentially critical areas where "failure is unacceptable".

We heard all about the new frontier agents AWS unveiled during yesterday's opening keynote - powerful new tools for developers spanning code generation, DevOps, and cybersecurity.

These will essentially act as teammates in the future, Sivasubramanian says. It's clear the company is pinning its hopes on a big push into the AI coding space with these new offerings.

They face stiff competition, though. Anthropic, OpenAI, Microsoft, GitHub, Google, and the various 'vibe coding' startups taking the industry by storm are all fighting for the same ground.

And that's a wrap on the day-two keynote. We've drilled down into a lot of the developer-focused product launches today, and we'll be sure to hear more as the week continues - tomorrow's keynote session is focusing specifically on infrastructure, a huge talking point in the age of AI.

We'll be back shortly with a comprehensive roundup of all the big talking points from both keynote sessions so far this week, so stay tuned!