Well, it's been a very busy week – as the blog below proves – but Google Cloud Next 2025 is officially at an end. Throughout the week Google Cloud unveiled its new TPU, new AI agents and the networking needed to run them, a new protocol for AI interoperability, and much more. Read below and check the ITPro website to catch up on all this, as it happened.

To catch up on some of the news from the past week, check out our ITPro Podcast episode on the conference here.

And with that, we've finished the developer keynote! You can refer back to the rest of this blog for all the latest and stay tuned on the ITPro site for more coverage from Google Cloud Next 2025.

Within the Kanban Board, Densmore can ask Code Assist to add code for specific features. If another team member has changed code and broken something – in this case, Densmore uses Seroter as a negative example – Code Assist can flag the changes to make a fix.

When a developer notices a bug, they can tag Code Assist directly in their messaging app, or add a comment within their bug tracker.

Densmore shows us the Gemini Code Assist Kanban Board, which includes something Google Cloud calls a 'backpack' – which contains all context for code, security policies, formats, and even previous feedback.

Rounding us out, we're welcoming Scott Densmore, senior director, Engineering, Code Assist at Google Cloud, to give us a sneak peek at Google Cloud's software engineering agent.

To share the visualization with colleagues, Nelson can press a 'create data app' button to quickly generate a link to the interactive forecast.

The agent uses a new foundation model called TimesFM, which has been built specifically for forecasting, to produce a table with product IDs and dates, as well as a chart with sales over time.

Within the Colab notebook, Nelson can ask the Gemini data science agent to generate a forecast based on his data.

Here to explain is Jeff Nelson, developer advocate at Google Cloud. Nelson starts with Colab, where we'll be shown a demo of Google Cloud's new data science agent in action.

We're moving on to learning about data agents, Google Cloud's tools for easily analyzing data.

Gemini can see and make sense of information that isn't apparent to the human eye, says Wong, showing a video of her basketball throw as an example. She adds that a team of developers recently produced an AI commentator for sport and that X Games is interested in using AI for judging.

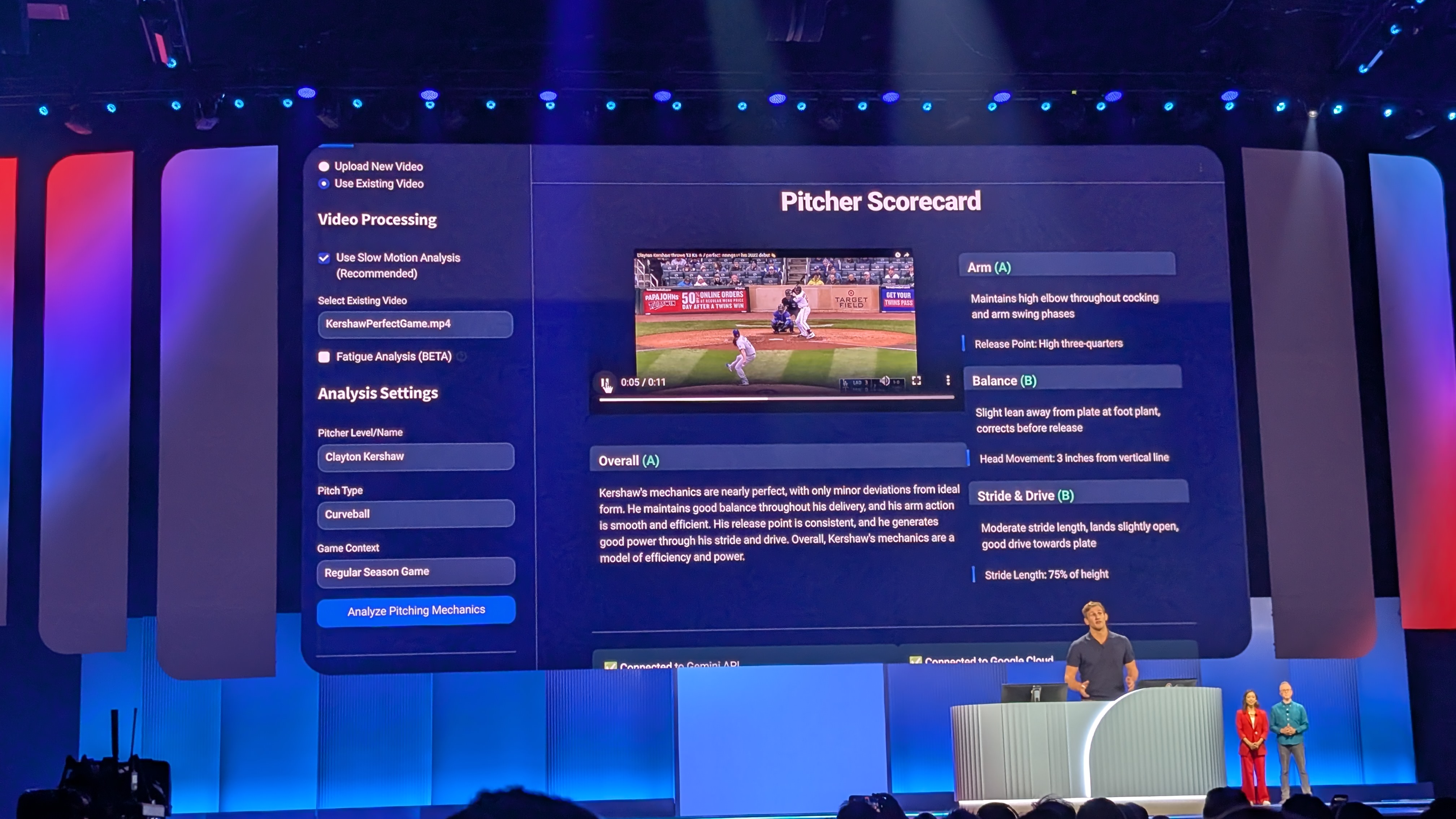

DiBattista notes that Gemini is capable of analyzing multiple frames at once to evaluate motion, rather than just snapshots. He stresses that he built the tool in just one week, with no need to build a custom model or handle complex data sets.

To demonstrate the amateur pitch analysis, we're shown a clip of Seroter throwing a baseball outside Google HQ. The system grades him a 'C', with breakdowns of his arm, balance, and stride & drive.

Using the Gemini API, DiBattista created a system that can analyze video of a pitch and produce a text analysis of it – for both pros and amateurs.

The winner of the Cloud X MLB (TM) Hackathon was Jake DiBattista, who's here now to tell us all about his project – measuring pitches using MLB high-speed video.

What does all this look like in practice? Wong and Seroter say MLB is using Gemini to measure its 25 million data points per game. Google Cloud ran a hackathon to see what innovative use cases people could come up with for Gemini in sports.

"We're striving to meet developers where you are," says Cabrera. "Your team can build great apps using Gemini as your IDE of choice, or you can use Vertex AI Model Garden to call your model of choice. No matter what you use, we're excited to see what you come up with."

Within Model Garden, developers can put questions such as "what capabilities can you offer for designing renovation subjects?" to different models and compare the responses to evaluate which one best suits their purpose.

Cabrera says that while Gemini is her favorite model, Model Garden on Vertex AI offers a range of models from Meta, Mistral, and Anthropic, among others.

We're really cooking now, as Cabrera moves over to Gemini Copilot to produce unit tests by entering a prompt in Spanish – which it quickly does.

Cabrera wants to make an agent to help with budgets, powered by Gemini 2.5. Moving over to Cursor, Cabrera adds input validation to the agent.

For this demo, Cabrera is using the Windsurf IDE, which is intended to support devs with 'vibe coding'.

Debi Cabrera, senior developer advocate at Google Cloud, is now onstage to show us how developers can use Gemini in their IDE of choice, and then bring their model of choice to Google Cloud for their apps.

Google Cloud is at pains to stress that it does not require devs to use Gemini – with Vertex AI Model Garden, there's a wide range of models to choose from.

Seroter says that Google Cloud is helping developers with its new Agent2Agent protocol, which not only connects agents together but also helps developers discover new agents to connect with in the first place.
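Agent discovery of this kind can be pictured with a small sketch. The published A2A specification has agents advertise themselves through a JSON "Agent Card" listing details such as a name, an endpoint URL, and skills; the simplified field names, the example.com URLs, and the `discover` helper below are hypothetical, and the cards are hard-coded rather than fetched over the network.

```python
import json

# Hypothetical, simplified Agent Card-style documents. Real A2A agents
# publish cards over HTTP; these are hard-coded strings for illustration.
CARDS = [
    json.dumps({
        "name": "Invoice Agent",
        "url": "https://agents.example.com/invoice",
        "skills": [{"id": "create_invoice", "tags": ["billing", "invoices"]}],
    }),
    json.dumps({
        "name": "Shipping Agent",
        "url": "https://agents.example.com/shipping",
        "skills": [{"id": "track_parcel", "tags": ["logistics", "tracking"]}],
    }),
]


def discover(cards: list, tag: str) -> list:
    """Return (name, url) for every agent advertising a skill tagged `tag`."""
    matches = []
    for raw in cards:
        card = json.loads(raw)
        skills = card.get("skills", [])
        if any(tag in skill.get("tags", []) for skill in skills):
            matches.append((card["name"], card["url"]))
    return matches


if __name__ == "__main__":
    print(discover(CARDS, "tracking"))
```

Here `discover(CARDS, "tracking")` picks out the Shipping Agent's name and URL; a real client would then contact that endpoint using the protocol's own message format.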

Within the tool, Gemini suggests a fix to the problem and Sukumaran can immediately deploy it without having to affect anyone’s access to the agent.

To fix this issue, Sukumaran shows us Cloud Assist Investigations, a new tool for diagnosing problems in infrastructure and massively cutting down on debugging time.

Within Agentspace, Sukumaran asks for information related to ordering, expecting a relevant sub-agent to provide the right response. But instead, we're presented with an error message.

Once she's deployed this agent system, she'll be able to share it within Agentspace, where she can interact with the agent.

Sukumaran creates a multi-agent system, right here in the keynote. This means creating a ‘root agent’ with a number of sub-agents, which will work together to automate a task.
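The root-agent pattern can be sketched in plain Python. This is not the Agent Development Kit's API: the `RootAgent` class and the two sub-agents are hypothetical, and simple keyword matching stands in for the model that would normally decide which sub-agent to call.

```python
import re
from typing import Callable

SubAgent = Callable[[str], str]


def ordering_agent(request: str) -> str:
    # Hypothetical sub-agent for order questions.
    return f"[ordering] handling: {request}"


def inventory_agent(request: str) -> str:
    # Hypothetical sub-agent for stock questions.
    return f"[inventory] handling: {request}"


class RootAgent:
    """Delegates each request to the first sub-agent whose keywords match.

    In a real system the model would choose the sub-agent; keyword
    matching stands in for that decision here.
    """

    def __init__(self) -> None:
        self.sub_agents: list = []  # (keyword set, sub-agent) pairs

    def add_sub_agent(self, keywords: set, agent: SubAgent) -> None:
        self.sub_agents.append((keywords, agent))

    def handle(self, request: str) -> str:
        # Lower-case and strip punctuation so "order?" matches "order".
        words = set(re.findall(r"[a-z]+", request.lower()))
        for keywords, agent in self.sub_agents:
            if words & keywords:
                return agent(request)
        # No sub-agent matched: report it rather than guessing.
        return "error: no sub-agent can handle this request"


if __name__ == "__main__":
    root = RootAgent()
    root.add_sub_agent({"order", "ordering", "delivery"}, ordering_agent)
    root.add_sub_agent({"stock", "inventory"}, inventory_agent)
    print(root.handle("Where is my order?"))
```

Returning an explicit error when no sub-agent matches, rather than guessing, keeps routing failures visible to whoever is debugging the system.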

Abirami Sukumaran, developer advocate at Google Cloud, is here to show us how to build agents within Vertex AI using ADK with Gemini.

We’re now learning about Vertex AI Agent Engine, which has recently been made generally available and helps enterprises deploy agents with enterprise-grade security. We’ll also hear about Agentspace, Google Cloud’s new platform where users can build no-code agents and developers can share the agents they’ve built with the rest of their company.

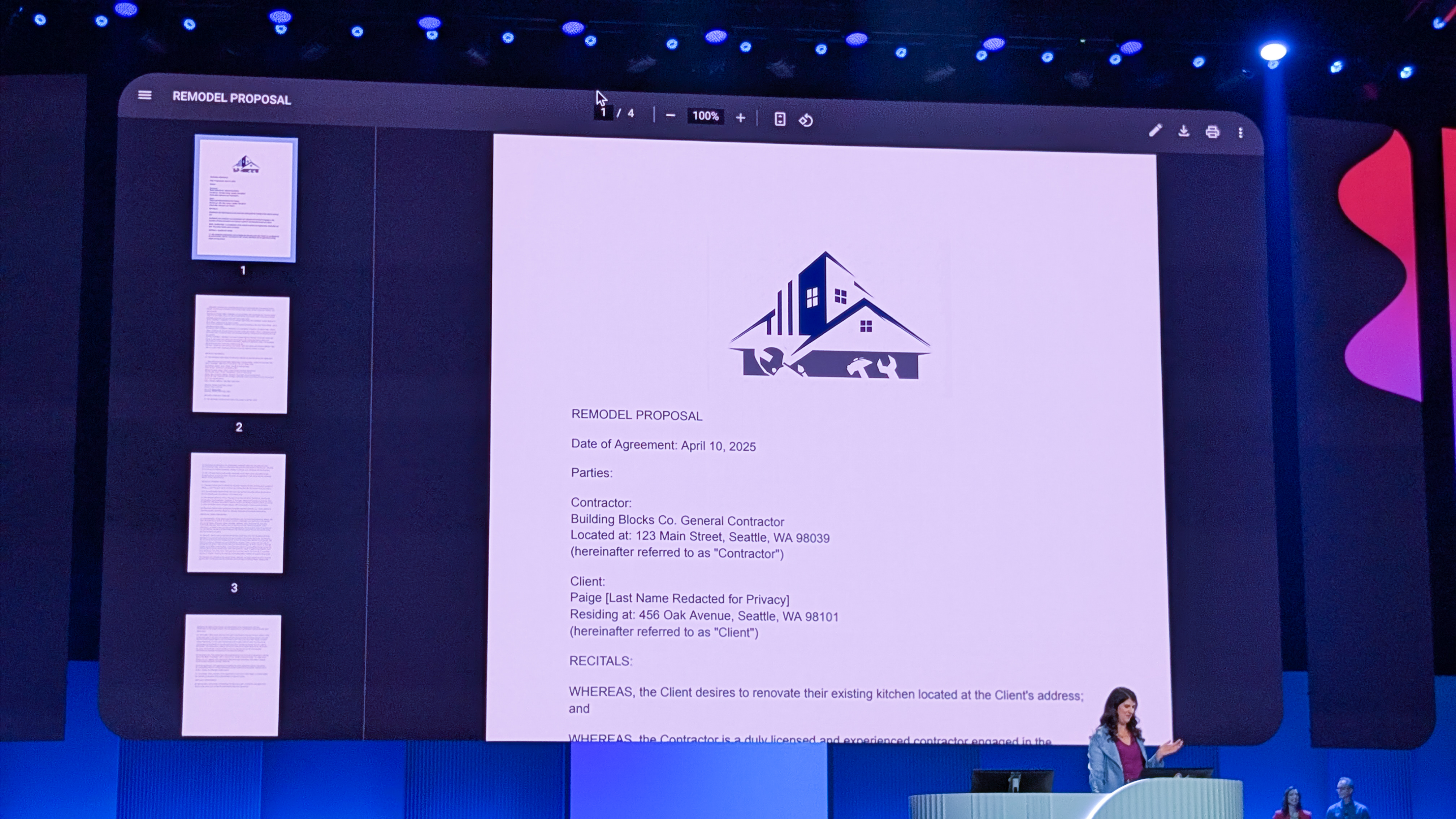

The moment of truth comes – and the agent produces a detailed PDF proposal that Hinkelmann can access right within the prompt window.

The next step is to select the AI model Hinkelmann wants for the agent. Because ADK is model agnostic, Hinkelmann says she could use Llama 4 or another model – but in this case will use Gemini 2.5.

Performing RAG requires accessing information from outside the agent, which is where the Model Context Protocol (MCP) comes in handy, Hinkelmann says.

Next, Hinkelmann adds an 'analyze building codes' tool, which allows the agent to use RAG to check a private dataset for local buildings.

Hinkelmann says agents need instructions, tools, and a model. So to start, she uses Gemini in Vertex AI to create a custom instruction: in this case, taking a customer request and creating a PDF proposal.

Here to demo this is Fran Hinkelmann, developer relations engineering manager at Google Cloud.

Wong and Seroter say Vertex AI’s new Agent Development Kit can create an agent that can verify building codes and go deeper into meeting Bailey’s requirements.

Next up, Seroter wants to know what an agent can do.

"An agent is a service that talks to an AI model to perform a goal-based operation using the tools and context it has," Wong explains.
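Wong's definition, together with Hinkelmann's recipe of instructions, tools, and a model, can be sketched as a minimal agent loop. This is an illustration under stated assumptions, not ADK code: `toy_model` is a hypothetical rule-based stand-in for a real model call, and `check_building_codes` is a hypothetical placeholder tool.

```python
from dataclasses import dataclass, field
from typing import Callable

# Takes (instruction, goal, context) and returns (next_action, argument);
# the action "finish" ends the run.
ModelFn = Callable[[str, str, dict], tuple]


@dataclass
class Agent:
    instruction: str                              # what the agent is for
    tools: dict                                   # tool name -> callable
    model: ModelFn                                # picks the next step
    context: dict = field(default_factory=dict)   # results gathered so far

    def run(self, goal: str, max_steps: int = 5) -> str:
        for _ in range(max_steps):
            action, arg = self.model(self.instruction, goal, self.context)
            if action == "finish":
                return arg
            # Call the chosen tool and keep its output as context
            # for the model's next decision.
            self.context[action] = self.tools[action](arg)
        raise RuntimeError("goal not reached within max_steps")


def check_building_codes(request: str) -> str:
    # Hypothetical placeholder; a real tool might run RAG over a private dataset.
    return f"codes checked for: {request}"


def toy_model(instruction: str, goal: str, context: dict) -> tuple:
    # Hypothetical stand-in for an LLM: check codes once, then finish.
    if "check_building_codes" not in context:
        return ("check_building_codes", goal)
    return ("finish", f"Proposal for {goal!r}: {context['check_building_codes']}")


if __name__ == "__main__":
    agent = Agent(
        instruction="Turn customer requests into renovation proposals",
        tools={"check_building_codes": check_building_codes},
        model=toy_model,
    )
    print(agent.run("remodel a 1970s kitchen"))
```

The `max_steps` cap is a simple guard against a model that never decides it has reached the goal.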

Wong asks Bailey to go into more detail on the benefits of long context windows.

"This example is some things like photos, images, and a few sketches," Bailey says. "But with long context, you're able to send full videos to use for your projects."

Bailey asks the model to add two globe pendant lights into the image and within seconds, they've been added.

In another tab, we're shown Bailey has used Gemini to generate a prompt for its image generation capabilities and then used this to produce a concept image for the kitchen. It can produce the image, which is photorealistic, in just a few seconds.

Straight away, the model's 'thinking' box shows the model has considered the floor plan (based on a sketched floor plan Bailey provided) and local regulations and building codes.

To start, the pair ask Gemini 2.0 Flash to generate a very detailed plan for remodeling a 1970s-style kitchen. Bailey says the model has a 65,000-token output window, which is great for generating long plans.

The two are going to make an AI app to help remodel Bailey's kitchen, taking into account all the details and laws around doing that.

Gemini is key here, of course. Here to show us how is Paige Bailey, AI developer experience engineer at Google DeepMind, and Logan Kilpatrick, senior product manager at Google DeepMind.

Wong says today's keynote is all about how Google Cloud can help developers build software, from getting started to scaling up, and will offer a sneak peek at the future of development in Google Cloud.

Here to tell us more are Stephanie Wong, head of developer skills & community at Google Cloud, and Richard Seroter, chief evangelist at Google Cloud.

Finally, Gemini underpins all these innovations with its large context window, multimodality, and advanced reasoning.

Next, Google Cloud is helping developers be as productive as possible via Gemini Code Assist and Gemini Cloud Assist.

Here to welcome us to the developer keynote is Brad Calder, VP & GM at Google Cloud. He says Google Cloud is innovating in three key areas. First up, helping companies build agents, which can collaborate to achieve goals on behalf of users.

To count us down for the final 30 seconds, we're being shown numbers generated by Veo 2, including some truly abstract clips such as a giant 1 blasting off to a planet shaped like a 0.

And we're off! As with yesterday's keynote, we're starting with a sizzle reel – this time all about developers, skills, AI, and production.

We're now sat in the arena and once again listening to the AI-sampled music of The Meeting Tree onstage, accompanied by abstract visuals generated with Google DeepMind's video generation model Veo 2.

There are just 30 minutes to go until the developer keynote. Presented under the subtitle 'You can just build things', we're expecting this session to be all about the ease of deploying AI with Google Cloud – expect to hear lots about Agentspace, automation in Workspace powered by Google Workspace Flows, and Google Cloud's new infrastructure for training custom AI models.

With the press conference done, all eyes are now on the developer keynote – we'll be seated and ready to bring you images and updates as they come.

Finally, he adds that Google Cloud has European partnerships with firms such as TIM and Thales, to operate in a supervisory role and provide trust and verification in Europe.

He adds that for customers who are worried about long-term survivability, Google Distributed Cloud runs fully detached with no connection to the internet.

Kurian says that technologically, Google Cloud can prevent this from impacting its customers, because the firm has no access to its customers’ environments and no ability to reach their encryption keys.

Now another question on tariffs from Techzine – specifically on the potential risk that American companies could be ordered to stop delivering services to European customers.

In response, Kurian says Agentspace arose from an observation that organizations struggle with information searches, particularly across different apps. He adds that the service already has 100 connectors live and 300 connectors in development so people can adopt it without ripping out and replacing anything.

We’ve just had a question from Diginomica on how easy it will be for companies to adopt Agentspace if they have already invested heavily in other AI ecosystems, such as Microsoft’s or Oracle’s.

A question on tariffs, now – which have been a repeated talking point throughout the event. Kurian is asked whether Google Cloud is prepared for their impact and in response says the “tariff discussion is an extremely dynamic one,” and that Google has been through many cycles like this including the 2008 financial crisis and the pandemic.

Kurian also said Google is working hard to identify opportunities for renewable energy to power data centers and is looking at nuclear as a source of power for its sites.

“We have done many things over the years to improve the infrastructure – for example, we introduced water cooling many years ago for our processors," he says.

Asked how Google Cloud is meeting the increased energy demand from data centers for generative AI, Kurian says the cost of inference has fallen 20-fold.

He adds that there’s a competitive advantage to adopting AI, and that developments over the past few months have shifted European attitudes toward the technology.

In response, Brady says that Google Cloud is helping EMEA customers with security and flexibility, which are very important in the region, particularly when it comes to not being locked into long-term contracts.

Now a question on pressure facing the EMEA region from our sister publication TechRadar Pro.

The first question is on the challenge of AI adoption in certain countries, to which Kurian says Google Cloud is working hard on its sovereign cloud capabilities. He also stresses the importance of letting companies use Google’s global technology infrastructure while still meeting their security requirements.

Kurian begins by highlighting how hard Google Cloud is working to expand across the globe and how it now operates in 42 regions.

Before the developer keynote later on, we're getting to hear from Thomas Kurian, CEO at Google Cloud, Tara Brady, president EMEA at Google Cloud, and Eduardo Lopez, president Latin America at Google Cloud in a press conference.

It's coming up on 8:00 in Las Vegas and we're back to report on day two of Google Cloud Next 2025. With the developer keynote due to kick off this afternoon, there's sure to be more detail on all the announcements we've heard about so far and more hands-on demos of some of Google Cloud's newest tools.

If you've ever wondered what it's like on the ground at an event such as Google Cloud Next 2025, this photo gives a good impression. You can see it's incredibly busy here, with attendees in the thousands entering and exiting each keynote. Google Cloud has a huge range of partners and customers, many of whom will be looking to reaffirm or expand their business relationship to make the most of AI, so the event is thick with meetings, roundtables, and live demos in the expo hall.

"What an amazing time for all of us to experience and work with these technology advances," Kurian concludes.

"We at Google Cloud are committed to helping each of you in effect by delivering the leading enterprise-ready, AI-optimized platform with the best infrastructure, leading models, tools, and agents. By offering an open multi-cloud platform and building for interoperability so we can speed up time to value from your AI tests, we are honored to be building this new way to cloud with you."

And with that, the first keynote of the event comes to a close. We'll keep bringing you all the updates as they happen live from Las Vegas.

Kurian says Google Cloud is working hard on making its innovations easy to adopt in four key ways:

- Better cross-cloud networking.

- Hands-on work with ISVs to improve Google Cloud integration.

- Working with service partners on agent rollouts.

- Offering more sovereign cloud compatibility via Google Cloud.

We're rounding out now and Kurian is back onstage to bring the keynote to a close.

He cites Google's planned acquisition of Wiz as evidence of how seriously it takes cybersecurity.

In a demo, Payal Chakravarty shows us how Google Unified Security can detect vulnerabilities in code and extensions used within an enterprise's environment.

The agentic, autonomous features of the new platform can automatically detect when an AI extension has put sensitive data at risk and flag it to a human in the company's security team. In addition to providing response advice, it can proactively quarantine the suspicious extension.

Continuing at pace, we're now welcoming Sandra Joyce, VP, Google Threat Intelligence, to hear about the security announcements Google Cloud is making today.

Chief among these announcements is Google Unified Security, a new converged security platform for better visibility and faster threat detection.

Read our detailed write-up on Google Unified Security here.

We're moving on to Gemini Code Assist, Google Cloud's AI pair programmer, which Calder says is already being used by a wide range of enterprises.

Google Cloud is today announcing Gemini Code Assist agents, which can help developers to quickly complete tasks such as the generation of software and documentation, as well as AI testing and code migration.

Via the new Gemini Code Assist Kanban board, developers can interact with agents to get insight into why they're making the decisions they are and see which tasks they're still yet to complete.

Calder says that Google Cloud is announcing new agents for every role in the data team.

Data engineering agents, embedded within BigQuery pipelines, can perform data preparation and automate metadata generation.

Meanwhile, data science agents can intelligently select models, flag data anomalies, and clean data to reduce the time teams have to spend manually validating all data.

Finally, Looker conversational analytics allows users to explore data using natural language. This will be made available via a new conversational analytics API, now in preview, so data teams can embed this easy question-and-answer layer into their existing applications.

Imagen 3 and Veo 2 models are coming to Adobe Express, we're told, as the firm pushes forward on AI-generated content.

Moving on to data agents, we're now welcoming Brad Calder, VP & GM, Google Cloud, onstage.

He tees up a video showing that Mattel is using Google Cloud's AI to reduce the need for its teams to manually analyze customer sentiment.

"We can instantly identify key issues and trends improving growth, efficiency, and innovation," says Ynon Kreiz, CEO at Mattel.

"For example, we improved the ride mechanism in the Barbie Dreamhouse elevator."

We're back to creative agents – it seems creative output is a major focus for Google Cloud at this year's event. We're being told about Wizard of Oz at Sphere again – find the details for that at the start of this live blog.

O'Malley is back onstage to discuss purpose-built agents.

For example, Mercedes-Benz is using AI for conversational search and route mapping in a new line of its cars.

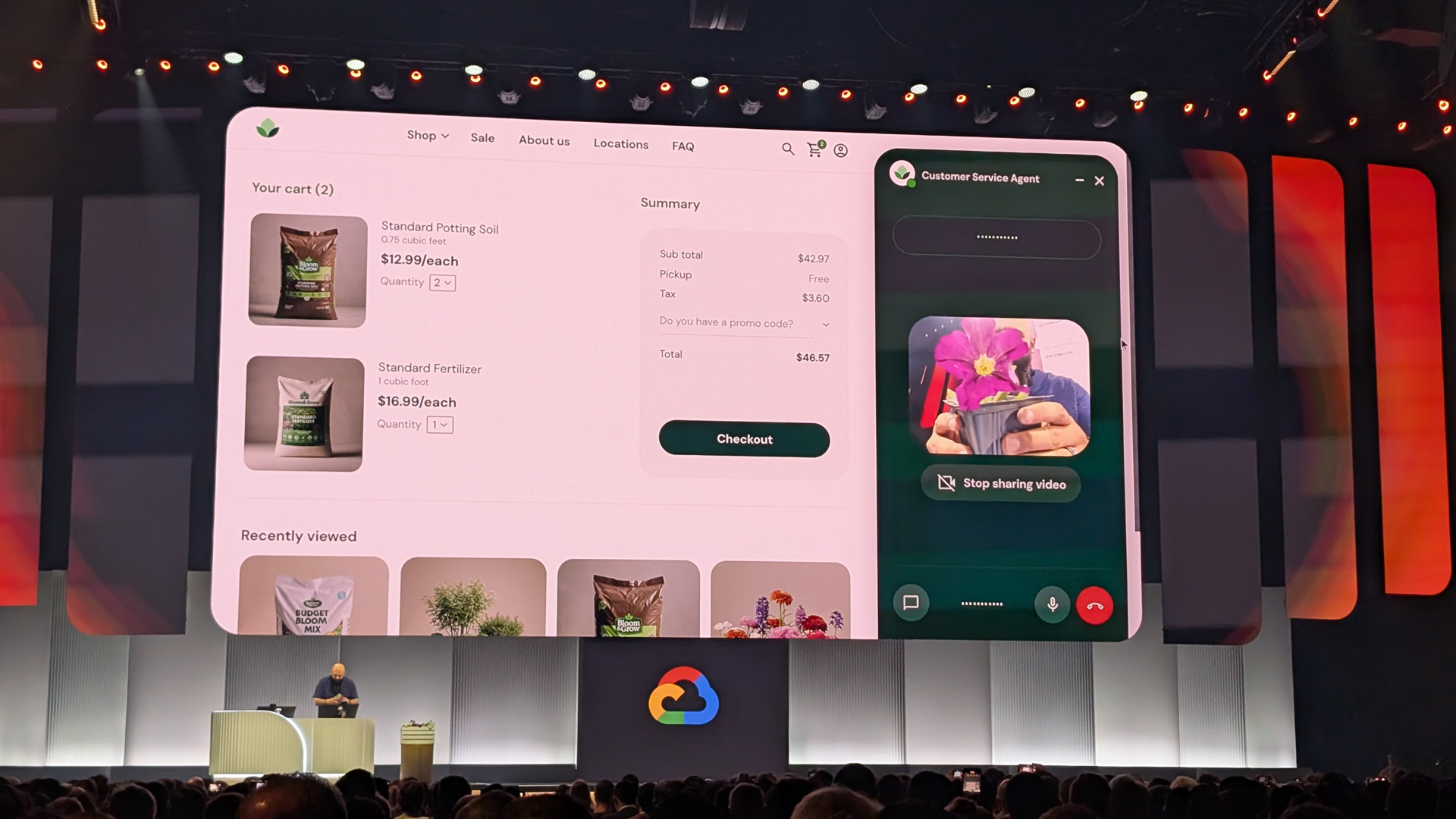

In a demo by Patrick Marlow, product manager for Applied AI at Google Cloud, we're shown how the suite can be used to get instant answers and assistance at a garden store.

Marlow is able to hold petunias he has purchased up to a camera and receive real-time, voice output assistance from the agent. For example, he asks if he's buying the right fertilizer for the plants and the agent is able to recommend an alternative fertilizer and add it to his cart.

In cases where human assistance is required – such as Marlow asking for a 50% discount on his purchase – the agent escalates to a manager in Salesforce.

O'Malley says Google Cloud's Customer Engagement Suite is already helping organizations meet customer knowledge demand.

She gives the example of Verizon, which adopted the Customer Engagement Suite. The firm uses the offering to provide its 28,000 customer assistants with up-to-date data and move customers to resolution even quicker.

O'Malley announces new features for Customer Engagement Suite, including human-like voices, integration with CRM systems and popular communications platforms, and the ability to comprehend customer emotions.

Customers are using all kinds of agents to unlock new value in their enterprise environment – but what are these different kinds?

Kurian welcomes Lisa O'Malley, leader of Product Management, Cloud AI at Google, to explain more.

O'Malley says we'll start with customer agents, showing us a video of how Reddit is using Gemini for Reddit Answers, a new conversational layer on the message board website.

Next, we're told about how Vertex AI Search is helping healthcare and retail organizations to deliver more relevant results to their customers and boost their conversion rates.

"Agentspace is the only hyperscaler platform on the market that can connect third-party data and tools, and offers interoperability with third-party agent models," says Weiss.

Here to show us more is Gabe Weiss, Developer Advocate Manager, Google Cloud.

Weiss shows us how he can easily identify potential issues with his business' customers within Agentspace. Based on this, he can ask for an agent to identify client opportunities in the future. He can then iterate on this prompt by asking for an audio summary of its findings to be delivered to him every morning, creating an in-depth, analytical agent with just a few sentences of natural language.

Finally, he can ask for the agent to write an email within Agentspace, which once approved is automatically sent via Outlook without him having to open the app himself.

It's time to talk about agents – sound the klaxon. These advanced AI assistants can carry out tasks autonomously, as Kurian explains.

To hear more about the potential of agents, we're shown a clip of Marc Benioff, CEO at Salesforce.

"Right now, we're really at the start of the biggest shift any of us have ever seen in our careers," Benioff says.

"That's why we're so excited about Agentforce and our expanded partnership with Google. I just love Gemini, I use it every single day whether it's Gemini inside Agentforce, whether it's all the integrations between Google and Salesforce."

Starting today, Kurian announces, customers can scale agents across their environment, deploy ready-made agents, and connect agents together.

This will largely be driven by the Agent Development Kit, a new open source framework for building multi-agent systems in which agents interact with one another.

Agent2Agent, a newly announced protocol, will allow disparate agents to communicate across enterprise ecosystems regardless of which vendor built them or which framework they are built on.

"This protocol is supported by many leading partners who share a vision to allow agents to work across the agent ecosystem," Kurian says.

Already, more than 50 partners including Box, Deloitte, Salesforce, and UiPath are working with Google Cloud on the protocol.

Within Google Agentspace, enterprises can have Google-made, third-party, and custom-built agents communicate easily with one another.

Vertex AI provides customers with all of Google's internally-made models as well as open models such as Meta's Llama 4.

"With Vertex AI, you can be sure your model has access to the right information at the right time," he says.

"You can connect any data source or any vector database on any cloud, and announcing today you can build agents directly on your existing NetApp storage without requiring any duplication."

Kurian adds that Google Cloud has the most comprehensive approach to grounding on the market.

Promising Kurian will crowd-surf at tomorrow's concert, he welcomes the CEO back onstage.

Kurian moves quickly onto Vertex AI, with a look at how it helps customers.

"Tens of thousands of companies are building with Gemini," he says, giving examples such as Nokia building a tool to speed up application code development, Wayfair updating product attributes five times faster, and Seattle Children's Hospital making thousands of clinical guidelines searchable by pediatricians.

Once videos have been generated, the user can fine-tune them with new in-painting controls.

In his live demo, Bardoliwalla paints around an unwanted stagehand in a close-up clip of a guitar to seamlessly remove him from the final result.

Next, Bardoliwalla uses Lyria to generate music for the trailer. This can be combined in the platform to create quick clips for advertising and more.

Here to show us all how this works in practice is Nenshad Bardoliwalla, Director, Product Management, Vertex AI, Google Cloud.

We're told his mission is to create a trailer for the party at the end of the event – complete with a gag about Kurian wanting to sing Chappell Roan but not getting permission.

Bardoliwalla opens Vertex Media Studio, in which he can ask for a drone shot of the Vegas skyline and choose specific settings such as frame rate and video length.

On to some more of that creative content we had teed up with the DJ (you see, we said it might come up again).

Kurian highlights Imagen 3, the firm's image generation model, as well as Veo 2, its video generation model. The latter is now capable of adding new elements into filmed video and producing videos that mimic specific lens types and camera movements.

Finally, we're also told that Lyria is now available on Google Cloud. The model can turn text prompts into short music outputs – the first tool of its kind in the cloud, Kurian says.

Kurian is back onstage, reflecting on the progress Google Cloud made last year with Gemini's multimodality and its two-million-token context window.

Gemini is now included in all Google Workspace subscriptions and Kurian tees up a video to show us how businesses are making good use of the service already. In the video, customers say that Gemini is already cutting down their toil and opening new time for valuable work.

Google Cloud's close relationship with Nvidia runs throughout its hardware announcements today. To hear more, we're being shown a video of Jensen Huang.

Huang describes the Google Distributed Cloud as "utterly gigantic".

"Google Distributed Cloud with Gemini and Nvidia are going to bring state-of-the-art AI to the world's regulated industries and countries," he says.

"Now, if you can't come to the cloud, Google Cloud will bring AI to you."

Vahdat runs through the core infrastructure announcements from today, including Ironwood, AI Hypercomputer, and data storage. As a reminder, you can read about these announcements in detail here.

It's not all about running workloads in the cloud, Vahdat says. Google Cloud is also announcing Gemini on Google Distributed Cloud, which allows firms to run Gemini locally – including in air-gapped environments.

This opens the door to government organizations using AI in secret and top secret environments.

With that, Pichai is off and Kurian is back onstage.

He explains how Google Cloud is uniquely positioned to support customers, with a massive range of enterprise tools to build AI agents and an open multi-cloud platform for connecting AI to one's existing databases.

"Google Cloud offers an enterprise-ready, AI platform built for interoperability," he says.

"It enables you to adopt AI deeply while addressing the evolving concerns around sovereignty, security, privacy, and regulatory requirements."

Finally, Google Cloud's infrastructure is core to its advantages for customers. To help illustrate this point, Kurian welcomes Amin Vahdat, VP, ML, Systems and Cloud AI at Google Cloud, to the stage.

It's always good to hear directly from a customer about how AI is helping their business.

We've just been shown a reel from McDonald's, in which Chris Kempczinski, CEO at McDonald's, explained how AI can be used to predict when machines will need maintenance in McDonald's restaurants or provide workers with quick answers to their questions.

The announcements are coming fast here in the arena. Pichai rattles off stats about Gemini 2.5, the firm's new thinking model which is currently the top-ranked chatbot in the world per the Chatbot Arena Leaderboard.

He also highlights Gemini 2.5 Flash, Google's low-cost, low-latency model that allows organizations to balance reasoning against budget for every output.

Pichai draws a direct line between Ironwood and Google's quantum chip Willow, which it announced last year.

Both are used as examples of the boundaries Google is pushing within its hardware teams, as well as in divisions such as Google DeepMind to crack problems such as weather prediction.

Next, Pichai announces Google Cloud's seventh-generation TPU, Ironwood, which brings sizeable performance and efficiency improvements over its predecessors.

A few key stats about Ironwood: a full pod is capable of 42.5 exaflops of compute, which Google says is more than 24 times the performance of El Capitan, the world’s fastest supercomputer.
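The 42.5 exaflops figure can be reconstructed from two numbers in Google's Ironwood announcement that aren't quoted above: a full pod of 9,216 chips, each with a peak of 4,614 teraflops. El Capitan's roughly 1.74 exaflops is its Top500 benchmark result. Bear in mind that Ironwood's figure is a peak low-precision (FP8) rating while El Capitan's is a double-precision benchmark score, so the comparison is Google's own rather than like-for-like.

$$
\begin{aligned}
9{,}216 \times 4{,}614\ \text{TFLOPS} &= 42{,}522{,}624\ \text{TFLOPS} \approx 42.5\ \text{exaflops} \\
\frac{42.5\ \text{exaflops}}{1.74\ \text{exaflops}} &\approx 24.4
\end{aligned}
$$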

Read more in our full coverage of Ironwood here.

First off, Pichai says that Google will make $75 billion in capital investment in 2025, directed toward servers and data centers.

To further support its AI-hungry customers, Pichai announces that Google Cloud will make its global network available to Cloud customers via Cloud WAN, a new managed solution for connecting enterprises across a wide area network.

"This builds on a legacy of opening up our technical infrastructure for others to use," Pichai says.

To give the crowd a taste of what AI can do, Kurian welcomes Sundar Pichai, CEO at Google, to the stage.

Pichai opens by paying tribute to The Wizard of Oz at Sphere and then moves on to make some announcements.

Now the keynote proper begins, with Thomas Kurian, CEO at Google Cloud, taking to the stage to kick us off.

“Google’s AI momentum is exciting – we’re seeing more than four million developers using Gemini, a 20 times increase in Vertex AI,” says Kurian, noting that the firm processes more than 2 billion AI requests per month in Google Workspace, driven by businesses.

Today's sizzle reel is peppered with AI-generated video, in a show of sophistication by Google Cloud.

And we're off! To begin with, as is normal for keynotes, we’re being shown a sizzle reel of Google Cloud’s impact on the industry and hyping up the potential for AI in the enterprise.

Just one minute left until the keynote begins in earnest. Stay tuned as we bring it to you live.

The music we're hearing will apparently be played throughout the entire conference – musical group The Meeting Tree have scored an entire soundtrack for the event, with the theme of AI.

Paired with Google Cloud's work on The Wizard of Oz (details lower down in the live blog), it's clear that Google Cloud is eager to show what it can offer to industries that have been more reluctant to adopt AI to date.

There's a clear need to acknowledge fears that AI could damage the livelihoods of artists. A constant refrain at yesterday's event at the Sphere was that, ideally, AI should be used to empower creatives rather than replace them. Google Cloud also suggested that new roles could appear in the creative sector as a result of AI breakthroughs – it will be interesting to see if this is expanded upon at all in the keynote.

We're now learning a bit more about how that music has been made for the event, via a behind-the-scenes video.

Human musicians were first recorded and then their samples were fed into Music AI Sandbox, which produced audio outputs that the producers could edit, alter, and use as the basis for new sounds.

As you can see, there's a huge amount of foot traffic this morning as we pile into the Michelob Ultra Arena at Mandalay Bay. As is usual for tech conferences, we're being serenaded by a live DJ inside the arena itself – more unusual are the visuals for this morning's music, which have been generated entirely with Google DeepMind's video model Veo 2.

As a reminder, the theme for this morning's keynote is 'The new way to cloud', with a focus on interoperability, unification, and more intelligent automation through Gemini AI.

Last night at the Sphere, we were given a glimpse of what to expect this week, with preview speeches from Google CEO Sundar Pichai and Google Cloud chief executive Thomas Kurian onstage. You can read all about the goings-on from the evening further down the live blog.

We've already had a range of big announcements ahead of the opening keynote, including the launch of Google's new 'Ironwood' AI accelerator chip and the launch of Google Unified Security, which aims to drive cloud security capabilities for enterprises and demystify cyber complexity in the cloud.


With that, Kurian officially opened Google Cloud Next, as confetti cannons heralded the start of the event.

"If tonight's event sets the tone for what we plan to bring you for the next three days, I think it's safe to say it's going to be an incredible week," he said.

Kurian will be back onstage bright and early tomorrow morning for the opening keynote, 'The new way to cloud'. We'll be bringing you all the updates from that and throughout the conference, both here and across ITPro, so stay right here for all the very latest.

In the meantime, why not read my pre-conference analysis of what Google Cloud can do to set itself apart from competitors at this event, and the key story it needs to tell?

Next, it was time to hear from Thomas Kurian, CEO at Google Cloud, and James Dolan, CEO at Sphere Entertainment, on the challenges of bringing The Wizard of Oz to the Sphere.

"I've been running companies for 40 years and this is one of the first times I ever felt that I wasn't a customer – I was a partner," said Dolan, praising the hands-on collaboration of the Google Cloud, Google DeepMind, and Magnopus teams.

Kurian noted that a total of twenty different models were needed to bring the Wizard of Oz at Sphere to life, with engineers leveraging Google's extensive TPU architecture and inventing new techniques to expand and recreate the original film frames. This was an enormous technical challenge, not least because the scale and resolution of the screen makes it hard to hide any mistakes in the final image.

"Most importantly, the camera and this amazing theater here at the Sphere is something that doesn't exist anywhere else in the world," he said. "So it's almost like you were told to do AI and your first project was your PhD thesis."

After Pichai's speech, we were treated to an extended behind-the-scenes video about the project. It detailed how difficult it is to extend existing footage to fit the Sphere's unique aspect ratio and resolution, as well as the complexity of generating entirely new footage of characters in moments when they would otherwise have been offscreen.

Engineers had to work iteratively and study the original plans for the film to recreate the characters without making them generic.

The final production includes special effects such as wind blown onto the audience and haptic rumbling under the seats, of which we were given a very hands-on demonstration.

After entering the Sphere's cavernous arena, we were treated to an opening speech by Sundar Pichai, CEO at Google. He paid tribute to the efforts of all the engineers and creatives who worked on the project, which required intense research and overcoming numerous technological hurdles. Ultimately, it was created using Google DeepMind's video generation model Veo 2.

"We have seen significant improvements: super low latency, incredible video quality, multimodal output, so many things we couldn't have done with AI even 12 months ago," Pichai said.

"Beyond the technical capability, it took a whole lot of imagination, creativity, and collaboration. Our goal: giving Dorothy, Toto, and all of these iconic characters new life on a 16k screen in super resolution."

Good evening from Las Vegas, where select attendees from the event have just been treated to a sneak peek of a brand new attraction opening at the Sphere in August – The Wizard of Oz at Sphere.

Made in partnership with Warner Bros. Discovery, Google Cloud, and Magnopus, the finished product will run as a multi-sensory, 16k recreation of the original 1939 movie for the Sphere's 160,000-square-foot screen using Google DeepMind's video generation models.