Will Nvidia's AI dominance shake up the public cloud ‘big three’?

Nvidia continues to make strategic moves to partner with and compete against the largest cloud service providers, with AWS in danger of losing ground

The relatively nascent cloud computing industry entered a slowdown in its meteoric growth this year. As it begins to mature, it now encounters a major challenge in the form of how best to integrate artificial intelligence (AI)-optimized hardware, software, and semiconductor chips.

The ‘big three’ public cloud services – Microsoft Azure, Google Cloud Platform (GCP), and market leader Amazon Web Services (AWS) – may face an existential crisis with the rise of generative AI and the hardware needed to power large language models (LLMs) and other workloads.

Of these three, AWS is especially vulnerable to losing market share, according to experts, with another company – Nvidia – holding all the cards.

With its hold on the AI hardware segment, Nvidia is moving quickly to shore up its leverage by deepening partnerships with major cloud providers, apart from one: AWS. This could work to shake up a public cloud market which we had assumed was set.

AWS skips the Nvidia AI party… but at what cost?

Public cloud market leader AWS depended on a four-part approach to create and dominate the public cloud market. This plan included:

- Creating infrastructure according to popularity / demand for services

- Leveraging first-mover advantage

- Making silicon in-house to save billions of dollars

- Controlling the product end-to-end

This four-part formula served AWS well, says Dylan Patel, chief analyst at SemiAnalysis, but with the advent of AI, another cloud leader emerged. Nvidia, the semiconductor chip designer of choice for high-performance computing (HPC), LLM training and inference, now competes against the companies it also partners with.

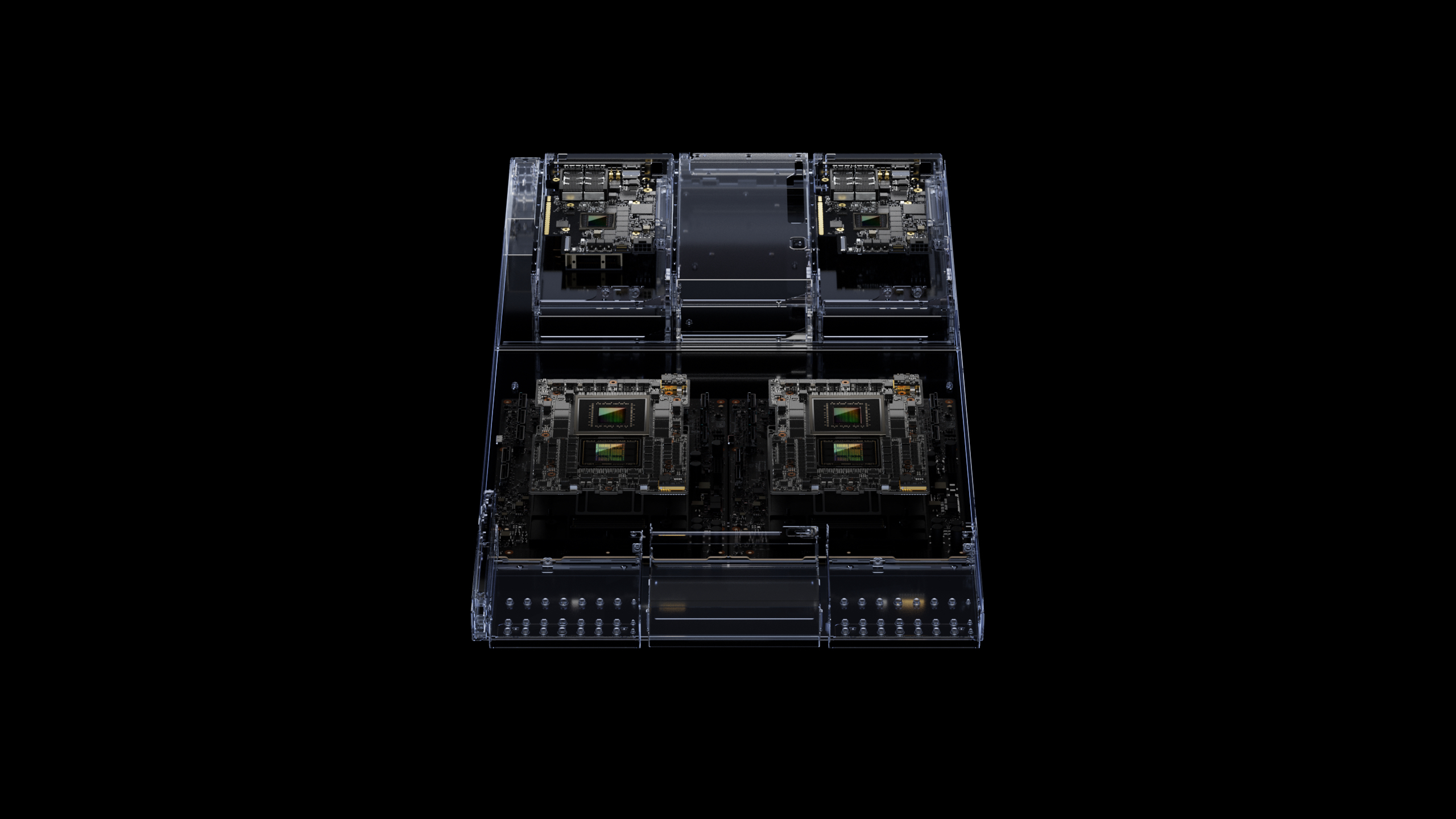

“Nvidia has big ambitions in AI,” says Jay Goldberg, CEO of technology and finance consulting firm D2D Advisory. “It wants to move beyond only selling semiconductors to providing a complete AI solution to end-customers. This includes providing extensive software tools and even its own cloud service.”


“We are witnessing an intense competition among these cloud providers to capitalize on the significant revenue opportunities presented by the thriving AI applications,” adds Sameh Boujelbene, VP of data center and campus ethernet switch market research at Dell’Oro Group. “Nvidia is seizing this pivotal moment in the market to leverage its dominance in AI hardware and generate revenue from cloud-based software solutions.

“Nvidia made early investments in AI and deep learning, recognizing their potential long before they became mainstream,” Boujelbene adds. “This head start allowed it to develop and refine its technology to cater specifically to AI applications.”

Networking is key to Nvidia’s cloud ambitions

AWS has resisted this push, and its approach to Nvidia could close the gap between it and its major competitors Azure and GCP. The service of which Goldberg and Boujelbene speak is Nvidia’s AI-as-a-service offering, Nvidia AI. It gives customers access to the power of a supercomputer to train LLMs, develop AI inference models, and monitor data.

“So far, Nvidia has been careful to position its cloud computing ambitions in partnership with the public cloud providers,” says Goldberg. “Beyond this, Nvidia's dominant position in AI semiconductors and limited supplies has allowed it to ration allocation of those chips.”

AWS is left out, says Patel, because it refuses to leverage Nvidia’s networking product NVLink. Leveraging the tool would give Nvidia too much control, in the eyes of AWS, Patel tells ITPro.

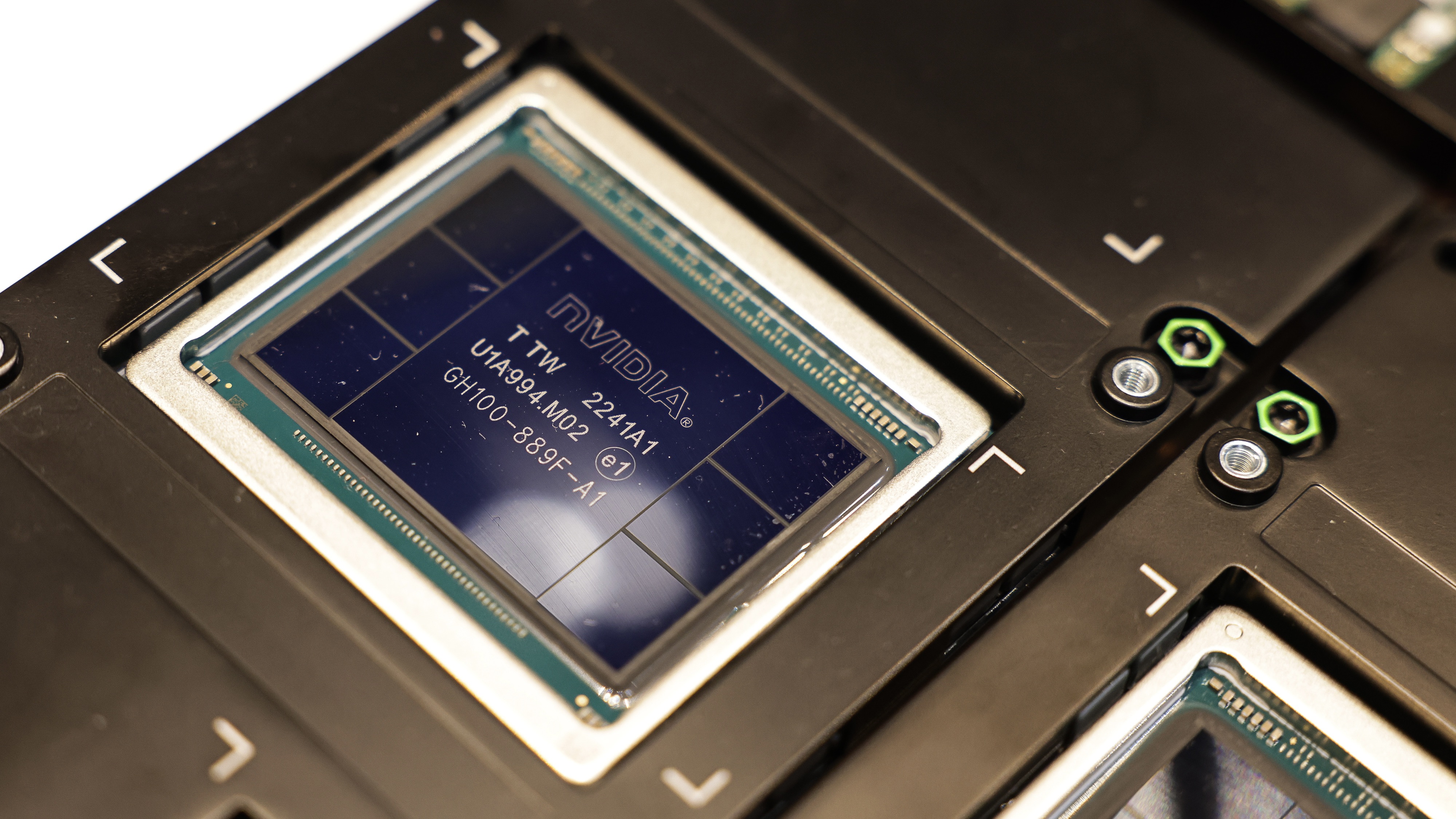

“[The new GH200 Grace Hopper Superchip platform] … uses the Grace Hopper Superchip, which can be connected with additional Superchips by NVIDIA NVLink™, allowing them to work together to deploy the giant models used for generative AI…. gives the GPU full access to the CPU memory, providing a combined 1.2TB of fast memory when in dual configuration.”

Networking remains a critical piece of Nvidia’s full-stack solution, according to Boujelbene. In addition to NVLink, Boujelbene points to the firm’s InfiniBand and Ethernet offerings. The H100s can reach 3.5 times their normal performance when using NVLink between GPU nodes, Nvidia claims. Boujelbene also notes that AWS isn’t the only provider cautious about integrating NVLink.

But, Patel warns, once Nvidia’s much anticipated GH200 Grace Hopper chip arrives, the firm will most likely make NVLink mandatory for other companies to gain access to the chip’s power and performance. Patel has inferred this from Nvidia’s product announcement, in which it all but came out and said NVLink is needed to get the most out of its tools.

How Nvidia is directly challenging cloud providers

Nvidia is using its popular H100 chips as leverage by prioritizing Azure, GCP, and smaller players such as CoreWeave, Equinix, and Lambda to receive the first available shipments of the in-demand H100s. By doing so, the firm is folding cloud providers into its cloud ambitions, and AWS sticks out like a sore thumb because it won’t play ball, Patel says.

The demand for H100s comes from the chip’s raw speed in both inference and LLM training. Even with Nvidia’s price spikes, H100s still provide the best performance per dollar of any of the latest GPUs.

AWS occupies an almost too-big-to-fail position in the market, and it can certainly afford to stick to its principles and remain in control, but this puts it in the rare position of playing catch-up. “Nvidia is the leading provider of GPUs and AI accelerated compute for the cloud services providers,” says Goldberg. “They rely on Nvidia for providing the best GPUs, along with the CUDA software tools, which power the training of AI models.”

To compete, AWS developed its own training and inference chips, called Trainium and Inferentia, Patel adds. But their capabilities are below standard, they’re struggling to gain traction in the market, and they aren’t competitive even after steep discounts.

Nvidia is assuming the role of gatekeeper for the ‘big three’ cloud service providers in this AI age. The firm uses its software – CUDA – and hardware – H100 and GH200 – to retain partnerships and pressure holdouts.

Nvidia also struck partnerships with Microsoft, Oracle, Google, and minor players to roll out a lower-margin DGX-as-a-service operation. This gives customers access to Nvidia’s latest suite of cloud-ready systems without needing hardware. According to Patel, AWS is the only major cloud provider not offering Nvidia’s AI-as-a-service model, jeopardizing its position as the market leader.

Lisa D Sparks is an experienced editor and marketing professional with a background in journalism, content marketing, strategic development, project management, and process automation. She writes about semiconductors, data centers, and digital infrastructure for tech publications and is also the founder and editor of Digital Infrastructure News and Trends (DINT), a weekday newsletter at the intersection of tech, race, and gender.

-

Bigger salaries, more burnout: Is the CISO role in crisis?

Bigger salaries, more burnout: Is the CISO role in crisis?In-depth CISOs are more stressed than ever before – but why is this and what can be done?

By Kate O'Flaherty Published

-

Cheap cyber crime kits can be bought on the dark web for less than $25

Cheap cyber crime kits can be bought on the dark web for less than $25News Research from NordVPN shows phishing kits are now widely available on the dark web and via messaging apps like Telegram, and are often selling for less than $25.

By Emma Woollacott Published

-

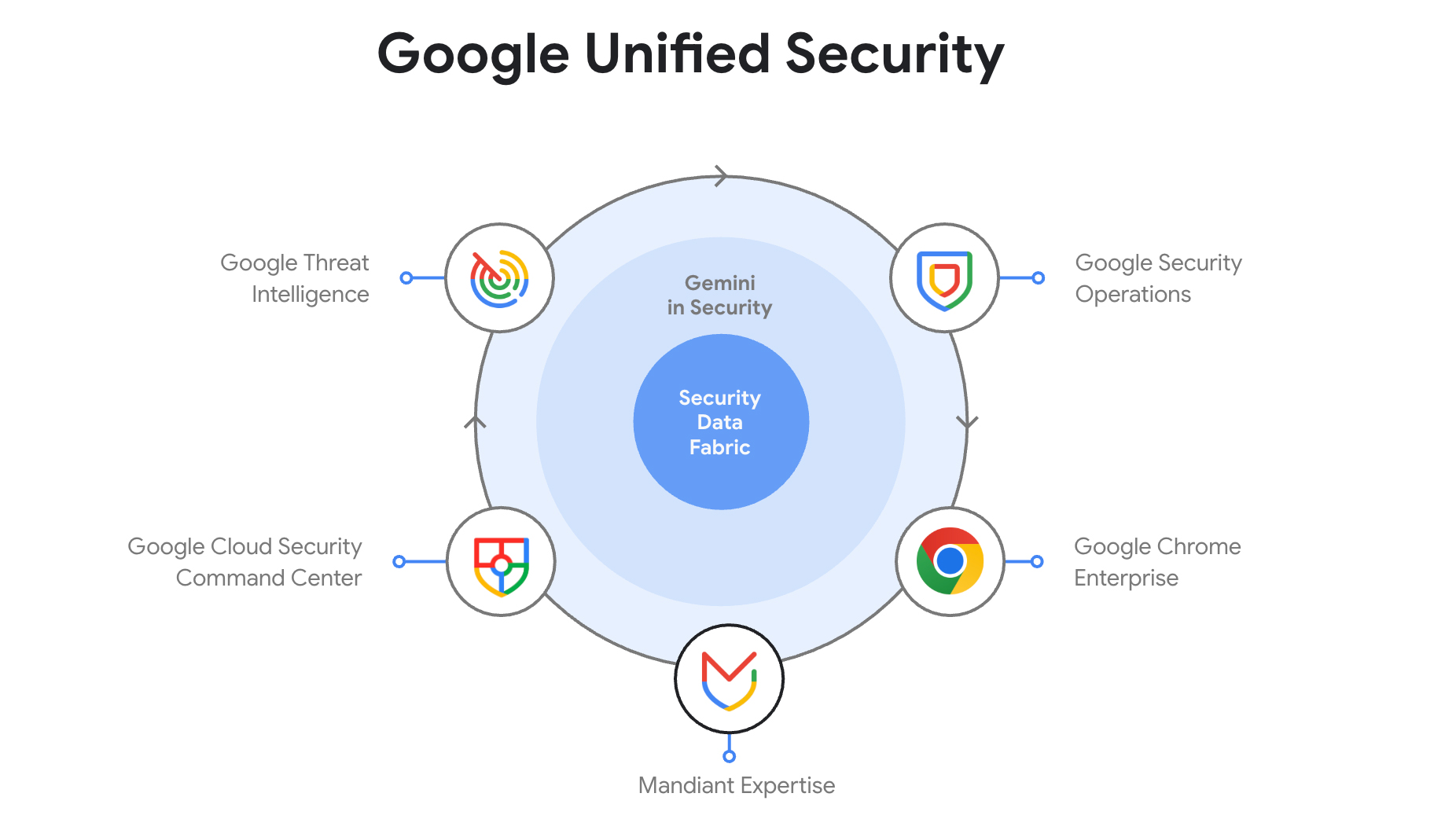

Google Cloud wants to tackle cyber complexity – here's how it plans to do it

Google Cloud wants to tackle cyber complexity – here's how it plans to do itNews Google Unified Security will combine all the security services under Google’s umbrella in one combined cloud platform

By Rory Bathgate Published

-

Google Cloud Next 2025: All the live updates as they happened

Google Cloud Next 2025: All the live updates as they happenedLive Blog Google Cloud Next 2025 is officially over – here's everything that was announced and shown off in Las Vegas

By Rory Bathgate Last updated

-

Google Cloud Next 2025 is the hyperscaler’s chance to sell itself as the all-in-one AI platform for enterprises

Google Cloud Next 2025 is the hyperscaler’s chance to sell itself as the all-in-one AI platform for enterprisesAnalysis With a focus on the benefits of a unified approach to AI in the cloud, the ‘AI first’ cloud giant can build on last year’s successes

By Rory Bathgate Published

-

The Wiz acquisition stakes Google's claim as the go-to hyperscaler for cloud security – now it’s up to AWS and industry vendors to react

The Wiz acquisition stakes Google's claim as the go-to hyperscaler for cloud security – now it’s up to AWS and industry vendors to reactAnalysis The Wiz acquisition could have monumental implications for the cloud security sector, with Google raising the stakes for competitors and industry vendors.

By Ross Kelly Published

-

Google confirms Wiz acquisition in record-breaking $32 billion deal

Google confirms Wiz acquisition in record-breaking $32 billion dealNews Google has confirmed plans to acquire cloud security firm Wiz in a deal worth $32 billion.

By Nicole Kobie Published

-

Microsoft’s EU data boundary project crosses the finish line

Microsoft’s EU data boundary project crosses the finish lineNews Microsoft has finalized its EU data boundary project aimed at allowing customers to store and process data in the region.

By Nicole Kobie Published

-

AWS expands Ohio investment by $10 billion in major AI, cloud push

AWS expands Ohio investment by $10 billion in major AI, cloud pushNews The hyperscaler is ramping up investment in the midwestern state

By Nicole Kobie Published

-

Microsoft hit with £1 billion lawsuit over claims it’s “punishing UK businesses” for using competitor cloud services

Microsoft hit with £1 billion lawsuit over claims it’s “punishing UK businesses” for using competitor cloud servicesNews Customers using rival cloud services are paying too much for Windows Server, the complaint alleges

By Emma Woollacott Published