What is Hadoop?

We explain the Java-based framework powering Big Data and another industrial revolution

Hadoop is one of the most popular open source cloud platforms used by developers, data scientists, and engineers to process enormous amounts of data, including analysing, sorting and storing information.

The platform is used to deliver key insights into a company's health, whether financial or operational, allowing stakeholders to make decisions based upon data.

One of the main advantages of using Hadoop over other cloud platforms is that everything happens in real-time, enabling faster reaction times and meaning businesses can always keep one step ahead of competitors.

Debuting more than 10 years ago, Hadoop has undergone many changes, essentially evolving to take account of shifting demands from businesses, including the capacity to process much larger datasets.

For example, the first iteration was able to sort 1.8 TB on 188 nodes in 47.9 hours - revolutionary for its time. However, capacity has increased considerably in the decade since, and much larger datasets can now be handled in less time thanks to contributions from the likes of Facebook, LinkedIn, eBay and IBM.

Hadoop was first developed by Doug Cutting and Mike Cafarella, two software engineers who wanted to improve web indexing. It was built upon Google's File System paper and began life within the Apache Nutch project, following extensive experimentation by Cutting and his earlier creation of Apache Lucene, before his tie-up with Cafarella.

When Cutting started working for Yahoo in 2006, the platform's development accelerated thanks to a dedicated team assigned to work on it. Fast forward to 2011 and it became clear that Yahoo was no longer the place for Hadoop to flourish.


At this point, Rob Bearden and Eric Baldeschwieler, who had been heavily involved in the development of Hadoop, made the decision to split Hortonworks, the group then responsible for the evolution of the platform, from Yahoo. They created a team of developers that could focus primarily on its future.

Yahoo hasn't completely abandoned Hadoop, but the reins of its evolution are now held by Hortonworks co-founder Owen O'Malley.

What Is Big Data?

Hadoop's main role is to store, manage and analyse vast amounts of data using commodity hardware. What makes it so effective is the way in which it structures the data, which allows it to sort that data efficiently and automatically.

Big data has huge potential, but only if you work out how to harness it. That means you must think about how you want to use it, and what answers you want from the data. That will determine your approach to data analytics, and help you reach the right insights that give your products and services an edge over the competition.

How does Hadoop work?

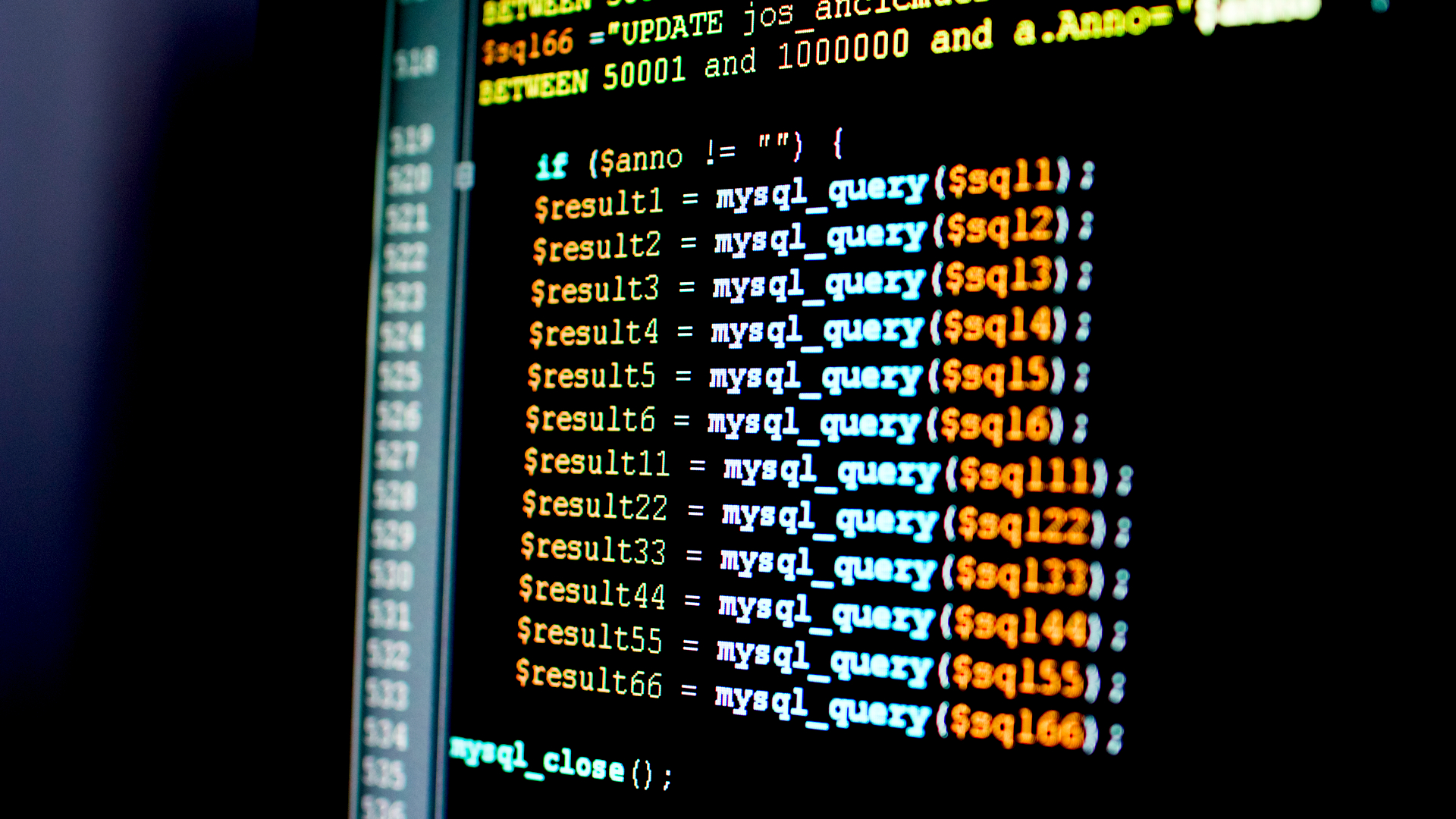

Four key elements make up Hadoop: the Distributed File System, MapReduce, Hadoop Common and YARN. These 'modules' are each responsible for distinct tasks within the software.

The Distributed File System (DFS) provides the storage component, splitting files into fragments that it then distributes over many servers to support high-bandwidth streaming. This approach also makes it simple to increase storage capacity, compute power and even the bandwidth itself - all you need to do is install more commodity hardware.
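The idea of splitting a file into fragments and spreading them over servers can be illustrated with a toy sketch in Python. This is not the Hadoop API: the function names, the node names, the tiny block size and the round-robin placement with two copies of each block are all assumptions made for illustration, and the real file system uses far larger blocks and a more sophisticated placement policy.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into fixed-size fragments (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(blocks, nodes, replicas=2):
    """Assign each block index to `replicas` distinct nodes, round-robin.

    Hypothetical placement policy, chosen only to keep the sketch short.
    """
    if replicas > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {node: [] for node in nodes}
    for index in range(len(blocks)):
        for offset in range(replicas):
            node = nodes[(index + offset) % len(nodes)]
            placement[node].append(index)
    return placement


if __name__ == "__main__":
    file_data = b"x" * 10                      # stand-in for a large file
    blocks = split_into_blocks(file_data, 4)   # toy block size of 4 bytes
    nodes = ["node-a", "node-b", "node-c"]     # hypothetical server names
    for node, held in place_blocks(blocks, nodes).items():
        print(node, "holds blocks", held)
```

Passing a longer list of nodes to `place_blocks` spreads the same blocks more thinly, which is the sense in which capacity grows simply by adding hardware.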

Next, we come to the MapReduce module, perhaps the most crucial part of Hadoop's software, which performs the analytics on the datasets. It works by taking a large volume of data and crunching it into smaller datasets.

MapReduce does two things. First, its map function works through the data and slices it into key-value pairs. The reduce function then collates the results of the mapping. We can see how this works on a word-counting task.

Let's say we performed MapReduce on the 'she sells sea shells' tongue-twister. First of all, the mapping part of the software would break the text down and emit each word with a count of one, which would look like this:

(she, 1)(sells, 1)(sea, 1)(shells, 1)(on, 1)(the, 1)(sea, 1)(shore, 1)(the, 1)(sea, 1)(shells, 1)(that, 1)(she, 1)(sells, 1)(are, 1)(sea, 1)(shells, 1)(i'm, 1)(sure, 1)

Once this operation has completed, the reduce function then groups the results together, returning an aggregate of the various values. In this case, that would be:

(she, 2)(sells, 2)(sea, 4)(shells, 3)(on, 1)(the, 2)(shore, 1)(that, 1)(are, 1)(i'm, 1)(sure, 1)

While this is an exceedingly simplistic example, it does illustrate the vital role MapReduce plays in breaking down huge datasets into more manageable formats.
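The word-count walk-through above can be reproduced in a few lines of Python. This is a single-process sketch of the map and reduce idea, not Hadoop's own API: the function names are hypothetical, and the intermediate grouping step (`shuffle`) is written out explicitly here on the assumption that it helps show how the mapped pairs reach the reduce function.

```python
from collections import defaultdict


def map_words(text):
    """Map step: emit a (word, 1) pair for every word in the text."""
    return [(word, 1) for word in text.lower().split()]


def shuffle(pairs):
    """Group the emitted values by key before they are reduced."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_counts(grouped):
    """Reduce step: sum the values collected for each key."""
    return {word: sum(values) for word, values in grouped.items()}


def word_count(text):
    """Run the three steps in sequence on a single machine."""
    return reduce_counts(shuffle(map_words(text)))


# The same word sequence as the mapped pairs shown in the article.
TEXT = ("she sells sea shells on the sea shore the sea shells "
        "that she sells are sea shells i'm sure")

if __name__ == "__main__":
    for word, count in word_count(TEXT).items():
        print(f"({word}, {count})")
```

On a real cluster the map and reduce steps would run in parallel across many machines, each handling its own slice of the data; the sketch only shows the shape of the computation.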

The other modules that make up Hadoop are Hadoop Common, which refers to the utilities, libraries, packages and other miscellaneous files that the framework requires to function, and YARN (short for Yet Another Resource Negotiator), a cluster management tool that offers a central platform for resource management across Hadoop infrastructure.

Why is Hadoop so popular?

While there are alternatives to Hadoop, it's unquestionably the most popular Big Data processing framework in the enterprise. There are a few very good reasons for this. The first is that it's fantastically economical: because a Hadoop cluster can be built using commodity hardware, the setup and operational costs are extremely low compared with other technologies. It's open source, too, which means that there are no inherent licensing costs.

It's also highly scalable, which has been a boon to businesses that are looking to quickly spin up their Big Data operations. Hadoop also offers very high bandwidth across the cluster, allowing large workloads to be completed much more quickly and with fewer bottlenecks.

What are the main problems with Hadoop?

The biggest problem facing Hadoop is that it's still incredibly complex. While other elements of the enterprise IT stack have benefitted from increasing simplification and user-friendliness, Hadoop is still one of the more labyrinthine and confusing technologies in common use.

This makes it difficult for businesses to find people who can start working on Hadoop deployments or to up-skill existing staff in its implementation. It can also make it incredibly hard to sell the board on its benefits, as non-IT folks often have a hard time getting their head around what it actually does.

Rene Millman is a freelance writer and broadcaster who covers cybersecurity, AI, IoT, and the cloud. He also works as a contributing analyst at GigaOm and has previously worked as an analyst for Gartner covering the infrastructure market. He has made numerous television appearances to give his views and expertise on technology trends and companies that affect and shape our lives. You can follow Rene Millman on Twitter.
