Speak easy: How neural networks are transforming the world of translation

Translation software has gone from a joke to a genuinely useful business tool, thanks to machine learning

Understanding foreign languages has always been a barrier for individuals and businesses seeking to expand in other countries. Language learning and translation have gone some way towards lowering that barrier, and recent advances in artificial neural networks may lower it further.

In simple terms, artificial neural networks, normally shortened to "neural networks", are a type of artificial intelligence (AI) that mimics the biological neural networks seen in animals.

Erol Gelenbe, a professor at Imperial College London's department of electrical and electronic engineering, is one of the leading researchers in the field. His interest in neural networks developed from his earlier work on anatomy: he started by trying to build mathematical models of parts of human and animal brains, then graduated to using neural networks to route data traffic across the internet and other large networks.

Gelenbe says translation has three different aspects, whether carried out by a machine or a human being. The first is word-to-word translation, which can be accelerated or simplified using neural networks and other fast algorithms. The second is mapping the syntax, which means the neural network has to "understand" the nuances of grammar in both languages. The third is using context, which is extremely important as it directly affects which words are chosen.

Gelenbe uses English and German as an example: "Neural networks can be used for each of these steps as a way to store and match patterns, for example matching 'school' with 'schule', matching 'to' with 'nach', or learning and matching the grammatical structures".
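To make the first and third aspects concrete, here is a minimal Python sketch. It is a toy under stated assumptions, not how Gelenbe's or any production system works: the lookup table reuses the word pairs from the quote, while the context rule (choosing "zur" when "to" is followed by "school") and all function names are hypothetical additions for illustration. The second aspect, syntax mapping, is deliberately left out.

```python
# Aspect 1: a fixed word-to-word table (pairs taken from the quote above).
WORD_TABLE = {"school": "Schule", "to": "nach"}

# Aspect 3: a hypothetical context rule. The word that follows "to"
# changes which German word is chosen.
CONTEXT_RULES = {("to", "school"): "zur"}


def translate_word(word, next_word=None):
    """Prefer a context-specific choice, else fall back to the table."""
    key = word.lower()
    if (key, next_word) in CONTEXT_RULES:
        return CONTEXT_RULES[(key, next_word)]
    # Unknown words pass through unchanged.
    return WORD_TABLE.get(key, word)


def translate_words(sentence):
    """Translate word by word, looking one word ahead for context."""
    words = sentence.split()
    result = []
    for i, word in enumerate(words):
        next_word = words[i + 1].lower() if i + 1 < len(words) else None
        result.append(translate_word(word, next_word))
    return result


print(translate_words("to school"))
print(translate_words("to Berlin"))
```

A neural translation system learns patterns like these from large amounts of example text rather than having them written out by hand, which is what lets it cope with the many cases a fixed table would miss.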

A robotic rosetta stone?

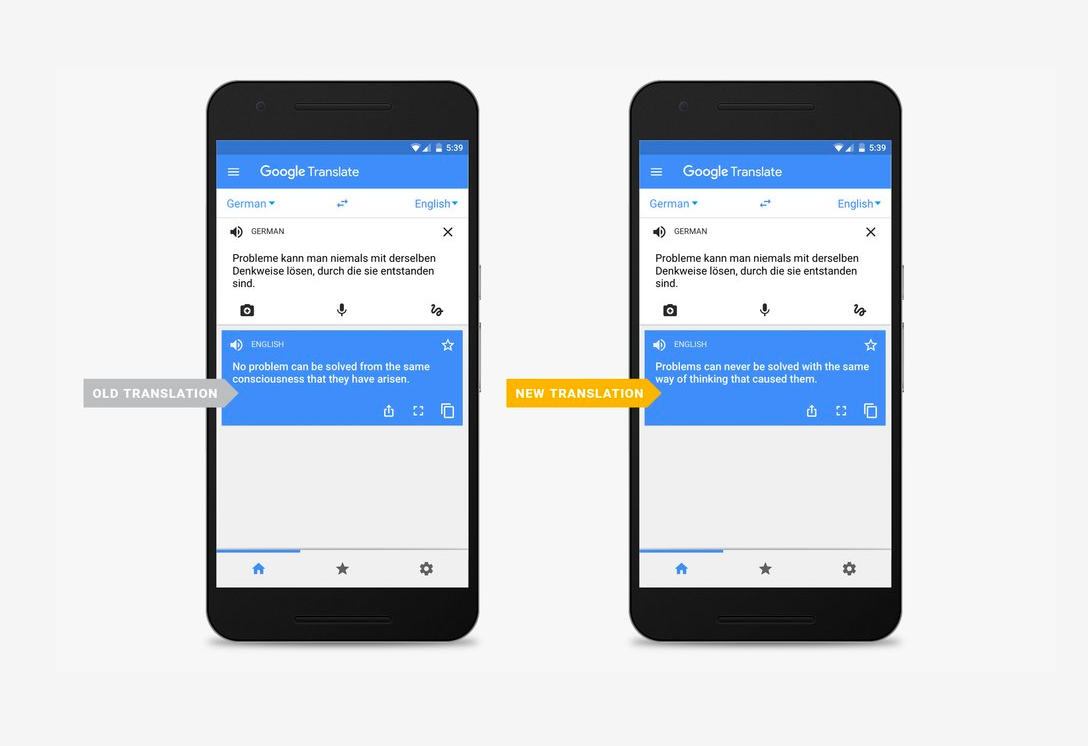

Google and Microsoft both introduced neural machine translation in November 2016. It differs from the earlier large-scale statistical machine translation in that it translates whole sentences at a time, rather than just one or two words at a time. In a blog post, Google explained how the sentence is translated in its broader context and is then rearranged and adjusted "to be more like a human speaking with proper grammar". Because larger bodies of text are taken sentence by sentence, paragraphs and articles are translated with fewer errors or instances of miscomprehension. Microsoft has a useful tool to highlight the difference between neural and statistical machine translation, which shows how much more natural neural translation sounds. And the best part? Over time, neural networks learn to create better and more natural translations.

But neural network-powered translation isn't all about completely new innovations. It also builds on technologies being used in other domains, such as long short-term memory (LSTM).

LSTMs are a type of recurrent neural network that can learn from experience and retain information across long sequences of input, depending on how they are applied. Since 2015, Google's speech recognition on smartphones has been based on self-learning long short-term memory (LSTM) recurrent neural networks (RNNs), and the technology has been extended to other products, including Google Translate.
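For readers curious about what "long short-term memory" means in practice, the following Python sketch shows a single LSTM cell reduced to scalar values. It follows the standard textbook formulation (forget, input and output gates plus a candidate value) and is not Google's implementation; the weights in `PARAMS` and the helper names are hypothetical and hand-picked for illustration, where a real network would learn them from data.

```python
import math


def sigmoid(x):
    """Squash a value into the range (0, 1); used for the gates."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a scalar LSTM cell.

    params maps each gate name to a (w_x, w_h, bias) tuple.
    Returns the new hidden state h and the new cell state c.
    """
    def pre_activation(gate):
        w_x, w_h, bias = params[gate]
        return w_x * x + w_h * h_prev + bias

    forget = sigmoid(pre_activation("forget"))        # how much old memory to keep
    write = sigmoid(pre_activation("input"))          # how much new information to store
    output = sigmoid(pre_activation("output"))        # how much memory to expose
    candidate = math.tanh(pre_activation("candidate"))  # the new information itself

    c = forget * c_prev + write * candidate
    h = output * math.tanh(c)
    return h, c


# Hypothetical hand-picked weights; a trained network would learn these.
PARAMS = {
    "forget": (0.0, 0.0, 2.0),
    "input": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 2.0),
    "candidate": (1.0, 0.0, 0.0),
}


def run(sequence):
    """Feed a sequence of numbers through the cell, starting from empty memory."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, PARAMS)
    return h, c


h, c = run([1.0, 0.0, 0.0])
print(h, c)
```

With these weights the cell state stays above zero after the input has dropped back to zero, which is the "memory" in the name: information from earlier in a sentence can still influence what the network produces later.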

Jürgen Schmidhuber, who developed LSTM-RNN technology and is professor and co-director of the Swiss Dalle Molle Institute for Artificial Intelligence and president of NNAISENSE, predicts that in the future these systems will enable "end-to-end video-based speech recognition and translation including lip-reading and face animation".

"For example, suppose you are in a video chat with your colleague in China. You speak English, he speaks Chinese. But to him it will seem as if you speak Chinese, because your intonation and the lip movements in the video will be automatically adjusted such that you not only sound like someone who speaks Chinese, but also look like it. And vice versa," Schmidhuber explains.

Zach Marzouk is a former ITPro, CloudPro, and ChannelPro staff writer, covering topics like security, privacy, worker rights, and startups, primarily in the Asia Pacific and the US regions. Zach joined ITPro in 2017 where he was introduced to the world of B2B technology as a junior staff writer, before he returned to Argentina in 2018, working in communications and as a copywriter. In 2021, he made his way back to ITPro as a staff writer during the pandemic, before joining the world of freelance in 2022.
