Google claims its AI chips are ‘faster, greener’ than Nvidia’s

Google's TPU has already been used to train AI and run data centres, but Google hasn't compared it against Nvidia's H100

Google has announced that its newest chips for training AI models are more powerful and efficient than competing chips from Nvidia, and have been used to train its large language models in a supercomputer.

Its fourth-generation tensor processing unit (TPU) is better equipped for AI training than its predecessors and is up to 1.7 times faster than Nvidia’s competing A100 GPU, the company claimed.

Google outlined the technical details of TPU v4 in a research paper published on Tuesday. It stated that the chip forms the basis of its third supercomputer built specifically for machine learning (ML) models, which contains 4,096 TPUs and delivers ten times the overall performance of previous clusters.

High-performance chips such as the TPU are essential components of supercomputers, which are in particularly high demand right now as the hardware used to train large language models (LLMs) for generative AI.

Google trained PaLM, its 540-billion-parameter LLM, on two TPU v4 supercomputer clusters. PaLM partially forms the basis for Bard, Google's answer to ChatGPT.

Last month, Google also announced that the AI image generation program Midjourney was trained using Google TPUs, GPU VMs, and Google Cloud infrastructure.

The firm highlighted the TPU v4's optical circuit switches, a high-speed, low-power alternative to InfiniBand interconnects that can dynamically reconfigure the supercomputer's topology to fit user needs.


The TPU also uses 1.3 to 1.9 times less power than Nvidia’s A100, a crucial statistic for keeping energy costs low and staying within carbon budgets for data centres and supercomputers.
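To see how the speed and power figures combine, here is a rough back-of-the-envelope sketch. It assumes, which the headline numbers do not guarantee, that the 1.7 times speed-up and the 1.3 to 1.9 times power saving were measured on the same workload, and it treats 1.7 as a best case. The names `SPEEDUP_VS_A100`, `perf_per_watt_ratio` and `power_fraction` are illustrative only and do not come from Google's paper.

```python
# Rough performance-per-watt estimate for TPU v4 relative to Nvidia's A100,
# built only from the ratios quoted in this article.
# Assumption: speed-up and power saving apply to the same workload.

SPEEDUP_VS_A100 = 1.7              # "1.7 times" speed figure, treated as a best case
POWER_SAVING_RANGE = (1.3, 1.9)    # "1.3 to 1.9 times less power"


def perf_per_watt_ratio(speedup: float, power_saving: float) -> float:
    """Return TPU v4 perf/W divided by A100 perf/W.

    perf/W = throughput / power. If TPU throughput is `speedup` times the
    A100's and TPU power is the A100's power divided by `power_saving`,
    the two factors multiply.
    """
    return speedup * power_saving


def power_fraction(power_saving: float) -> float:
    """Read 'k times less power' as: the TPU draws 1/k of the A100's power."""
    return 1.0 / power_saving


if __name__ == "__main__":
    low, high = POWER_SAVING_RANGE
    print(f"TPU v4 power draw: {power_fraction(high):.0%} to "
          f"{power_fraction(low):.0%} of an A100")
    print(f"Perf/W advantage: {perf_per_watt_ratio(SPEEDUP_VS_A100, low):.2f}x "
          f"to {perf_per_watt_ratio(SPEEDUP_VS_A100, high):.2f}x")
```

Run as a script, it reports the TPU drawing roughly 53% to 77% of an A100's power and a performance-per-watt advantage of about 2.21x to 3.23x. Because performance per watt divides throughput by power, the two ratios multiply; if the speed and power figures come from different benchmarks, the real advantage could fall outside this range.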

Amid ongoing energy price volatility, Gartner predicted that data centres could face cost increases of up to 40%, and the UK government reportedly held crisis talks with data centre operators in October over blackout concerns.

Google TPUs are used throughout Google Cloud data centres, and the firm stated that the fourth-generation chips in Google Cloud's warehouse-scale computers use three times less energy and produce 20 times fewer CO2 emissions than contemporary data centres.
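The two Google Cloud ratios also hint at how much cleaner the electricity behind them must be. Under the simplifying assumption that operational emissions equal energy used multiplied by the carbon intensity of that energy, a 20 times cut in emissions alongside a three times cut in energy implies each unit of energy is roughly 20 / 3, or about 6.7, times cleaner. The sketch below works this through; `implied_intensity_ratio` is a hypothetical helper, and the model ignores embodied carbon from manufacturing the hardware.

```python
# Implied carbon-intensity gap behind the Google Cloud figures.
# Assumption (not Google's): operational emissions = energy used x carbon
# intensity of that energy, so the emissions ratio factors into an energy
# ratio and an intensity ratio. Embodied carbon is ignored.

ENERGY_RATIO = 3.0       # "three times less energy"
EMISSIONS_RATIO = 20.0   # "20 times fewer CO2 emissions"


def implied_intensity_ratio(energy_ratio: float, emissions_ratio: float) -> float:
    """Return how many times cleaner each unit of energy must be.

    emissions_ratio = energy_ratio * intensity_ratio, so
    intensity_ratio = emissions_ratio / energy_ratio.
    """
    if energy_ratio <= 0:
        raise ValueError("energy_ratio must be positive")
    return emissions_ratio / energy_ratio


if __name__ == "__main__":
    ratio = implied_intensity_ratio(ENERGY_RATIO, EMISSIONS_RATIO)
    print(f"Implied carbon intensity: about {ratio:.1f}x lower per unit of energy")
```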

Gartner additionally identified sustainability as the key strategic technology trend for 2023, and with this in mind, the TPU v4 could make Google Cloud an attractive proposition for firms looking to train AI with minimal carbon impact.


Absent from the paper was any mention of Nvidia’s H100, the firm’s new AI accelerator of choice announced in March 2022.

Nvidia has stated that the H100 delivers 6.7 times the performance of its predecessors in ML benchmarks, while the A100 has shown a 2.5-times performance improvement by the same measure.

Big tech has flocked to Nvidia for AI hardware, and the GPU giant has even announced that its DGX Cloud supercomputer service will soon be available through Google Cloud.

Nvidia remains one of the most trusted suppliers of powerful, energy-efficient AI hardware, and provided the chips for the Microsoft supercomputer used to train OpenAI’s GPT-4 model.

An Nvidia spokesperson told ITPro that the firm's H100 chip delivers the best performance across categories including natural language understanding, recommender systems, computer vision, speech AI and medical imaging.

“Three years ago when we introduced A100, the AI world was dominated by computer vision. Generative AI has arrived,” said Nvidia founder and CEO Jensen Huang in a blog post.

“This is exactly why we built Hopper, specifically optimized for GPT with the Transformer Engine. Today’s MLPerf 3.0 highlights Hopper delivering 4x more performance than A100.”

This article was updated to include a statement from Nvidia.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

Bigger salaries, more burnout: Is the CISO role in crisis?

Bigger salaries, more burnout: Is the CISO role in crisis?In-depth CISOs are more stressed than ever before – but why is this and what can be done?

By Kate O'Flaherty Published

-

Cheap cyber crime kits can be bought on the dark web for less than $25

Cheap cyber crime kits can be bought on the dark web for less than $25News Research from NordVPN shows phishing kits are now widely available on the dark web and via messaging apps like Telegram, and are often selling for less than $25.

By Emma Woollacott Published

-

£30 million IBM-linked supercomputer centre coming to North West England

£30 million IBM-linked supercomputer centre coming to North West EnglandNews Once operational, the Hartree supercomputer will be available to businesses “of all sizes”

By Ross Kelly Published

-

How quantum computing can fight climate change

How quantum computing can fight climate changeIn-depth Quantum computers could help unpick the challenges of climate change and offer solutions with real impact – but we can’t wait for their arrival

By Nicole Kobie Published

-

“Botched government procurement” leads to £24 million Atos settlement

“Botched government procurement” leads to £24 million Atos settlementNews Labour has accused the Conservative government of using taxpayers’ money to pay for their own mistakes

By Zach Marzouk Published

-

Dell unveils four new PowerEdge servers with AMD EPYC processors

Dell unveils four new PowerEdge servers with AMD EPYC processorsNews The company claimed that customers can expect a 121% performance improvement

By Zach Marzouk Published

-

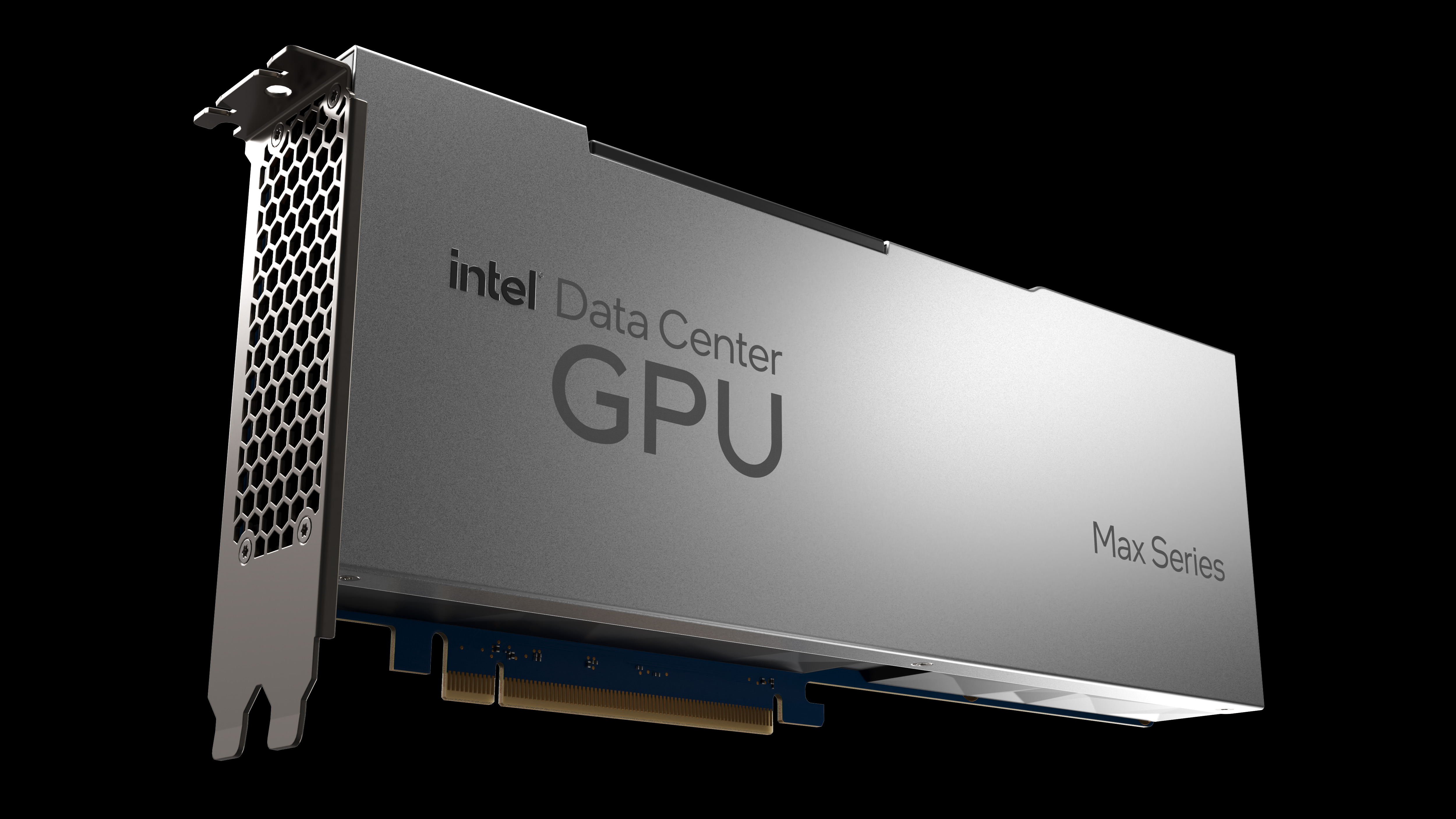

Intel unveils Max Series chip family designed for high performance computing

Intel unveils Max Series chip family designed for high performance computingNews The chip company claims its new CPU offers 4.8x better performance on HPC workloads

By Zach Marzouk Published

-

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversary

Lenovo unveils Infrastructure Solutions V3 portfolio for 30th anniversaryNews Chinese computing giant launches more than 50 new products for ThinkSystem server portfolio

By Bobby Hellard Published

-

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processor

Microchip scoops NASA's $50m contract for high-performance spaceflight computing processorNews The new processor will cater to both space missions and Earth-based applications

By Praharsha Anand Published

-

BAE Systems lands $699 million US army HPC contract

BAE Systems lands $699 million US army HPC contractNews Defense giant will operate and maintain the military’s high performance computing systems until 2027

By Daniel Todd Published