Welcome to ITPro's live coverage of the AMD Advancing AI conference in San Francisco.

It's a chilly morning here in San Francisco, but we're up and raring to go ahead of the conference, which is due to kick off at 9am PST.

We've got a jam-packed agenda ahead of us at the Moscone Center today. Yesterday the assembled press got a sneak peek at the news and announcements we can expect in the opening keynote session, and we can assure you there are going to be plenty of talking points.

In the opening keynote we'll hear from a variety of executives at AMD, including chief executive Lisa Su.

Make sure to keep tabs on our rolling live coverage here throughout the day.

It's clear that AMD has been cooking up a storm so far in 2024 as it primes itself for a battle with Nvidia.

All eyes have been on the latter over the last two years since the emergence of generative AI, but AMD has been putting in the groundwork of late to catch up with its great rival.

Today we'll hear more about how it plans to ramp things up in the year ahead. We've already seen a raft of exciting acquisitions, including two in the last couple of months alone. The biggest was a $4.9 billion deal to acquire ZT Systems, which marked a huge statement of intent from the chip maker.

In July, AMD also announced the $655 million acquisition of Silo AI amid its big AI push.

We've still got a little while until the opening keynote session kicks off, but in the meantime why not check out our preview ahead of the conference for a taste of what we can expect to hear about?

• AMD has put in the groundwork for a major AI push while the tech industry fawned over Nvidia

It's still early, and we're expecting a torrent of people to converge on the Moscone Center this morning, but I have to say the city center in San Francisco has been a breath of fresh air compared with the carnage Dreamforce brought with it just a couple of weeks prior.

No sea of lanyards, and no cacophony of car horns in chaotic traffic.

We're expecting to hear about a big push on AI PCs from AMD today. The rise of the AI PC has been a big talking point over the last year, with manufacturers and chip makers alike ramping up development in this space.

Intel CEO Pat Gelsinger claimed last year that the AI PC would be the "star of the show" in 2024, and he wasn't wrong. This has been a big area of focus for Intel, one of AMD's key competitors in this space.

But what's AMD doing in this regard? Its Ryzen series is a key focus for the company in its big AI PC push, so we'll likely hear more on this during the opening keynote.

There has been some concern from industry stakeholders about the Ryzen series, however.

When AMD unveiled its enterprise-focused Ryzen Pro processors, it promised significant performance gains, but reports in April suggested the chips were unlikely to meet the steep performance threshold Microsoft requires to designate a device as an 'AI PC'.

A key talking point here was that the AI processing power of these chips appeared to fall short of the 40 trillion operations per second (TOPS) standard set by Microsoft.


With this in mind, we could see AMD come out guns blazing with a new update to the Ryzen series.

As we said, there's a real contrast in the number of people here at the Moscone Center today.

We are seated now and good to go. Just a matter of time until we hear from Lisa Su in her opening remarks here at AMD Advancing AI. A good buzz around the keynote hall ahead of things commencing.

And here we go! AMD chief executive Lisa Su is on stage to kick things off at Advancing AI.

"Let's talk a little bit about AI," Su says. "Actually, we're going to talk a lot about it."

Setting the tone here right off the mark.

We're getting a brief rundown of AMD's current strategy.

"It's about employing the entire ecosystem - the cloud, OEMs...our goal is to create an open ecosystem," Su says.

Su says AMD is "really committed" to driving open innovation in high performance computing and AI infrastructure. This has been a recurring talking point in the company's strategy of late - ecosystem building and collaboration.

"When we think about AI, it really is about using the right compute for the right application," Su says.

There's really no 'one-size-fits-all' approach to AI, and AMD is keen to enable enterprises to get those creative juices flowing and adopt the technology on their own terms, at their own pace, and based on their unique needs.

We've moved on to the data center portfolio here. It's a really exciting area for AMD right now, with big gains and big revenue boosts in recent months.

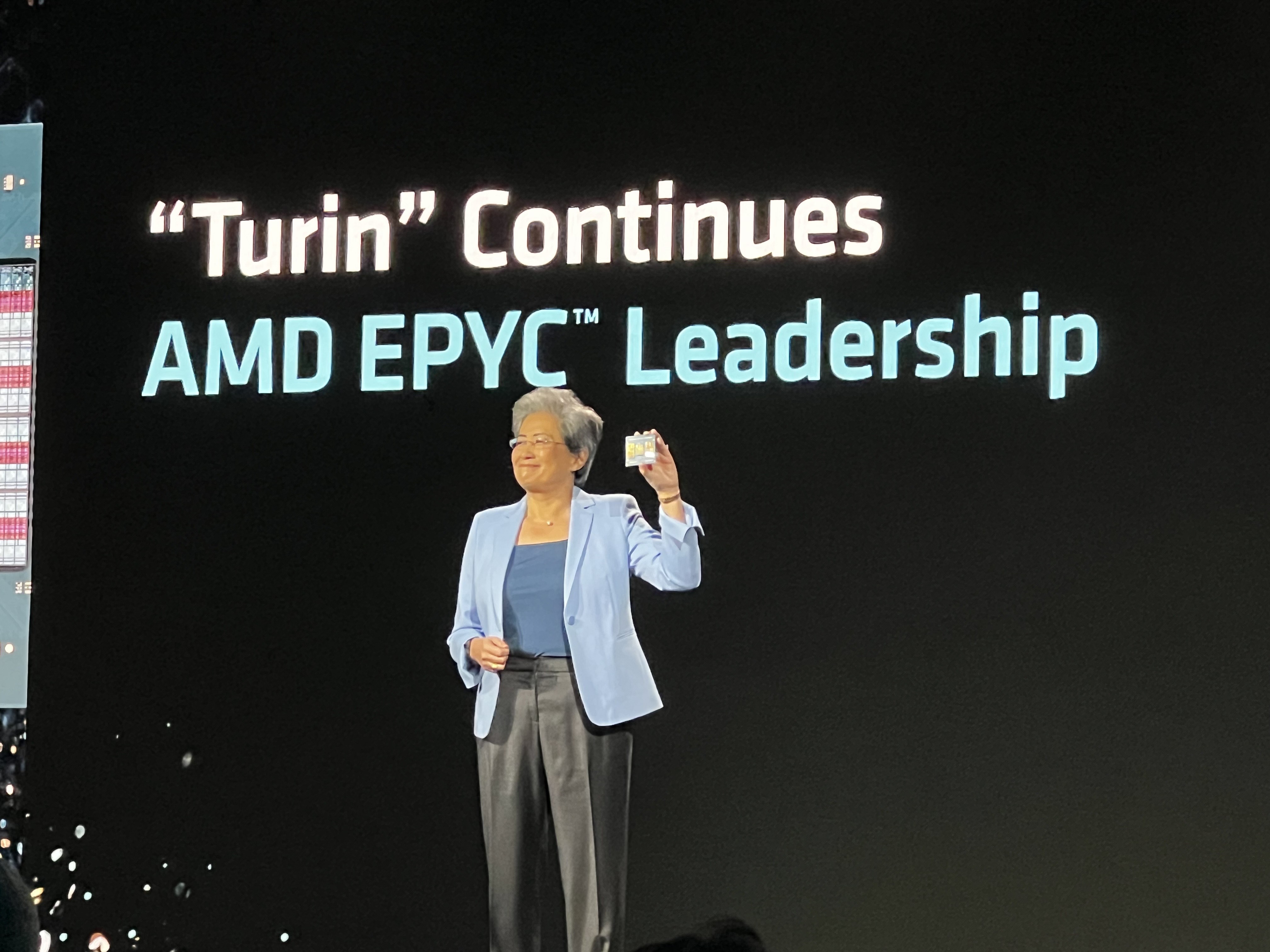

AMD EPYC now boasts a record market share, and it's growing, Su says. AMD exited the second quarter with a 34% revenue share in the server CPU market.

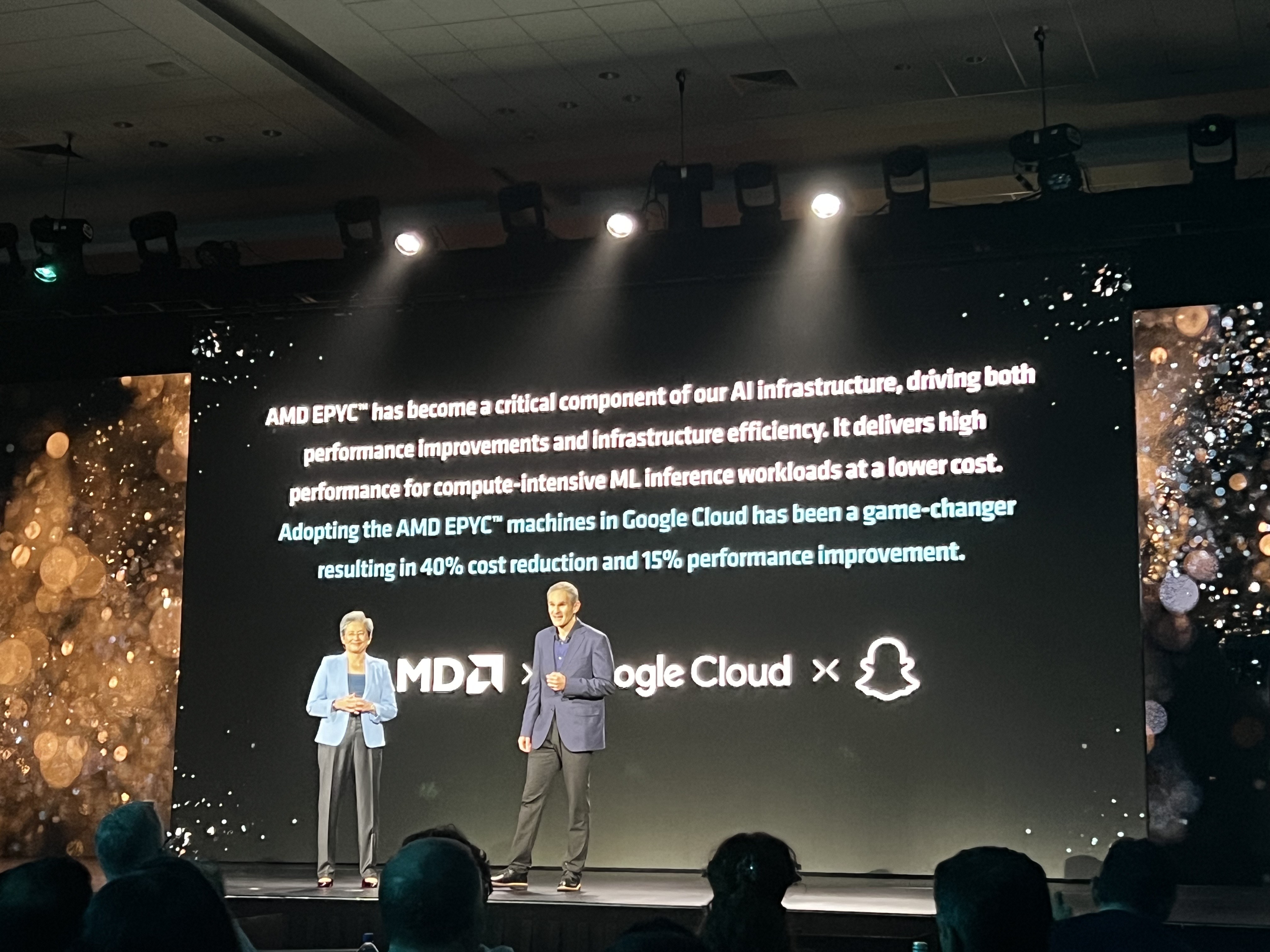

Our first partner on stage now is from Google Cloud.

"The demand for AI compute power is insatiable."

So how's this benefitting Google Cloud? Well, leveraging EPYC machines, the tech giant has cut costs by 40% and delivered a 15% performance improvement.

Big numbers here, and from a financial perspective, very significant for the hyperscaler.

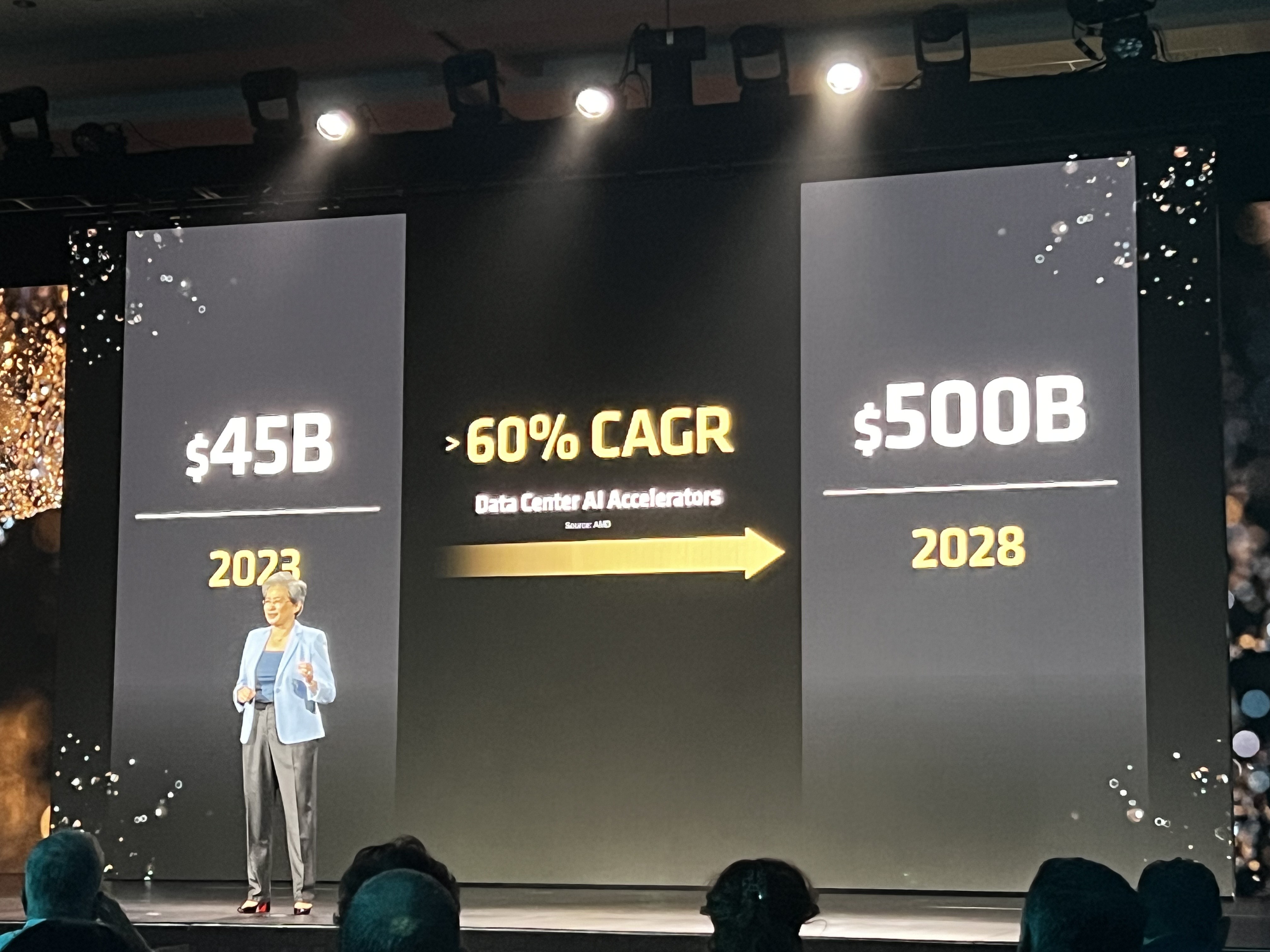

Data center AI demand is absolutely skyrocketing. If you're a regular reader of ITPro then you'll have come across some of the various bits of research we've covered on this subject.

Insatiable demand from enterprises globally is driving this surge, and Su says it represents a massive opportunity for AMD to capitalize on the trend with its Instinct GPU series.

Looking ahead, the numbers involved here are simply mind-boggling.

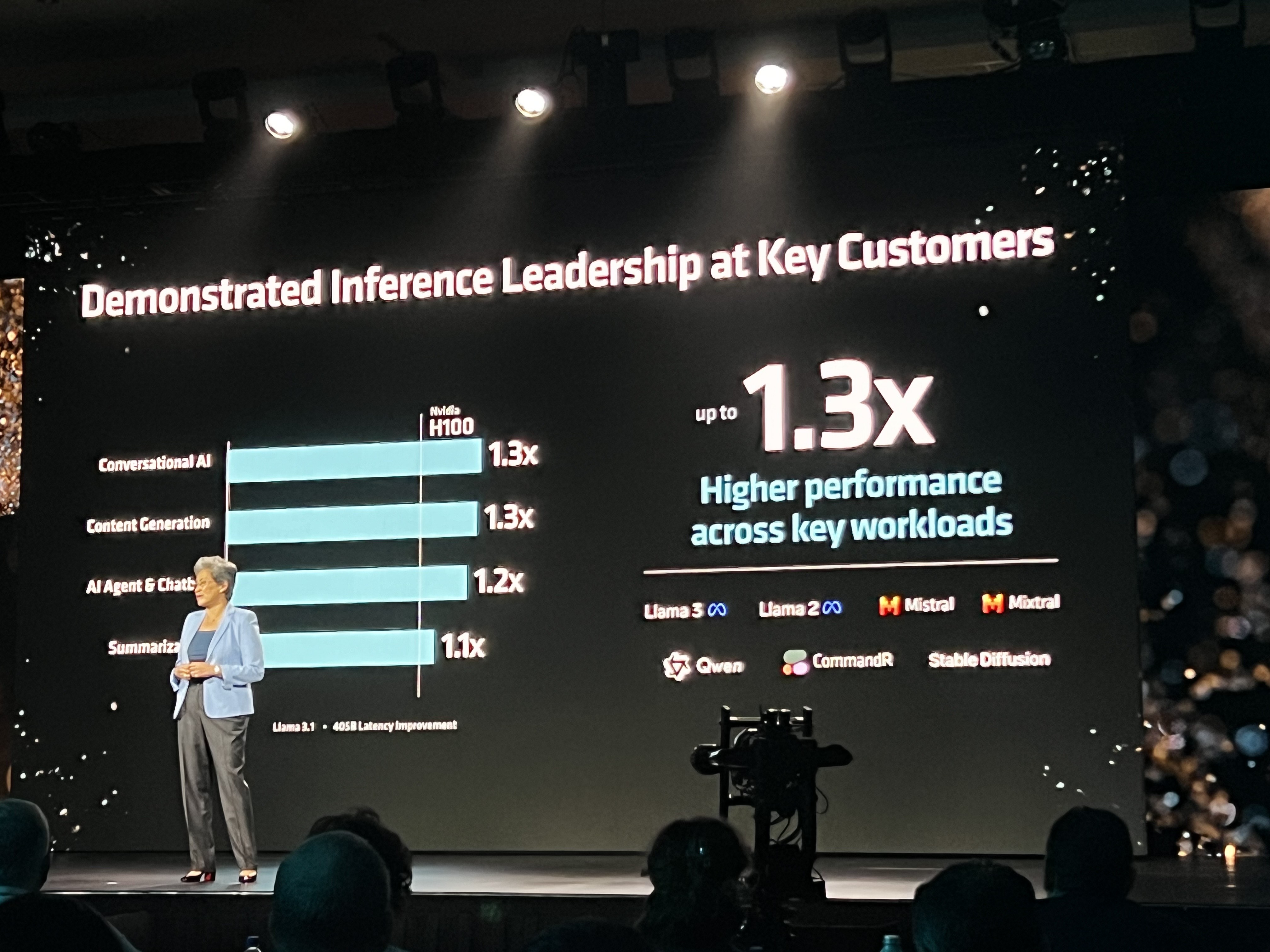

So what's AMD's big selling point here then with regard to GPU capabilities?

The Instinct MI300X series is the chip maker's flagship product here, and AMD claims it far exceeds what competitors can offer in terms of performance.

The MI300X outperforms Nvidia's H100 series on several key benchmarks, Su explains. According to AMD, this amounts to 1.3x higher performance on Llama 3 and Mistral-based workloads.

We now have another partner joining Su up on stage. This time it's Karan Batta, SVP at Oracle.

AI, naturally, is the biggest talking point here. Oracle is seeing "incredible levels" of performance and efficiency on AMD GPUs, Batta says.

The company is continuing to scale up capacity for MI300X series GPUs for customers, Batta says. There's more to come in the year ahead as well.

Rapid-fire customer chats here: Naveen Rao, VP of AI at Databricks, joins Su on stage now to discuss the company's partnership with AMD.

"We've been on this journey for a while," Rao says. "We've achieved remarkable results."

Using the MI300X, the company has achieved marked compute improvements. It's a real "powerhouse" of a GPU, Rao says.

Satya Nadella, Microsoft CEO, joins Su now…just not on stage. Instead, we're getting a video chat in which the duo discuss the pace of change and development in the AI space.

“It’s been incredible, the amount of innovation in the industry,” Su says.

AMD and Microsoft have deep, deep ties. Microsoft is currently working with AMD to create an entirely new PC category: the AI PC.

Over the last four years, Microsoft has been tapping into AMD's AI technology to support its own cloud innovation. It's a mutually beneficial feedback loop that's paying dividends for both organizations.

Microsoft is using the MI300 series, which is delivering significant benefits for key services, including Azure and its wide range of AI tools.

“What we have to deliver is performance, per dollar, per watt,” Nadella says. A big focus on energy efficiency and performance to deliver cost benefits for enterprises.

That’s the one metric that matters, Nadella says. Given the current costs associated with AI development, unlocking these improvements is vital.

AMD really is pulling out the big hitters in terms of brand names here. Oracle, Microsoft, and now Meta.

Su is joined on stage now by Kevin Salvadori, VP for Infrastructure and Engineering at Meta.

Meta's sharpened focus on generative AI over the last two years has been underpinned by AMD Instinct GPUs and EPYC CPUs.

Salvadori admits that Meta is a demanding customer, but AMD has been on hand to meet those expectations amid the company's big AI boom.

"We've deployed over one and a half million CPUs," Salvadori says. Huge numbers and serious scale.

Using the MI300X series in production has been "instrumental" in helping Meta scale its AI infrastructure and power inference. This has been crucial in supporting development of the Llama large language models.

While hardware is important, software is an equally crucial aspect of AMD’s current roadmap and strategy.

AMD platforms are powering some of the most demanding workloads on the planet.

We're getting a rundown on ROCm now, AMD's open software stack that complements its hardware offerings.

“ROCm really delivers for AI developers.”

For AMD, part of this focus is close collaboration with the open source community. The chip maker works closely with PyTorch, for example, as well as Hugging Face and vLLM.

On Hugging Face in particular, more than one million models run on AMD.

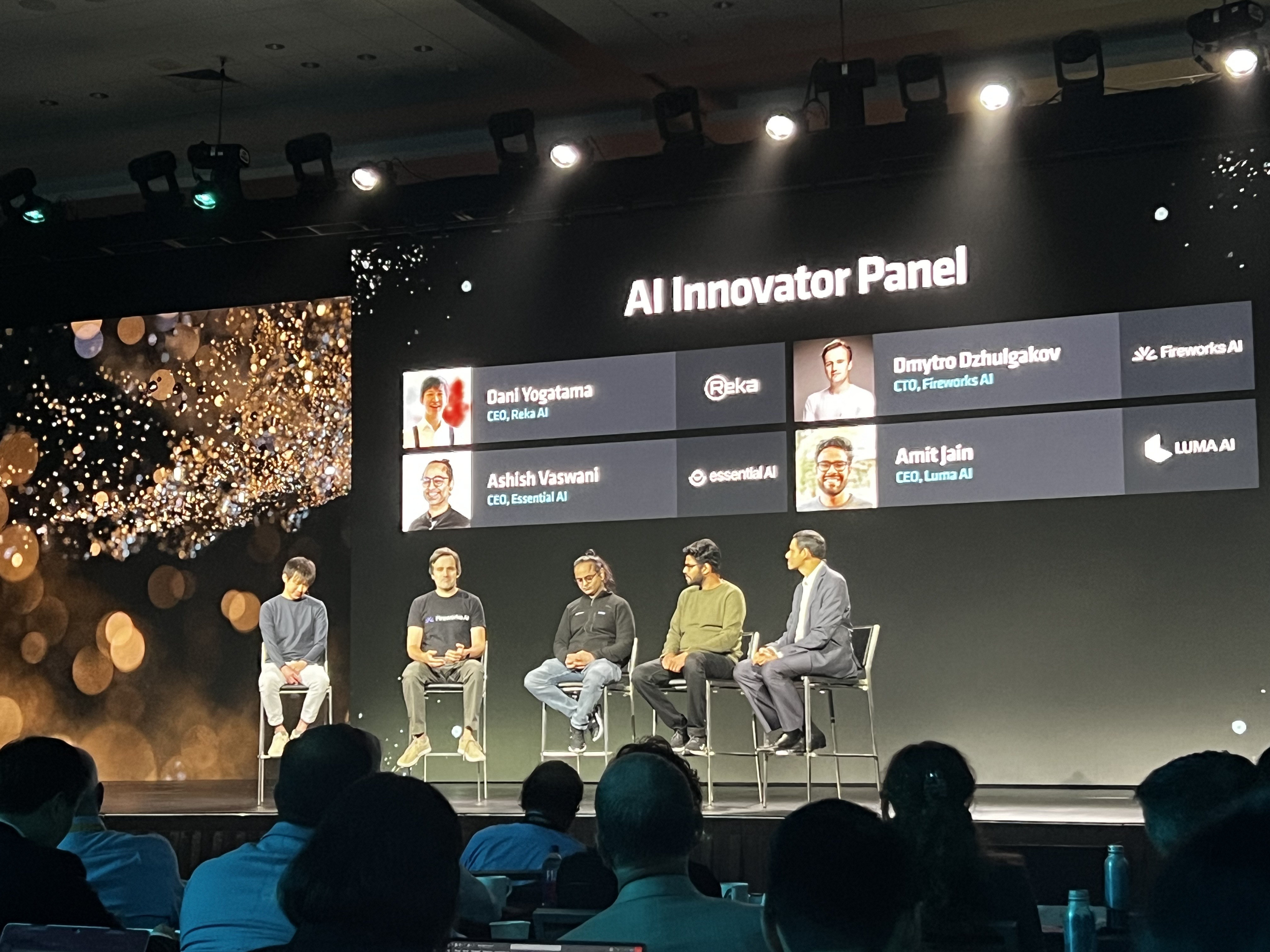

We've got a panel session coming up now with a host of industry stakeholders, including:

• Dani Yogatama, CEO at Reka AI

• Dmytro Dzhulgakov, CTO at Fireworks AI

• Ashish Vaswani, CEO of Essential AI

• Amit Jain, CEO at Luma AI

So how is ROCm helping support development at these trailblazing companies?

The open source approach AMD employs has clearly been highly beneficial to all four organizations. AMD GPUs are also underpinning the models these firms currently have in development.

"ROCm's open source platform really allowed us to move fast," Yogatama says.

Dzhulgakov at Fireworks AI says using the MI300 series has unlocked marked benefits for the company during its development process.

"This basically allows us to deliver really efficient deployment of AI models," he says.

Forrest Norrod, EVP and GM of the AMD data center solutions business unit, takes to the stage now to run us through the goings-on in the firm's data center segment.

This aspect of the business has been booming of late, so a lot to be excited about from AMD's perspective.

It's safe to say AI has changed the game for data center operations, on both the front end and the back end. Networking is also evolving at pace to contend with this new level of scale, Norrod says.

"Networks now must scale efficiently," he says. Poor networking can create major bottlenecks for AI clusters. "AI networking must match the unique challenging characteristics of AI workloads."

Ethernet has traditionally been the answer to this. "Quite simply, from cloud to enterprise, Ethernet is preferred," Norrod says.

But while Ethernet is a clear winner in terms of scale and total cost of ownership (TCO), it has its difficulties and needs to evolve.

General-purpose networks typically operate at under 50% utilization, Norrod says, whereas networks running AI workloads operate at 95% utilization. This underscores the demands AI places on network infrastructure.

Norrod is joined by another customer now, this time from Supermicro.

Vik Malyala, SVP for technology and AI at Supermicro says AMD has enabled the firm to create ‘kick ass’ products for customers.

"By working closely with AMD, one of the things we do is further stretch performance limits," Malyala says. The company uses EPYC CPUs, and Malyala says this has delivered marked efficiency improvements as well as benefits for customers.

That's the hardware side of things, though, Malyala says. The software aspect, as we heard earlier, is equally compelling and highly beneficial.

AMD's open ecosystem has been critical in this regard, Malyala adds.

AMD and Lenovo boast close ties, Norrod says, as he brings out Vlad Rozanovich, SVP at Lenovo's infrastructure solutions group.

"Over the last 15 year, we've actually grown our AMD footprint in that tier 2 cloud service provider by around 50%," Rozanovich says.

Over the last three years they've grown their AMD footprint three times over. Lenovo is well and truly all-in on AMD.

This is helping Lenovo simplify AI for enterprise customers, Rozanovich says, noting that reliability and security are two areas where Lenovo really differentiates itself. Both are big talking points during the AI boom, and AMD is helping underpin this reputation, he adds.

"Lenovo and AMD are really driving AI to provide the best customer experience possible," Rozanovich says.

Lisa Su is back on stage now to round things off. We've heard a lot about data center infrastructure, but now we're going to be looking at AI PCs.

This is an exciting focus area for AMD and there's huge potential in AI PCs both in the consumer and enterprise markets. AMD has invested heavily in NPUs, Su says, and has been innovating aggressively in this space with its Ryzen series.

"When you look at our platforms, they're really built to deliver the best performance, multi-day battery life, security, reliability, all the things we need in enterprises," Su says.

It wouldn't be an AI PC discussion without a mention of Microsoft, one of the leaders in this space with its Copilot+ PC range for Windows.

With that in mind, Su welcomes the final guest to the stage today, Pavan Davaluri, corporate VP for Windows and devices at Microsoft.

With AMD, Copilot+ PCs are going to hit new heights in terms of performance, Davaluri says.

"Speed matters, and Copilot+ PCs are fast," he says. AMD's Ryzen series plays a key role to help Microsoft meet expectations in this regard.

Everything we learned at AMD Advancing AI

And with that the keynote session at AMD Advancing AI is done and dusted. There was a lot to digest there but what was clear is that AMD has a concrete plan of attack to take on Nvidia in the year ahead and beyond.

In a keynote session filled with customer success stories and bold claims, there were a few big talking points with regard to product launches, so here's a rundown of everything we heard:

AMD Instinct GPU updates

The next iteration of AMD's Instinct GPU series, the MI325X, was officially unveiled.

According to AMD, the MI325X accelerators will "deliver leadership AI performance and memory capabilities" for even the most demanding AI workloads. Better still, AMD says they're more than a match for Nvidia's upcoming, hotly anticipated Blackwell chips.

That's not all we heard on the Instinct front, however. Lisa Su gave us a glimpse of the roadmap for future releases, including the MI350 series, which is expected to begin shipping in the second half of 2025.

We even had a glimpse of the MI400 series, which we can expect to see rolling out in 2026.

DPU and NIC updates

We had an update on AMD's DPU and NIC offerings, including the launch of the Pensando Salina DPU alongside the Pensando Pollara 400.

Both are focused on streamlining AI infrastructure and maximizing its performance. According to AMD, they will help enterprise customers "optimize data pipelines" and facilitate better GPU communication for high-performance AI systems.

Ryzen processors are getting a big boost

Toward the end of the keynote session, Lisa Su announced the Ryzen AI Pro 300 series processors.

These will feature AMD 'Zen 5' and XDNA 2 architectures and are aimed specifically at delivering better performance, battery life, and security for Copilot+ laptops used in the enterprise.

We've heard a lot about AI PCs over the last year, and AMD certainly seems bullish on this front for the year ahead.

EPYC processors target big efficiency gains

We've got new EPYC processors on the way, namely the 9005 Series.

Again built on AMD's 'Zen 5' architecture, these new CPUs appear to be a source of great excitement at AMD.

These will help deliver "record-breaking performance" and energy efficiency, according to the chip maker. There's already been some initial adoption, too, with Dell, HPE, Lenovo, and Supermicro all reporting positive results so far.