Meta unveils two new GPU clusters used to train its Llama 3 AI model — and it plans to acquire an extra 350,000 Nvidia H100 GPUs by the end of 2024 to meet development goals

Meta is expanding its GPU infrastructure with the help of Nvidia in a bid to accelerate development of its Llama 3 large language model

Meta has announced two new GPU clusters designed to handle the heavy compute demands of artificial intelligence (AI) systems.

Describing the move as a “major investment in Meta’s AI future,” the firm announced two data center-scale clusters of roughly 24,000 GPUs each, built for higher throughput and reliability on AI workloads.

These GPUs will support both Meta’s current Llama 2 model and its upcoming Llama 3 model, as well as the company’s wider research and development projects across generative AI and other areas.

The firm described the announcement as “one step in our ambitious infrastructure roadmap,” which will see the tech giant acquire 350,000 Nvidia H100 GPUs by the end of 2024.

Meta said the expansion project will deliver a total compute power equivalent to nearly 600,000 H100s upon completion.

“As we look to the future, we recognize that what worked yesterday or today may not be sufficient for tomorrow’s needs,” the firm said in a statement.

“That’s why we are constantly evaluating and improving every aspect of our infrastructure, from the physical and virtual layers to the software layer and beyond.”

How do Meta’s GPUs stack up for large-scale use?

Meta focused on building “end-to-end” AI systems in its latest pair of GPU clusters, emphasizing researcher and developer experience as a means of guiding production.

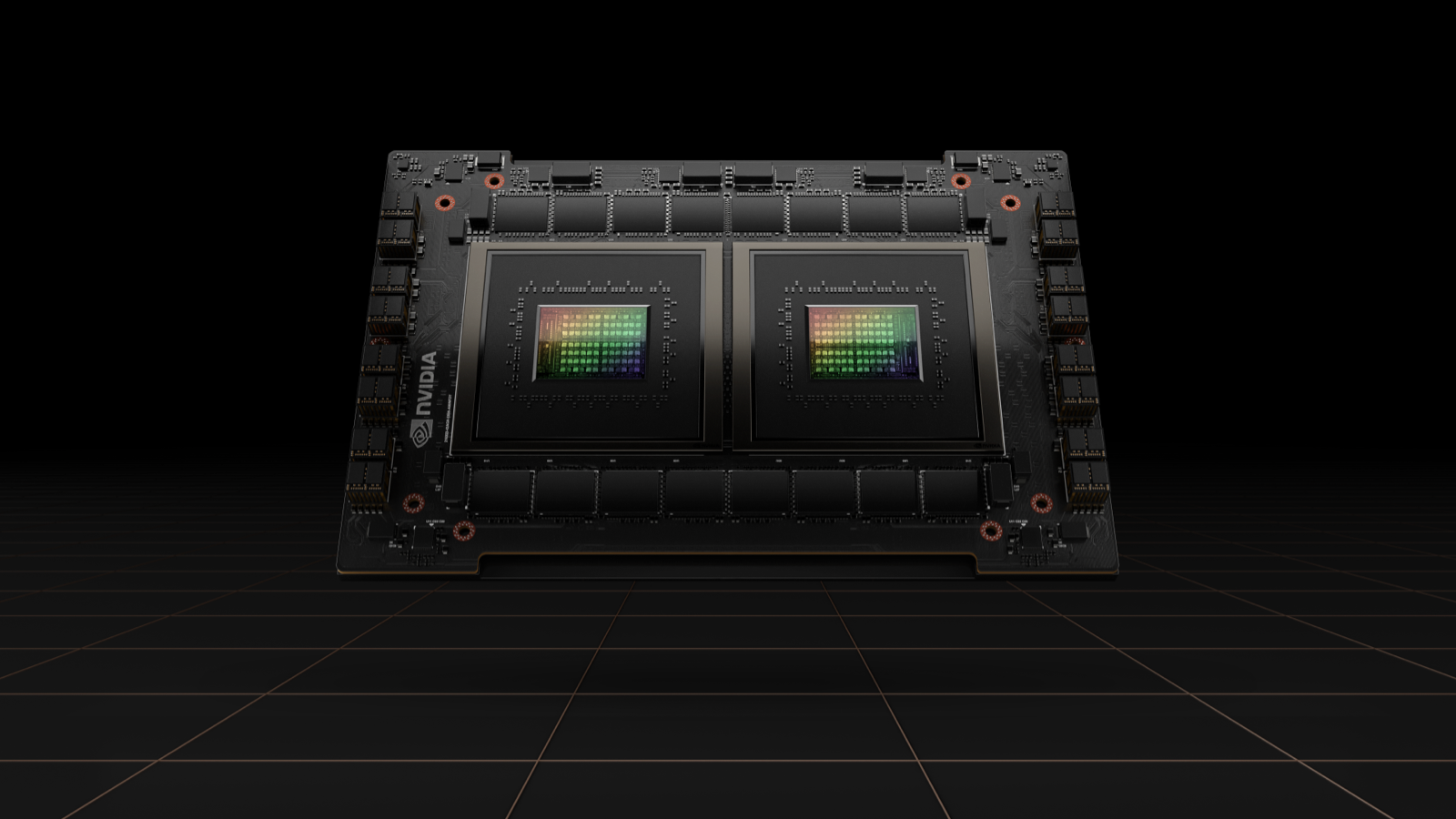

With high-performance network fabrics working alongside 24,576 Nvidia H100 Tensor Core GPUs in each cluster, the new clusters can support “larger and more complex” models than Meta’s earlier AI Research SuperCluster (RSC).
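For a sense of scale, the figures quoted in this article can be combined with some quick arithmetic. The Python sketch below is illustrative only: the GPU counts come from the article, while the figure of eight GPUs per server node is an assumption made for the example and is not stated here.

```python
# Figures quoted in the article.
GPUS_PER_CLUSTER = 24_576   # GPUs in each new cluster
NEW_CLUSTERS = 2            # number of new clusters announced
PLANNED_H100S = 350_000     # H100s Meta plans to acquire by the end of 2024

# Assumption for illustration only (not stated in the article).
ASSUMED_GPUS_PER_NODE = 8


def cluster_summary(gpus_per_cluster, clusters, planned_total, gpus_per_node):
    """Return total GPUs, nodes per cluster, and share of the planned fleet."""
    total_gpus = gpus_per_cluster * clusters
    nodes_per_cluster = gpus_per_cluster // gpus_per_node
    share_of_planned = total_gpus / planned_total
    return total_gpus, nodes_per_cluster, share_of_planned


total_gpus, nodes_per_cluster, share = cluster_summary(
    GPUS_PER_CLUSTER, NEW_CLUSTERS, PLANNED_H100S, ASSUMED_GPUS_PER_NODE
)

# 24,576 is exactly 24 * 1,024, which is where the "24k" shorthand comes from.
is_24k = GPUS_PER_CLUSTER == 24 * 1024

print(f"GPUs across both clusters: {total_gpus:,}")
print(f"Nodes per cluster (assuming 8 GPUs per node): {nodes_per_cluster:,}")
print(f"Share of the planned 350,000 H100s: {share:.1%}")
```

On these numbers, the two clusters together account for 49,152 GPUs, or about 14% of the 350,000 H100s Meta plans to acquire.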

One of the new clusters was built with “remote direct memory access (RDMA) over converged Ethernet (RoCE),” while the other features an “Nvidia Quantum 2 InfiniBand fabric,” both geared towards improved network functionality.

Both clusters were built using Meta’s in-house open GPU hardware platform, Grand Teton, which builds on earlier generations of AI systems that integrate “power, control, compute, and fabric interfaces into a single chassis for better overall performance.”

“Grand Teton allows us to build new clusters in a way that is purpose-built for current and future applications at Meta,” the firm said.

Generative AI also consumes data in huge volumes, the firm said, meaning the next generation of GPU clusters needs to take storage into account.

Meta addresses this in its latest GPU clusters with a “home-grown” Linux storage system, which works alongside a version of Meta’s Tectonic distributed storage solution.

Though Meta reports that there were initial performance issues with these larger clusters, changes to its internal job scheduler helped optimize both GPU clusters to “achieve great and expected performance.”

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

Bigger salaries, more burnout: Is the CISO role in crisis?

Bigger salaries, more burnout: Is the CISO role in crisis?In-depth CISOs are more stressed than ever before – but why is this and what can be done?

By Kate O'Flaherty Published

-

Cheap cyber crime kits can be bought on the dark web for less than $25

Cheap cyber crime kits can be bought on the dark web for less than $25News Research from NordVPN shows phishing kits are now widely available on the dark web and via messaging apps like Telegram, and are often selling for less than $25.

By Emma Woollacott Published

-

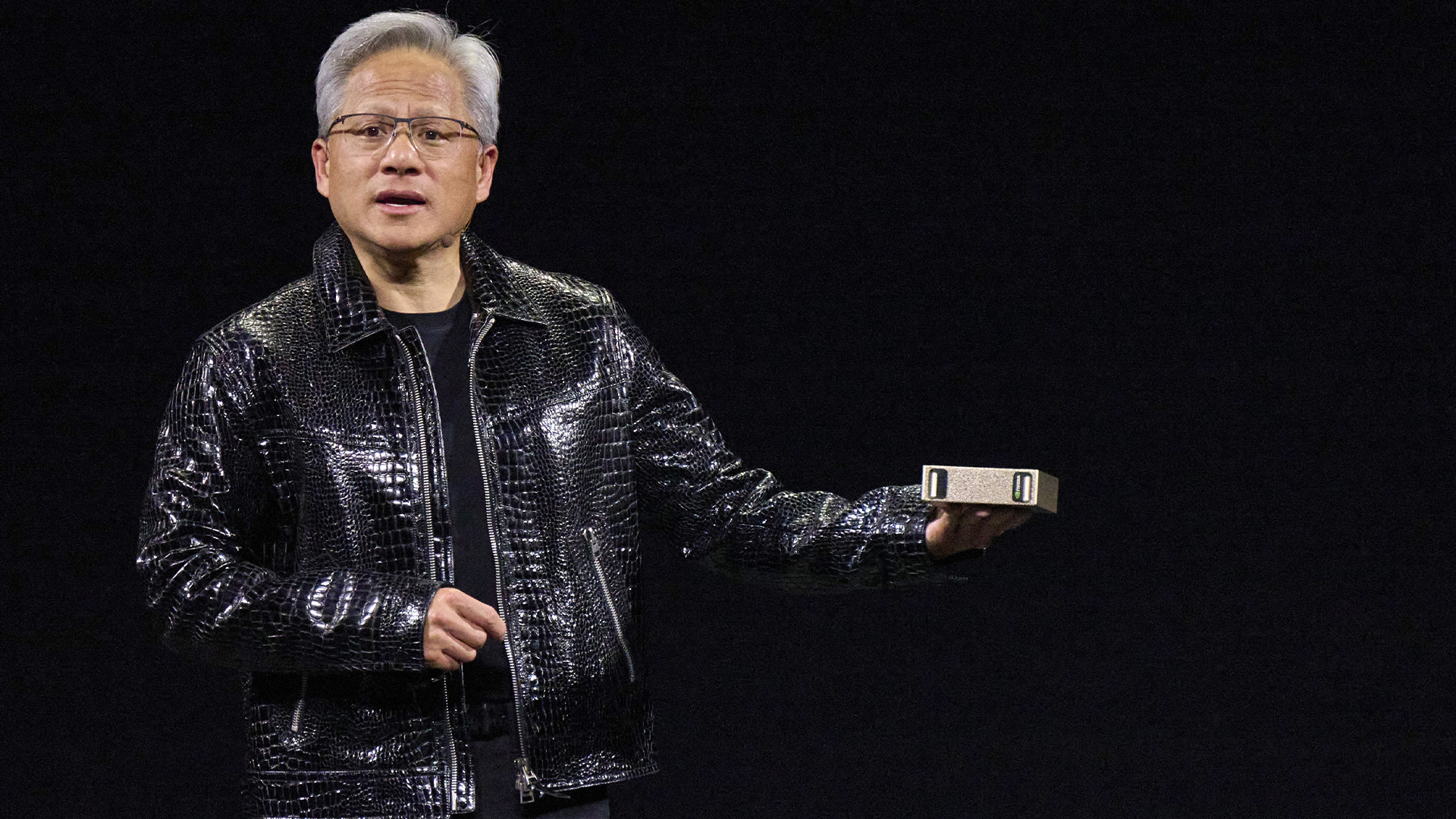

“The Grace Blackwell Superchip comes to millions of developers”: Nvidia's new 'Project Digits' mini PC is an AI developer's dream – but it'll set you back $3,000 a piece to get your hands on one

“The Grace Blackwell Superchip comes to millions of developers”: Nvidia's new 'Project Digits' mini PC is an AI developer's dream – but it'll set you back $3,000 a piece to get your hands on oneNews Nvidia unveiled the launch of a new mini PC, dubbed 'Project Digits', aimed specifically at AI developers during CES 2025.

By Solomon Klappholz Published

-

Intel just won a 15-year legal battle against EU

Intel just won a 15-year legal battle against EUNews Ruled to have engaged in anti-competitive practices back in 2009, Intel has finally succeeded in overturning a record fine

By Emma Woollacott Published

-

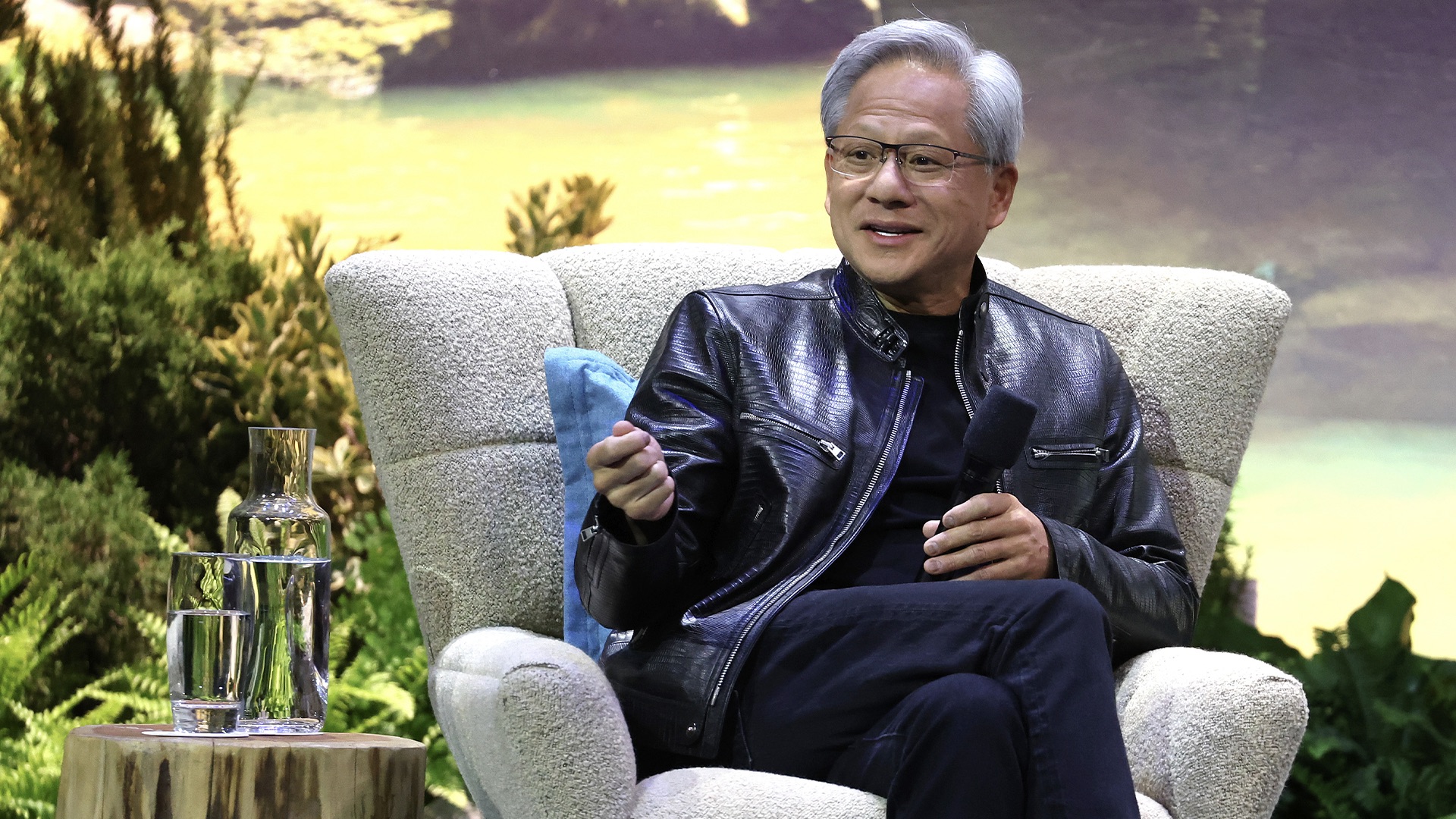

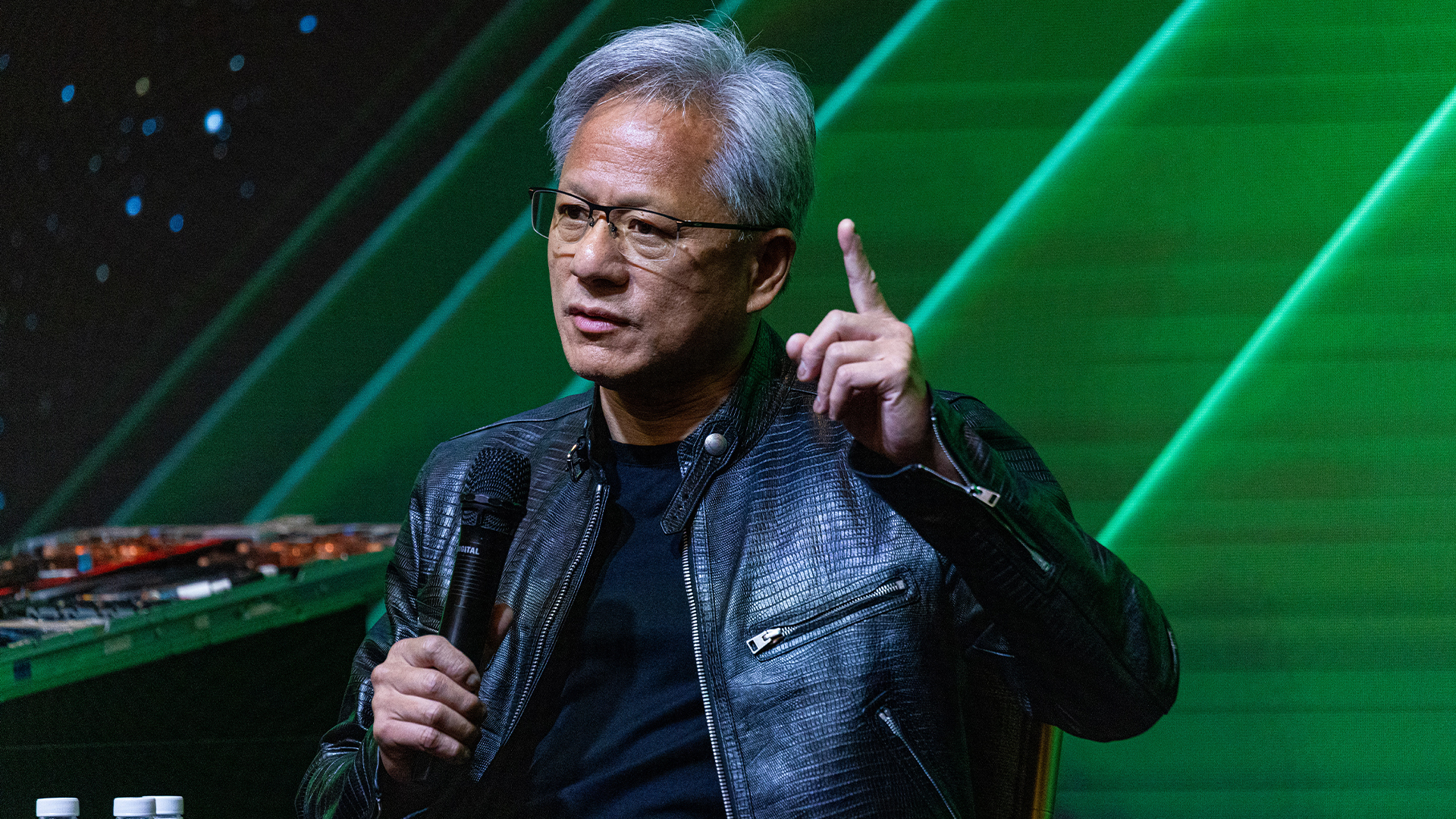

Jensen Huang just issued a big update on Nvidia's Blackwell chip flaws

Jensen Huang just issued a big update on Nvidia's Blackwell chip flawsNews Nvidia CEO Jensen Huang has confirmed that a design flaw that was impacting the expected yields from its Blackwell AI GPUs has been addressed.

By Solomon Klappholz Published

-

How Nvidia took the world by storm

How Nvidia took the world by stormAnalysis Riding the AI wave has turned Nvidia into a technology industry behemoth

By Steve Ranger Last updated

-

TD Synnex buoyed by UK distribution deal with Nvidia

TD Synnex buoyed by UK distribution deal with NvidiaNews New distribution agreement covers the full range of Nvidia enterprise software and accelerated computing products

By Daniel Todd Published

-

PCI consortium implies Nvidia at fault for its melting cables

PCI consortium implies Nvidia at fault for its melting cablesNews Nvidia said the issues were caused by user error but the PCI-SIG pointed to possible design flaws

By Rory Bathgate Published

-

Nvidia announces Arm-based Grace CPU "Superchip"

Nvidia announces Arm-based Grace CPU "Superchip"News The 144-core processor is destined for AI infrastructure and high performance computing

By Zach Marzouk Published

-

Nvidia warns rivals are exploiting uncertainty surrounding Arm’s future

Nvidia warns rivals are exploiting uncertainty surrounding Arm’s futureNews The company claims Intel and AMD have been getting ahead due to the drawn-out regulatory process

By Zach Marzouk Published