May's anti-internet response to terror called "embarrassing"

PM Theresa May has said the internet gives extremists a "safe space to breed"

Prime Minister Theresa May has used the terror attack in London over the weekend to take another swing at introducing backdoors to encryption, a common pattern that has brought out the usual criticism from the tech industry, with critics calling her response "lazy," "embarrassing" and "disappointing".

After the terror attack at London Bridge on Saturday evening, May once again called for Silicon Valley giants to do more against extremism.

She said: "We cannot allow this ideology the safe space it needs to breed. Yet that is precisely what the internet and the big companies that provide internet-based services provide. We need to work with allied, democratic governments to reach international agreements that regulate cyberspace to prevent the spread of extremism and terrorist planning. And we need to do everything we can at home to reduce the risks of extremism online."

Her comment touched on two points: first, the removal of extremist propaganda spread via social media; and second, the use of encrypted messaging tools for "planning" such attacks.

May and her government have made similar calls after the other two terror incidents in the country this year. After the Westminster attack, Home Secretary Amber Rudd said: "We need to make sure that organisations like WhatsApp, and there are plenty of others like that, don't provide a secret place for terrorists to communicate with each other". After the Manchester bombing, reports suggested the government planned to start enforcing aspects of the new Investigatory Powers Act that would force online companies to hand over user data via encryption backdoors, assuming the party is re-elected, that is.

Repeated encryption criticism

May's rhetoric was, once again, criticised. The repetition is "embarrassing," Paul Bernal of the University of East Anglia said on Twitter. "The knee-jerk 'blame the internet' that comes after every act of terrorism is so blatant as to be embarrassing," he said.

Professor Peter Neumann, director of the International Centre For The Study Of Radicalisation at King's College London, said May's response was "lazy". "Most jihadists are now using end-to-end encrypted messenger platforms e.g. Telegram. This has not solved [the] problem, just made it different," he said on Twitter. "Moreover, few people radicalised exclusively online. Blaming social media platforms is politically convenient but intellectually lazy."

He added: "In other words, May's statement may have sounded strong but contained very little that is actionable, different, or new."

Jim Killock, director of the Open Rights Group, said May's response was "disappointing".

"This could be a very risky approach," he said. "If successful, Theresa May could push these vile networks into even darker corners of the web, where they will be even harder to observe."

"But we should not be distracted: the internet and companies like Facebook are not a cause of this hatred and violence, but tools that can be abused," Killock added. "While governments and companies should take sensible measures to stop abuse, attempts to control the internet is not the simple solution that Theresa May is claiming."

Cory Doctorow, author and internet pundit, cited internet activist Aaron Swartz's call that it's "not okay to not understand the internet anymore". Doctorow said in a blog post: "That goes double for cryptography: any politician caught spouting off about back doors is unfit for office anywhere but Hogwarts, which is also the only educational institution whose computer science department believes in 'golden keys' that only let the right sort of people break your encryption."

Tech response

Leading tech firms stressed they were doing all they could to remove extremist content from their platforms, with Google saying it had spent hundreds of millions of pounds doing so.

Facebook told the BBC: "Using a combination of technology and human review, we work aggressively to remove terrorist content from our platform as soon as we become aware of it - and if we become aware of an emergency involving imminent harm to someone's safety, we notify law enforcement."

Twitter echoed that, saying "terrorist content has no place" on its site, adding that it is working to expand its use of technology to automate the removal of such content.

However, online firms have been repeatedly caught out with extremist content on their sites, with the government pulling its own advertising after it was spotted running alongside hate-promoting videos on YouTube, and a government report slamming tech companies for "profiting from hatred". Of course, such content can be removed without tech firms needing to meddle with encryption.

Freelance journalist Nicole Kobie first started writing for ITPro in 2007, with bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.

-

Should AI PCs be part of your next hardware refresh?

Should AI PCs be part of your next hardware refresh?AI PCs are fast becoming a business staple and a surefire way to future-proof your business

By Bobby Hellard

-

Westcon-Comstor and Vectra AI launch brace of new channel initiatives

Westcon-Comstor and Vectra AI launch brace of new channel initiativesNews Westcon-Comstor and Vectra AI have announced the launch of two new channel growth initiatives focused on the managed security service provider (MSSP) space and AWS Marketplace.

By Daniel Todd

-

UK financial services firms are scrambling to comply with DORA regulations

UK financial services firms are scrambling to comply with DORA regulationsNews Lack of prioritization and tight implementation schedules mean many aren’t compliant

By Emma Woollacott

-

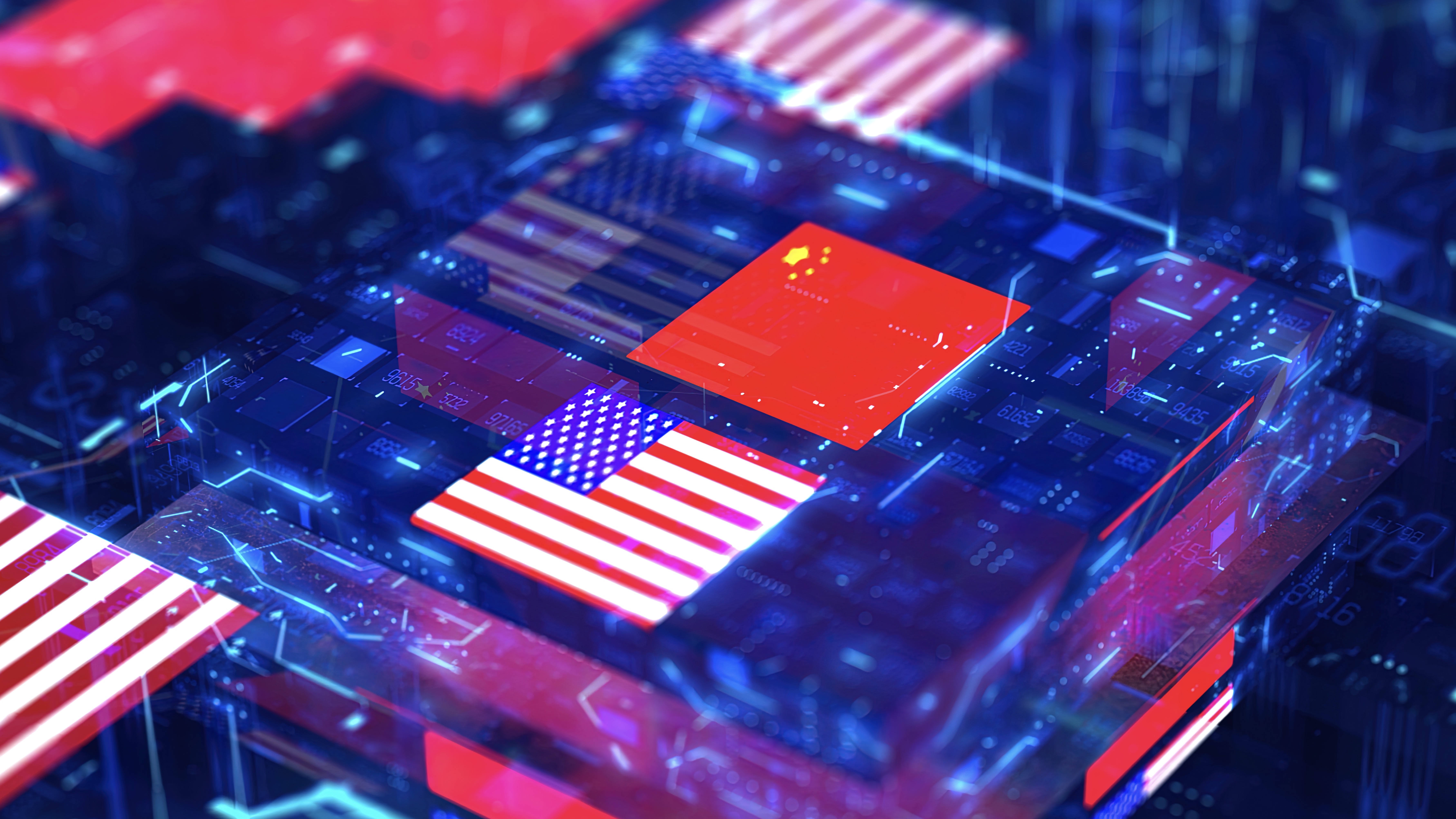

What the US-China chip war means for the tech industry

What the US-China chip war means for the tech industryIn-depth With China and the West at loggerheads over semiconductors, how will this conflict reshape the tech supply chain?

By James O'Malley

-

Former TSB CIO fined £81,000 for botched IT migration

Former TSB CIO fined £81,000 for botched IT migrationNews It’s the first penalty imposed on an individual involved in the infamous migration project

By Ross Kelly

-

Microsoft, AWS face CMA probe amid competition concerns

Microsoft, AWS face CMA probe amid competition concernsNews UK businesses could face higher fees and limited options due to hyperscaler dominance of the cloud market

By Ross Kelly

-

Online Safety Bill: Why is Ofcom being thrown under the bus?

Online Safety Bill: Why is Ofcom being thrown under the bus?Opinion The UK government has handed Ofcom an impossible mission, with the thinly spread regulator being set up to fail

By Barry Collins

-

Can regulation shape cryptocurrencies into useful business assets?

Can regulation shape cryptocurrencies into useful business assets?In-depth Although the likes of Bitcoin may never stabilise, legitimising the crypto market could, in turn, pave the way for more widespread blockchain adoption

By Elliot Mulley-Goodbarne

-

UK gov urged to ease "tremendous" and 'unfair' costs placed on mobile network operators

UK gov urged to ease "tremendous" and 'unfair' costs placed on mobile network operatorsNews Annual licence fees, Huawei removal costs, and social media network usage were all highlighted as detrimental to telco success

By Rory Bathgate

-

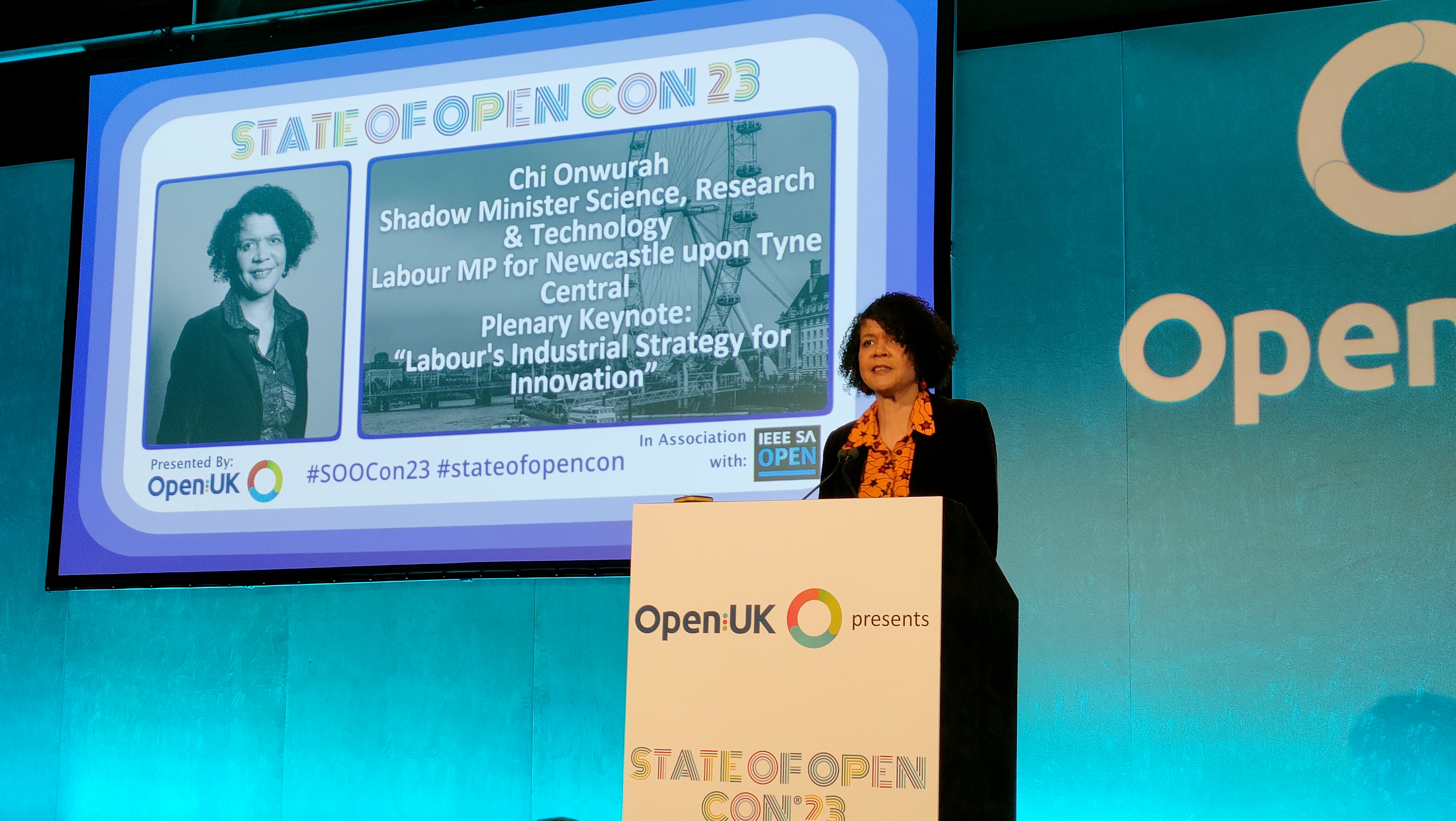

Labour plans overhaul of government's 'anti-innovation' approach to tech regulation

Labour plans overhaul of government's 'anti-innovation' approach to tech regulationNews Labour's shadow innovation minister blasts successive governments' "wholly inadequate" and "wrong-headed" approach to regulation

By Keumars Afifi-Sabet