Nvidia looks to transform machine learning with DGX-2 and Rapids

Nvidia’s Rapids platform slashes compute times from hours to seconds

Nvidia is no stranger to data-crunching applications of its GPU architecture. It has dominated the AI deep learning development space for years and has sat rather comfortably in the scientific computing sphere too.

But now it's looking to take on the field of machine learning, a market that accounts for over half of all data projects being undertaken in the world right now.

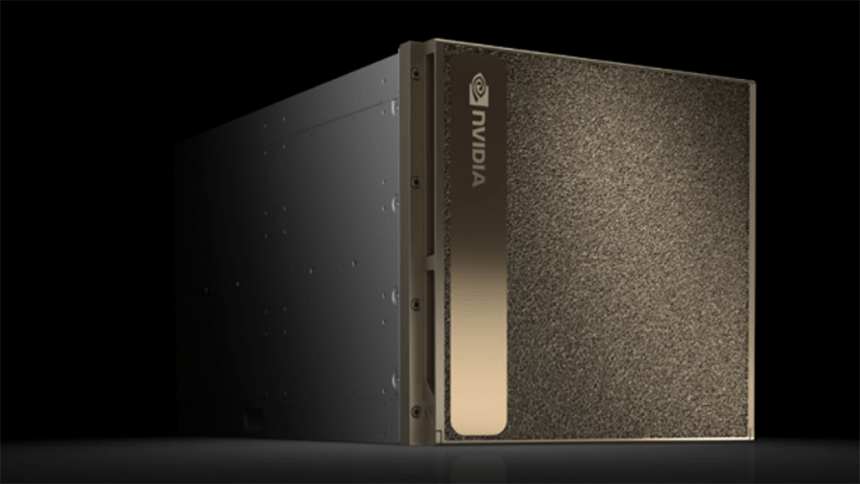

To do so, it has launched dedicated machine learning hardware in the form of the DGX-2, alongside a new open-source platform, Rapids. Designed to work together as a complete end-to-end solution, Nvidia's GPU-powered machine learning platform is set to change how institutions and businesses crunch and understand their data.

"Data analytics and machine learning are the largest segments of the high-performance computing market that have not been accelerated," explained Jensen Huang, founder and CEO of Nvidia, on stage at GTC Europe 2018.

"The world's largest industries run algorithms written by machine learning on a sea of servers to sense complex patterns in their market and environment, and make fast, accurate predictions that directly impact their bottom line."

Traditionally, machine learning has taken place almost entirely on CPU-based systems, thanks to their ubiquity and the steady performance gains delivered by Moore's Law.

Now that Moore's Law has come to an end, data scientists need more powerful hardware for their number-crunching. According to analysts, it's a market worth a projected $36 billion (£26.3 billion) per year, so it's no wonder Nvidia wants a slice of the pie and a chance to advance the machine learning field with its own products.


"Building on CUDA and its global ecosystem, and working closely with the open-source community, we have created the Rapids GPU-acceleration platform," said Huang. "It integrates seamlessly into the world's most popular data science libraries and workflows to speed up machine learning.

"We are turbocharging machine learning like we have done with deep learning."

Without going into all the technical gubbins behind it (and trust me, there's a lot of it), it's quite clear just how much faster Nvidia's GPU systems are than traditional CPU-based machine learning.

In one example presented live on stage, Nvidia's platform crunched through 16 years' worth of mortgage and loan data from a major mortgage broker in roughly 40 seconds. On a traditional Python-based CPU system, that would take around two minutes.

A more advanced task, building a model that learns to predict behaviour, such as how likely someone is to default on a loan given their history, takes a little longer. On a traditional 100-CPU system it would take around half an hour, and even longer on a 20-CPU array. On Nvidia's DGX-2 system with Rapids, it runs in about the two minutes it took Huang to explain what we were seeing happen.
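To put the on-stage figures in perspective, the short calculation below turns them into rough speedup factors. It is a back-of-the-envelope sketch that takes the quoted approximate times at face value (about 40 seconds versus about two minutes for the mortgage data crunch, and about half an hour on 100 CPUs versus about two minutes for the loan-default model); the `speedup` helper is our own illustration, not part of Rapids.

```python
def speedup(baseline_seconds: float, accelerated_seconds: float) -> float:
    """Return how many times faster the accelerated run is than the baseline."""
    if accelerated_seconds <= 0:
        raise ValueError("accelerated time must be positive")
    return baseline_seconds / accelerated_seconds


# Approximate timings quoted in the on-stage demo, taken at face value.
data_crunch = speedup(baseline_seconds=2 * 60, accelerated_seconds=40)
model_build = speedup(baseline_seconds=30 * 60, accelerated_seconds=2 * 60)

print(f"Mortgage data crunch: ~{data_crunch:.0f}x faster")
print(f"Loan-default model vs 100 CPUs: ~{model_build:.0f}x faster")
```

On those rounded numbers, the data crunch works out at roughly 3x faster and the model-building run at roughly 15x faster than the 100-CPU system; the gap against the 20-CPU array would be larger still.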

This, obviously, translates into time savings for data scientists and speeds up learning for businesses. As Huang also points out, one DGX-2 can handle the same workload as 300 servers. Those servers could set a company back over $3 million to install; spend the same amount on DGX-2 systems running Rapids and a company simply wouldn't know what to do with all that computational power.
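The cost comparison can be reduced to a single division. This sketch only uses the two figures quoted above (300 servers, over $3 million) and treats $3 million as a lower bound; the variable names are illustrative.

```python
# Figures quoted in the article: one DGX-2 matches roughly 300 servers,
# and installing those servers could cost over $3 million.
SERVERS_REPLACED = 300
SERVER_FLEET_COST_USD = 3_000_000  # lower bound ("over $3 million")

# Implied minimum cost per conventional server in that comparison.
cost_per_server = SERVER_FLEET_COST_USD / SERVERS_REPLACED
print(f"Implied cost per server: at least ${cost_per_server:,.0f}")
```

In other words, Huang's comparison implies each of those conventional servers costs at least around $10,000 to install.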

It's not all hot air, either: major companies have already come out in support of Nvidia's GPU-accelerated machine learning platform. Walmart has started using it to help with stock management and forecasting, so that it can drastically reduce food waste across its stores.

In the technology sphere, HPE has partnered with Nvidia to improve the machine learning offerings it provides to its customers; IBM is looking to use the platform with its Watson AI and a variety of other machine learning programs it runs; and Oracle is using Nvidia's open-source Rapids platform and a suite of DGX-2s to power insights on its Oracle Cloud infrastructure.

Ultimately, the idea is for Rapids and DGX-2 to open up the opportunities of machine learning to new markets and businesses. Companies that lack the budgets or capabilities to run racks of servers or employ reams of data scientists could now feasibly do so. It's a technology that, while not inherently sexy, could have a huge impact on how businesses run in the future.

Vaughn Highfield is a seasoned freelance writer with more than 10 years' experience in content strategy and technology journalism.

Vaughn is a self-described ‘wordsmith and UX wizard’, covering topics spanning cyber security, cryptocurrency, financial technology, and skills development.

From 2015 to 2018, he served as a senior staff writer at Alphr before assuming the role of associate editor. In his role as associate editor, Vaughn was responsible for a range of duties, including the publication’s long-term content strategy, events coverage, editorial commissions, and curation of the Alphr newsletter.

Prior to this, Vaughn held in-house roles at PCPro and Terrapinn Digital in addition to freelance marketing and content strategy activities with The Gamers Hub and Magdala Media.