Automating the end of discrimination

Tech tools such as AI and bots can help improve equality, but only if we humans build and use them the right way

AI and automation stand accused of embedding existing biases and furthering discrimination - but it doesn't have to be that way. We can build machines that make us better, helping us recognise and push back against our assumptions, confirmation bias, and other flaws that lead to discrimination.

That's the idea behind a multitude of HR and work-themed bots and AI systems, all hoping to machine discrimination out of existence and encourage diversity at work. One prominent example has been the Financial Times' sourcing bot, which skims through journalists' copy, making note of the gender of people mentioned, helping the newspaper track its own tendency to interview and feature men rather than women.

In recruitment, LinkedIn has added diversity data to its recruitment tools, while startup Textio will read your job ads and advise changes to encourage a wider range of applicants.

"Recruitment and HR is our fastest growing area, where HR directors are coming to us saying we want to build unbiased systems," said Tabitha Goldstaub, co-founder of AI advice platform CognitionX.

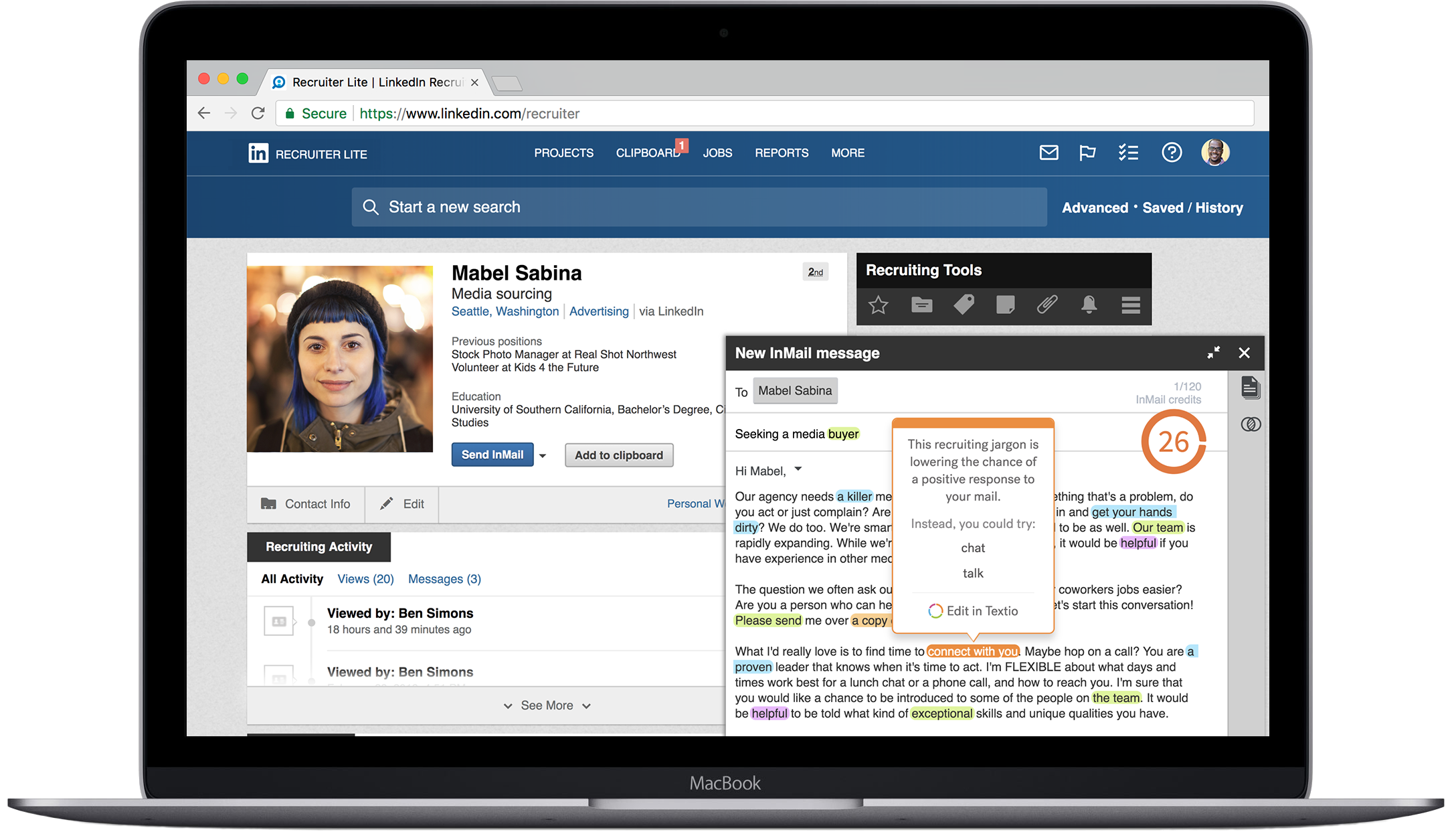

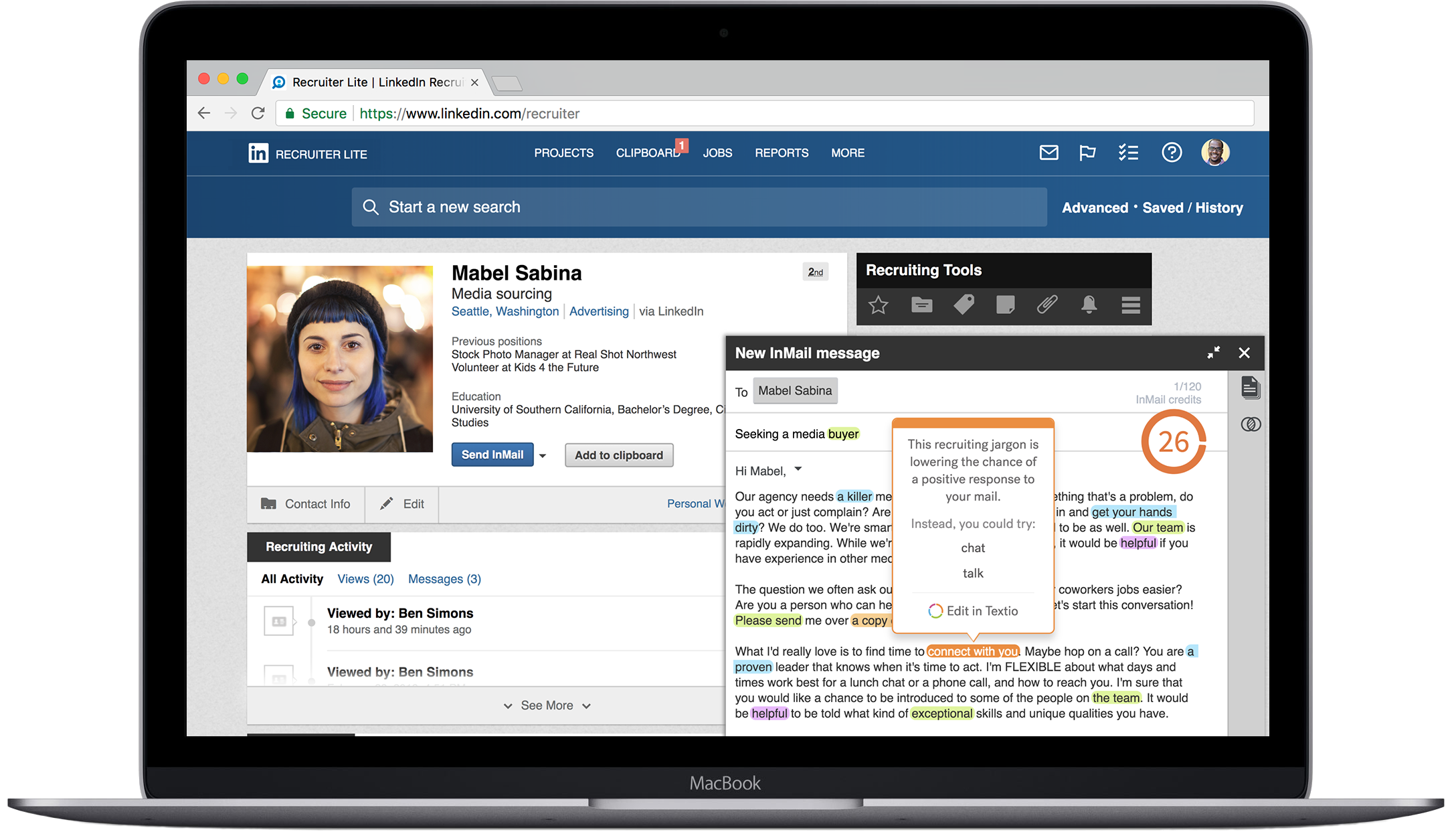

Like tools such as Grammarly, Textio pop-ups help improve the language recruiters use

Diversity at work has both a moral impetus and legal ramifications: discrimination on the grounds of gender, race or religion is already illegal in the UK. However, companies are starting to realise that choosing from a wider range of applicants and creating an inclusive workplace can be good for profit margins, too.

Indeed, consultancy McKinsey & Co analysed more than 1,000 companies across a dozen countries and found that those with the most diverse staff were more likely to achieve higher-than-average profits.


No wonder then that building diverse and inclusive teams is the number one talent priority for HR departments, according to a LinkedIn report on recruiting trends, with 78% of respondents saying it was "very or extremely important" to their hiring plans. Why? They think it's good for business and creates a better working culture. (Rarely is it listed as being "the right thing to do" or "a legal requirement", but we'll take what we can get.)

Tracking talent

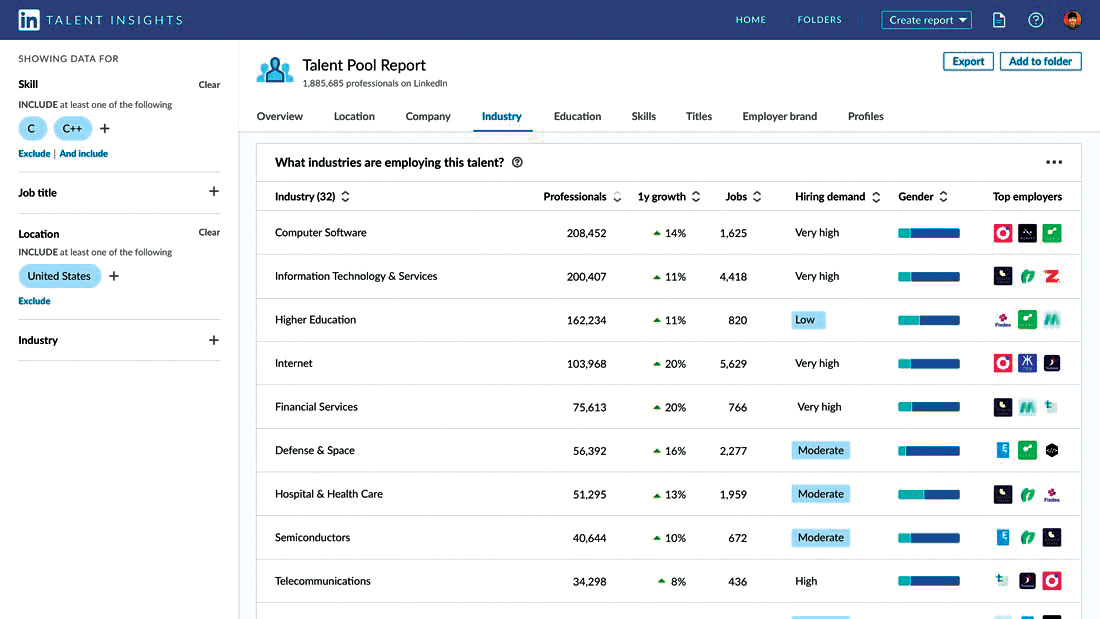

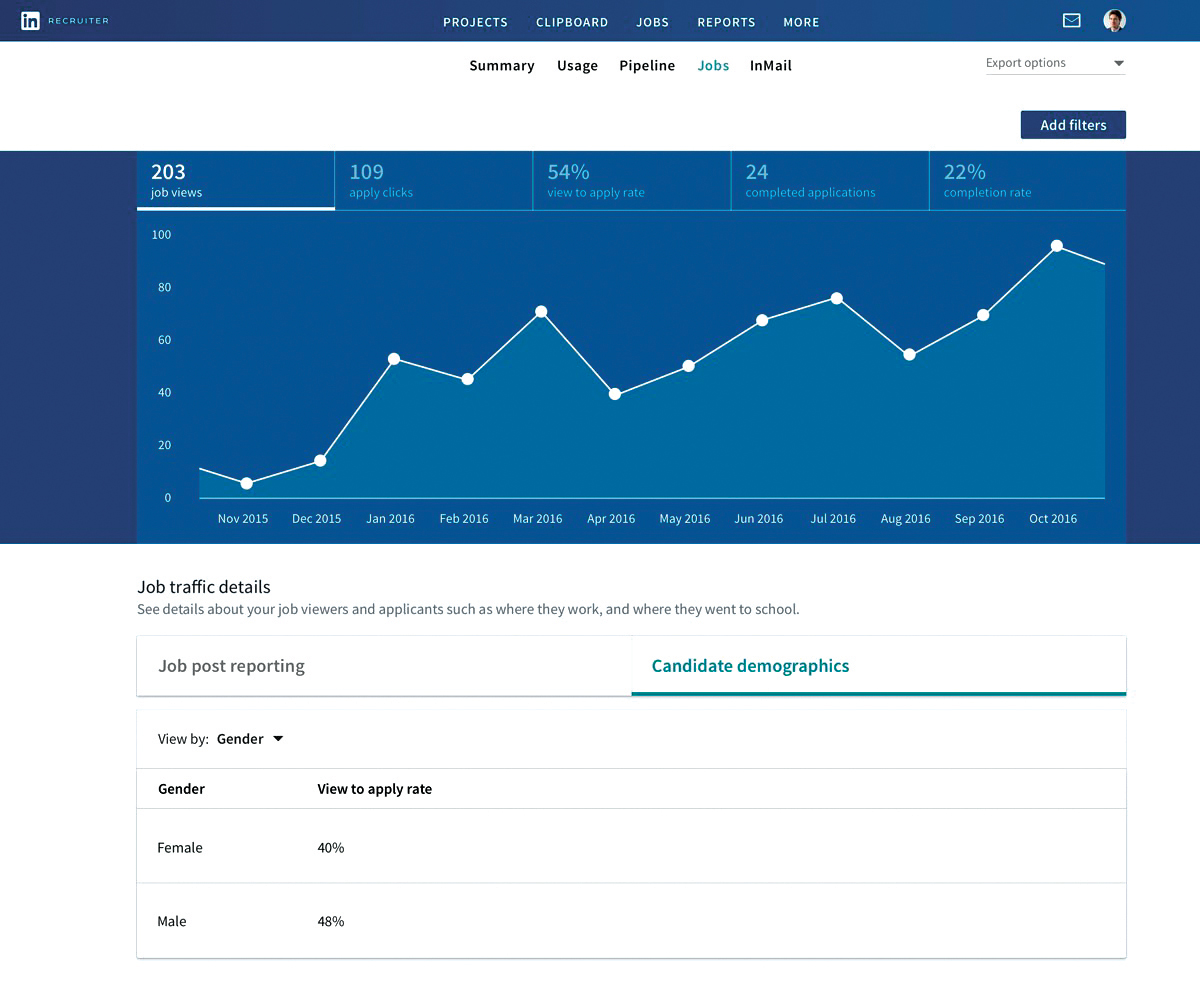

Tracking diversity is a challenge: 42% of HR departments said in the LinkedIn survey that they lack the data quality necessary to address such challenges. With that in mind, the professional social network has added gender-tracking tools to its Talent Insights platform, a data reporting tool that helps recruiters.

"When it comes to gender diversity, using tools such as LinkedIn Talent Insights will help organisations understand their own overall workforce composition, as well as within different functions, and see how they benchmark against the industry at large and spot areas of opportunities to address," said Jerome Leclercq, senior manager of product marketing at LinkedIn UK. "This avoids manual processes that deliver insights that is often outdated by the time you get it in your hands."

LinkedIn's Talent Pool Report helps recruiters access more diverse talent pools by including gender insights

In LinkedIn Talent Insights, companies will be able to see the gender representation of their own workforce, compare it with industry benchmarks, set recruiting objectives, and discover where to find more diverse applicants for roles - more colloquially, where the ladies are.

"The LinkedIn Talent Insights Talent Pool Report now includes gender insights to help talent teams identify industries, locations, titles and skills where the gender representation is more favourable," said Leclercq.

"Using these insights, recruiters can refine and expand their sourcing to tap into more diverse talent pools."


In the LinkedIn Recruiter platform, HR departments will still see all qualified candidates, but search results are ordered so that each page reflects the gender mix of the available talent pool, Leclercq explained.

"As a very simple example, if the available talent pool for programmers with C and C++ skills in the Philadelphia area that you identified in Talent Insights is 42% women and 58% men, you'll see that same basic proportion on each page of results to make sure your recruiting team is seeing candidates that best reflect the gender distribution of the marketplace," he said.
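LinkedIn has not published how Recruiter orders its results, so the following is only an illustration of the idea Leclercq describes: a minimal Python sketch that interleaves candidates so the running gender mix tracks the talent pool's. The `Candidate` type, its `group` and `score` fields, and the `rerank_by_share` and `paginate` helpers are all hypothetical, and the sketch assumes an upstream search has already given each qualified candidate a relevance score.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    group: str    # hypothetical demographic label, e.g. "women" or "men"
    score: float  # hypothetical relevance score from an upstream search


def rerank_by_share(candidates, target_share):
    """Interleave candidates so the running mix of groups tracks target_share.

    Relevance order is kept within each group and nobody is dropped: recruiters
    still see every qualified candidate, just in a different order.
    """
    # One queue per group, highest relevance first
    queues = {}
    for c in sorted(candidates, key=lambda c: c.score, reverse=True):
        queues.setdefault(c.group, deque()).append(c)

    counts = {g: 0 for g in queues}
    ranked = []
    while any(queues.values()):
        position = len(ranked) + 1
        # Choose the non-empty group furthest below its target count at this
        # position; on a tie, prefer the group whose next candidate scores higher
        group = max(
            (g for g in queues if queues[g]),
            key=lambda g: (target_share.get(g, 0.0) * position - counts[g],
                           queues[g][0].score),
        )
        ranked.append(queues[group].popleft())
        counts[group] += 1
    return ranked


def paginate(items, page_size):
    """Split a ranked list into pages of page_size items."""
    return [items[i:i + page_size] for i in range(0, len(items), page_size)]
```

Using Leclercq's figures, a pool of 42 women and 58 men reranked this way yields pages of ten that each contain four or five women, and nobody is filtered out. This greedy "largest deficit" rule is just one simple choice: with two groups, it keeps every prefix of the list within half a candidate of the target share for as long as both groups still have candidates left, while preserving relevance order inside each group.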

Tweaking job adverts with Textio

Textio is similar to Grammarly or the spellchecker in Word, but rather than looking for typos, it considers the response you'll get from the words and phrases you choose. Textio Hire is its first application, a tool that analyses job posts and recruitment emails and makes suggestions intended to shorten hiring times, improve candidate quality, and increase the diversity of applicants.

Textio Hire analyses the tone of job adverts, giving a score and suggesting improvements

"Textio's predictive engine uses a combination of natural-language processing and data mining to find the words that have an impact on your hiring pipeline and bring them to your attention while you are writing," explained CEO and co-founder Kieran Snyder. "In the example of unconscious gender bias in your writing, the predictive engine finds the words and phrases that are statistically likely to create an imbalance between the number of men and women who are inspired to respond to your job ad today."

The app not only highlights problematic language, but makes suggestions, too. "Textio Hire uses its massive analytical power to not only improve what's been written, but it also imagines the things that haven't been written," said Snyder. "It can tell which one of those alternate phrases will create the best version of the job post."

While we humans can watch for biased language in our own writing, automation can make it easier to spot patterns and see outcomes that aren't obvious to us.

"Most of the patterns that emerge are truly things you just cannot theorise or guess, which is why a platform like Textio needs so much data to uncover the real patterns that change hiring outcomes," said Snyder, noting that "exhaustive" is a word that attracts more male applications, "loves learning" attracts more women, and "synergy" is a turn-off to people of colour. "You can't guess what works without massive data sets and the machine learning technology to find the hidden patterns," she said. "Intuition fails us."
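Textio's engine is proprietary and learns its phrases from hiring outcomes, so this is not its method. It is a minimal Python sketch of just the "highlight while you write" step, assuming a hand-made lexicon seeded with the three examples Snyder gives; `LEXICON`, `Flag` and `flag_phrases` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Hypothetical hand-written lexicon seeded with the examples Snyder cites.
# A system like Textio would instead learn its phrases and their effects
# from large volumes of job ads and the hiring outcomes they produced.
LEXICON = {
    "exhaustive": "tends to attract more male applicants",
    "loves learning": "tends to attract more female applicants",
    "synergy": "tends to put off applicants of colour",
}


@dataclass
class Flag:
    phrase: str  # the matched text, as written in the ad
    start: int   # character offset where the match begins
    end: int     # character offset just past the match
    note: str    # why the phrase was flagged


def flag_phrases(text, lexicon=LEXICON):
    """Return every whole-word, case-insensitive lexicon match, in text order."""
    flags = []
    for phrase, note in lexicon.items():
        # \b anchors stop "synergy" matching inside longer words
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for m in re.finditer(pattern, text, flags=re.IGNORECASE):
            flags.append(Flag(m.group(0), m.start(), m.end(), note))
    return sorted(flags, key=lambda f: f.start)
```

For the ad text "We want an exhaustive thinker who loves learning", `flag_phrases` returns two flags, for "exhaustive" and "loves learning", in that order. The hard part, as Snyder says, is building the lexicon itself: that is what the large data sets and machine learning are for, and a hand-written list like this one will miss most of the real patterns.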

Can tweaking a few words in a job ad work? Textio claims that its Hire tool helped Johnson & Johnson increase applications from underrepresented candidates by 22%, and helped Cisco attract 10% more female candidates and fill positions more quickly.

Using the right data

Another way to boost inclusivity in hiring is using automation to sift through applications, but train a system on flawed or skewed data and it will mirror your previous mistakes, notes Goldstaub. Look at Amazon: according to reports, the tech giant used AI to sift through CVs, but as the system was trained on skewed data - its own previous hires, which were predominantly male - it tended to chuck applications from women into the bin. The machine-learning system has since been dropped, according to a Reuters report.

The Recruiter platform on LinkedIn gives the gender balance of candidates as a percentage

Getting such technology right requires three elements: feeding it the correct data, training the AI appropriately, and having humans in the loop to check the results, says Goldstaub. If, as with the Amazon trial, most successful hires in the past were men, there won't be enough data on successful women to train your system with. But if you're trying to encourage a wider range of applicants, you can "weight the data so we can find the people we want to look for, rather than people just like the ones we already have," said Goldstaub. As she notes, ignore anyone who blames bias on the machines: they aren't in control, we humans are. "We are in control of this, and don't need to just use the data we have already."

But that raises an "ethical conundrum," as Goldstaub puts it. "Should we have fairer data, even if it's not accurate data? That's a question we can ask ourselves and decide how we want to manage that."
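Goldstaub doesn't say how the weighting would be done. One common, simple approach is inverse-frequency reweighting, sketched below in Python under the assumption that each historical training example carries a group label. `balanced_weights` is a hypothetical helper, though its formula matches the one scikit-learn documents for `class_weight="balanced"` (there it is applied to class labels; here it is applied to groups).

```python
from collections import Counter
from typing import Hashable, Sequence


def balanced_weights(groups: Sequence[Hashable]) -> list[float]:
    """Give each training example a weight so that every group's weights sum
    to the same total (n / k), however unevenly the groups are represented.

    Inverse-frequency rule: weight = n / (k * count[group]), where n is the
    number of examples and k the number of distinct groups.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]
```

With 80 past hires who are men and 20 who are women, each man gets a weight of 0.625 and each woman 2.5, so both groups contribute 50 to the total. Many training APIs accept such weights directly, for example the `sample_weight` argument of scikit-learn's `LogisticRegression.fit`. This is exactly the trade-off Goldstaub describes: the model no longer reflects the historical data as it was. It also does nothing about proxies for gender elsewhere in a CV, which is one reason she insists on keeping humans in the loop.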


Automated interviewers

Of course, removing bias from job ads and candidate sourcing is a positive step, but it could all be for nothing if candidates then face an interviewer who has no qualms about discriminating against them. Whether subconscious or not, interview bias is prevalent among humans, particularly when it comes to gender and ethnicity.

Proponents argue that artificial intelligence can be programmed to remove this bias and promote workplace diversity. Furhat Robotics has used AI to produce a robot called Tengai, which it claims is a better, fairer alternative to the human interviewer.

The company points to evidence to support the claim: since October 2018, the Sweden-based tech startup has run simulated robot interviews, which it says have had positive effects. Tengai analyses language, tone and facial expressions; in turn, it mimics human speech and is designed to put candidates at ease, although being greeted by a disembodied robotic head may not have that effect unless candidates have been forewarned.

Sizeable organisations are already turning towards Tengai and similar technologies. The ball started rolling in Sweden, and UK-based firms are now dipping their toes in. But problems do exist. Aviva trialled a variant of the technology to analyse videos of job interviews, but promptly halted the programme indefinitely. Privacy concerns are believed to have led to the decision, though Aviva has not commented to clarify.

Artificial intelligence might give us tools to battle back against discrimination, but we still need to face the tough questions ourselves.

Freelance journalist Nicole Kobie started writing for ITPro in 2007 and has bylines in New Scientist, Wired, PC Pro and many more.

Nicole is the author of a book about the history of technology, The Long History of the Future.
