Welcome back to ITPro's live coverage from AWS re:Invent 2024 in Las Vegas.

It's the third and final day here, but there's one more keynote speaker to hear from.

Yesterday saw Dr. Swami Sivasubramanian, VP of AI and data at AWS, go over even more announcements at the firm, including some sweeping updates to SageMaker. We also heard from Ruba Borno, VP of global specialists and partners at AWS, for an afternoon keynote focused on AWS partners.

This morning we'll be hearing from AWS CTO Dr. Werner Vogels, so expect some tech-focused specifics on all of the new announcements we've heard about already at AWS re:Invent 2024.

While we wait for the keynote to start, why not check out some of our recent coverage of AWS:

AWS bet big on Anthropic in the race against Microsoft and OpenAI, now it’s doubling down

AWS CISO Chris Betz wants greater industry collaboration for the multi-cloud era

AWS dealt major blow in nuclear-powered data center deal after regulators reject upgrade plans

Hosted at The Venetian, Las Vegas, AWS’ sprawling event is spread across multiple rooms and areas of the hotel’s conference facilities.

With not long until the keynote starts, seats are starting to fill up as the crowds file in.

Attendees flood into the vast keynote hall, no doubt eager to hear about all AWS’ latest announcements.

After an impressive (and quite loud) introductory video, it’s time for AWS CEO Matt Garman to hit the stage. There are cheers and applause from the crowd as he walks out, greeting those in attendance as the noise begins to die down.

“This year we have almost 60,000 people here in person,” Garman says, gesturing to the vast scale of the event. He offers a thanks to those in the crowd, including the huge community of builders and developers.

Garman talks about ‘building blocks’, key components that a technology firm can provide individually and that customers can combine in interesting ways. To illustrate how customers might use these building blocks, Garman plays a video from one of the startups operating on AWS.

For the first building block, Garman talks about compute and AWS’ EC2 offering. He covers some brief background on the product, including some stats on AWS Graviton and its latest iteration, AWS Graviton4. As an example of the product’s quality, he brings up Pinterest, which managed to achieve 47% cost savings by using Graviton.

But what’s next? Well, in the world of generative AI, it’s GPUs, and Garman is excited to announce a new P6 instance featuring Blackwell GPUs, in partnership with Nvidia. This builds on an ongoing collaboration between AWS and Nvidia.

Of course, AWS has its own AI chips - AWS Inferentia and AWS Trainium. On that note, Garman unveils another announcement, the next generation of Trainium. It arrives as Amazon EC2 Trn2 Instances, which are now generally available, Garman says.

Next, Garman announces Amazon EC2 Trn2 UltraServers, which allow much larger models to be loaded into single nodes with far lower latency. It also means customers can build much larger training clusters by creating UltraClusters with UltraServers.

The AWS CEO now gets an exec from Apple up on stage to talk about the pair’s collaboration. He talks about Apple’s reliance on Graviton and its partnership with AWS, mentioning also the importance of AWS’ infrastructure for developing the firm’s flagship AI offering, Apple Intelligence.

After this, Garman’s back on stage. He says generative AI is moving at “lightning speed” and that AWS isn’t slowing down. Cue another announcement - Trainium 3, which will be coming next year.

Then it’s time for another building block, storage. He takes the crowd on a trip down memory lane back to 2006, when Amazon launched S3 and kicked off the industry-wide adoption of cloud computing.

“Today, we have thousands of customers all storing more than a petabyte,” Garman says, illuminating AWS’ huge customer base.

It can be difficult to manage tables though, Garman says, when using Apache Iceberg specifically. What if S3 could do this for customers as well, Garman asks? Well, now it can, with Amazon S3 Tables, which offers up to 3x faster query performance and 10x higher transactions per second for Apache Iceberg tables.

He also announces a new metadata feature, Amazon S3 Metadata. This tool will allow users to search and manage data by using the metadata associated with their stored objects. S3 will automatically update object metadata as well.

The next building block is databases, Garman says. Amazon DynamoDB is one of AWS’ current offerings in this area, as well as Amazon Aurora. AWS is celebrating the 10-year anniversary of the latter, Garman says.

One problem AWS looked to solve is achieving multi-region consistency with low latency across its database offering. Garman explains the complexity of this issue, as transactions take a longer time to go through.

To solve this problem, AWS has innovated within the infrastructure of its cloud offerings to reduce latency between these database transactions. These innovations can be found in its new product, Amazon Aurora DSQL. This multi-region consistency is also being added to Amazon DynamoDB global tables.

Next on the stage is Lori Beer, global CIO of bank JPMorganChase. She talks about the firm’s partnership with AWS, including the core principles of JPMorganChase’s cloud journey. These include security, modernization, cloud innovation, and cloud migration. She explains some of the journey the firm has had with AWS, using its cloud infrastructure and GPUs to innovate.

Once Garman’s back on stage, it’s time to talk about inference. Users need a platform to deliver inference at scale, Garman says, and Amazon Bedrock is a great place for people to build and scale generative AI tools.

He returns to a theme expressed in AWS Summit London earlier this year, that Amazon Bedrock is so useful and so popular because it allows users to take advantage of different AI models.

Then, he mentions model distillation. This is a technique in which users can train a smaller, more agile model on data generated by a larger, more powerful model. This is useful for creating AI that deals with more specific tasks.

That’s why AWS is introducing Amazon Bedrock Model Distillation, which can do all the hard work of distilling a larger model into a smaller one.
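As a rough illustration of the general idea, and not of how Amazon Bedrock Model Distillation works internally, the sketch below scores how closely a small "student" model's answer distribution matches a larger "teacher" model's. The scores, the temperature value, and the function names are all assumptions made up for this example.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities, softened by the temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return sum(t * math.log(t / s) for t, s in zip(teacher, student) if t > 0)

# Hypothetical scores over three candidate answers.
teacher_scores = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # prefers a different answer

print(distillation_loss(teacher_scores, close_student))
print(distillation_loss(teacher_scores, far_student))
```

Training the student to drive this number down is one common way of transferring a larger model's behavior; a student that agrees with the teacher scores lower than one that does not.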

AI hallucinations are a big worry, but AWS says it's been working on a way to stop them. One possible technique is automated reasoning, which can use mathematical reasoning to determine if a system is operating correctly. Automated reasoning is already used across other AWS products, Garman says.

Now Garman unveils Automated Reasoning Checks, to enthusiastic applause from the crowd. AI hallucinations have been a big concern for businesses, and this could be a game changer if it’s successful.

Another big topic in AI is agents and AI tools with agentic capabilities. This is when an AI can complete an entire task or workflow autonomously. Garman says these work well for smaller tasks, but customers want agents to do more complex tasks.

That’s why AWS is unveiling Amazon Bedrock Multi-agent Collaboration - users will be able to build, deploy, and orchestrate teams of agents, and assign specific agents to instruct other agents.

In a surprise visit, Garman welcomes Amazon CEO Andy Jassy to the stage. The crowd claps and cheers as the tech exec walks on stage to talk about generative AI and technological innovation at Amazon.

He says that a customer service tool at Amazon has been redesigned using AI and that it’s seen a huge improvement in predictive behavior - it can even sense when a user is getting frustrated and when they might need to be connected to a human operator. AI is also being used in optimizing seller pages, inventory management, and robotics.

“We’re also seeing altogether brand new shopping experiences,” Jassy says. Amazon’s Rufus tool is one such example, a chatbot that seeks to mimic the human experience of in-store shopping by responding to questions about products. He also mentions Amazon Alexa and Amazon Lens.

“There is never going to be one tool to rule the world,” Jassy says, referring to the range of AI models being used throughout the tech community. Customers will always want choice and will always be using different AI offerings.

Now, Jassy makes an exciting announcement - a new foundation model family called Amazon Nova. It comes in four forms: Amazon Nova Micro, Amazon Nova Lite, Amazon Nova Pro, and Amazon Nova Premier.

There’s notable excitement about this announcement in the crowd, as Jassy moves on to discussing the benchmarking scores of Nova against other key foundation model competitors. Jassy says the models are 75% more cost-effective than other models.

They’re also very fast, he goes on, and geared for the model distillation process which was outlined earlier.

With barely a pause for breath, Jassy announces another, image-based foundation model, Amazon Nova Canvas. He also announces Amazon Nova Reel, a video-focused foundation model. Coming soon, Jassy says, will be a speech-to-speech model, and eventually a multi-modal ‘any-to-any’ model.

After these bombshell announcements, Garman is back on stage to roll out some more. The first of these is related to Amazon Q Developer, Amazon’s developer-focused AI assistant.

Garman unveils three new agents for Amazon Q Developer, one that focuses on automating unit testing, one that generates code documentation, and another that automates code reviews.

While these are all important tasks, they can be tedious, Garman says. This new tool will help reduce the burden of these tasks on developers.

There’s also a new AWS capability for operations targeted at troubleshooting and issue investigation within AWS environments. It provides a guided workflow for investigation, accessible from wherever a user interacts with data.

Developers aren’t the only ones who can see gains in AI, Garman says. Other departments, such as finance, can find a lot of value in the technology too. This is where Amazon Q Business comes in, an AI assistant for businesses as a whole.

Amazon Q Business indexes data from other software, such as Microsoft or Salesforce platforms. Customers need to be able to query both structured and unstructured data, Garman says.

This year, AWS is announcing that it’s bringing data from QuickSight and Amazon Q together. That means businesses can combine databases, data lakes, and data warehouses with document reports, purchase orders, emails, and other sources of data.

It’s time for the final area that Garman will be covering today, analytics. The headline announcement in this area is a new generation of Amazon SageMaker, Amazon’s machine learning (ML) platform.

This new version of SageMaker will be the center of all a user’s data, analytics, and AI needs, Garman says. To operate the platform, customers will be able to use the Amazon SageMaker Unified Studio.

This is followed up with the announcement of the Amazon SageMaker Lakehouse, which grants unified access to user data across S3, Amazon Redshift, and a number of other platforms.

It seems like it’s time to start wrapping up. Garman closes out his talk and thanks everyone for coming. It’s been non-stop announcements at this morning’s keynote, and there’s a lot more to come over the next few days. Make sure to keep tuning in for our rolling coverage.

We're up very bright and early here in Vegas today, largely thanks to a lingering case of jetlag, but there's not long to go until the day-two keynote.

In the meantime, why not catch up on all of our coverage from AWS re:Invent so far?

Yesterday saw AWS jump on the agentic AI bandwagon with the launch of new AI agent features for the Bedrock and Amazon Q platforms.

AWS' announcement comes in the wake of a widespread shift in focus toward AI agents, with a host of firms including Google, Microsoft, and Salesforce having launched their own agentic AI capabilities.

Garman and AWS appear very excited about their prospects in the agentic AI era, if the keynote session was anything to go by. Customers will be able to markedly improve operations and reduce strain on developers and staff by integrating agents within their daily workflows.

Elsewhere, we saw Amazon CEO Andy Jassy take to the stage with a big announcement on the foundation model front.

Jassy unveiled the launch of 'Amazon Nova', a new AI foundation model range which pits the company in direct competition with the likes of Google and OpenAI with their Gemini and GPT-4 models.

Amazon Nova will come in a variety of shapes and sizes, we heard, and will include four different pricing tiers for customers. Two of these will focus specifically on text and video, Jassy revealed.

The model range will be made available via Amazon Bedrock.

The keynote hall is filling up again ahead of Sivasubramanian’s opening talk.

The crowd size shows no sign of having shrunk for this morning’s keynote, and the room feels just as packed as yesterday.

It’s time for Sivasubramanian to kick the show off. He starts by looking back to a defining breakthrough in a different industry - the aviation industry. Taking us back over some of the key innovations in flight and aviation, Sivasubramanian eventually links the analogy back to the new era of generative AI.

Generative AI is driving efficiency in customer service, marketing, and development, Sivasubramanian says. He also takes us on a more personal historical journey, talking the crowd through his 18 years at the company.

He comes back to an announcement from yesterday, in which CEO Matt Garman unveiled the next generation of SageMaker as the new center for all customers’ AI, data, and analytics needs.

He also talks about Amazon SageMaker AI, which provides access to public foundation models and allows users to build, train, and deploy machine learning (ML) models. There’s also a mention of Amazon SageMaker HyperPod.

Now time for something new - Sivasubramanian unveils Amazon SageMaker HyperPod flexible training plans. This removes uncertainty in manual training processes.

Companies training models often need to change which compute instances are in use, so that capacity is put to good use when demand is down. Cue Amazon SageMaker HyperPod task governance.

This can dynamically allocate accelerator compute resources by task, and ensure that high-priority tasks are completed on time. This helps to cut costs and heighten productivity in ML training.
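To make priority-based allocation concrete, here is a minimal sketch of the general idea. It is not the HyperPod task governance API; the task names, the priority convention, and the `allocate` function are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    priority: int      # higher number = more urgent (hypothetical convention)
    accelerators: int  # accelerators the task asks for

def allocate(tasks, capacity):
    """Grant accelerators to tasks in priority order until capacity runs out."""
    granted = {}
    remaining = capacity
    for task in sorted(tasks, key=lambda t: t.priority, reverse=True):
        if task.accelerators <= remaining:
            granted[task.name] = task.accelerators
            remaining -= task.accelerators
        else:
            granted[task.name] = 0  # task waits until capacity frees up
    return granted, remaining

tasks = [
    Task("nightly-eval", priority=1, accelerators=4),
    Task("prod-finetune", priority=3, accelerators=8),
    Task("experiment", priority=2, accelerators=6),
]
granted, idle = allocate(tasks, capacity=12)
print(granted, idle)
```

In this toy run the high-priority job is served first, the mid-priority job has to wait because it does not fit, and the small low-priority job soaks up the leftover capacity so nothing sits idle.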

AI apps from AWS partners are now also available in Amazon SageMaker, Sivasubramanian reveals. AWS will keep adding partner applications as time goes on, he adds.

Now, Sivasubramanian moves on to talk about inference. Amazon Bedrock is the best place for this, Sivasubramanian says, providing a platform for developers to build and scale generative AI tools.

AWS is continuing to tackle even more roadblocks for developers, he adds. Amazon Bedrock is always being built upon to provide access to an even wider range of models from the leading providers, such as Mistral, Llama, Stability, and Anthropic. He nods to Amazon Nova, the firm’s new family of foundation models announced yesterday.

There are more models on their way, such as one from Poolside and Stable Diffusion 3.5 from Stability. Luma AI, a video generation platform, is also coming to Amazon Bedrock.

Sivasubramanian gets Luma AI’s CEO on stage to talk about its product. Luma is useful for high-production quality video generation. He talks us through the training process behind Luma, explaining how AWS’ infrastructure was incredibly important for the company. AWS helped the firm train quickly and effectively.

Sivasubramanian is back on stage now, talking about how access to innovative model providers is a huge strength of Amazon Bedrock. He knows that customers' desire for emerging, specific models is growing.

This is why AWS has been working on its latest announcement, Amazon Bedrock Marketplace. This marketplace has more than 100 different models from leading providers, providing users with an even greater level of choice.

Amazon Bedrock now supports prompt caching, Sivasubramanian says. This allows developers to securely cache entire prompts and enhance accuracy with longer prompts, ultimately reducing latency and saving on cost.

The platform will also now support Intelligent Prompt Routing. This provides a single endpoint to efficiently route prompts and helps meet cost and latency thresholds with advanced prompt matching techniques. The benefit? Application development costs can be reduced by up to 30%.
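The routing idea can be sketched in a few lines. This is only an illustration of the concept, not the Bedrock feature: the keyword heuristic stands in for whatever matching technique AWS uses, and the model labels and threshold are invented for the example.

```python
REASONING_WORDS = {"explain", "compare", "prove", "analyze"}

def estimate_complexity(prompt):
    """Crude stand-in for a learned router: long, multi-step prompts score higher."""
    words = prompt.split()
    keyword_hits = sum(w.lower().strip(".,?!") in REASONING_WORDS for w in words)
    return len(words) + 20 * keyword_hits

def route(prompt, threshold=30):
    """Send simple prompts to a cheaper model and demanding ones to a larger model."""
    if estimate_complexity(prompt) >= threshold:
        return "large-model"
    return "small-model"

print(route("What is the capital of France?"))
print(route("Compare these two database designs and explain the trade-offs"))
```

The saving comes from the first branch: every prompt that a smaller model can handle well is one that does not incur the cost or latency of the larger one.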

A new AWS offering targets retrieval augmented generation (RAG), a technique that is gaining popularity in the enterprise.

It’s called Amazon Kendra Generative AI Index, and it can be connected to enterprise data sources such as SharePoint, OneDrive, or Salesforce, and can be used as a knowledge base to build generative AI assistants.

It also allows customers to use indexed content across use cases such as Amazon Q Business applications.

Knowledge graphs create connections between different pieces of data, Sivasubramanian says, helpful for RAG requests and providing a greater level of access to data for generative AI systems.

It takes time to develop and utilize these graphs, though, so AWS is looking to do the hard work for customers with Amazon Bedrock Knowledge Bases’ new support for GraphRAG.

This can generate knowledge graphs to link relationships across data sources and ultimately build more comprehensive and more explainable generative AI applications. It can also enhance the transparency of source information for better fact verification.
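For readers unfamiliar with knowledge graphs, the sketch below shows the basic mechanism in miniature: facts are stored as linked entities, and a retrieval step walks those links to gather related context for a question. The triples and function names are hypothetical, and this is not how Amazon Bedrock Knowledge Bases implements GraphRAG.

```python
from collections import deque

# Hypothetical facts as (subject, relation, object) triples.
TRIPLES = [
    ("Order 1001", "placed_by", "Customer A"),
    ("Order 1001", "contains", "Product X"),
    ("Product X", "supplied_by", "Supplier S"),
    ("Customer B", "reviewed", "Product Y"),
]

def build_graph(triples):
    """Index triples by entity, adding inverse edges so links work both ways."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
        graph.setdefault(obj, []).append((f"inverse_{relation}", subject))
    return graph

def related_facts(graph, start, max_hops=2):
    """Breadth-first walk collecting facts within max_hops of the start entity."""
    seen = {start}
    queue = deque([(start, 0)])
    facts = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for relation, neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                facts.append((node, relation, neighbor))
                queue.append((neighbor, depth + 1))
    return facts

graph = build_graph(TRIPLES)
for fact in related_facts(graph, "Customer A", max_hops=3):
    print(fact)
```

Starting from a customer, the walk reaches the order, the product, and then the supplier, while unrelated facts are left out. Because each retrieved fact names the link it came from, the source of an answer is easier to trace.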

Sivasubramanian uses a humorous example to outline the advantage of agentic AI, explaining how a linear orchestration of agents could help someone find free food dependent on personal preference at all the various events going on for re:Invent.

After introducing the agentic topic, Sivasubramanian introduces a customer to the stage, Shawn Malhotra, CTO of Rocket Companies.

Malhotra talks about the results that generative AI is driving for Rocket Companies. One such product they’ve built with AWS is Rocket’s AI Agent feature. Apparently, customers are 3x more likely to close a loan from Rocket when interacting with an agent.

Now, Sivasubramanian wants to talk about Amazon Q, which has already received a suite of agentic updates this year at AWS re:Invent 2024.

It seems as though AWS isn’t done with the Amazon Q updates just yet though, as Sivasubramanian unveils Amazon Q Developer availability in SageMaker Canvas.

In SageMaker, Amazon Q will break down problems into sets of ML tasks, help to prepare data, help to define problems and build, evaluate, and deploy ML models. Ultimately, it will democratize ML development for non-ML experts.

Sivasubramanian talks a bit now about education, and the importance of learning opportunities in creating a thriving tech landscape. Digital learning struggles to reach millions of students, he says, according to external research.

He refers back to previous education programs - which have seen 29 million people receive free cloud computing skills training - before announcing AWS’ latest foray into the space, the AWS Education Equity Initiative.

This will see up to $100 million in AWS cloud credits committed to various learning and education projects over the next five years, as well as provisions for comprehensive advising from AWS experts.

It will also provide tailored support for responsible AI implementation and optimization.

With that, it’s time to wrap up. Sivasubramanian thanks the crowd and heads off stage. Make sure to check back in here at 3pm PST for this afternoon’s partner keynote from Ruba Borno.

We’re back in the keynote theater, this time greeted by the sound of a string quartet. We’re waiting to hear from AWS’ Ruba Borno this afternoon, who’ll be talking all things partner-related.

With just 10 minutes to go, the keynote hall is filling up.

After a slick promotional video, Ruba Borno, VP of global specialists and partners at AWS, hits the stage. Building on the theme of the video, Borno compares the partner ecosystem to an orchestra working collaboratively to create something beautiful.

Now she gets a bit more specific, talking about the security partner network and partner specializations, which help to distinguish which AWS partners to work with based on what they’re best at.

Borno announces four new specializations - an AI security competency, a digital sovereignty competency, an Amazon Security Lake service-ready competency, and an AWS security incident response specialization.

Borno says AWS has three experts from Turnitin, Ahead, and Wiz to talk on-stage with AWS exec Greg Pearson. The group talks about the relationship between their firms in the AWS partner ecosystem. Yinon Costica from Wiz talks about how the cloud is such a great tool for democratizing tools.

Kurby Brown Jr. from Turnitin imparts a message to the crowd, saying people should look for partners that will collaborate closely with their business. Costica tells the crowd that one of the keys to success - of which Wiz has seen much - is a laser focus on customer needs.

“Tech is a noisy space,” Borno says when she’s back on stage. A great “signal tuner” in this environment is AWS Marketplace, she says.

But what if customers could get the value of AWS Marketplace from wherever they were, if AWS Marketplace could be available on any partner website? Well, that’s exactly what AWS is announcing here, to a rousing cheer from the crowd.

This would allow for co-branded search, discovery, and procurement, and allow for requests for demos and custom pricing. It allows for AWS Marketplace central billing that connects to the AWS account.

Now time for another partner, Caylent. The firm’s President and CRO, Valerie Henderson, takes to the stage to talk about the value Caylent places on exploration and experimentation in its business.

She walks us through a brief history of the firm, before talking about the high level of AI innovation at Caylent. “At the heart of this is our culture of continuous curiosity,” Henderson says.

Next on the stage is Muhammad Alam, member of the executive board of SAP SE, responsible for SAP product engineering. AWS and SAP recently announced a partnership, ‘Grow with AWS’, and the pair talk about it on stage. SAP is also a launch partner for AWS’ new family of models, Amazon Nova.

In a surprise appearance, AWS CEO Matt Garman is invited on stage to talk about the AWS partner network and partner relationships.

Garman praises the deep knowledge that partners have of their customers, as well as the deep technical knowledge they have of AWS products and services, celebrating the collaboration between AWS and its partner ecosystem.

He also covers some of the key highlights that AWS has seen working with independent software vendors (ISVs) such as Atlassian and SAP.

AWS wants to go big on small and medium-sized enterprises, Borno says, after Garman has left the stage. By way of example, she mentions Laurel Canyon Live, an SME looking to bring live music to end users as immersively as possible.

This example ties in nicely with the opening analogy of the orchestra, which Borno has tied into the talk in various ways throughout. For example, Borno brings out two execs to talk about orchestrating generative AI experiences.

Only 21% of generative AI proofs of concept (PoCs) get deployed, Borno says, referencing research from Gartner. How can this be solved? Borno brings out Francessca Vasquez, VP of professional services and generative AI innovation center at AWS, to talk about this more.

Many businesses lack deep expertise in generative AI, Vasquez says, meaning they aren’t reaping all the possible benefits. AWS can help with this, it seems, via its AI innovation center, which has assisted TCS, United Airlines, and Slalom.

Now time for another announcement, Partner Connections, which will bring unified co-sell experiences in Partner Central, or a customer’s own integrated customer relationship management (CRM) system. It creates collaboration between multiple partners and AWS Field sellers.

It’s time to wrap up the partner keynote here, and Borno thanks the audience of partners for attending. Make sure to check back in here tomorrow morning at 8.30 am PST for tomorrow's keynote talk.

While we wait for Dr. Werner Vogels' keynote to start, why not check out some of our coverage of the event so far, which has seen AWS roll out a new suite of agentic capabilities and even its own family of foundation models:

Regulatory uncertainty is holding back AI adoption – here’s what the industry needs going forward

AWS goes all in on AI agents with new features for Bedrock and Amazon Q

We're back in the keynote hall with another string quartet, and the room's getting busier ahead of Vogels hitting the stage.

The keynote kicks off with a slightly longer video this time. It’s a mockumentary-style look back at AWS’ invention of S3 buckets, in which the ‘pizza guy’ miraculously devised the innovation. Vogels is featured in the video, and the crowd gets a good laugh from the comedic premise.

When Vogels finally hits the stage in real life, the crowd cheers loudly and hundreds of phones begin to take pictures. When he gets talking, he starts to reminisce on his 20 years at Amazon.

“In those 20 years, there’s been a lot of highlights,” Vogels says, such as his first re:Invent, where he got to have inspiring conversations with customers about the possibilities of creation.

He talks a bit about 21st-century architectures, and some of the pillars of importance for them that he has seen - controllability, resilience, data-driven, adaptive.

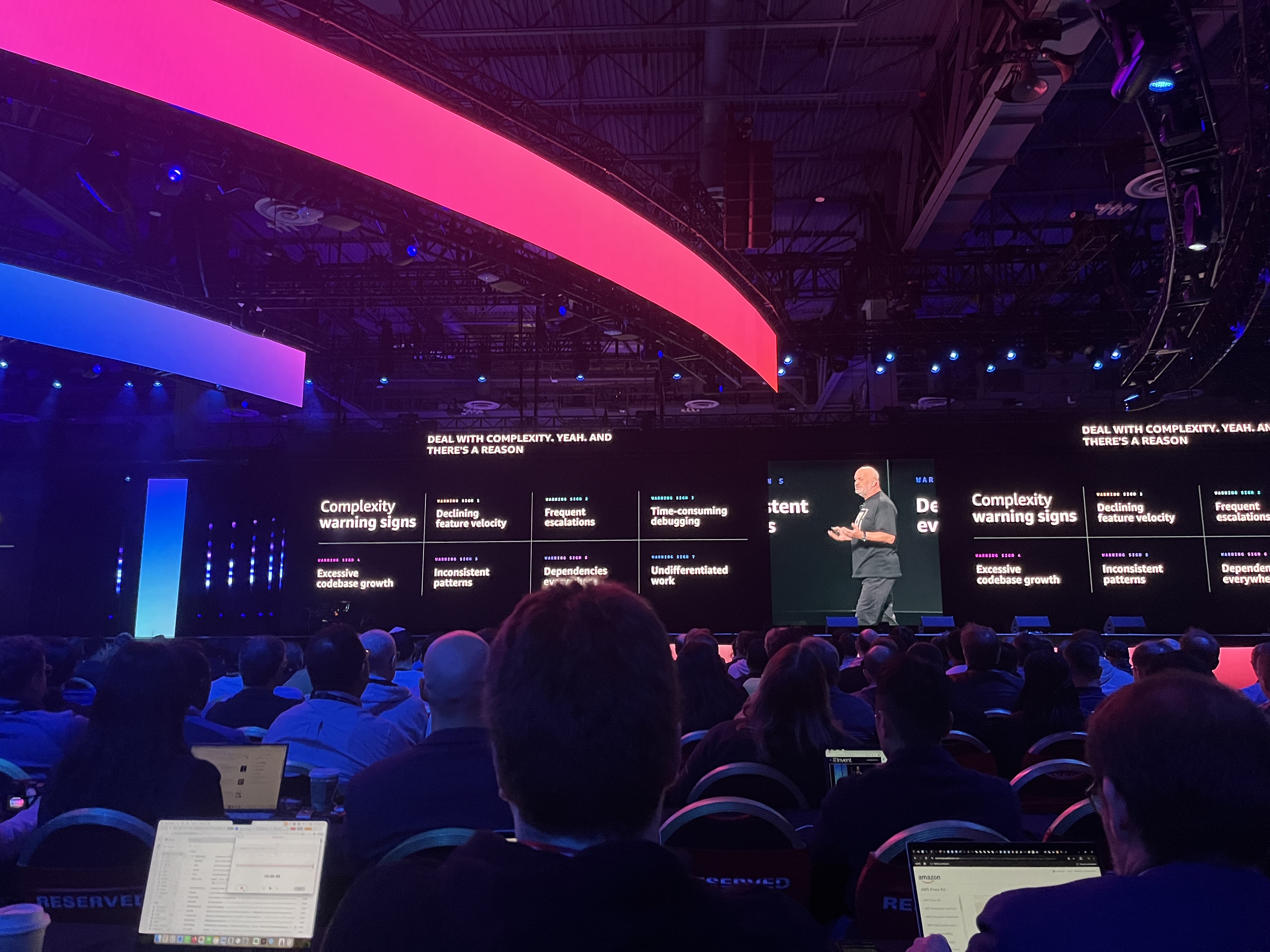

Complexity also seems to be a big topic for Vogels - the opening video was called ‘Simplexity’ - and he notes some of the forms of complexity. There is intended complexity, the purpose-built complexity which comes from creating applications. What businesses want to avoid is unintended complexity.

Some of the warning signs of complexity could be declining feature velocity, frequent escalations, time-consuming debugging, excessive codebase growth - the list goes on.

After some words from Canva’s CTO about the company's development journey, Vogels is back on stage. He talks now about change and the nature of the world’s systems being constantly in flux.

Lehman’s laws of software evolution, Vogels says, suggest that software needs to continuously evolve to remain relevant. If not, customers will perceive the software to be stale or less valuable.

Businesses must champion evolvability and build this concept into software. How to do this? Model software on business concepts, keep internal details hidden, have fine-grained interfaces, integrate smart endpoint use, and so on.

Amazon S3 is a good example, Vogels thinks, as it has steadily become more complex, yet this complexity has always remained hidden from the user.

“How big should a service be?” Vogels asks rhetorically, quoting a question he is often asked. This depends on different things, such as customer demand, and there are also different ways of increasing size, such as expanding or adding.

To talk about this, Vogels brings Andy Warfield to the stage, VP and distinguished engineer at AWS. Warfield talks about his experience of development at AWS, the difficulties of complexity, and the importance of experimentation.

“The most dangerous phrase in the English language is: we’ve always done it this way,” a quote from Grace Hopper reads on the slide presentation.

Lesson number four from Vogels is about organizing into cells, breaking things into building blocks, and adopting cell-based architectures. He also talks about automation and asks what shouldn’t be automated.
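One simple way to picture a cell-based layout is a router that pins each customer to one independent cell, so a failure in one cell only touches the customers assigned to it. The sketch below assumes a hash-based assignment; the cell names and the `cell_for` helper are hypothetical, and real systems may use other mapping schemes.

```python
import hashlib

CELLS = ["cell-a", "cell-b", "cell-c"]  # hypothetical cell names

def cell_for(customer_id, cells=CELLS):
    """Map a customer to one cell, always the same one for the same ID."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(cells)
    return cells[index]

for customer in ["alice", "bob", "carol"]:
    print(customer, "->", cell_for(customer))
```

A plain modulo mapping like this reshuffles many customers whenever a cell is added, so it is best read as an illustration of the routing layer rather than a production design.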

Security is also crucially important, Vogels adds, and must be built into everything. Protecting the customers is of utmost importance, so everyone must engage with security practices.

This concludes Vogels' lessons in ‘simplexity.’ While he’s shared his lessons, he says he’s sure the audience has many lessons of their own to share.

We’re back with the video we saw at the beginning before Vogels takes to the stage again, talking some more about complexity.

Amazon Aurora DSQL, unveiled earlier at re:Invent, is a good example of system disaggregation, low coupling, high cohesion, and well-defined APIs - this gives fine-grained control and individual scaling, helping fight against unwanted complexity.

Using an example of ordering a pizza via a mobile application, Vogels does a short demo of how Amazon Aurora DSQL works.

He talks us through a few of the technical details of some of the key announcements, such as the built-in time-sync feature which allows for guaranteed time comparisons between transactions in large systems.

These clocks provide users with time detailed in nanoseconds. Both Amazon Aurora DSQL and Amazon DynamoDB global tables make use of these synchronized time features.

“Precise clocks reduce complexity,” Vogels says, bringing this explanation back to the key theme of the talk.
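A small sketch helps show why clock precision matters for comparing transactions. It assumes each timestamp carries a known maximum error, which is a simplification made for this example and not a description of how Aurora DSQL is implemented; the `Timestamp` type and the numbers are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Timestamp:
    reading_ns: int  # clock reading in nanoseconds
    error_ns: int    # assumed maximum clock error in nanoseconds

    @property
    def earliest(self):
        return self.reading_ns - self.error_ns

    @property
    def latest(self):
        return self.reading_ns + self.error_ns

def definitely_before(a, b):
    """True only when a's latest possible time precedes b's earliest."""
    return a.latest < b.earliest

# Two transactions 80ns apart, seen through a loose clock and a tight clock.
loose_a, loose_b = Timestamp(1000, 50), Timestamp(1080, 50)
tight_a, tight_b = Timestamp(1000, 10), Timestamp(1080, 10)

print(definitely_before(loose_a, loose_b))  # windows overlap, order unknown
print(definitely_before(tight_a, tight_b))  # windows are disjoint, order known
```

With the looser clock the two uncertainty windows overlap, so the system would need extra coordination to decide which transaction came first. With the tighter clock the timestamps alone settle it.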

With that, Vogels wraps up his keynote. After bringing attention to his new CTO fellowship, an initiative to drive innovation from technical leaders, he thanks the audience and makes his way off stage.

That’s a wrap! Vogels’ keynote was the last of a jam-packed line-up here at AWS re:Invent 2024. Make sure to check out all of our coverage and keep an eye out for more over the course of the next few days.