Welcome to ITPro's live coverage of AWS re:Invent 2023. It's day three of the conference here in Las Vegas and we have one final keynote session to go.

This morning we'll hear from Dr Werner Vogels, VP and chief technology officer at Amazon.

In his twelfth appearance at re:Invent, Dr Vogels is set to explore best practices for designing modern, resilient cloud infrastructures, as well as the increasingly vital role artificial intelligence plays in supporting developers.

The keynote theatre here at AWS re:Invent is filling up rapidly ahead of the opening session with Adam Selipsky. A torrent of attendees making their way into the hall.

There certainly is a buzz in the air this morning. Anticipation ahead of arguably the biggest event of the year in Las Vegas.

We're being treated to a heavy rendition of Thunderstruck by AC/DC.

A fitting track given the sheer scale of this event so far in Las Vegas. Not long to go until Adam Selipsky takes the stage.

While we're waiting, check out some of our coverage from AWS re:Invent so far this week.

• AWS eyes AI-powered code remediation in Amazon CodeWhisperer update

• Amazon Detective offers security analysts their own generative AI sidekick

PLUS: Why Amazon's Thin Client for business is an IT department's dream.

It's likely we'll hear a lot about Amazon Bedrock today, the tech giant's LLM framework launched in April.

Bedrock has been a roaring success so far, by all accounts, enabling customers to access a range of both in-house and third-party foundation models.

Just months after launch, Bedrock had attracted "thousands of new customers", according to an AWS exec.

There's a chance, albeit a small one, that AWS could unveil the launch of 'Olympus', its own 2 trillion-parameter large language model, this week. Speculation was rampant earlier this month amid claims the company was working on the model.

If they were going to unveil Olympus, it would likely be here.

AWS chief executive Adam Selipsky is on stage now, welcomed with huge applause from delegates.

Selipsky begins his keynote by thanking customers and partners, highlighting the broad range of organizations the cloud giant currently partners with.

Salesforce, in particular, just got a shoutout. AWS and Salesforce announced a huge expansion of their ongoing partnership yesterday.

Salesforce's Einstein platform is now available on Amazon Bedrock.

But it's not just large enterprises AWS is working with. "Up-and-comers" such as Wiz are also working with AWS.

Over 80% of unicorns globally run on AWS, Selipsky says. The cloud giant values its relationships with startups and dynamic young enterprises.

"Enterprises and startups, universities, community colleges, non-profits, the cloud is for anyone," Selipsky says.

"They count on us to be secure, to innovate rapidly."

So why do enterprises choose AWS?

For a start, they're the oldest, most established cloud giant. They're the most secure.

But they also 'reinvent', Selipsky says. The firm is continually exploring how it can take things to the next level and drive innovation.

"We invented infrastructure from the ground up. Because we look at things differently, our global infrastructure is fundamentally different from others."

AWS' infrastructure spans 32 regions at present, and there are plans for more ahead.

AWS has three times the number of data centers compared to the next largest cloud provider. Huge capacity and capabilities.

AWS also offers 60% more services and 40% more features for customers.

Selipsky highlights Amazon S3 storage as a prime example of its pattern of continuous innovation over the years.

S3 Glacier Deep Archive storage was the next iteration, followed by Amazon S3 Intelligent Tiering.

"Intelligent Tiering has already saved AWS customers over $2 billion," Selipsky says.

Selipsky says there's still more to come for S3 storage, however. This is only the beginning for S3.

We have our first announcement of the day here at AWS re:Invent.

Amazon S3 Express One Zone is now generally available. Up to ten times faster than S3 standard storage, Selipsky said.

This faster performance will enable users to cut costs by up to 50% compared to S3 standard.

We've moved on to general purpose computing. Selipsky points back to the launch of Graviton in 2018.

This service has evolved rapidly in recent years. Today, more than 50,000 customers use Graviton to realize price performance benefits, he notes.

And our second announcement of the day. The launch of Graviton4.

Selipsky says Graviton4 is the most powerful and energy efficient chip AWS has ever built.

It's 30% faster than Graviton3 on average and 40% faster for database applications. It's also 45% faster for large Java applications, Selipsky adds.

We're onto generative AI now. Expect some bombshells here.

"GenAI is the next step in artificial intelligence and it is going to redefine everything we do at work and at home."

"We think about generative AI as having three macro layers," Selipsky says.

"The bottom layer is used to train foundation models and run them in production. The middle layer provides the tools you need to build and scale generative AI applications.

"Then at the top we've got the applications that you use in everyday operations."

Selipsky points to AWS' long-standing relationship with Nvidia as a key example of how the company has focused on the bottom infrastructure layer that underpins all generative AI innovation.

"Today, we're thrilled to announce we're expanding our partnership with Nvidia," he says.

Nvidia CEO Jensen Huang has joined Selipsky on stage to give us an insight into this partnership expansion.

Huang confirms the deployment of a whole new family of GPUs. The brand new H200 is the big one here.

This improves the throughput of large language model inference by a factor of four, Huang says.

"32 Grace Hoppers can be connected by a brand new NVLink switch," Huang says. "With AWS Nitro, that becomes basically one giant virtual GPU instance."

AWS and Nvidia are also partnering to bring the Nvidia DGX Cloud to AWS.

"DGX Cloud is Nvidia's AI factory," Huang says. "This is how our researchers advance AI. We use our AI factories to advance our large language models, to simulate Earth 2 - a digital twin of the earth."

Huang says the company is building its largest AI factory. This is going to be 16,384 GPUs connected to one "giant AI supercomputer", he adds.

"We'll be able to reduce the training time of large language models in just half of the time," Huang says. This will essentially cut the cost of training in half each year.

Simply mind-boggling numbers here. And incredible compute power from Nvidia, all underpinned by AWS as part of this ongoing relationship between the duo.

Now we're talking about capacity, Selipsky says. It's critical that customers have access to cluster compute capacity when training AI models.

But fluctuating levels of demand mean they need short-term cluster capacity, which is an issue that no other cloud provider has addressed so far, except AWS, Selipsky says.

Amazon EC2 Capacity Blocks for ML was announced a few weeks ago. This enables customers to scale to hundreds of GPUs within one cluster. This will give them the capacity when they need it, and only when they need it, he adds.

Costs are a major consideration for organizations training AI models at present. The costs incurred by firms during training have been rising rapidly over the last year or so.

"As customers continue to train larger models, we need to keep pushing and scaling," Selipsky says.

Another announcement. The launch of the new AWS Trainium2 chip for generative AI and ML training.

This is optimized for training FMs with hundreds of billions - to trillions - of parameters. It's also four times faster than AWS Trainium.

We've been through the underlying infrastructure work that AWS is currently focusing on. Now we're moving onto the tools. Expect some Bedrock announcements.

"We know that many of you need it to be easier to access powerful foundation models and quickly build applications out with security and privacy in mind," Selipsky says.

"That's why we launched Amazon Bedrock."

"Customer excitement has been overwhelming," Selipsky says. More than 10,000 customers globally are using the Bedrock platform.

Organizations spanning a host of industries. From Adidas to Schneider Electric and United Airlines and Philips.

It's still early days though. There are new developments every day with generative AI, Selipsky says. This experimental stage at many enterprises is producing some great, tangible use cases of how generative AI can support organizations.

The flexibility of Bedrock and the availability of models through the framework is the best setup for customers, Selipsky says.

The events of the last ten days mean that offering customers flexibility has never been more important.

Bedrock gives access to foundation models from Anthropic, AI21 Labs, Meta's Llama 2, Cohere, and more.

AWS' relationship with Anthropic has been a key talking point in recent months.

The cloud giant has invested billions in the AI startup alongside Google. AWS is also Anthropic's primary cloud provider, underpinning all the AI innovation at the startup.

Anthropic CEO and co-founder Dario Amodei has joined Selipsky on stage now.

The duo are discussing the close relationship between the two firms. Hardware is a key talking point here. Anthropic is relying on AWS' Trainium and Inferentia chips.

Anthropic recently announced Claude 2.1, Amodei says. The token capacity in Claude 2.1 is seriously impressive compared to the model's previous iteration.

Hallucinations have also been reduced by nearly half. This is a major breakthrough, Amodei says, providing customers with more peace of mind over potential AI risks.

You can read more about Claude 2.1 in our coverage from last week.

"We've put a lot of work into making our models hard to break," Amodei says.

Safety and security are a major focus at Anthropic. Interpretability is also a key focus at the startup right now.

Amodei says the company views the current AI 'race' as a "race to the top" - but it's not what you think.

This is about striking a lead in all the right ways, taking into account safety and responsibility. This enables Anthropic to set an example for the rest of the industry, he says.

"The innovation around generative AI is explosive," Selipsky says. The company is excited about its own foundation models, the Titan models.

"We carefully choose how we train our models, and the data we used to do so," Selipsky says.

There are already multiple models in the Titan family: Titan Text Lite, Titan Text Embeddings Model, and Titan Text Express.

All of these foundation models are "extremely capable", Selipsky says. But the critical factor here for organizations is deriving value from internal company data to maximize the use of generative AI tools.

That's where Amazon Bedrock supports customers. It allows companies to access specific models based on their own specific business use cases.

Delta Air Lines is using Bedrock to drive AI innovation in customer support operations, Selipsky says. This is a prime example of a large organization leveraging the framework to create tools based on its unique individual needs.

"Delta's been testing with different foundation models in Bedrock, including Anthropic Claude," Selipsky adds.

"At the end of the day, you want FMs to do more than just provide useful and targeted information," Selipsky says. Fundamentally, we need generative AI tools to make decisions for us in some capacity. To act proactively.

That's why AWS launched Agents for Amazon Bedrock recently. This is now generally available.

We're onto security now. A key talking point in the last year amidst concerns over data leakage.

Selipsky fires a cheeky broadside at OpenAI and Microsoft. Security is top of mind for AWS, he says. And fundamentally, the company has trust in both itself and its partners.

The same can't quite be said about certain industry competitors...

On the topic of security and responsibility, Selipsky announces the launch of Guardrails for Bedrock.

This enables users to safeguard generative AI applications. Users can easily configure harmful content filtering processes based on internal company policies.

"We're approaching the whole concept of generative AI in a fundamentally different way," Selipsky says. Responsibility, security, and trust are top of mind for the cloud giant.

On the topic of AI security, Pfizer chief digital and technology officer Lidia Fonseca is live on stage now to run us through the pharma company's relationship with AWS and how the company is harnessing generative AI across its research operations.

This has been a long-standing collaboration between the two companies dating back to 2019. Pfizer's move to the cloud in 2021 was supported by AWS.

This saw the company move 12,000 applications and 8,000 servers to the cloud in just 42 weeks. One of the fastest, and largest, cloud migrations in history.

And all of this was done during arguably one of the most challenging periods in recent history - the pandemic.

Pfizer is experimenting with generative AI through Amazon Bedrock, Fonseca says.

Bedrock allows Pfizer to explore different models and generative AI tools based on specific business needs spanning a range of areas. This isn't just limited to medical research, but across IT operations and more.

We're back with Selipsky now, and he's talking skills. There's a major skills gap looming amid the ongoing focus on generative AI among enterprises globally. Simply put, businesses just don't have the relevant skills.

That's why the company is investing heavily in developing skills worldwide.

The company recently announced plans to provide AI training for two million people by 2025.

Now we're back to the top of the 'stack' Selipsky referenced earlier - the applications that leverage foundation models to support us in our daily lives.

A prime example of this is Amazon CodeWhisperer, Selipsky says. The AI coding assistant was launched last year and has been growing in popularity.

The company has been adding more features, functionalities, and capabilities in recent months. The coding assistant is helping developers spanning a host of industries drive productivity.

Many developers now view CodeWhisperer as a 'game changer'.

We've already had an Amazon CodeWhisperer announcement this week. Yesterday, AWS revealed new code remediation features for users to help weed out potential vulnerabilities and improve application development.

Now we're talking about AI chat applications at work. These applications are extremely useful for consumers, Selipsky says. But they don't really "work at work".

Well-publicized data privacy and security issues around the use of AI chat applications in the workplace have even led to other platforms - cough, ChatGPT - being banned.

And we have another announcement - Amazon Q, a generative AI assistant specifically tailored for business needs, Selipsky says.

Amazon Q has been made with data privacy and security in mind, according to Selipsky. It's also been trained on more than 17 years of AWS knowledge.

The chat application will provide natural language responses to a myriad of business-related queries.

Amazon Q could be a game changer. A highly intuitive chat assistant specifically tailored to individual use cases and business needs.

We've also got another announcement here - the launch of Amazon Q Code Transformation.

Selipsky says this has already been used internally at AWS, and the results have been "stunning". This has enabled developers to complete Java language upgrades in a "fraction of the time".

Devs at AWS carried out 1,000 Java upgrades in just two days, he says.

Amazon Q has been "built with privacy and security in mind from the ground up".

It's clear AWS is trying to position itself as the real trusted cloud and AI innovator right now. It just might work, especially given recent events. Enterprises want a steady hand, not a circus.

Amazon Q is also coming to Amazon QuickSight. This, Selipsky says, will provide organizations with a generative AI-powered business intelligence assistant.

Users can use natural language prompts to pull together reports on business performance and revenue stats, all based on internal company data.

Amazon Q is also coming to Amazon Connect. This could also be a game changer for contact center workers.

Connect will automatically create post-call summaries and support workers in real time with natural language prompts.

"Amazon Q is just the first of many specialized industry use cases," Selipsky says. "This is just the start of how we're going to reinvent the future of work."

There's one more thing left that customers need to make things work, Selipsky says.

Data.

To give us a run through of how to maximize the use of data, we have Stephan Durach, SVP for connected company development operations at BMW.

"We optimized our tech stack, and use data by leveraging AWS cloud technology," Stephan says.

The company has created its own cloud data hub leveraging AWS technology. BMW is also driving the use of machine learning internally using Amazon SageMaker.

An automated driving platform has also been developed using AWS technology, as well as route optimization services for drivers and an intelligent personal assistant used inside vehicles.

"20 years ago, BMW introduced its first connected vehicle," Stephan says.

Now it has more than 20 million connected vehicles. The sheer volume of data the company processes each day is mind-boggling - 12 billion requests per day, and 100TB of traffic data each day.

BMW expects this to triple in the next two years, meaning the company is rapidly accelerating its digital capabilities to accommodate this increased volume.

"To keep up with demand, we use AWS services to scale up services," he says.

We're back with Adam Selipsky now at AWS re:Invent 2023.

Selipsky is going to continue on the data strategy theme.

"When it comes to AI, your data is your differentiator," he says. Data quality has never been more important for enterprises.

Having an effective, cloud-based data strategy is now critical for organizations considering the use of generative AI. But how can different teams within a single organization maximize the use of data that pertains to their specific needs?

So to make the most of your data, you need a "comprehensive set of data services", Selipsky says.

That's why AWS has built what he describes as the most comprehensive set of data services currently on the market. This includes Amazon data lake services, analytics tools, and database services.

"You need this breadth and depth of choice so you can never compromise on cost or scale and can choose the right tool for the job," he insists.

We've got another announcement related to Zero-ETL integrations with Amazon Redshift.

This includes new integrations for Amazon Aurora PostgreSQL, Amazon RDS for MySQL, and Amazon DynamoDB.

"Customers are really excited about the prospect of a Zero-ETL future," Selipsky says.

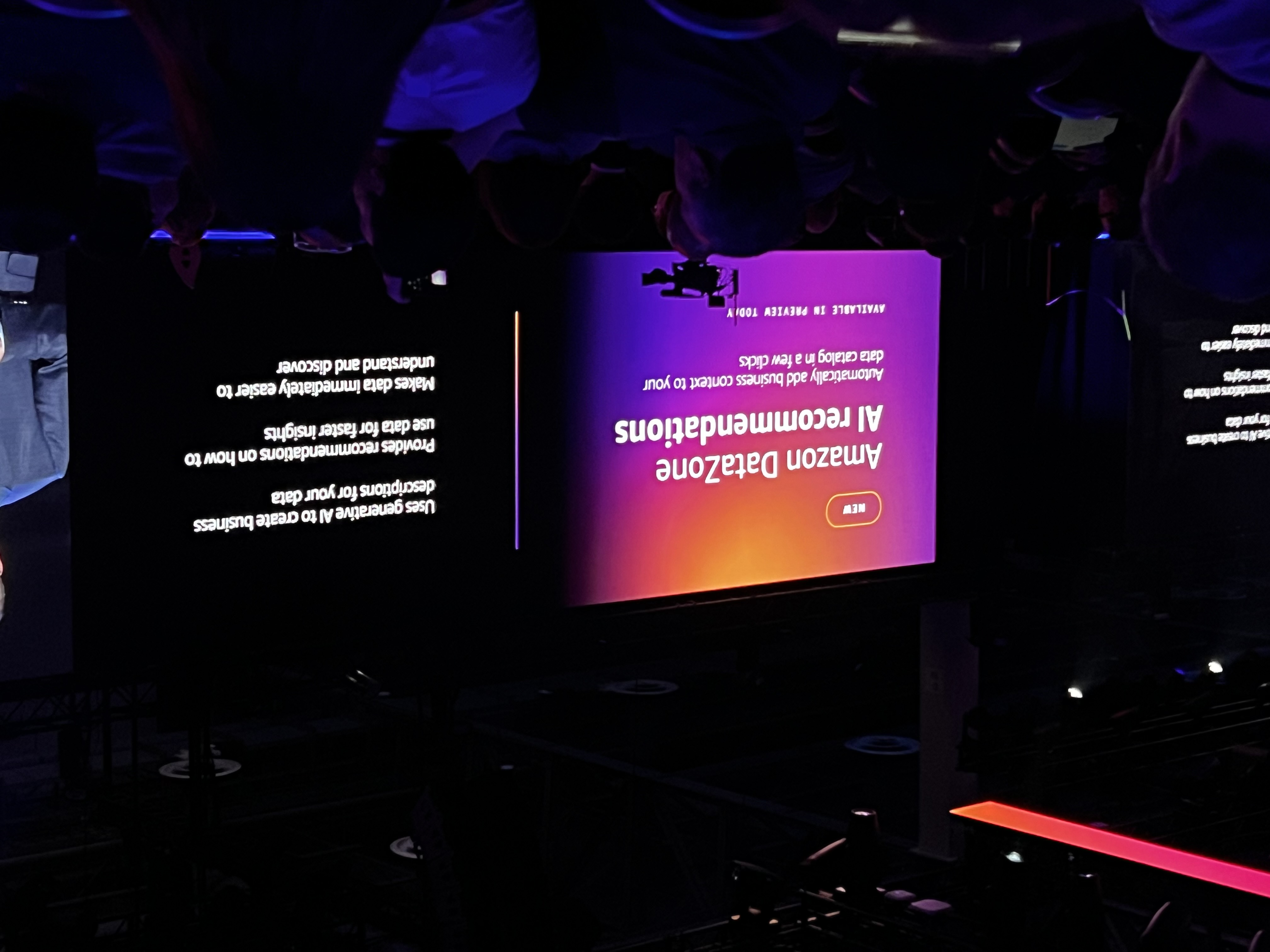

Data discoverability is critical, Selipsky says. That's why we've got the latest announcement at AWS re:Invent - Amazon DataZone AI recommendations.

This leverages generative AI to create business descriptions of internal company data. The assistant will provide recommendations on how to harness data more effectively.

Selipsky is talking about Project Kuiper now. A comprehensive satellite network to provide better access to the internet worldwide.

This project is a source of immense excitement internally at Amazon, he notes.

This won't just provide connectivity for consumers, but will also provide a dedicated enterprise connectivity service with security and data privacy in mind.

Adam Selipsky is rounding things off today in the opening keynote. What a start to AWS re:Invent.

We've had no shortage of product and service announcements spanning a number of key areas.

"Let's get out there and reinvent," Selipsky says, bringing the keynote to a close.

So, what've we learned so far?

• General availability of Amazon S3 Express One Zone. This will be up to ten times faster than S3 standard storage.

• The launch of Graviton4, the most powerful and energy efficient chip ever created by AWS.

• An expansion of AWS' long-standing partnership with Nvidia. The chipmaker will be seriously ramping up compute power relying on AWS infrastructure, according to CEO Jensen Huang.

• Huang also unveiled the launch of a new family of H200 GPUs. Serious compute power to support the generative AI wave at the moment.

• Amazon Q launch - this could be a game changer for AWS, its first major AI chat assistant that will be leveraged across a range of business functions - from programming to business intelligence and data visualization.

• Some exciting Zero-ETL announcements, including new Amazon Redshift integrations for Amazon Aurora PostgreSQL, Amazon RDS for MySQL, and Amazon DynamoDB.

We hope you've enjoyed our coverage so far this morning.

Make sure to check in tomorrow, when we'll be covering the day-two keynote session with Swami Sivasubramanian, VP for database, analytics, and machine learning, who will give us a technical deep dive through all the latest generative AI moves at AWS.

While we wait for the day-two keynote to begin, why not catch up on our coverage so far?

• AWS unveils ‘Amazon Q’ enterprise-grade AI assistant

• New Amazon S3 Express One Zone promises 10x performance boost

• Amazon’s Thin Client for business is an IT department’s dream

• AWS eyes AI-powered code remediation in Amazon CodeWhisperer update

• Amazon Detective offers security analysts their own generative AI sidekick

We're minutes away from the day-two keynote here at AWS re:Invent. Not long to go, and a great buzz in the crowd once again.

Swami Sivasubramanian takes to the stage to great applause, beginning his keynote on an optimistic note, describing generative AI as "our own supernovae".

We are on the edge of a period of great technological progress, he says.

Swami is discussing Ada Lovelace and her pioneering work as the world's first computer programmer.

"Despite a belief in their potential, Ada made something very clear, these machines could only perform tasks that humans were capable of ordering them to."

Lovelace believed that humans and computers would have a mutually beneficial relationship, much like what we are seeing with recent generative AI developments.

"Ada's analysis of computing is really inspiring to me, not only because her words have withstood the tests of time," he says.

Swami says he believes that the next stage of AI development will shift our relationship with technology.

There's another symbiotic relationship at play here though, the relationship between data and generative AI, he says. Maximizing the value of data is critical to generative AI development success.

What are the essentials to building a generative AI app? There are four key ingredients, Swami says.

• Access to a variety of foundation models

• A safe, secure, and private environment to leverage data

• Easy-to-use tools to build and deploy applications

• A purpose-built machine learning infrastructure

But the ingredients only go so far, Swami says. AWS has the comprehensive cookbook to provide customers with all the tips to achieve generative AI success.

This includes platforms such as SageMaker, and Amazon Bedrock.

We've got our first announcement from Swami here this morning. General availability of Claude 2.1 in Amazon Bedrock.

Claude 2.1 was released last week and offers an industry-leading 200k token context window, as well as a 2x reduction in the volume of hallucinations.

Here's everything you need to know about Claude 2.1.

And another quick fire announcement here for Amazon Bedrock. Swami confirms the availability of Meta's open source Llama 2 70B model.

Swami is not messing around this morning. Four big announcements in the space of five minutes, the latest being the availability of Titan Multimodal Embeddings.

"This model enables you to build richer multimodal search and recommendation options," he says. "Companies like Opera are using Titan multimodal embeddings to enhance search experience for customers."

We've also had the Amazon Titan Image Generator announcement. This image generator performs "far better" than other industry models, Swami says. This is currently available in preview.

The image generator model will also offer in-built watermarks, Swami says. The first industry stakeholder to provide this feature to ensure transparency.

We're getting a technical run-through of the image generator now. A very easy-to-use and intuitive platform. All done and dusted in just a matter of seconds.

"This is really cool," he says. Sure is.

"Because this model is trained for a large array of domains, businesses across many industries will be able to take advantage of this model."

The image generator joins a growing list of Titan models available for AWS customers via Bedrock.

This includes:

• Titan Text Embeddings

• Titan Text Lite

• Titan Text Express

• Titan Multimodal Embeddings

• Titan Image Generator

On Bedrock, Swami says more than 10,000 customers have taken advantage of the framework to develop a range of tools and applications to supercharge productivity and streamline operations.

Organizations including SAP, United Airlines, Philips, Ryanair and more.

United Airlines, for example, has used Bedrock to build intuitive generative AI applications aimed at enhancing customer services.

Data is the differentiator for generative AI applications, Swami says.

"Data is the key from a generic AI application, to an AI application that understands you and your business," he says.

So how do you customize models? By adapting them with fine-tuning, he says. You can adapt model parameters to suit your business and its specific needs.

Models can also be adapted using unlabeled data sets, or raw data, through continued pre-training, he says.

"Today you can leverage these techniques with Amazon Titan Lite, and Amazon Titan Express," he says. "These will enable your model to understand your business better over time."

"We make it easy to get started on Bedrock," Swami says. But some customers find it difficult to get the ball rolling. That's why AWS has its Generative AI Innovation Center, which offers customers bespoke advice on how to begin their generative AI journey.

The Generative AI Innovation Center will also launch a custom model program for Anthropic Claude, Swami reveals. This will again offer unique, business-specific advice and expertise for customers.

AWS is determined to offer "more options for our customers" and drive cost efficiency. Yesterday, Adam Selipsky announced an expansion of AWS' long-standing relationship with Nvidia.

AWS has also expanded its chip options for customers to rapidly accelerate development of AI models. Significant performance boosts and cost reductions for customers.

Training foundation models is extremely challenging, Swami says. Distributed training is an intensive process fraught with potential hurdles and pitfalls.

A lot of manual checking and fine tuning is required to ensure the process is going smoothly. To address this, Swami announces the launch of Amazon SageMaker HyperPod.

"This is a big deal," he says. SageMaker HyperPod can reduce the time to train models by up to 40%.

We've got a customer on stage now - Aravind Srinivas, co-founder and CEO at Perplexity.

Aravind will give us an insight into how Perplexity is using Amazon SageMaker to create its own LLMs.

Perplexity has been using Bedrock to experiment with Anthropic's Claude 2 model, he says.

"Claude 2 has injected new capabilities into Perplexity's product, helping us to be the leading research assistant in the market," he says.

Perplexity has also orchestrated several models within one product, leveraging Llama 2 and Mistral 7B, he adds.

SageMaker HyperPod has made it easier to create large language models at Perplexity, Aravind says.

"AWS has also helped us with customized service on our inferencing needs," he adds.

Swami is back on stage now, discussing data foundations. A critical focus for organizations hoping to leverage generative AI effectively.

"It is critical that you're able to store, organize and access high quality data to fuel your GenAI apps.

"To get high quality data for GenAI, you will need a strong data foundation. But developing a strong data strategy is not new," he says.

Many businesses have already made significant strides in bolstering their "data foundations".

Modernization of databases is critical for any business seeking to maximize the use of generative AI, Swami says. AWS offers a range of database services, helping customers to update and modernize their data architectures.

We've got another announcement here for vector search capabilities.

Vector search is now generally available for Amazon DocumentDB and Amazon DynamoDB.

"But we don't want to stop there," he says. In 2021, AWS added Amazon MemoryDB for Redis. This offers ultra-fast performance, Swami says.

That's why vector search for MemoryDB for Redis will be generally available from today.

This is one of the fastest AWS vector search experiences ever launched, Swami said, offering high throughput and high recall.

Another quick fire product announcement spree here from Swami. Amazon Neptune Analytics is now generally available.

This analytics database engine helps users find insights in Amazon Neptune graph data from data lakes up to 80x faster.

The platform can analyze tens of billions of connections in seconds. Snap has already been using this, Swami says, and has unlocked significant benefits for the social networking platform.

We're onto zero-ETL pipeline efficiency now here in the keynote. And we have another product announcement.

Swami unveils the launch of Amazon OpenSearch Service zero-ETL integration with Amazon S3. This is another "big one", he says, that will play a key role in helping to seamlessly search, analyze, and visualize log data stored in Amazon S3.

This is available in preview now.

We have another customer on stage with Swami now. Rob Francis, SVP and CTO for Booking.com will give us an insight into how the travel platform is leveraging generative AI tools to support customer services.

Rob says he's "very excited" about the potential of generative AI for customers of Booking.com.

Booking.com is a giant in the industry. It has over 28 million listings of places to stay, you can book flights to 524 countries, and can book vehicles at 52 locations globally.

"As you can imagine, this presents a lot of data challenges for us," he says. Over 50 petabytes of data, to be exact.

Booking has partnered with AWS for several years now. Data scientists are unlocking marked benefits through this partnership. A three-times increase in model training capabilities, a two-times decrease in failed model training attempts, Rob says.

Booking has also launched an 'AI trip planner' to make it easy for customers to book their vacations from start to finish.

The travel firm created this platform using Amazon SageMaker, he says. The demo here is fascinating. Highly intuitive and a very smooth process for customers.

The platform draws on review data to provide users with detailed insights into locations, activities, and venues. These personalized hotel recommendations are helping travelers get the best deals and vacations in a streamlined manner.

We're back with Swami again. Now we're looking at how generative AI can be used proactively to manage data.

"We often think of generative AI as an outcome of data," he says.

While this is partly correct, generative AI offers organizations the chance to create a cyclical process of improvement, using generative AI developed on company data, to actively improve quality and management of said data.

This is where Amazon Q is a game changer. The AI assistant can be used to support data management practices and more.

"We know Q can make these tasks easier," he says. "Because Q knows you and your business, it helps you manage your data."

If you want to learn more about Amazon Q, check out our coverage of the announcement from day one at AWS re:Invent.

Swami has given a shout out to Hurone AI. This startup aims to improve cancer care detection and treatment through generative AI. This dynamic startup is using Amazon Bedrock to

"I love this story, because it showcases how generative AI can augment our ability to solve human problems," Swami says.

This is a great example of generative AI being used to solve real-world issues, and improve human worker productivity, he adds.

Sticking with the productivity angle, we're onto Amazon CodeWhisperer now. We've had a couple of interesting updates for this AI coding assistant already this week, which you can find below.

• Amazon CodeWhisperer updates could be a game changer for shifting left

CodeWhisperer is helping to rapidly improve developer productivity, Swami says.

Amazon Q is also helping to streamline productivity across multiple business functions, from HR, to software development, to IT operations.

"I love seeing how builders are using the power of data and GenAI to solve problems," Swami says.

Swami says that we need to better utilize the power of human intellect to strengthen the relationship between data and generative AI. Human workers are the facilitators in this regard.

Swami draws parallels to nature in describing the relationship between humans, data, and generative AI. Humans lead development and are a key component in this symbiotic relationship.

Sticking with human involvement, we've got a new product announcement.

Swami announces the launch of Model Evaluation tools, available on Amazon Bedrock.

Available now in preview, this tool enables users to evaluate, review, and compare foundation models.

"With this new feature, you can preview and perform automatic or human-based evaluations for all your needs."

"Human inputs will continue to be a critical component of the development process with generative AI," he says.

With human involvement critical to generative AI development, it's essential that workers have the relevant skills to carry out their roles effectively.

Swami says that, as someone who grew up without access to technology, he's deeply committed to supporting skills development initiatives at AWS.

The cloud giant has invested heavily in supporting skills courses for thousands of people worldwide.

Earlier this week, Amazon revealed it plans to train more than two million people in AI skills by 2025.

Rounding things off at AWS re:Invent, Swami says "we're just getting started" with generative AI development. We are at the beginning of an exciting wave of innovation that will unlock huge benefits for both organizations and individual workers globally.

While we're waiting for the final keynote session to begin here at re:Invent, why not catch up on our coverage of the conference so far?

Yesterday we had a flurry of quick-fire product announcements from Swami Sivasubramanian, including updates to Amazon SageMaker and the addition of Claude 2.1 and Llama 2 70B for customers using Amazon Bedrock.

• AWS re:Invent: All the big updates from the rapid fire day-two keynote

• Amazon CodeWhisperer updates could be a game changer for shifting left

• New Amazon S3 Express One Zone promises 10x performance boost

• AWS unveils ‘Amazon Q’ enterprise-grade AI assistant

• Amazon’s Thin Client for business is an IT department’s dream

• Amazon Detective offers security analysts their own generative AI sidekick

Another busy morning at the Venetian Hotel in Las Vegas. The crowds show no sign of stopping and there's a real buzz. Let's hope this continues for the re:Play party this evening.

A slightly more relaxing opening act this morning at day three of AWS re:Invent.

If you've enjoyed our coverage of AWS re:Invent so far, you can follow us on social media for additional updates or subscribe to our daily newsletter.

Here we go. The final keynote at AWS re:Invent 2023. Dr Vogels walks out to rapturous applause from the crowd.

Dr Vogels starts with a few brief comments on cloud migration, highlighting the fact that much of the crowd will likely have 'grown up' in a cloud native environment. The age of sprawling hardware estates is over - or is it?

Hardware constraints are widely publicized, he says. This has harmed business innovation in recent years and left some organizations at a disadvantage.

"Cloud of course, removed all of those constraints," he adds. "Think about all the things you can do now that you couldn't do before."

But as speed of execution becomes increasingly critical, many businesses are becoming lost and losing sight of how to work smart and architect with cost in mind.

Financial constraints placed on many firms in recent months mean that we need to rethink how to maximize IT estates.

PBS is a great example of an organization that has rearchitected its infrastructure in recent years, Vogels says. The company has driven costs down, and reduced streaming costs by 80%.

The broadcaster's shift to the cloud has been transformative in terms of its ability to meet viewer demands, especially during new release periods.

Cost and sustainability are intricately linked, Vogels adds. In making sure that systems are sustainable, businesses can also ensure cost efficiency.

"Cost is a pretty good approximation for the resources you use. Throughout this talk when I say 'cost', I hope you also keep 'sustainability' in mind."

WeTransfer is another AWS customer Vogels highlights as a great case study here.

The organization has undertaken a widescale modernization of its cloud estate, and has unlocked marked benefits in terms of cost efficiency, sustainability, and scalability.

WeTransfer unlocked a 78% reduction in emissions from server use in 2022 by optimizing energy efficiency capabilities.

The era of the 'frugal architect' is in full swing, Vogels says. IT leaders must prioritize ways to optimize and streamline efficiency across their estates.

This is a concept Vogels has been talking about for over a decade now.

"Find the dimension you're going to make money over, then make sure that the architecture follows the money."

That's a quote from 2012. This has been a long-running topic and one that many organizations fail to address - much to their detriment.

Optimizing architectures has multiple follow-on impacts on the organization and customers.

A more streamlined IT estate means lower costs for the business, which translates to lower costs for consumers and thus a more satisfied customer base, he says.

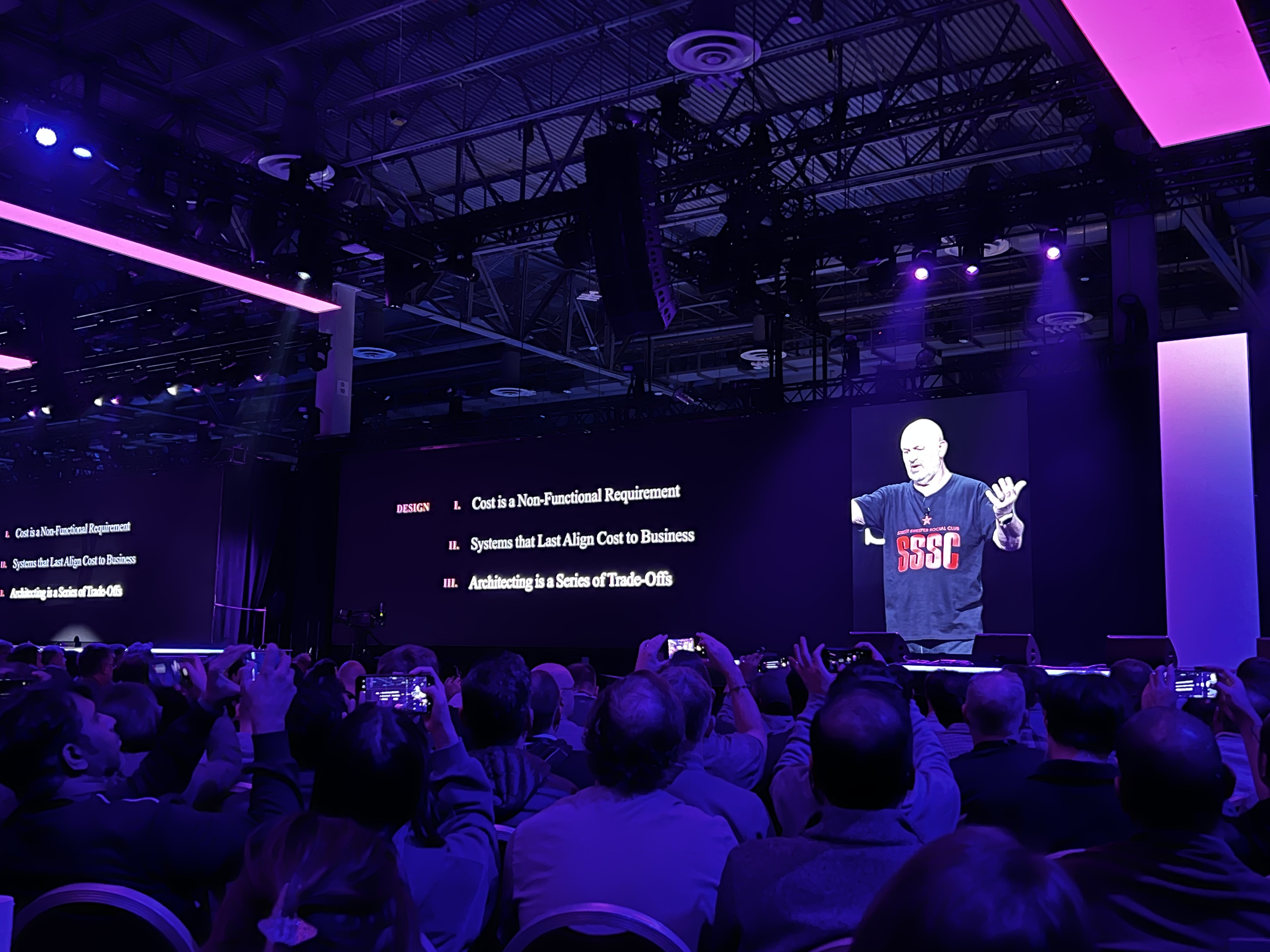

The three key considerations to becoming a truly frugal architect:

• Cost is a non-functional requirement. This is the critical first step - acknowledge cost considerations and adapt.

• Create systems that last. Evolvable systems can reduce long-term cost burdens and will deliver marked business benefits.

• Understand that architecting is a 'series of trade-offs'. Architecting can accrue technical and economic debt. These debts must be paid, Vogels says. Ensure alignment across the business to identify key areas of focus.

"The business needs to understand AWS costs," Vogels says. "Keep that in mind."

Vogels says a sharpened focus on metrics and measurements, and observability, is key to aligning priorities. This enables businesses to understand key areas to tackle within their architecture.

Vogels reflects on his childhood in the Netherlands. During the 1970s, there was an oil crisis which forced people to avoid using cars and become more energy efficient.

An interesting parallel to current energy concerns around IT estates.

Houses in which individuals had meters where they could see their energy usage were typically more energy efficient, Vogels says. This is because the homeowners became more conscious of their usage.

This mindset can be applied to current cost and energy considerations. Insights into energy usage can enable you to measure the long-term individual costs and take action to reduce both energy usage and the financial burden placed on businesses.

Microservice costs, for example, have been consistently decreasing over the last decade, Vogels says. This is due to modernization of energy efficiency techniques and the ability for firms to identify their usage and optimize accordingly.

We have a new service announcement from Vogels here this morning with the general availability of AWS Management Console myApplications.

This new service enables AWS users to monitor and manage the cost, health, and performance of applications, Vogels says.

And just like that, another product announcement. This time the launch of Amazon CloudWatch Application Signals, available in preview from today.

This new service will automatically monitor applications to track performance and streamline optimization, Vogels notes.

Two big cost efficiency-focused product launches in as many minutes.

Continuing on the optimization topic here, Vogels says this has become somewhat of a "lost art".

"We've been moving really fast, and we're not thinking at a smaller level. But as it turns out, quite a few of your costs go there. We need to think about eliminating digital waste in our systems," he says.

PBS, for example, had some content that would be watched maybe "once or twice a month", he says. They made the decision to remove this content. The burden this placed on the IT estate was not worth the returns it delivered.

The cost of development should be "continuously under scrutiny", Vogels says. Firms must strike a perfect balance between the costs to build and the costs to operate.

Many struggle to strike this balance, however. And it causes significant long-term harm.

"Cost awareness is a lost art. Because sustainability is a freight train that is coming your way that you cannot escape," he says.

Organizations should place some constraints around cost and sustainability.

We're moving onto AI innovation now here in Vogels' keynote.

There's been a lot of discussion about generative AI so far at AWS re:Invent, but Vogels will be giving us an insight into how this emerging technology can be harnessed to fuel transformation.

"The things we're doing at AWS define the technical future," he says.

Vogels adds that "historical context" around AI is important to understand where we're going with the technology.

Vogels points to some of the pioneering work from Alan Turing in the mid-20th century as a great historical example of how humans perceived the potential of emerging technologies.

Theories around artificial intelligence were being discussed during this period, but things "didn't really go anywhere".

'Expert systems' emerged in the years following. But these were very laborious and not quite impactful enough.

"The big breakthrough came when we saw this shift from symbolic AI to embodied AI," Vogels says. It was out of this that modern ideas of artificial intelligence, and its application in the workplace, emerged.

In recent years, we've seen deep learning and reinforcement learning emerge and become increasingly critical. Running in parallel to this were improvements in hardware that underpinned this wave of AI innovation.

Now we're seeing a "revolution" with the generative AI wave, Vogels says. But we're not looking at this specifically today.

We're going back a step to look at "good, old fashioned AI".

"Not everything needs to be done with these massive large language models," Vogels says. We've already seen some fantastic examples of "old-fashioned" AI delivering operational improvements and efficiency in recent years.

The International Rice Research Institute (IRRI) in Manila has used artificial intelligence to optimize the production of rice to tackle hunger in the Philippines, Vogels says.

This is a perfect example of old-fashioned AI being used to deliver positive impact for broader society. Truly a great case of "AI for good".

Cergenx, another AWS customer, has been using AI to improve hearing tests and support for children with hearing issues.

Precision AI, meanwhile, has been using AI to target weeds on farmland to "attack individual weed plants" and prevent the use of harmful chemicals that damage the environment.

All of this is powered by the use of open data sets. "Good data", he says. This is the key to leveraging artificial intelligence effectively.

But making sense of data can often be challenging for organizations. Vogels says that it's akin to "finding a needle in a haystack".

But machine learning represents the 'magnet' that businesses can use to find these needles and derive value from their data.

Vogels continues on the topic of maximizing data for developing impactful AI applications.

A recurring theme throughout AWS re:Invent. A tradesperson is only as good as their tools, as they say, and in the age of AI your applications are only as good as your data.

There's been a lot of discussion around the massive demands of large language models of late. Vogels says that "small, fast, and inexpensive" models are becoming increasingly appealing to many organizations.

Many organizations would like to embrace machine learning and AI, but they don't have the capacity - be it technical or financial - to use the immense compute power often required.

We're looking deeper at how to effectively integrate generative AI tools now.

There are a host of considerations organizations and developers must tackle during the adoption and integration process, he says. Similar to IT architectural efforts, this is a delicate balancing act.

We have another product announcement here, with the general availability of Amazon SageMaker Studio Code Editor.

This new platform will help streamline the development of AI applications, and marks the latest in a slew of updates to the service so far this week.

Vogels seems very excited about the potential of Amazon Q.

There's huge potential here to help optimize code generation and support developers. But it's not limited to that. There are a host of potential use cases spanning multiple operational departments in the modern enterprise, from HR to IT operations and business intelligence.

Amazon Q certainly seems to be AWS' flagship AI product offering right now alongside Bedrock and CodeWhisperer.

Vogels brings his keynote to a conclusion now with a bold statement.

"I think there's never been a better time to be a builder," he says. This is an exciting period for developers the world over, with generative AI offering great potential to improve productivity and unlock performance benefits for enterprises across a host of industries.

He rounds off his keynote with a call to arms.

"Tonight...tonight we're going to party."

And that's a wrap on our keynote coverage from AWS re:Invent 2023.

We hope you've enjoyed our rolling coverage over the three days in Las Vegas.

For additional insights, check out some of our product announcement coverage from the week.

• Amazon CodeWhisperer updates could be a game changer for shifting left

• All the big updates from the rapid fire day-two keynote

• Amazon’s Thin Client for business is an IT department’s dream

• New Amazon S3 Express One Zone promises 10x performance boost

• AWS unveils ‘Amazon Q’ enterprise-grade AI assistant

• Amazon Detective offers security analysts their own generative AI sidekick

• AWS eyes AI-powered code remediation in Amazon CodeWhisperer update

There's more to come from re:Invent, so make sure to subscribe to our daily newsletter for all our latest updates and analysis.