European Commission says tech giants are more effectively examining illegal hate speech

But the UK ranks as the third-worst performing nation in terms of removing harmful content

Social media platforms have generally become more effective at removing online hate speech and harmful content within 24 hours, but are providing feedback to their users less frequently.

Tech firms are reviewing the majority of notifications they receive on hate speech in a timely way, according to a European study, with an average of 89% of flagged content across several platforms examined within 24 hours. These social networks, including Facebook, Twitter, YouTube, Instagram and Google+, are also removing 72% of the illegal hate speech notified to them.

The figures, outlined in the European Commission's (EC) latest monitoring report on its voluntary Code of Conduct, mark a significant improvement since the first such study in 2016. The first of what are now four monitoring reports saw 40% of flagged content examined within 24 hours, and 28% removed.

The study saw dozens of organisations raise thousands of queries across the social media platforms in question during a six-week period, with the latest monitoring report conducted in late 2018.

Despite the pan-European improvement, however, the UK still lags well behind the continental average, with the third-lowest rate of content removal across all member states.

Just 54% of flagged content is removed in the UK, according to the figures. This is a drop from the previous monitoring report, measured in December 2017, which saw 66% of illegal content removed.

Facebook is the best-performing platform on the reviewing front, with 92.6% of cases seen within 24 hours, and a further 5.1% seen within 48 hours. The corresponding figures are 83.8% and 7.9% for YouTube, and 88.3% and 7.3% for Twitter.

Meanwhile, feedback to users, along with a rationale behind these decisions, is being provided less frequently, with 65% of users receiving feedback in 2018 against 69% in 2017's survey.

Facebook is the only company informing users systematically, the report says, with 92% of notifications receiving feedback, but this is a reduction from almost 95% last year.

The latest figures are released in light of mounting pressure in the UK for social media companies to do more to monitor harmful content and hate speech posted on their platforms.

An influential parliamentary committee of MPs just last week published a report recommending that policymakers draw up a set of new laws to subject social media companies to a legally enforceable 'duty of care'.

The European Union (EU) has even proposed limiting the statutory deadline for tech giants to remove illegal content to just one hour.

Keumars Afifi-Sabet is a writer and editor who specialises in public sector, cyber security, and cloud computing. He first joined ITPro as a staff writer in April 2018 and eventually became its Features Editor. Although a regular contributor to other tech sites in the past, these days you will find Keumars on LiveScience, where he runs its Technology section.

-

Why keeping track of AI assistants can be a tricky business

Why keeping track of AI assistants can be a tricky businessColumn Making the most of AI assistants means understanding what they can do – and what the workforce wants from them

By Stephen Pritchard

-

Nvidia braces for a $5.5 billion hit as tariffs reach the semiconductor industry

Nvidia braces for a $5.5 billion hit as tariffs reach the semiconductor industryNews The chipmaker says its H20 chips need a special license as its share price plummets

By Bobby Hellard

-

UK financial services firms are scrambling to comply with DORA regulations

UK financial services firms are scrambling to comply with DORA regulationsNews Lack of prioritization and tight implementation schedules mean many aren’t compliant

By Emma Woollacott

-

Supply chain services, 2023

Supply chain services, 2023whitepaper Covering the leading service providers in enterprise supply chain innovation

By ITPro

-

Transforming the aftermarket supply chain

Transforming the aftermarket supply chainwhitepaper with IBM’s cognitive enterprise business platform for Oracle Cloud and generative AI

By ITPro

-

The Forrester Wave™: API management solutions

The Forrester Wave™: API management solutionsWhitepaper The 15 providers that matter the most and how they stack up

By ITPro

-

A green future: How the crypto asset sector can embrace ESG

A green future: How the crypto asset sector can embrace ESGWhitepaper Understanding the challenges and opportunities of new ESG standards and policies

By ITPro

-

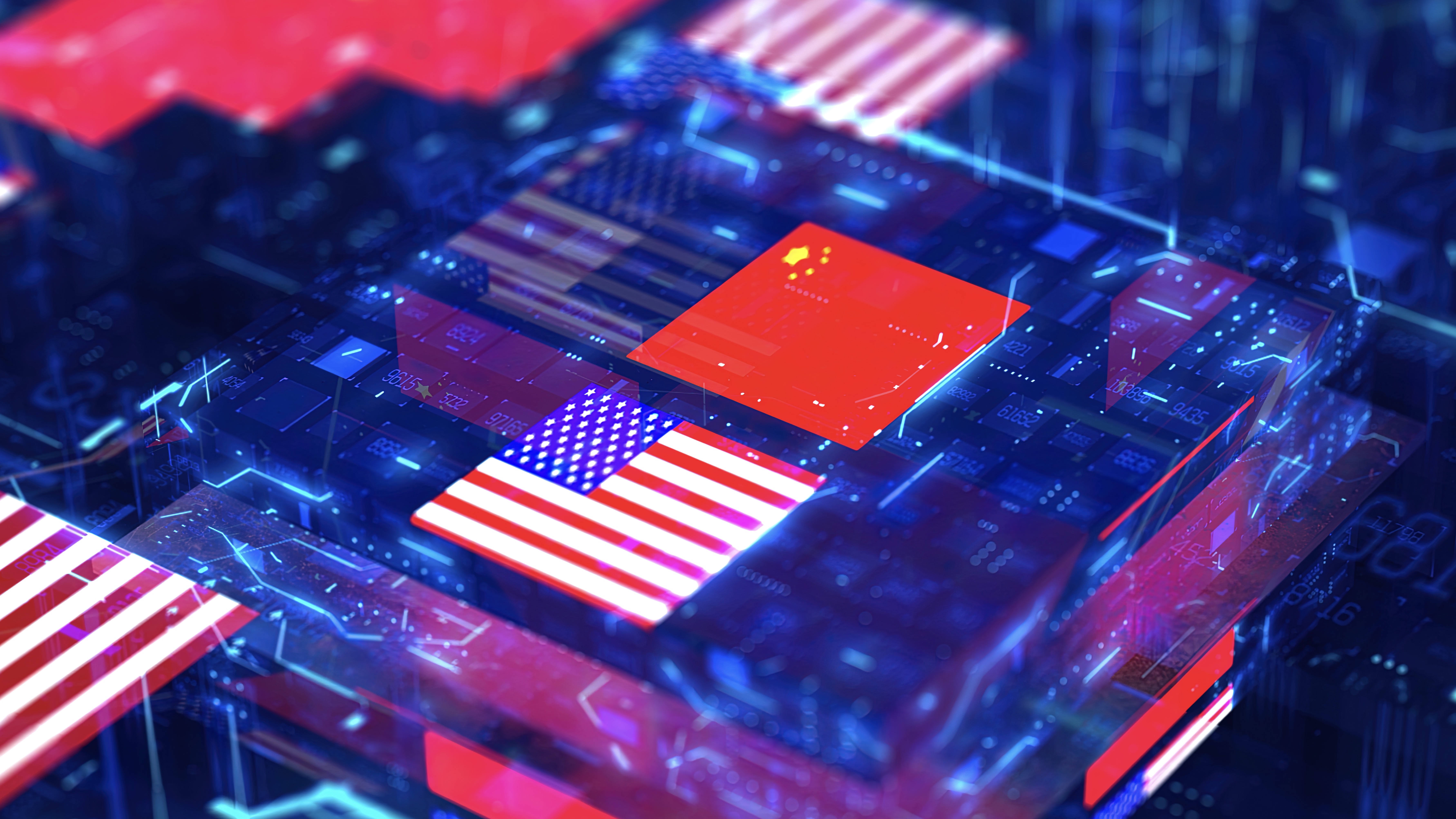

What the US-China chip war means for the tech industry

What the US-China chip war means for the tech industryIn-depth With China and the West at loggerheads over semiconductors, how will this conflict reshape the tech supply chain?

By James O'Malley

-

Former TSB CIO fined £81,000 for botched IT migration

Former TSB CIO fined £81,000 for botched IT migrationNews It’s the first penalty imposed on an individual involved in the infamous migration project

By Ross Kelly

-

Microsoft, AWS face CMA probe amid competition concerns

Microsoft, AWS face CMA probe amid competition concernsNews UK businesses could face higher fees and limited options due to hyperscaler dominance of the cloud market

By Ross Kelly