Calls for watchdog to prevent AI discrimination

Research argues AI algorithms lack transparency necessary for legal challenges

A watchdog should be created to keep tabs on artificial intelligence and other automated systems to avoid discrimination and poor decision-making, according to a group of experts.

A report by a team at the Alan Turing Institute in London and the University of Oxford calls for a dedicated independent body to be set up to investigate and monitor how these systems make decisions, many of which can have a major impact on a person's livelihood.

Because of the economic value of AI algorithms, the technical details behind these systems are a closely guarded secret within the industry and are rarely discussed publicly. However, an independent body would be able to represent individuals who feel they have been discriminated against by an AI service, according to the researchers.

"What we'd like to see is a trusted third party, perhaps a regulatory or supervisory body, that would have the power to scrutinise and audit algorithms so they could go in and see whether the system is actually transparent and fair," said researcher Sandra Wachter.

The team, which also includes Brent Mittelstadt and Luciano Floridi, argues that although people are able to challenge any flawed decisions made by AI, current protective laws are out of date and inefficient.

The UK Data Protection Act enables individuals to challenge AI-led decisions, but companies do not have to release information that they consider a trade secret. This currently includes exactly how an AI algorithm arrived at its decision, leaving people in the dark as to why they may have had a credit card application turned down or been mistakenly removed from the electoral register.

The new General Data Protection Regulation (GDPR), which comes into force across EU member states and the UK in 2018, promises to bring greater transparency to AI decision-making. However, the researchers argue it runs the risk of being "toothless" and won't give potential victims enough legal certainty.


"There is an idea that the GDPR will deliver accountability and transparency for AI, but that's not at all guaranteed," said Mittelstadt. "It all depends on how it is interpreted in the future by national and European courts."

A third party could strike a balance between companies' concerns about leaking trade secrets and the right of individuals to know they have been treated fairly, according to the report.

The report looked at cases in Germany and Austria, which are considered to have the most robust laws governing AI-led decision-making. In most cases, companies were required to hand over only general information about the algorithm's decision process.

"If the algorithms can really affect people's lives, we need some kind of scrutiny so we can see how an algorithm actually reached a decision," said Wachter.

Microsoft's recent attempt to develop AI in the social media space ended in disaster when its Tay chatbot, designed to mimic conversations, was manipulated into tweeting messages such as "Hitler did nothing wrong".

In December 2016, an AI-driven identification system embarrassingly rejected a passport application from a 22-year-old Asian man after deciding that he had his eyes closed.

Dale Walker is a contributor specializing in cybersecurity, data protection, and IT regulations. He is the former managing editor of ITPro, as well as its sibling sites CloudPro and ChannelPro. He spent a number of years reporting for ITPro from numerous domestic and international events, including those held by IBM, Red Hat, and Google, and has been a regular reporter at Microsoft's various yearly showcases, including Ignite.

-

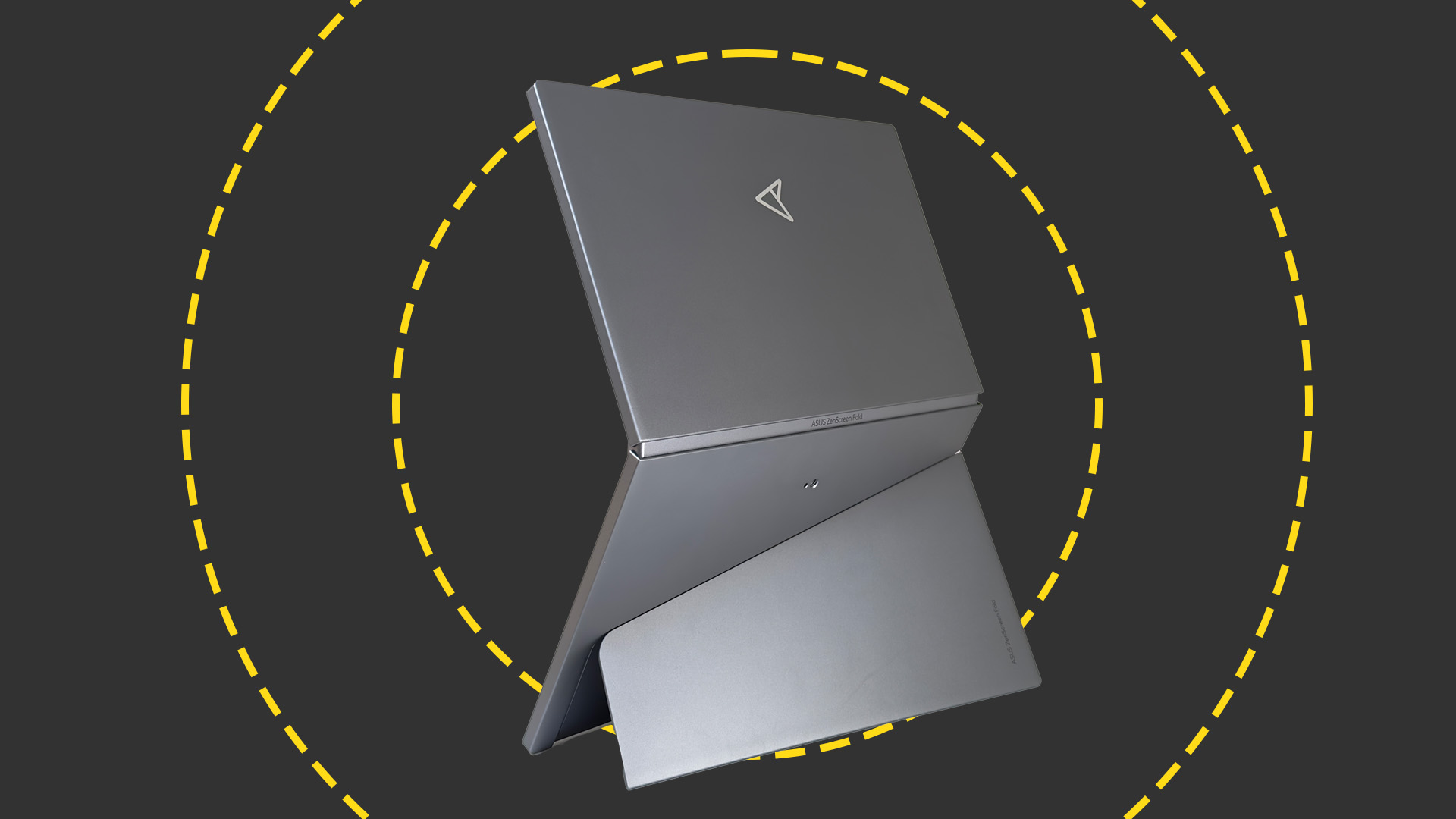

Asus ZenScreen Fold OLED MQ17QH review

Asus ZenScreen Fold OLED MQ17QH reviewReviews A stunning foldable 17.3in OLED display – but it's too expensive to be anything more than a thrilling tech demo

By Sasha Muller

-

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto Networks

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto NetworksCase study Adopting zero trust is a necessity when your own users are trying to launch cyber attacks

By Rory Bathgate