AI’s use as a hacking tool has been overhyped

New research reveals that most LLMs are unable to exploit one-day vulnerabilities, even when given the CVE description

The offensive potential of popular large language models (LLMs) has been put to the test in a new study that found GPT-4 was the only model capable of writing viable exploits for a range of CVEs.

The paper, from researchers at the University of Illinois Urbana-Champaign, tested a series of popular LLMs, including OpenAI’s GPT-3.5 and GPT-4 as well as leading open-source models from Mistral AI, Hugging Face, and Meta.

The agents were given a list of 15 vulnerabilities, ranging from medium to critical severity, to test how successfully each LLM could autonomously write exploit code for known CVEs.

The researchers tailored a prompt designed to get the best results from the models, encouraging the agent not to give up and to be as creative as possible in its approach.

During the test, the agents were given access to web browsing tools, a terminal, search results, file creation and editing, and a code interpreter.

The investigation found GPT-4 was the only model capable of successfully writing an exploit for any of the one-day vulnerabilities, with a success rate of 86.7%.

The authors noted they did not have access to GPT-4’s commercial rivals such as Anthropic’s Claude 3 or Google’s Gemini 1.5 Pro, and so were not able to compare their performance to that of OpenAI’s flagship GPT-4.


The researchers argued the results demonstrate the “possibility of an emergent capability” in LLMs to exploit one-day vulnerabilities, but also that finding the vulnerability itself is a more difficult task than exploiting it.

According to the study, GPT-4 was highly capable when provided with a specific vulnerability to exploit. The researchers said additional features, including better planning, larger response sizes, and the use of subagents, could make it even more capable.

In fact, when given an Astrophy remote code execution (RCE) vulnerability that was published after GPT-4’s knowledge cutoff date, the agent was still able to write code that successfully exploited it, even though the vulnerability did not appear in the model’s training data.

Removing CVE descriptions significantly hamstrings GPT-4’s blackhat capabilities

While GPT-4’s potential for misuse by hackers may seem concerning, the research suggests the offensive capabilities of LLMs remain limited for now: even GPT-4 needed full access to the CVE description before it could create a viable exploit. Without the description, its success rate fell to just 7%.

This weakness was further underlined by the finding that, although GPT-4 identified the correct vulnerability 33% of the time, its ability to exploit the flaw without further information was limited: of the vulnerabilities it correctly identified, GPT-4 was able to exploit only one.

The researchers also measured how many actions the agent took with and without the CVE description, finding that the average number of actions differed by only 14%, a limit the authors attributed to the length of the model’s context window.

Speaking to ITPro, Yuval Wollman, president of managed detection and response firm CyberProof, said that despite growing interest from cyber criminals in the offensive capabilities of AI chatbots, their efficacy remains limited at this time.

“The rise, by hundreds of percentage points, in discussions of ChatGPT on the dark web shows that something is going on, but whether it's being translated into more effective attacks? Not yet.”

Wollman said the offensive potential of AI systems is well established, citing previous simulations run on the AI-powered BlackMamba malware, but argued the maturity of these tools is not quite there for them to be adopted more widely by threat actors.

Ultimately, Wollman expects AI to have a significant impact on the ongoing arms race between threat actors and security professionals, but says it is too early to tell what shape that impact will take.

“The big question would be how the GenAI revolution and the new capabilities and engines that are now being discussed on the dark web would affect this arms race. I think it's too soon to answer that question.”

Solomon Klappholz is a former staff writer for ITPro and ChannelPro. He has experience writing about the technologies that facilitate industrial manufacturing, which led to him developing a particular interest in cybersecurity, IT regulation, industrial infrastructure applications, and machine learning.

-

Google Cloud announces new data residency flexibility for UK firms, accelerator for regional startups

Google Cloud announces new data residency flexibility for UK firms, accelerator for regional startupsNews UK-specific controls and support for up and coming AI firms is central to Google Cloud’s UK strategy

-

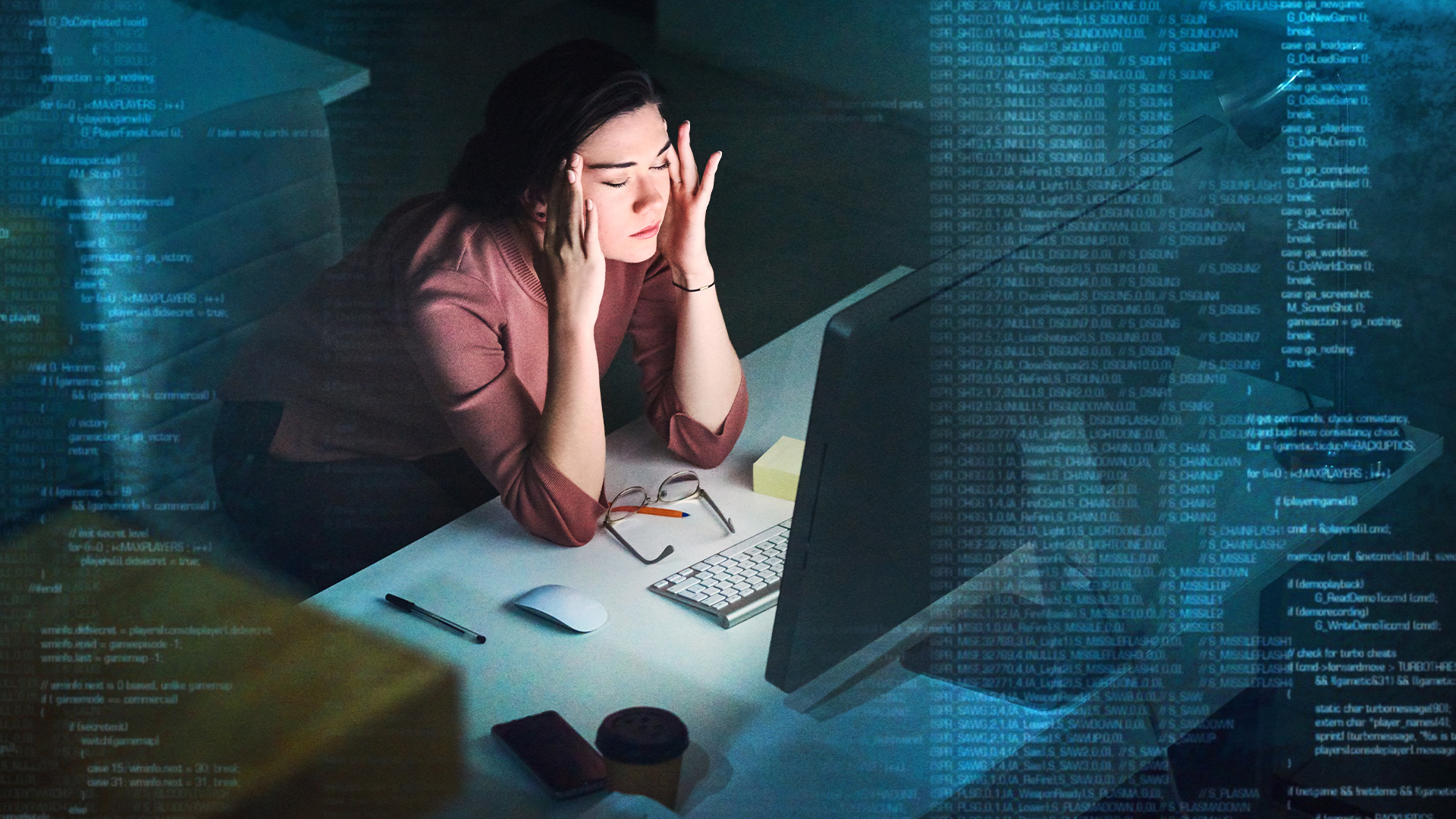

Workers are covering up cyber attacks for fear of reprisal – here’s why that’s a huge problem

Workers are covering up cyber attacks for fear of reprisal – here’s why that’s a huge problemNews More than one-third of office workers say they wouldn’t tell their cybersecurity team if they thought they had been the victim of a cyber attack.

-

Cyber professionals call for a 'strategic pause' on AI adoption as teams left scrambling to secure tools

Cyber professionals call for a 'strategic pause' on AI adoption as teams left scrambling to secure toolsNews Security professionals are scrambling to secure generative AI tools

-

AI security blunders have cyber professionals scrambling

AI security blunders have cyber professionals scramblingNews Growing AI security incidents have cyber teams fending off an array of threats

-

CISOs bet big on AI tools to reduce mounting cost pressures

CISOs bet big on AI tools to reduce mounting cost pressuresNews AI automation is a top priority for CISOs, though data quality, privacy, and a lack of in-house expertise are common hurdles

-

The FBI says hackers are using AI voice clones to impersonate US government officials

The FBI says hackers are using AI voice clones to impersonate US government officialsNews The campaign uses AI voice generation to send messages pretending to be from high-ranking figures

-

Almost a third of workers are covertly using AI at work – here’s why that’s a terrible idea

Almost a third of workers are covertly using AI at work – here’s why that’s a terrible ideaNews Employers need to get wise to the use of unauthorized AI tools and tighten up policies

-

Foreign AI model launches may have improved trust in US AI developers, says Mandiant CTO – as he warns Chinese cyber attacks are at an “unprecedented level”

Foreign AI model launches may have improved trust in US AI developers, says Mandiant CTO – as he warns Chinese cyber attacks are at an “unprecedented level”News Concerns about enterprise AI deployments have faded due to greater understanding of the technology and negative examples in the international community, according to Mandiant CTO Charles Carmakal.

-

Security experts issue warning over the rise of 'gray bot' AI web scrapers

Security experts issue warning over the rise of 'gray bot' AI web scrapersNews While not malicious, the bots can overwhelm web applications in a way similar to bad actors

-

Law enforcement needs to fight fire with fire on AI threats

Law enforcement needs to fight fire with fire on AI threatsNews UK law enforcement agencies have been urged to employ a more proactive approach to AI-related cyber crime as threats posed by the technology accelerate.