Microsoft files suit against threat actors abusing AI services

Cyber criminals are accused of using stolen credentials for an illegal hacking-as-a-service operation

Microsoft has filed a lawsuit against 10 foreign threat actors, accusing the group of stealing API keys for its Azure OpenAI service and using them to run a hacking-as-a-service operation.

According to the complaint, filed in December 2024, Microsoft discovered in late July 2024 that customer API keys were being used to generate illicit content.

After investigating the incident, it found the credentials had been stolen, having been scraped from public websites.

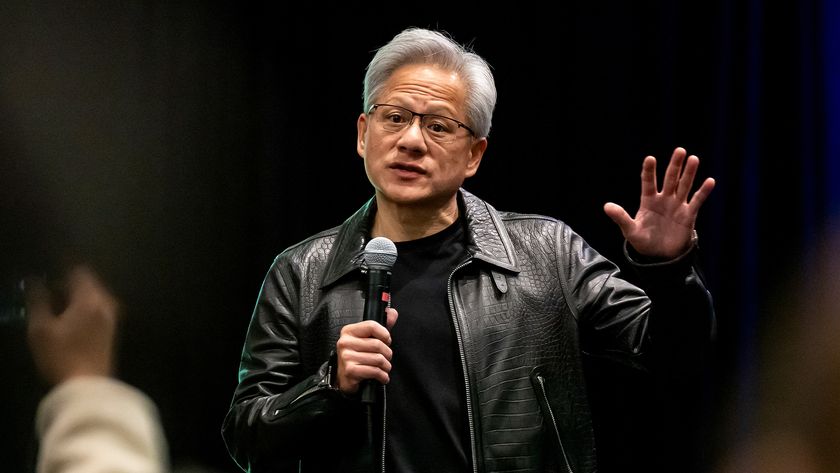

In a blog post publicly announcing the details of the legal action, Steven Masada, assistant general counsel at Microsoft’s Digital Crimes Unit (DCU), said the group identified and unlawfully accessed accounts with ‘certain AI services’ and intentionally reconfigured these capabilities for malicious purposes.

“Cyber criminals then used these services and resold access to other malicious actors with detailed instructions on how to use these custom tools to generate harmful and illicit content,” he reported.

In particular, it appears the group had bypassed internal guardrails to use the DALL-E AI image generation system to create thousands of harmful images.

“Upon discovery, Microsoft revoked cyber criminal access, put in place countermeasures, and enhanced its safeguards to further block such malicious activity in the future,” Masada noted.

He added that Microsoft was able to seize a website that was instrumental to the group’s operation. The seizure will allow the DCU to collect further evidence on those responsible and on how these services are monetized.

Threat actors consistently look to jailbreak legitimate generative AI services

Masada warned that generative AI systems are continually being probed by cyber criminals looking for ways to corrupt the tools for use in threat campaigns.

“Every day, individuals leverage generative AI tools to enhance their creative expression and productivity. Unfortunately, and as we have seen with the emergence of other technologies, the benefits of these tools attract bad actors who seek to exploit and abuse technology and innovation for malicious purposes,” he explained.

“Microsoft recognizes the role we play in protecting against the abuse and misuse of our tools as we and others across the sector introduce new capabilities. Last year, we committed to continuing to innovate on new ways to keep users safe and outlined a comprehensive approach to combat abusive AI-generated content and protect people and communities. This most recent legal action builds on that promise.”

OpenAI, a key partner of Microsoft for the frontier models driving its AI services, reported in October 2024 that it had disrupted more than 20 attempts to use its models for malicious purposes since the start of the year.

The company said it observed threat actors trying to use its flagship ChatGPT service to debug malware, generate fake social media accounts, and produce general disinformation.

In July 2024, Microsoft also warned users of a new method of prompt engineering AI models into disclosing harmful information.

The jailbreaking technique, labelled Skeleton Key, involves asking the model to augment its behavior guidelines so that, when given a request for illicit content, it complies and simply prefixes the response with a warning instead of refusing.

Earlier that year, researchers published a paper on a weakness in OpenAI’s GPT-4 where attackers could jailbreak the model by translating their prompts into rarer or ‘low-resource’ languages.

Because the model has had less rigorous training on languages such as Scots Gaelic, Hmong, or Guarani, prompts translated into them were far more likely to get the system to generate harmful outputs.

Solomon Klappholz is a Staff Writer at ITPro. He has experience writing about the technologies that facilitate industrial manufacturing, which led him to develop a particular interest in IT regulation, industrial infrastructure applications, and machine learning.
