HPE launches two servers into space

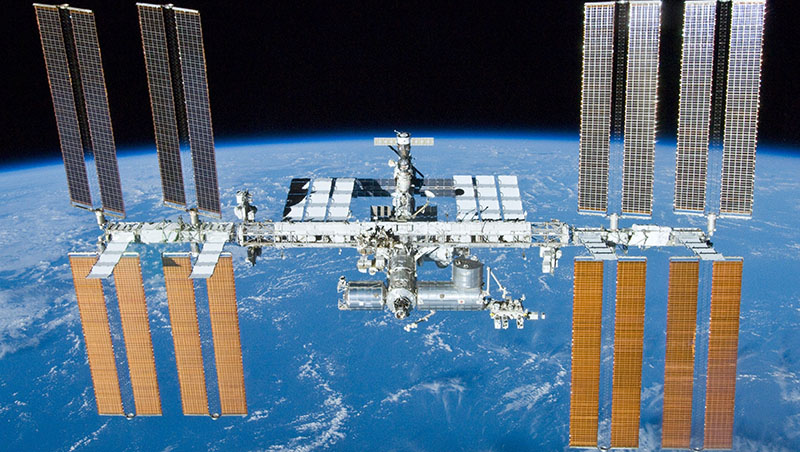

Spaceborne Computer Project sees Apollo units attached to the ISS

Hewlett Packard Enterprise (HPE) is subjecting two of its Apollo servers to the rigours of space in the hope of making its product line ready for interplanetary travel.

The company has sent two of its high density, high performance units to the International Space Station (ISS) to see whether they can work as well in space as they do on earth.

What makes this experiment, known as the Spaceborne Computer Project, different is that the organisation is seeking to "ruggedize" the appliances through software, rather than modifying the hardware to make the servers more robust.

"We have a postulate that we can harden with software and this experiment will help us determine if we can or cannot [do that]," Mark Fernandez, Americas CTO of SGI at HPE and co-principal investigator for software on the Spaceborne Computer Project, told IT Pro.

If the experiment succeeds, Fernandez and his colleagues will have the Apollo units running as well in low earth orbit as they do back on terra firma. To measure this, the team is also running two servers identical to those sent to the ISS in Wisconsin as control units, giving it four data points in total.

"We're looking at this at the macro-level," he explained. "Whereas traditional hardening looks down at the silicon or the hardware and tries to anticipate those physical interactions between the radiation, microgravity etc. and account for that. We're looking at the ... scientific application and its results and can I get the results correctly if I slow the machine down, if I let it run a little cooler than it normally runs, if I give it more time to do error correction, if I encourage it to do error correction checks more often than it has been doing."

Fernandez said that, as there are multiple variables in the experiment, the team may find that software hardening lets some computations run as normal but not others.


"We have NASA approved interactive access to the systems onboard the ISS and we'll tweak our software parameters to try to find a set that gives us correct computations and minimising or eliminating errors over a one year period," Fernandez said.

"If we find out, for example, that we're getting too many memory bit errors, then running applications that are memory intensive might not be a good idea, but if you're running those that are computationally intensive and the CPU isn't encountering those errors, then we know that we can use a standard COTS server onboard a spaceship for those types of [workloads]," he added.

Indeed, this experiment feeds into HPE's wider ambition to be part of the first manned mission to Mars. As the company has pointed out in a blog post, most calculations for space missions are done back on the ground. For a Mars mission, however, this is impractical, because the communications lag between Earth and Mars can reach about 20 minutes in each direction.

Attaching a server, or indeed servers, to the spacecraft eliminates this problem, effectively operating as an extreme version of edge computing.
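The size of that lag follows from simple arithmetic: the one-way signal delay is the Earth-Mars distance divided by the speed of light. The sketch below assumes round figures of roughly 55 million km at closest approach and roughly 400 million km at the planets' farthest separation; these distances are approximations added for illustration, not figures from HPE.

```python
# Rough estimate of Earth-Mars signal delay (illustrative sketch).
# The distances below are approximate round figures, assumed for illustration.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum, km/s


def one_way_delay_minutes(distance_km: float) -> float:
    """Time in minutes for a radio signal to cover distance_km."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60


for label, distance_km in [("closest", 55e6), ("farthest", 400e6)]:
    one_way = one_way_delay_minutes(distance_km)
    # A question sent to Earth and its answer sent back cost twice the one-way time.
    print(f"{label}: {one_way:.1f} min one way, {2 * one_way:.1f} min round trip")
```

At the far end of the range, a request sent to ground computers and its reply would take over 40 minutes in total, which is the delay an on-board server avoids.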

Specs

The Spaceborne Computer project uses standard off-the-shelf Apollo x86 servers. HPE has added extra layers of error detection, collection and prevention software on top of the typical high performance computing (HPC) benchmarks.

Explaining why HPE had chosen to use this particular hardware, Fernandez said: "There are three reasons for us selecting members of the Apollo 40 family. One is they're dense, two is they're high performing and number three, they're run-of-the-mill plain Jane pizza boxes that anybody can get and we sell many, many, many of.

"We didn't want to have any implications that this was any kind of special hardware, any new hardware. It's plain Jane, two-socket x86 base servers that anyone can have, because if we can get these guys to run properly, we can get anything to run properly."

At the end of their mission to the ISS, the servers will return to earth. If any have failed, they will undergo standard failure analysis to determine which component or components were at fault and why.

"We have plans to make any necessary engineering changes that would improve the reliability of the server for space, which will almost certainly improve the reliability for all of the similar systems that we sell here on earth," Fernandez said.

This is, in fact, another important element of the experiment. While a manned mission to Mars may be decades away, HPE can use what it learns to modify and improve its range much more quickly.

In particular, the company is hoping for three things that can be fed back into non-spacefaring technology: a stable and reliable set of parameters for the BIOS, the CPU and the memory systems; the physical operating conditions, such as voltage and heat, that deliver reliability; and an optimised software stack that controls the other two.

"It has benefits for space travel, it has benefits for on-earth computation and of course it has benefits for our customers to discover these safety features that give us the more correct answers," Fernandez concluded.

Main image credit: NASA/Crew (public domain)

Jane McCallion is Managing Editor of ITPro and ChannelPro, specializing in data centers, enterprise IT infrastructure, and cybersecurity. Before becoming Managing Editor, she held the role of Deputy Editor and, prior to that, Features Editor, managing a pool of freelance and internal writers, while continuing to specialize in enterprise IT infrastructure, and business strategy.

Prior to joining ITPro, Jane was a freelance business journalist writing as both Jane McCallion and Jane Bordenave for titles such as European CEO, World Finance, and Business Excellence Magazine.

-

On the ground at HPE Discover Barcelona 2025

On the ground at HPE Discover Barcelona 2025ITPro Podcast This is a pivotal time for HPE, as it heralds its Juniper Networks acquisition and strengthens ties with Nvidia and AMD

-

HPE promises “cross pollinated" future for Aruba and Juniper

HPE promises “cross pollinated" future for Aruba and JuniperNews Juniper Networks’ Marvis and LEM capabilities will move to Aruba Central, while client profiling and organizational insights will transfer to Mist

-

HPE ProLiant Compute DL325 Gen12 review: A deceptively small and powerful 1P rack server with a huge core count

HPE ProLiant Compute DL325 Gen12 review: A deceptively small and powerful 1P rack server with a huge core countReviews The DL325 Gen12 delivers a CPU core density and memory capacity normally reserved for expensive, power-hungry dual-socket rack servers

-

HPE's new Cray system is a pocket powerhouse

HPE's new Cray system is a pocket powerhouseNews Hewlett Packard Enterprise (HPE) has unveiled new HPC storage, liquid cooling, and supercomputing offerings ahead of SC25

-

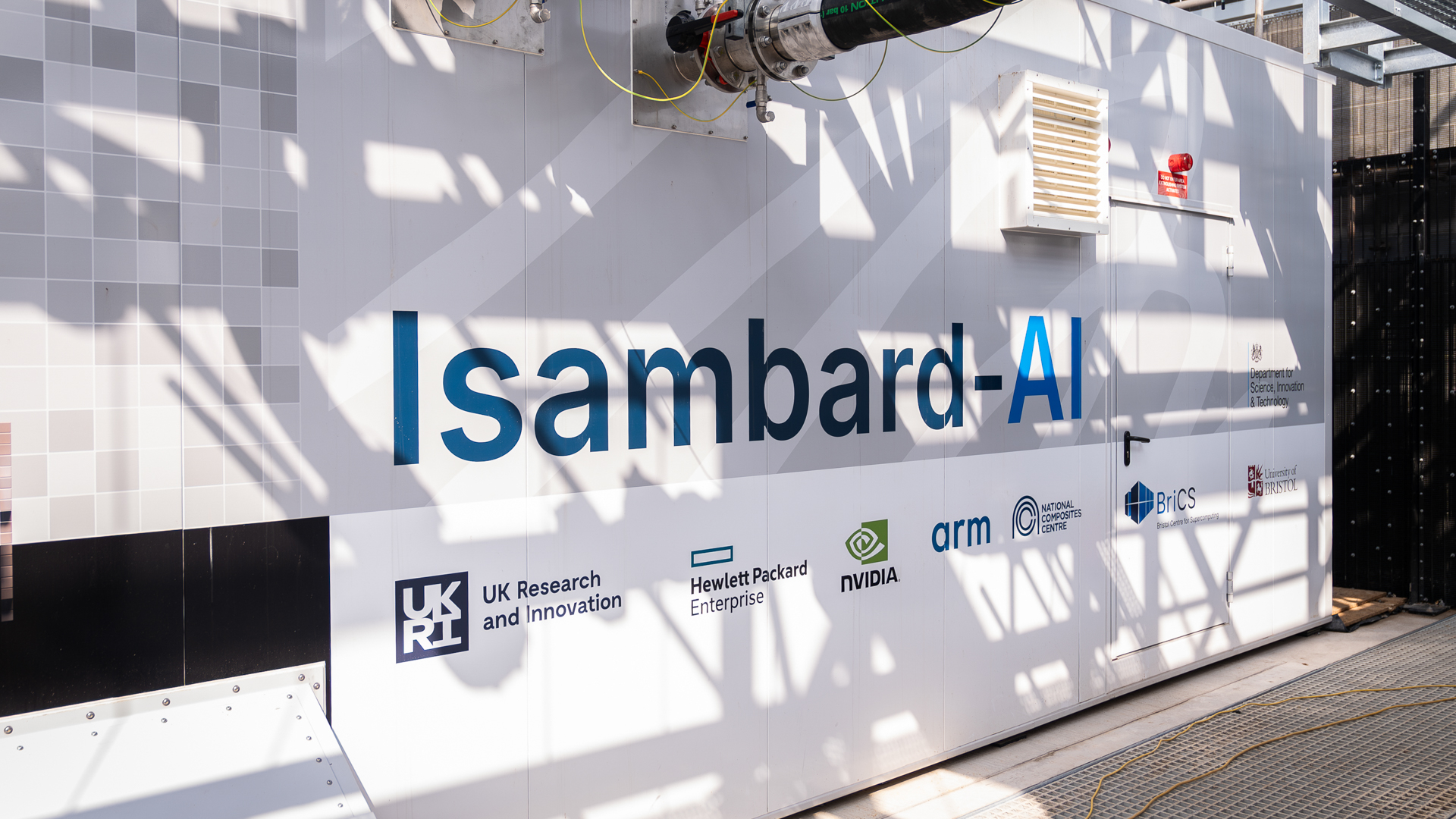

Inside Isambard-AI: The UK’s most powerful supercomputer

Inside Isambard-AI: The UK’s most powerful supercomputerLong read Now officially inaugurated, Isambard-AI is intended to revolutionize UK innovation across all areas of scientific research

-

HPE forced to offload Instant On networking division and license Juniper’s AI Ops source code in DOJ settlement

HPE forced to offload Instant On networking division and license Juniper’s AI Ops source code in DOJ settlementNews HPE will be required to make concessions to push the deal through, including divesting its ‘Instant On’ wireless networking division within 180 days.

-

HPE eyes enterprise data sovereignty gains with Aruba Networking Central expansion

HPE eyes enterprise data sovereignty gains with Aruba Networking Central expansionNews HPE has announced a sweeping expansion of its Aruba Networking Central platform, offering users a raft of new features focused on driving security and data sovereignty.

-

HPE unveils Mod Pod AI ‘data center-in-a-box’ at Nvidia GTC

HPE unveils Mod Pod AI ‘data center-in-a-box’ at Nvidia GTCNews Water-cooled containers will improve access to HPC and AI hardware, the company claimed