What is ethical AI?

Ethical AI is a concept that has evolved significantly in recent years, but defining a 'good outcome' is key to understanding what it is

As a concept, ethical AI can seem quite abstract or like something out of a science-fiction film. Today’s AI systems are thankfully a far cry from the likes of Skynet or HAL 9000, but there is nevertheless an important conversation to be had about how AI can be ethically developed and deployed.

In short, ethical AI is an artificial intelligence system that follows guidelines such as accountability and transparency, and has been designed with factors such as privacy and individual human rights in mind.

Ethical AI has become a highly sensitive topic in recent years, especially with its increased use in public life. The use of AI facial recognition technology by police services, for example, has been a frequent political flashpoint and has been heavily criticized by privacy rights groups amid claims of discrimination and a lack of transparency.

A common criticism is a lack of understanding around how these algorithms make deductions. Because the algorithms tend not to be transparent, a problem often described as the ‘black box’, it can be hard to know whether their datasets contain any kind of bias that influences the conclusions the system reaches.

Ethical AI falls within the broader field of study commonly known as AI ethics, in which sector experts and academics attempt to set out an ethical and legal framework that developers can refer to in order to produce reliable ethical AI.

In other words, AI ethics is the discipline through which ethical guidelines are set, while ethical AI is a practical implementation of AI technology that fits those guidelines. The two terms are often confused across the internet, but the focus of this article is on ethical AI.

A recurring problem with AI systems that aim to produce human-like results is that one cannot easily embed ‘ethics’ into a system that operates using an extremely complex series of mathematical decisions.

When interacting with a chatbot that uses generative AI technology to function, one could easily convince oneself that the program was responding intelligently, or even considering factors such as what would be ‘right’ to say in any given situation.

That’s not the case, however. The responses presented to users merely reflect what the system has been taught, and are selected because the system identifies them as the most relevant response to a user query.

It’s important to acknowledge that a significant portion of AI systems do not generate content at all. In the business world specifically, AI systems may be used to identify patterns in data or automate processes rather than create new content from user inputs.

Any AI system carries the risk of exacerbating errors or biases to produce unethical results. For example, an AI recruitment system could favor one specific group of applicants based on certain variables, such as gender or race.

This is an issue that has occurred previously. In 2018, Amazon’s AI hiring tool demonstrated gender bias due to the quality of the data it was fed. This tool was eventually scrapped by Amazon amid complaints.
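One simple way to surface the kind of skew described above is to compare how often each group is selected. The sketch below is illustrative only: the records, group names, and function names are invented for this example, and it has no connection to how Amazon's tool worked or was audited. A commonly cited rule of thumb from US employment guidance, the ‘four-fifths rule’, treats a ratio below 0.8 as a warning sign rather than proof of discrimination.

```python
from collections import Counter

# Hypothetical screening outcomes: (group, was_shortlisted).
# These records are invented purely for illustration.
outcomes = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(records):
    """Return the share of shortlisted applicants for each group."""
    totals = Counter(group for group, _ in records)
    selected = Counter(group for group, shortlisted in records if shortlisted)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"selection-rate ratio: {ratio:.2f}")
```

A check like this only flags a disparity in outcomes. It does not explain where the disparity comes from, which is why the quality of the training data still has to be examined separately.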

Similarly, generative AI can produce unethical text or image outputs. Microsoft learned this the hard way with its Tay chatbot, which was trained by Twitter users into spewing hate speech within 16 hours of its release in March 2016.

More recently, Google was forced to temporarily halt the use of its Gemini-based image generation tool amid complaints that it was producing inaccurate images based on user inputs.

What is the purpose of ethical AI?

AI presents unique challenges when it comes to output. Unlike traditional algorithms which can easily be quantified and have predictable outcomes – say, a simple program for multiplication or even one for encryption – the results of an AI system are weighted by a complex series of statistical models.
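The contrast can be shown with a toy example. In the sketch below, `multiply` stands in for a traditional algorithm, while `sample_next_word` picks an output at random according to a set of weights. The words and weights are invented for illustration and do not come from any real model, which would involve vastly more parameters.

```python
import random


def multiply(a, b):
    """A traditional algorithm: the same inputs always give the same output."""
    return a * b


# Hypothetical output weights, invented for illustration only.
NEXT_WORD_WEIGHTS = {"helpful": 0.6, "neutral": 0.3, "offensive": 0.1}


def sample_next_word(rng):
    """Pick one word at random, in proportion to its weight."""
    words = list(NEXT_WORD_WEIGHTS)
    weights = list(NEXT_WORD_WEIGHTS.values())
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(0)
print(multiply(6, 7))                               # always the same result
print([sample_next_word(rng) for _ in range(5)])    # varies with the random draw
```

Even in this tiny example, an unwanted output remains possible whenever its weight is above zero, which is one reason outputs are harder to guarantee than those of a conventional program.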

Input also plays an important role in affecting how ‘good’ the outcome of an AI system is. Particularly for large generative AI models, adequately-prepared datasets can make the difference between the creation of insightful, potentially misleading, or outright offensive content.

This applies to bias within data, or even the bias of the framework through which a model was trained.

In 2023, Dell CTO John Roese provided some insight into how the tech company has endeavored to remove all non-inclusive language from its internal code and content.

If the company chose to use this data to train a large language model (LLM) to produce marketing content internally, this would, in theory, prevent unwanted language from appearing in its outputs.

Using this as a basis for ethical results, one could define a ‘good’ output for AI as that which falls within the ethical boundaries established by the developers.

Recognizing that it’s impossible to completely eliminate bias in AI is an important step in its development. No system can be made entirely neutral, but the field of AI ethics can help to create guardrails that developers include within systems.

For example, many popular models such as OpenAI’s GPT-4 have been refined using reinforcement learning from human feedback (RLHF). This process involves human testers who are shown two potential outputs and choose the better of the two.

Over time, the AI can infer the parameters within which it is expected to operate, becoming more accurate and also less likely to produce harmful output such as hate speech.
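The pairwise comparison step can be sketched in a few lines. The example below is a simplified illustration, not OpenAI's implementation: it assumes a handful of invented comparisons and fits a single score per output using a Bradley-Terry style update, so that outputs humans preferred end up with higher scores. In practice, RLHF trains a neural reward model on such comparisons and then uses it to fine-tune the language model itself.

```python
import math

# Hypothetical human judgements: (preferred_output, rejected_output).
# The labels are invented for illustration.
comparisons = [
    ("polite_reply", "rude_reply"),
    ("polite_reply", "off_topic_reply"),
    ("off_topic_reply", "rude_reply"),
    ("polite_reply", "rude_reply"),
]


def fit_preference_scores(pairs, learning_rate=0.5, epochs=200):
    """Fit one score per output so that preferred outputs score higher."""
    scores = {name: 0.0 for pair in pairs for name in pair}
    for _ in range(epochs):
        for winner, loser in pairs:
            # Probability the current scores assign to the human's choice.
            p_winner = 1.0 / (1.0 + math.exp(scores[loser] - scores[winner]))
            # Gradient step on the log-likelihood of that choice.
            step = learning_rate * (1.0 - p_winner)
            scores[winner] += step
            scores[loser] -= step
    return scores


scores = fit_preference_scores(comparisons)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```

The learned scores play the role of a reward signal: outputs resembling those that testers rejected are scored lower, and so become less likely after fine-tuning.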

These methods can carry their own ethical concerns, however. A January 2023 report by Time alleged that OpenAI outsourced some of its model refinement work to a firm in Kenya where workers were paid as little as $1.32 per hour.

Ethical AI is a complex field in which developers and organizations deploying systems must consider a multitude of variables. Firms must approach this from a holistic perspective and be prepared to continually reassess their methods and strategy.

Arguments against ethical AI

Some industry stakeholders have argued that the concept of ethical AI is fundamentally flawed, and that it’s impossible to assess AI systems on the same basis as human beings.

This argument goes hand-in-hand with discussions around whether roles associated with particular ethical problems - such as those advising customers or involved in processes that affect people’s fundamental rights - are ever correct for AI.

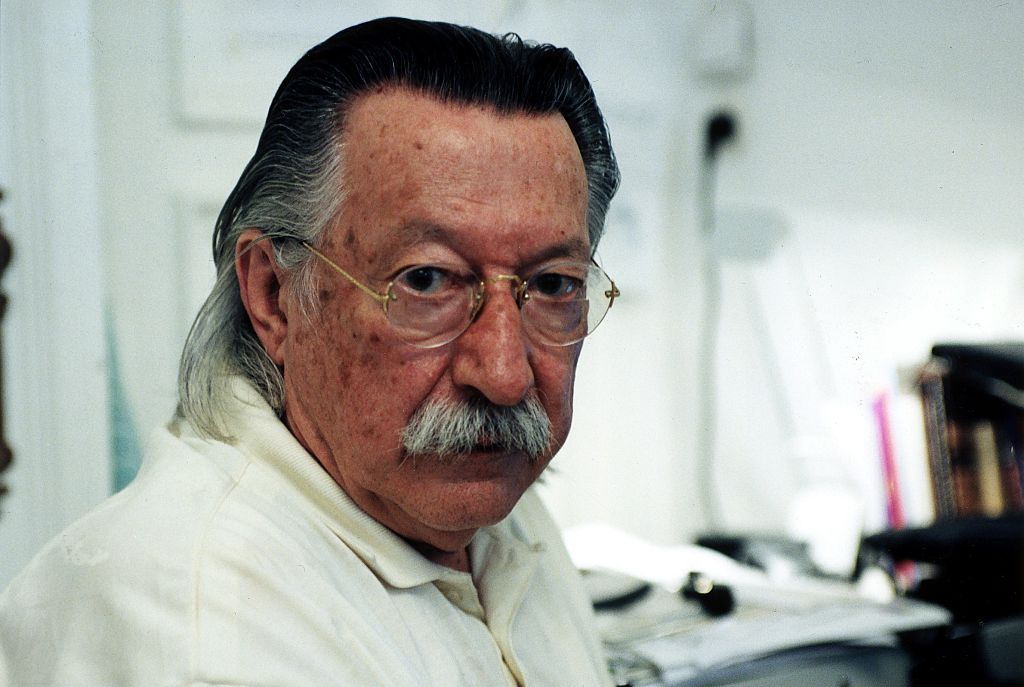

Famed computer scientist Joseph Weizenbaum argued from the 1960s onward that non-human entities shouldn't be used in roles that rely on human interaction or relationship building.

Computer scientist Joseph Weizenbaum in 1977

In human-facing roles, workers need to experience and show empathy. Therefore, however human-like the interactions with an AI system may be for the consumer, they will never be able to fully replace the emotions experienced in real-world interactions.

However, as developers and many businesses are already implementing AI systems, these theoretical concerns must be considered alongside practical ethical mitigations within current AI models.

Boundaries for the use of AI are important considerations that technologists must take into account. Beyond the risks associated with replacing any given role with an AI system, one must also factor in the rights of individuals who come into contact with AI systems, particularly systems whose creators attempt to pass them off as real human interaction.

Global tech companies are making efforts to explore ways to deploy AI safely and ethically in consumer-facing scenarios. In July 2023, a host of major AI companies, including Google, Microsoft, Anthropic, and OpenAI, formed a group known as the Frontier Model Forum.

This consortium of industry heavyweights was formed specifically to help private and public sector organizations safely develop and deploy AI systems.

Similarly, in December 2023 a separate ‘AI Alliance’ aimed at responsible development was launched by a host of firms including Meta, IBM, AMD, Dell Technologies, and Oracle.

Ethical AI and regulation

The topic of ethical AI was thrust firmly into the spotlight during the development of the EU AI Act, which the EU Parliament formally adopted in March 2024.

The first-of-its-kind legislation outlines EU-wide rules pertaining to transparency, human oversight, accountability, and the quality of data used to train systems.

Under the terms of the regulation, AI systems will be ranked based on ‘risk factors’ and their potential to infringe on the rights of citizens.

Copyright holders in particular will benefit from rigid requirements on developers to disclose the intellectual property on which their models have been trained. Both Meta and OpenAI were named in copyright complaints last year amid claims by authors that their work had been used without consent to train models.

Moving forward, the developers of AI systems will be required to provide information on the data they have used to train language models. Firms that fail to comply with the laws face fines of up to €35 million or 7% of their annual global turnover – whichever is higher.
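The ‘whichever is higher’ rule is easy to misread, so a short worked example may help. The function below is a hypothetical helper that uses only the two figures quoted above. It calculates the ceiling on a fine, not the fine a regulator would actually impose, and it makes no attempt to model the rest of the legislation.

```python
def max_fine_eur(annual_global_turnover_eur):
    """Upper limit on a fine: the higher of EUR 35 million or 7% of turnover."""
    flat_cap = 35_000_000
    turnover_cap = annual_global_turnover_eur * 7 / 100
    return max(flat_cap, turnover_cap)


# Hypothetical firms: one with EUR 100 million turnover, one with EUR 2 billion.
print(max_fine_eur(100_000_000))
print(max_fine_eur(2_000_000_000))
```

For the smaller firm, 7% of turnover is €7 million, so the €35 million figure sets the ceiling. For the larger firm, 7% of turnover is €140 million, which exceeds the flat figure and therefore applies instead.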

The UK has moved more slowly than the EU on AI regulation, with the government having committed to not over-enforcing AI regulation over fears it could hamper AI innovation. That’s not to say that efforts aren’t being made by the government to improve safety and ethical deployment, however.

Its whitepaper, A pro-innovation approach to AI regulation, lays out five principles for AI that the government says will guide the development of AI throughout the economy:

- Safety, security, and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

In late 2023, the UK hosted the world’s first AI Safety Summit, at which a host of nations signed the Bletchley Declaration that outlined plans for 28 countries to cooperate on the development of so-called ‘frontier’ AI technologies, such as generative AI chatbots and image generators.

The aim of the declaration is to continually examine the risks of AI technologies and prevent “serious, even catastrophic harm” arising from them. Three particular areas of risk outlined in the declaration were cyber security, biotechnology, and the potential use of AI in disinformation.

The UK government has also appointed State of AI report co-author Ian Hogarth as the chair of the Foundation Model Taskforce, which will collaborate with experts throughout the sector to establish baselines for the ethics and safety of AI models.

The government hopes that the work of the taskforce will help to establish guidelines for AI that can be used worldwide, which can be held up alongside the UK-hosted AI Safety Summit as proof of the nation’s importance in the field.

Other groups such as Responsible AI UK continue to call for the ethical development of AI, and enable academics across a range of disciplines to collaborate on a safe and fruitful approach to AI systems.

Ethical AI in business

Google was one of the first companies to vow that its AI will only ever be used ethically, but previously faced controversy for its involvement with the US Department of Defense (DoD).

Google published its ethical code of practice in June 2018 in response to widespread criticism over its relationship with the US government's weapon program.

In April 2023, the company consolidated its DeepMind and Google Brain subsidiaries into one entity named Google DeepMind. Its CEO Demis Hassabis has committed to ethical AI, with a Google executive having stated that Hassabis only took the position under the condition that the firm would approach AI ethically.

The Partnership on AI, an industry consortium whose founding members include Google and DeepMind, explained: "This partnership on AI will conduct research, organise discussions, provide thought leadership, consult with relevant third parties, respond to questions from the public and media, and create educational material that advances the understanding of AI technologies including machine perception, learning, and automated reasoning."

Google isn't alone in its responsible AI work, either. Some of the largest tech firms working on AI have public policies on responsible and ethical development.

Both AWS and Meta have focused on open AI models built and maintained by a large community of developers, identifying this as a key factor in keeping AI responsible, safe, and ethical.

In February 2023 AWS partnered with Hugging Face to ‘democratize’ AI, and its approach to delivering AI through its Bedrock platform has attracted thousands of customers.

Private sector policies regarding AI will be refined in the years to come, as new frameworks become available and the regulatory landscape shifts.

Dale Walker is a contributor specializing in cybersecurity, data protection, and IT regulations. He is the former managing editor at ITPro, as well as its sibling sites CloudPro and ChannelPro. He spent a number of years reporting for ITPro from numerous domestic and international events, including those held by IBM, Red Hat, and Google, and has been a regular reporter at Microsoft's various yearly showcases, including Ignite.
