EU to propose GDPR-like fines for AI abuses

AI will be prohibited from use in mass surveillance or for ranking social behaviour

The European Union (EU) is set to propose enforceable rules restricting the use of artificial intelligence (AI) systems, backed by the threat of hefty GDPR-like fines for flagrant violations.

Under the proposals drafted by the European Commission (EC), organisations operating in the EU will not be allowed to use AI for mass surveillance or for ranking social behaviour, according to Bloomberg. Systems deployed to manipulate human behaviour or to exploit information about individuals or groups would also be banned in the EU.

Under the rules, authorisation would be required to use biometric identification systems in public spaces, while high-risk AI applications would need to undergo a thorough inspection before they’re deployed. The high-risk category would include applications that use facial recognition, are involved with physical safety or healthcare, or are used in transport or energy.

In these cases, member states would need to appoint assessment bodies to examine whether these systems are trained on unbiased data sets and have sufficient human oversight. These bodies would inspect, and eventually certify, the systems that meet the criteria.

While some companies would be allowed to assess themselves, others would need to be vetted by a third party, which would issue compliance certificates valid for up to five years.

Failure to comply with the terms set out in the proposals would result in a range of penalties, including fines of up to 4% of global revenue, the same maximum penalty as for violating GDPR.

The proposals include several exemptions, however, such as the use of AI for safeguarding public security and AI systems used exclusively for military purposes.

The EU has long been keen to devise a set of enforceable rules that would govern the use of AI systems by organisations operating in its territories, amid growing concerns about the consequences of unregulated AI deployments. Google has also been among a sea of voices calling for some kind of framework governing the use of AI, while the Information Commissioner's Office (ICO) is currently consulting with experts on AI regulation.

In February last year, the EC launched the first phase of this process, publishing an AI white paper that set out its goals for a regulated AI industry that taps into the EU's digital single market.

The EU also hopes these rules will serve as a model for other nations and territories to replicate, as GDPR has done since its introduction in 2018, inspiring legislation such as the California Consumer Privacy Act (CCPA).

Keumars Afifi-Sabet is a writer and editor who specialises in public sector, cyber security, and cloud computing. He first joined ITPro as a staff writer in April 2018 and eventually became its Features Editor. He was a regular contributor to other tech sites in the past, but these days you will find Keumars on LiveScience, where he runs its Technology section.

-

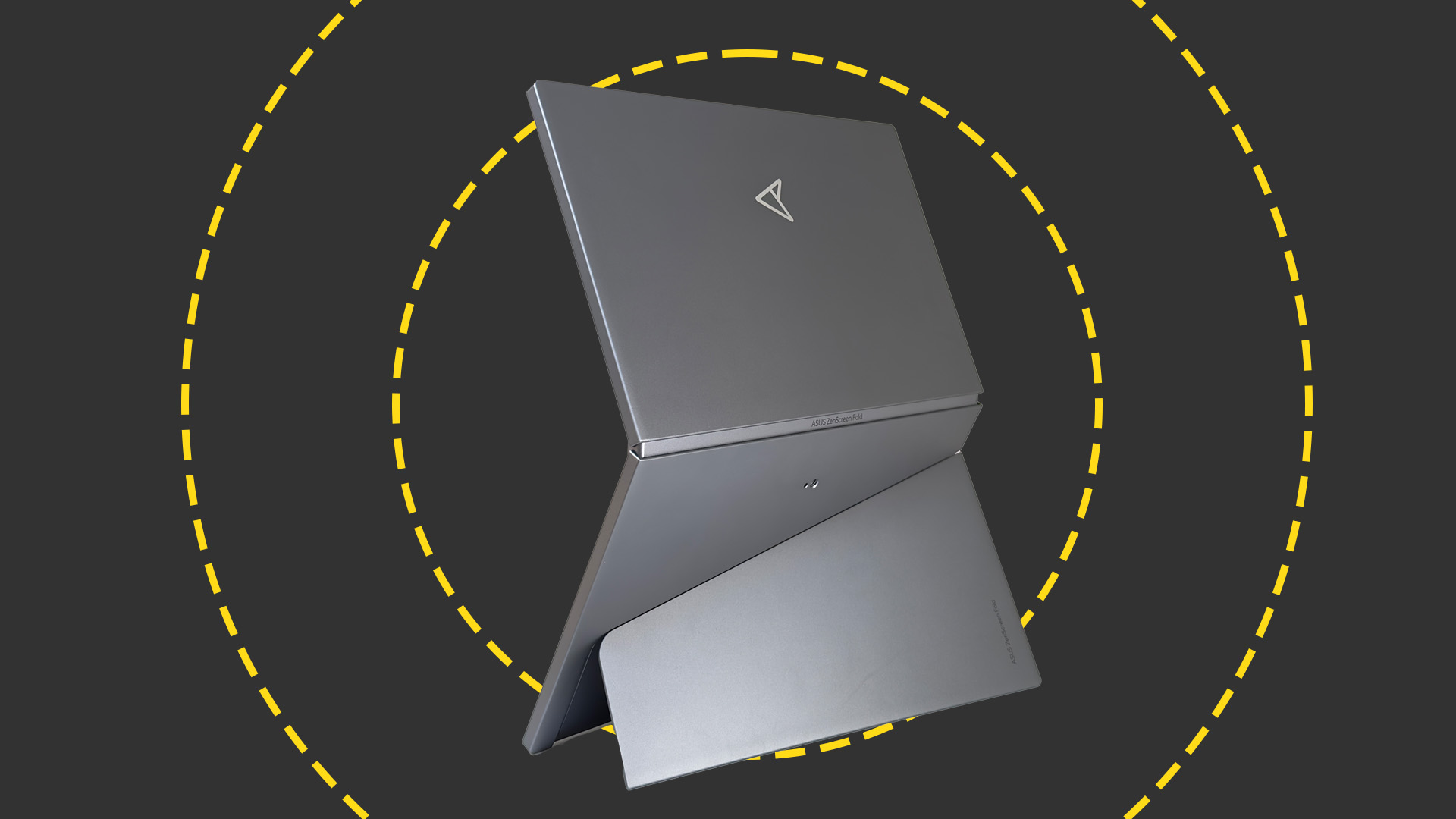

Asus ZenScreen Fold OLED MQ17QH review

Asus ZenScreen Fold OLED MQ17QH reviewReviews A stunning foldable 17.3in OLED display – but it's too expensive to be anything more than a thrilling tech demo

By Sasha Muller

-

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto Networks

How the UK MoJ achieved secure networks for prisons and offices with Palo Alto NetworksCase study Adopting zero trust is a necessity when your own users are trying to launch cyber attacks

By Rory Bathgate