A big enforcement deadline for the EU AI Act just passed – here's what you need to know

Fines for noncompliance are some way off, but firms should still prepare for significant changes

The first in a series of EU AI Act provisions has officially come into effect, and experts have warned that firms should accelerate preparations for the next batch of deadlines.

The EU’s landmark legislation was passed in March last year, and its first elements came into effect on 2nd February 2025, bringing with them a series of rules and regulations that AI developers and deployers must adhere to.

The EU AI Act employs a risk-based approach to assessing the potential impact of AI systems, designating them as minimal, limited, or high risk, with a further category of practices deemed to pose an unacceptable risk and prohibited outright. High-risk systems, for example, are those defined as posing a potential threat to life, human rights, or financial livelihood.

It is the prohibited practices that are in the crosshairs following the introduction of the new rules this month.

Speaking to ITPro ahead of the deadline, Enza Iannopollo, principal analyst at Forrester, said lawmakers specifically chose to target the most dangerous AI use cases with the first round of rules.

“Requirements enforced on this deadline focus on AI use-cases the EU considers pose the greatest risk to core Union values and fundamental rights, due to their potential negative impacts,” Iannopollo said.

“These rules are those related to prohibited AI use-cases, along with requirements related to AI literacy. Organizations that violate these rules could face severe fines — up to 7% of their global turnover — so it’s crucial that requirements are met effectively,” she added.

Iannopollo noted that fines will not be issued immediately, however, as details about sanctions are still a work in progress and the authorities in charge of enforcement are not yet in place.

While there may not be any big fines in the headlines in the next few months, Iannopollo said this is still an important milestone.

Tim Roberts, UK country co-leader at AlixPartners, said the first set of compliance obligations will act similarly to GDPR, mainly in that they will apply to any organization doing business with AI models in Europe.

With this in mind, it’s critical that companies are aware of this first batch of rules, even if they are not EU-based.

“Naturally, this also reignites the debate about striking the right balance between innovation and regulation. But instead of seeing them as opposing forces, it’s more useful to think of them as two things we need to get right in parallel … because regulation can be a facilitator of innovation - not a blocker,” Roberts said.

“The speed at which AI is advancing has caused discomfort for some consumers, but strong safeguards can build trust and create a thriving (and fairer) environment for greater business innovation.

“The EU AI Act is an important first step in this journey, and its success will depend on how well it is applied and how well it evolves, with the end goal being smarter regulation that drives businesses to continue pushing boundaries for the benefit of all.”

EU AI Act: Firms should tighten up risk assessments

Due to the global reach of the Act and the fact that requirements span the entire AI value chain, Iannopollo said enterprises must ensure they adhere to the regulation.

“The EU AI Act will have a significant impact on AI governance globally. With these regulations, the EU has established the ‘de facto’ standard for trustworthy AI and AI risk management,” she added.

To prepare for the rules, enterprises are advised to begin refining risk assessment practices to ensure they’ve classified AI use cases in line with the designated risk categories contained in the Act.

Systems that would fall within the ‘prohibited’ category need to be switched off immediately.

“Finally, they need to be prepared for the next key deadline on 2nd August. By this date, the enforcement machine and sanctions will be in better shape, and authorities will be much more likely to sanction firms that are not compliant. In other words, this is when we will see a lot more action.”

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.

-

Geekom Mini IT13 Review

Geekom Mini IT13 ReviewReviews It may only be a mild update for the Mini IT13, but a more potent CPU has made a good mini PC just that little bit better

By Alun Taylor

-

Why AI researchers are turning to nature for inspiration

Why AI researchers are turning to nature for inspirationIn-depth From ant colonies to neural networks, researchers are looking to nature to build more efficient, adaptable, and resilient systems

By David Howell

-

Generative AI adoption is 'creating deep rifts' at enterprises: Execs are battling each other over poor ROI, IT teams are worn out, and workers are sabotaging AI strategies

Generative AI adoption is 'creating deep rifts' at enterprises: Execs are battling each other over poor ROI, IT teams are worn out, and workers are sabotaging AI strategiesNews Execs are battling each other over poor ROI, underperforming tools, and inter-departmental clashes

By Emma Woollacott

-

‘If you want to look like a flesh-bound chatbot, then by all means use an AI teleprompter’: Amazon banned candidates from using AI tools during interviews – here’s why you should never use them to secure a job

‘If you want to look like a flesh-bound chatbot, then by all means use an AI teleprompter’: Amazon banned candidates from using AI tools during interviews – here’s why you should never use them to secure a jobNews Amazon has banned the use of AI tools during the interview process – and it’s not the only major firm cracking down on the trend.

By George Fitzmaurice

-

‘Europe could do it, but it's chosen not to do it’: Eric Schmidt thinks EU regulation will stifle AI innovation – but Britain has a huge opportunity

‘Europe could do it, but it's chosen not to do it’: Eric Schmidt thinks EU regulation will stifle AI innovation – but Britain has a huge opportunityNews Former Google CEO Eric Schmidt believes EU AI regulation is hampering innovation in the region and placing enterprises at a disadvantage.

By Ross Kelly

-

The EU just shelved its AI liability directive

The EU just shelved its AI liability directiveNews The European Commission has scrapped plans to introduce the AI Liability Directive aimed at protecting consumers from harmful AI systems.

By Ross Kelly

-

We spoke to over 700 IT leaders to hear their tech strategy plans for 2025 – here's what we learned

We spoke to over 700 IT leaders to hear their tech strategy plans for 2025 – here's what we learnedNews ITPro's Future Focus report shows AI, cybersecurity, and cloud remain top of the priority list for IT leaders in 2025.

By George Fitzmaurice

-

Former Scale AI leaders raise $4.2 million to simplify agent deployments

Former Scale AI leaders raise $4.2 million to simplify agent deploymentsNews Applied Labs wants to take the hassle out of AI agent deployments

By Ross Kelly

-

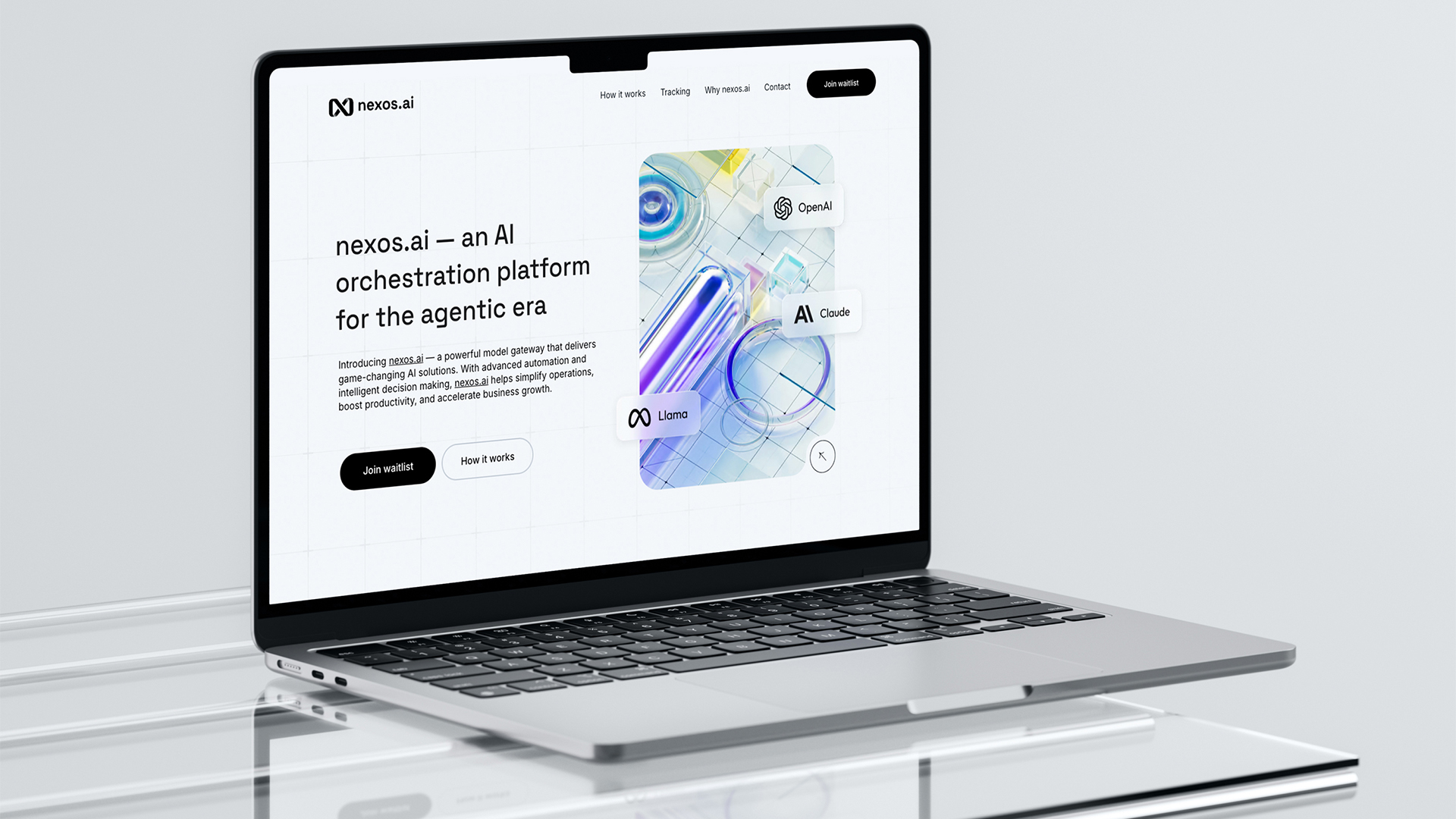

This startup wants to simplify AI adoption through a single “orchestration” platform – and it includes access to popular models from Meta, OpenAI, and Anthropic

This startup wants to simplify AI adoption through a single “orchestration” platform – and it includes access to popular models from Meta, OpenAI, and AnthropicNews Nexos.ai wants to speed things up for enterprises struggling with AI adoption

By Ross Kelly

-

UK financial services firms are scrambling to comply with DORA regulations

UK financial services firms are scrambling to comply with DORA regulationsNews Lack of prioritization and tight implementation schedules mean many aren’t compliant

By Emma Woollacott