Dell doubles down on Nvidia partnership with ‘AI factories’ and models at the edge

Alongside closer ties with Nvidia, Dell also unveiled a turnkey solution for digital assistants, rooted in servers that utilize the chipmaker's newest GPUs

Dell Technologies has announced new features for its joint AI solution with Nvidia, alongside broader compatibility with Nvidia hardware, as the two companies forge ever closer ties.

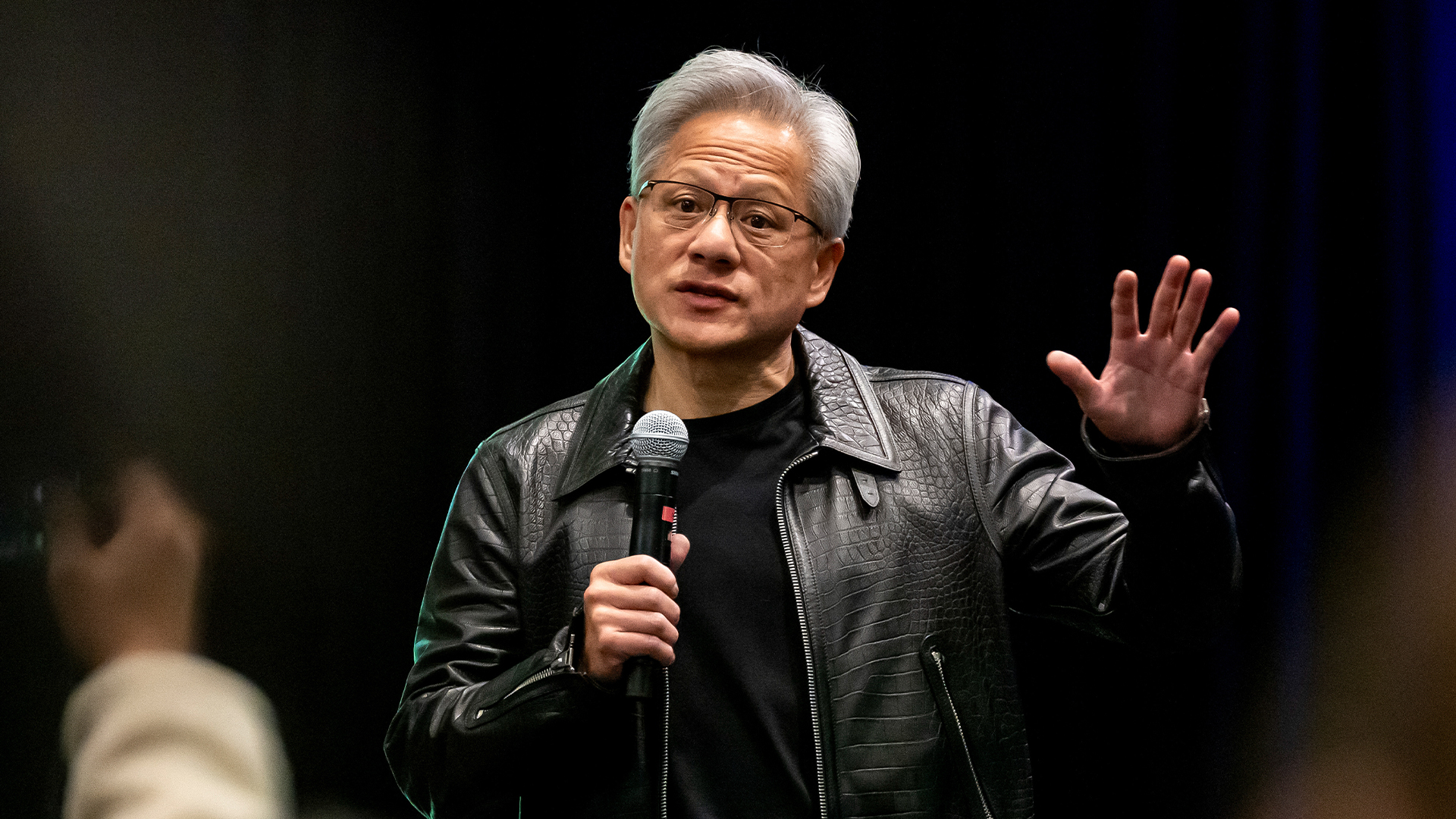

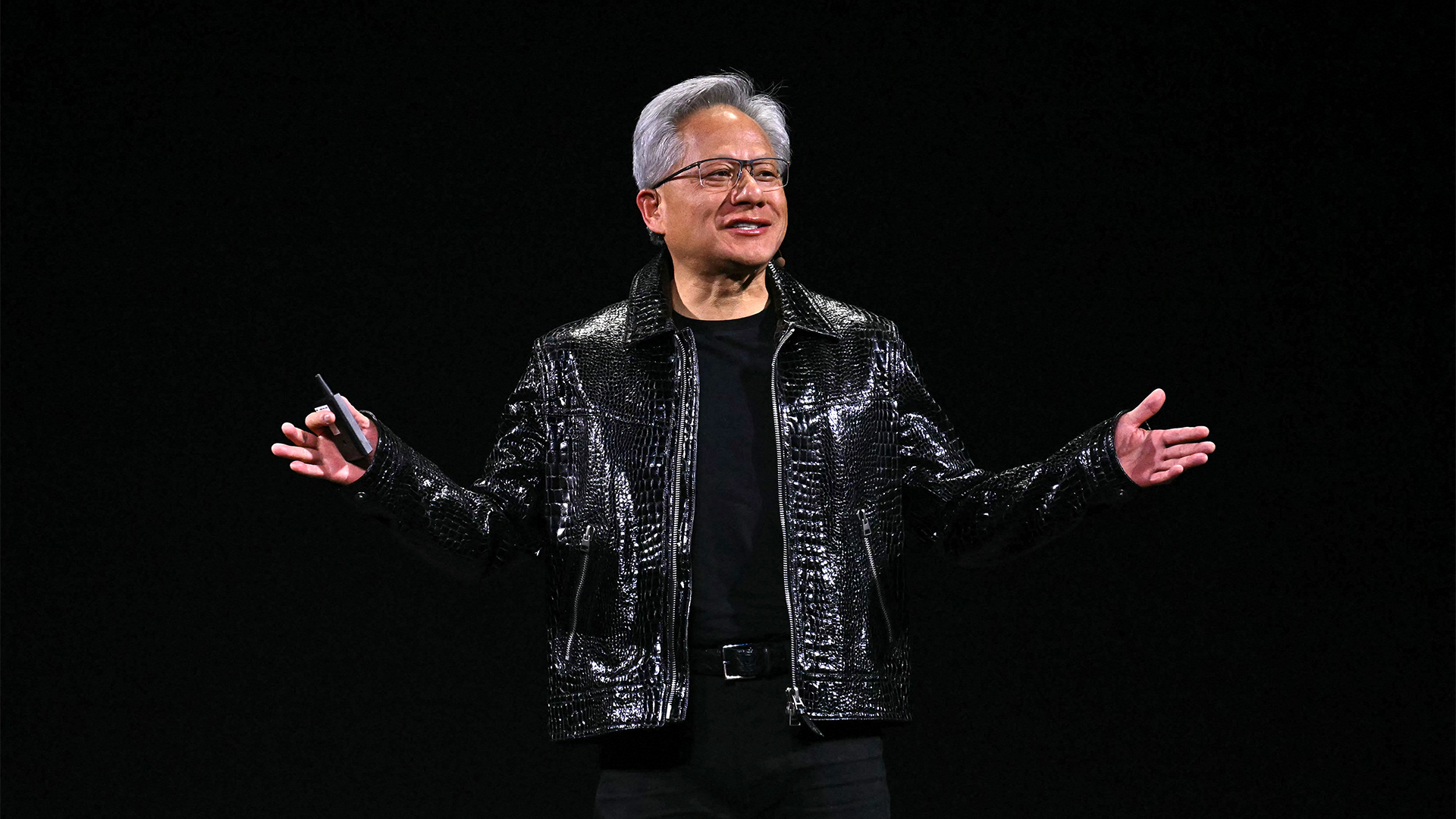

In the opening keynote of Dell Technologies World 2024, held this year at the Venetian in Las Vegas, Michael Dell, founder and CEO at Dell Technologies, welcomed Nvidia founder and CEO Jensen Huang onstage with much hype and to loud applause from the crowd.

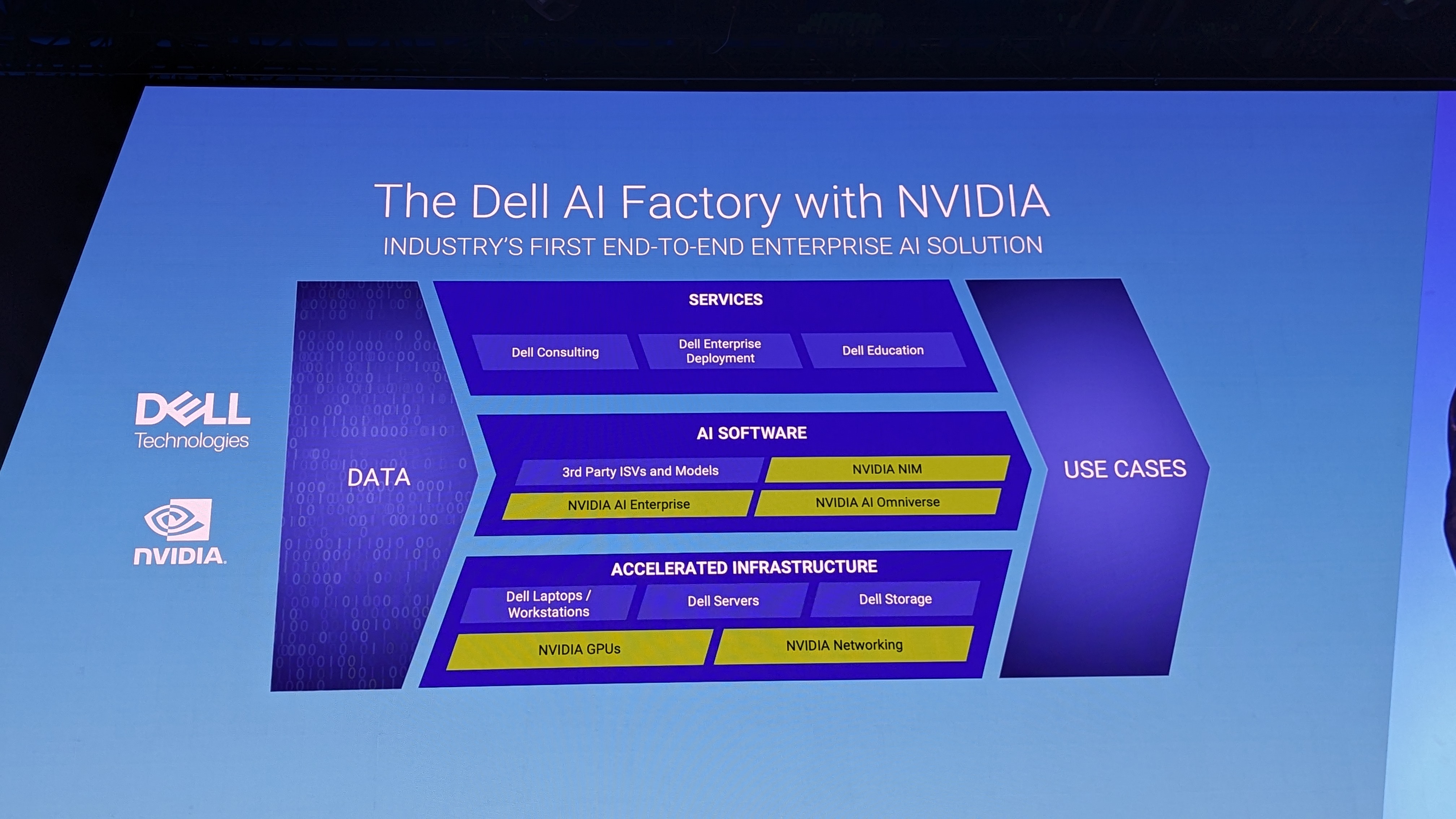

The pair shared the stage to announce new features for Dell AI Factory with Nvidia, the end-to-end solution for enterprise AI deployment. As part of the move, Dell and Nvidia will combine their software and hardware capabilities to flesh out the service, with new offerings including servers that use Nvidia’s latest chips and support for the Nvidia AI Enterprise software platform.

The concept of an 'AI factory' was repeatedly defined throughout the first day of Dell Technologies World and in essence refers to the processes, technology, and infrastructure necessary to use AI.

Across the wave of announcements, Dell and Huang made it clear that their respective companies are looking to play into one another's strengths to form the ideal factory structure. Where Dell's infrastructure is in prime position for enterprise use, Nvidia has a hardware or software solution to accelerate AI training and deployment.

“This partnership between us is going to be the first and the largest generative AI go-to-market in history,” Huang said.

“Only Dell has the ability to build compute, networking, storage, innovative and incredible software, air-cooled or liquid-cooled, bring it to your company and help you stand it up with professional services.”

Achieving an edge with Nvidia

Both companies have taken a closely intertwined approach to AI, in which each enables the other to perform better and deliver fuller services. One example is the new availability of Nvidia's AI software platform, Nvidia AI Enterprise, as another facet of Dell AI Factory.

Dell customers will now be able to deploy Nvidia AI Enterprise through Dell NativeEdge, the company’s multi-cloud solution for edge workload management and oversight, allowing for a range of Nvidia tools to be easily deployed where a customer so chooses.

This includes NIM microservices, the firm’s catalog of ready-made cloud native containers that help developers speed up AI deployment, as well as the transcription and translation microservice Nvidia Riva.

At Nvidia’s GTC conference in March 2024, Michael Dell first unveiled the Dell AI Factory with Nvidia in partnership with the chipmaker. The announcements made at Dell Technologies World 2024 build on this initial reveal, with more support for Nvidia hardware and networking alongside further infrastructure and service support from Dell.

“We couldn’t be more excited to partner with Nvidia in this journey,” said Michael Dell. “It’s built on our decades of collaboration – we’re giving customers an ‘easy button’ for AI.”

Dell continues to aim for the widest possible access to AI for its customers, a concept the firm has said is central to the idea of the ‘AI Factory’.

In a Q&A with the press after the keynote, Jeff Clarke, COO at Dell Technologies, stated that Dell aims to provide its customers with ‘AI factories’, plural, across the wide range of environments that can benefit from edge computing.

“We’ve got this broad ecosystem of software, retrieval augmented generation (RAG), closed and open large language models (LLMs), small and large LLMs, PCs and edge devices, data centers for training and inference. Put all that together, you’ve got yourself an AI factory,” said Dell.

There’s no requirement for customers to use Nvidia hardware when they construct their own AI factory, nor to adopt specific Dell-supported models such as Llama 3. But when it comes to tackling the most hardware-intensive workloads on the market, or deploying competitive AI models at the edge, it’s clear Dell places immense value on its partnership with Nvidia.

PowerEdge servers, running on Nvidia GPUs, are a core element in another new solution that comes as part of the AI Factory approach: Dell Validated Design for Digital Assistants.

Through this, Dell aims to provide its customers with the platforms, services, and infrastructure necessary to deploy generative AI digital assistants for customer-facing or internal tasks. Customers will be able to draw on guidance from Dell, including advice on the best Dell compute for the task, as well as tailored development such as AI avatar customization.

Dell emphasized the value that chatbots for customer service can bring, most notably in improved end-user experience. John Roese, global chief technology officer at Dell Technologies, gave ITPro the example of the City of Amarillo, which has used digital assistants to improve the accessibility of its community services.

Through the deployment of an LLM, the local government was able to ensure residents can access digital services in their own language. This was previously thought too difficult, as 62 languages and dialects are actively spoken across the region.

Nvidia hardware on Dell’s newest servers

As part of Dell AI Factory with Nvidia, Dell customers will be able to make use of the new PowerEdge XE9680L server, which is based on Nvidia’s new Grace Blackwell family of GPUs. The server contains eight GPUs in an ultra-dense architecture, each of which is cooled using direct-to-chip liquid cooling, which Dell says delivers 2.5x better energy efficiency.

“We’ve had a ton of success with the XE9680 with Hopper and now we’re super excited to bring the XE9680L with direct liquid cooling so it goes from a 6U to a 4U form factor so we can put 72 of your B200 Blackwells in one rack,” said Dell.
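The rack math behind that claim can be checked with a short sketch. It takes the eight GPUs per server and 72 GPUs per rack from the figures above, and assumes a 42U rack, a common full-height size that is not stated in the keynote. The helper name `rack_units_needed` is hypothetical, and the calculation ignores space for switches, power, and cooling hardware.

```python
import math

GPUS_PER_SERVER = 8        # from the article: eight GPUs per XE9680L
GPUS_PER_RACK = 72         # from the quote: 72 B200 GPUs in one rack
RACK_UNITS_AVAILABLE = 42  # assumption: common full-height rack, not stated in the article


def rack_units_needed(gpus: int, gpus_per_server: int, server_height_u: int) -> int:
    """Return the rack units occupied by enough servers to host `gpus` GPUs."""
    servers = math.ceil(gpus / gpus_per_server)
    return servers * server_height_u


# Compare the older 6U form factor with the new 4U form factor.
for height_u in (6, 4):
    needed = rack_units_needed(GPUS_PER_RACK, GPUS_PER_SERVER, height_u)
    fits = needed <= RACK_UNITS_AVAILABLE
    print(f"{height_u}U servers: {needed}U needed, fits in a {RACK_UNITS_AVAILABLE}U rack: {fits}")
```

Under these assumptions, nine 6U servers would need more space than the rack offers, while nine 4U servers leave a few rack units spare, which is consistent with the 72-GPU figure.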

Speaking reflectively as Dell celebrates its 40th anniversary year, both Dell and Huang emphasized how the previous four decades of innovation will form the backdrop for the exciting developments they see in store as their respective companies continue to collaborate.

“When we first met, almost 28 years ago, Dell made PC one-click easy. You could build your own PC, build it, and color it how you like, you made it easy to buy and sell. And now we're going to take this to a whole new level.

“These systems are incredible. These are giant supercomputers that we are going to build at scale, deliver at scale, and help you stand it up at scale. So what appears to be an incredibly complicated technology – and it's a very complicated technology – we’re going to [make] easy for all of you guys to enjoy,” Huang said.

With the likes of the XE9680L, Dell is laying the groundwork for training and inferencing even the largest and most demanding LLMs on the market, as well as preparing for what it anticipates will be widespread adoption of AI models for edge computing.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.
