Does anyone care if you use AI? Fiverr doesn’t think so

The freelance marketplace’s latest marketing campaign feels like a reductive take on an unpredictable technology

On the face of it, Fiverr’s new ad seems pretty harmless. The sub-two-minute video takes the form of a musical parody entitled ‘Nobody Cares: The Musical’ and seeks to hammer home one key point: nobody cares that you use AI at work.

The ad is not subtle about this point, and one of its opening lines states it plainly: “Nobody cares that you use AI.” Fiverr’s point? AI is little more than a means to an end.

“We just care if it works, if it disrupts or converts, or makes a user dramatically cry,” sing the onscreen avatars, which are themselves AI-generated, as the ad confirms in a final self-referential twist.

The improbability of AI causing genuine emotion aside, the ad could be taken as a pretty clever bit of branding from the firm. It taps into something many people are probably fed up with: AI discourse swamping popular forms of business communication like LinkedIn, which the ad directly references. But it also has a few glaring issues.

In some ways, the ad is right that most people running businesses put success and results front and center. Assuming one isn’t violating any regulations or putting anything at risk, any path to profit and growth could be considered valid.

But while success is a top priority for any business, security and compliance are rarely far behind it. Not so for Fiverr’s idea of the typical leader:

“Your boss doesn’t care an ad was made with AI, that’s TMI (too much information), she just wants ROI (return on investment),” the advert assures us. This is the most egregious line. While it’s true that members of the C-suite are still getting to grips with the ongoing AI revolution, with nearly two-thirds (65%) of execs failing to pick an accurate description of generative AI according to a recent study, the goal should not be to keep it this way. Indeed, the idea that CEOs don’t have, or don’t want, oversight of where their employees are using AI is itself concerning.

AI oversight is a hot topic

With fears increasing around how AI models may expose sensitive or proprietary data, or how malicious actors could target businesses via AI models, there’s never been a more pressing need for execs to know what has and hasn’t been made using AI.

If staff are outsourcing work to Fiverr freelancers who use AI, they need to be even more transparent with senior management. Freelancers on Fiverr don’t necessarily know a company’s specific AI policy, so the process they follow to create something needs to be fully understood by whoever is outsourcing the work.

The ROI line also assumes that workers needn’t tell their managers what they are doing with AI. In other words, Fiverr is encouraging staff to engage in ‘Shadow AI’, the use of AI tools without an employer’s knowledge or approval, a practice that can be damaging to businesses.

Reports suggest that 44% of UK workers are currently receiving no guidance on how to use generative AI from their employer, creating an environment where malpractice can thrive. Fiverr’s suggestion? Just keep it to yourself.

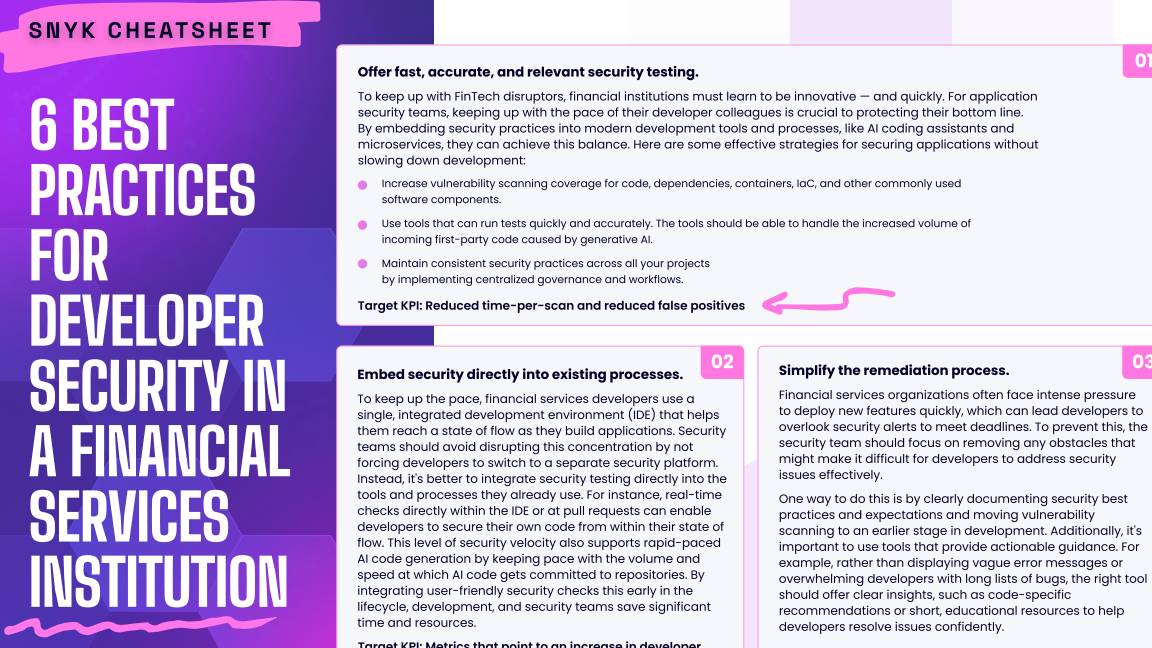

RELATED WHITEPAPER

“Nobody cares AI built this bot, just make it work fast and we’ll give it a shot,” reads another line from the ad. This brings to mind AI coding tools which, though promising to speed up developer workflows, are yet to guarantee an improved software product.

According to research from GitClear, there are concerns about the maintainability of AI-generated code and a sense that some tools simply produce bad code faster. Other reports found that fewer than a quarter of DevSecOps teams are “very confident” in the guardrails they have put in place to secure AI-generated code.

While the tide seems to be shifting on this topic as devs begin to report positive changes from AI use, it is certainly a stretch for Fiverr to say that “nobody cares” whether a piece of software was built using AI. Most do care – and if they don't, then they should.

“Like a pen or a brush, AI is only a tool, so don’t brag like a pro, if you prompt like a fool,” is one line that somewhat redeems the advert. If there’s one thing we can agree on, it’s that AI is fundamentally just a tool.

The narrative of the video would have worked a lot better if it focused more on this. If AI is just a tool, then it makes sense to sideline it in the conversation. After all, few site managers would like to hear in detail how a construction worker used their hammer.

But every site manager would want to know that their workers are following safety regulations to the letter and would be rightly concerned if they found out workers were simply hiding potential indiscretions.

AI isn’t yet so well understood that it can be compared to a hammer, a pen, or a brush. It is a complex technology whose impact, positive or negative, is far from fully understood. While you can say a lot of things about AI, one thing you probably can’t say is that “nobody cares” when it’s been deployed.

George Fitzmaurice is a former Staff Writer at ITPro and ChannelPro, with a particular interest in AI regulation, data legislation, and market development. After graduating from the University of Oxford with a degree in English Language and Literature, he undertook an internship at the New Statesman before starting at ITPro. Outside of the office, George is both an aspiring musician and an avid reader.
