HPE's AI democratization aims have small business potential

HPE’s solutions to ‘democratize AI’ reveal its confidence in a universal business case for generative AI

HPE is betting on businesses across every industry finding a use case for generative AI, and it wants to provide enterprises of all sizes with a solution to unlock the potential of generative AI.

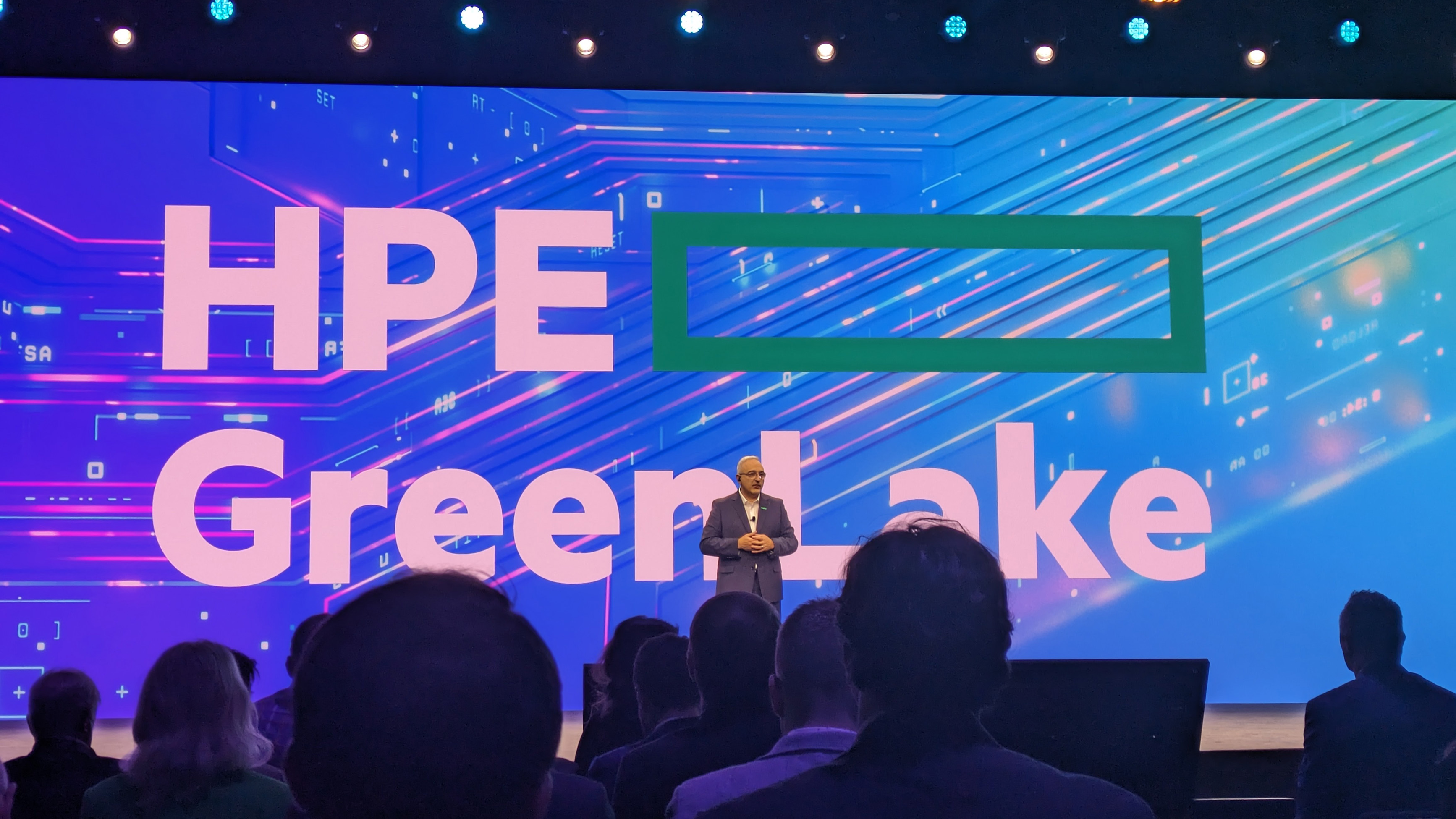

Just weeks before its annual HPE Discover Barcelona conference, HPE announced a collaboration with Nvidia on a supercomputing solution built specifically for large enterprises looking to develop their own models from scratch.

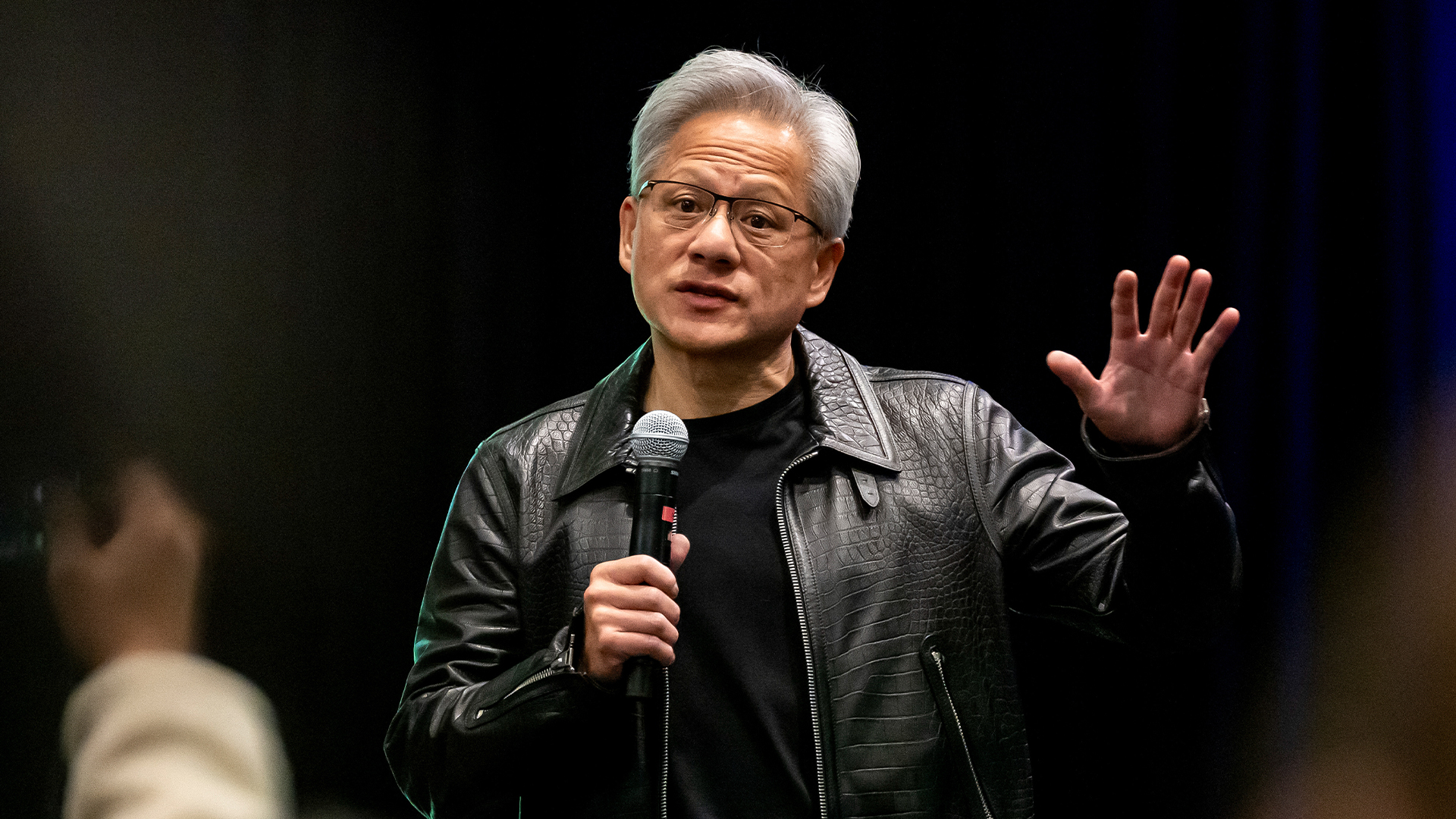

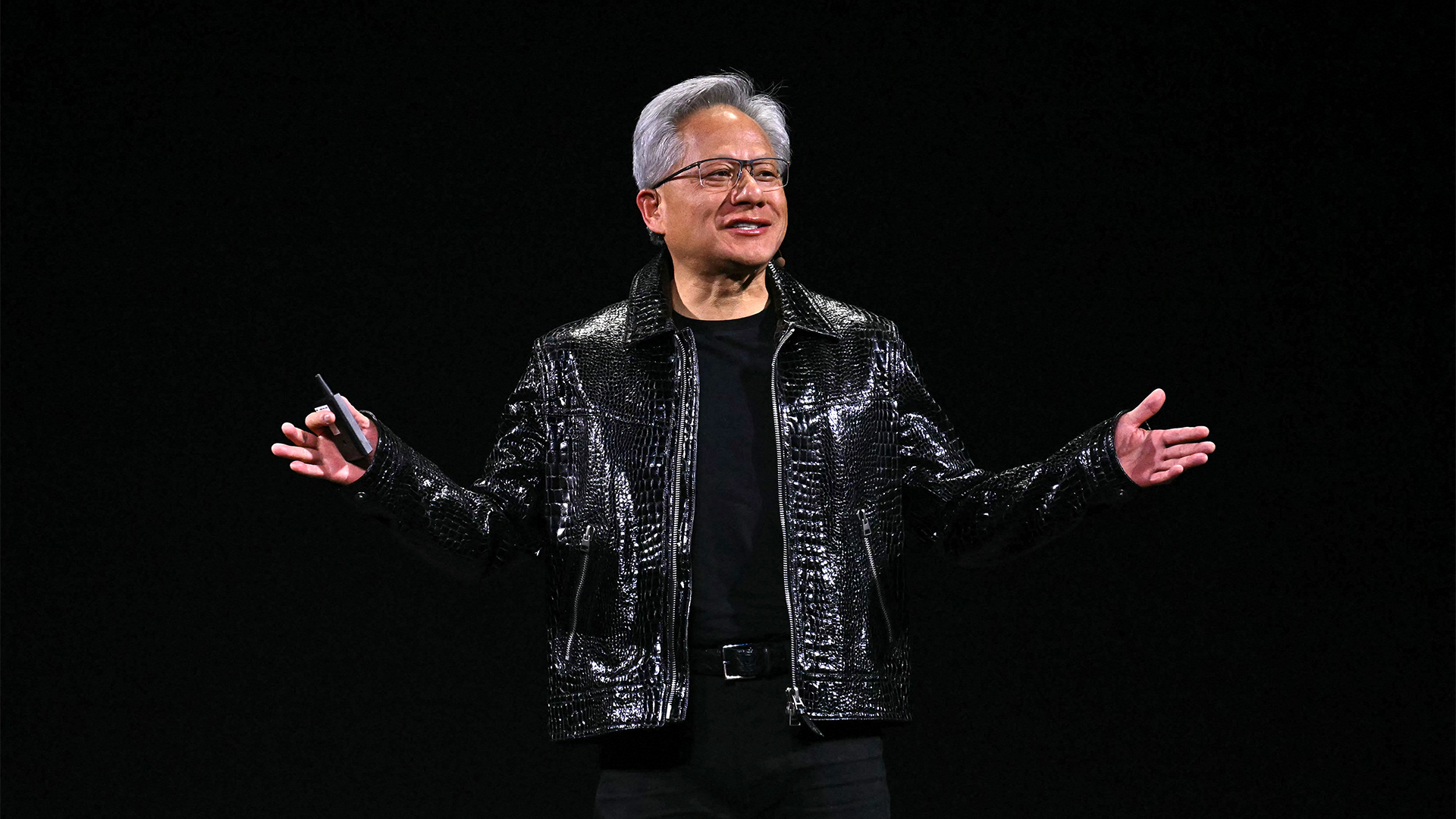

The platform comprises the infrastructure and software stack required to train and deploy foundation models and is aimed at large enterprises, research institutions, and government agencies. But, as Manuvir Das, head of enterprise computing at Nvidia, pointed out on stage at Discover Barcelona, the number of organizations looking to build their own models is limited to the hundreds or perhaps thousands, and the level of investment required is significant.

Das noted that a far larger number of businesses want to take advantage of generative AI inferencing solutions without making the considerable time and resource investments required to build their own custom model.

It is this cautious majority of businesses that forms the basis for HPE and Nvidia’s latest collaboration. Announced on stage by HPE CEO Antonio Neri, HPE’s catchily-named ‘enterprise computing solution for generative AI’ will deliver a turnkey solution for enterprises to deploy pre-existing models for their own purposes and fine-tune them to their needs using their own data.

The key here is simplicity: HPE aims to bring the inferencing functionality of large language models (LLMs) to enterprises that may not have the resources or know-how to build it themselves.

HPE appears to be particularly well-placed to achieve this goal of creating a more accessible AI ecosystem for enterprises that lack the expertise or CapEx to develop these systems from the ground up. The full suite of infrastructure, software, and services in HPE’s product portfolio has received updates to reflect the company’s AI-native architecture ambitions.

For example, HPE’s Ezmeral software has been enhanced for genAI applications with new GPU-aware capabilities to boost data preparation in AI workloads within public and private cloud applications. This is a show of HPE’s confidence in its ability to meet customer needs even as soaring generative AI demand pushes data centers to their limits.

AI democratization via HPE and Nvidia

Underpinning this ‘out-of-the-box’ solution is a host of HPE and Nvidia infrastructure and software solutions.

This includes HPE’s ProLiant DL380a servers, which come pre-configured with Nvidia L40S graphics processing units (GPUs). The system’s compute element, referred to as a ‘rack-scale architecture’, is driven by 16 of the ProLiant servers and up to four Nvidia L40S GPUs, and is capable of fine-tuning models such as Meta’s 70 billion-parameter Llama 2.

Meta introduced Llama 2 in July 2023 and immediately made it freely available for commercial and research purposes. The firm made much of its more open approach in contrast with developers such as OpenAI and Anthropic. HPE wants to fill the same role on a broader scale and is well-positioned to do so.

Nvidia’s contribution to the software stack is its AI Enterprise suite, which was also included in the earlier supercomputing generative AI partnership. This suite offers users over 50 frameworks, pre-trained models, and development tools to accelerate model deployment.

Finally, the platform also features Nvidia’s NeMo toolkit for conversational AI, which will bring tools for guardrailing, data curation, automatic speech recognition (ASR), and more.

Enterprises will be able to make use of these tools to create a range of chatbots that can be trained on their private data and offer useful responses via natural language processing.

While giving firms the hardware and software to leverage this model for their own business purposes will go a long way toward AI democratization, we are still waiting to find out just how accessible HPE’s AI solution will be.

The enterprise computing solution for generative AI will be available in early 2024, but there has been no indication of price as yet.

A new generation of AI for enterprise

Lowering the barrier to entry of generative AI applications will be fundamental to achieving ‘AI democratization’ – a phrase that was repeated by HPE throughout the conference.

Manuvir Das touted the offering as opening up the field of enterprise application development, in which generative AI will produce a slew of unique applications for each business, tuned to its own data. Privacy is a core concern for enterprises looking to dip their toes into generative AI: previously, businesses that wanted to access the benefits of the technology had to feed their private data into the public domain to train the model.

This, of course, is not something many businesses are comfortable doing. HPE’s new turnkey solution will instead allow them to tune pre-configured models using their private data within the private cloud, ‘bringing the model to the data’, so they can access the bespoke inferencing capabilities of GenAI without sacrificing their IP.

Is HPE playing catch-up with Dell?

HPE is betting on significant demand from medium-sized enterprises that are looking to shorten the time-to-value of a model deployment, but it is not without competitors.

In July 2023, Dell unveiled a new suite of generative AI solutions aiming to help companies adopt on-prem generative AI without requiring expertise in model training. Dell’s offering also uses Nvidia’s NeMo suite to offer pre-built models with language generation and inference capabilities that can be used in chatbots and image generation.

Both Dell and HPE are looking to provide enterprises with the capacity to scale their generative AI applications, and this could be a space in which HPE is forced to hurriedly catch up.

Dell’s Project Helix focuses on helping businesses deploy generative AI models on premises, whereas HPE may look to differentiate itself with the flexibility of a hybrid cloud model using its GreenLake platform.

HPE’s offering will allow businesses to tune pre-configured models using their private data and deploy the resulting production applications across their business from the edge to the cloud.

Solomon Klappholz is a former staff writer for ITPro and ChannelPro. He has experience writing about the technologies that facilitate industrial manufacturing, which led to him developing a particular interest in cybersecurity, IT regulation, industrial infrastructure applications, and machine learning.
