The UK's hollow AI Safety Summit has only emphasized global divides

Successes at pivotal UK event have been overshadowed by differing regulatory approaches and disagreement on open source

The world’s leading nations on AI have jointly signed a letter of intent on AI safety at the UK’s AI Summit, but civil discussions still play second fiddle to a glaring international divergence on the future of AI and a clear lack of steps for regulating the technology.

In the Bletchley Declaration, 28 countries came together to agree on the shared risks and opportunities of frontier AI and to commit to international cooperation on research and mitigation.

Nations included the UK, US, China, India, France, Japan, Nigeria, and the Kingdom of Saudi Arabia. Representatives of countries from every continent agreed to the terms of the declaration.

But what was left unsaid in the agreement is likely to define AI development over the next 12 months more than anything addressed within the well-intentioned but apparently non-binding text.

Stealing Britain’s thunder

Signatories pledged to “support an internationally inclusive network of scientific research on frontier AI safety that encompasses and complements existing and new multilateral, plurilateral and bilateral collaboration”.

However, Gina Raimondo, United States secretary of commerce, used her opening remarks to announce a new US AI safety institute. Her arrival came just days after Joe Biden signed an executive order that set out sweeping requirements for AI developers in the name of AI safety.

This is far from an internationalist move by the US, which largely appears to have used the AI Safety Summit as a good chance to flog its own domestic AI agenda.

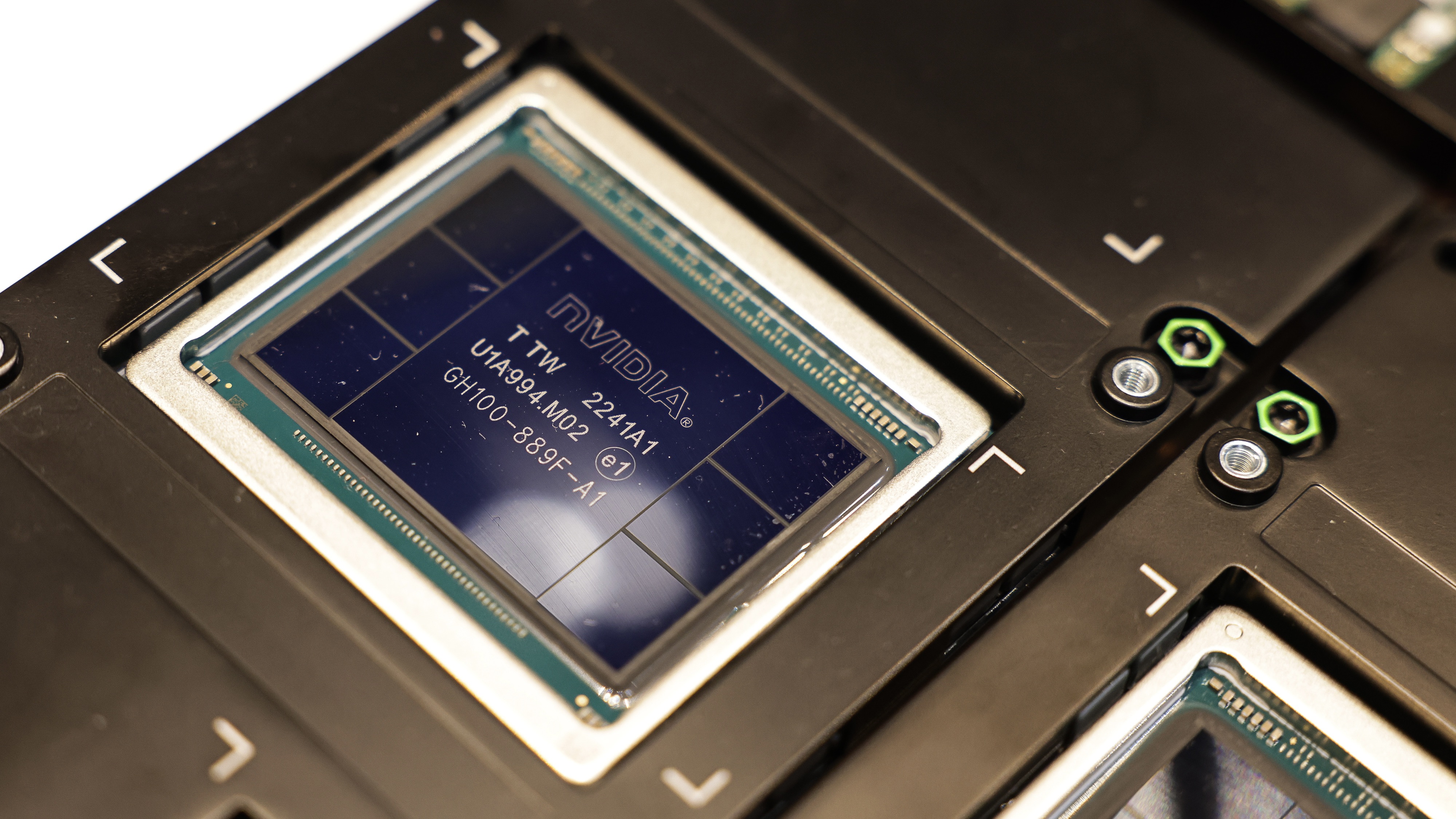

That’s not to say that the UK played the neutral host all week either. Halfway through the conference, HPE and Dell announced new supercomputers, each built using UK government funding, which together will form the UK’s new AI Research Resource (AIRR).

Michelle Donelan, secretary of state for science, innovation and technology, stated the investment would ensure “Britain’s leading researchers and scientific talent” had access to the tools they need and that the government was “making sure we cement our place as a world-leader in AI safety”.

For all the praise over the summit, and claims that it was a diplomatic success, this is unmistakably the language of competition and British exceptionalism.

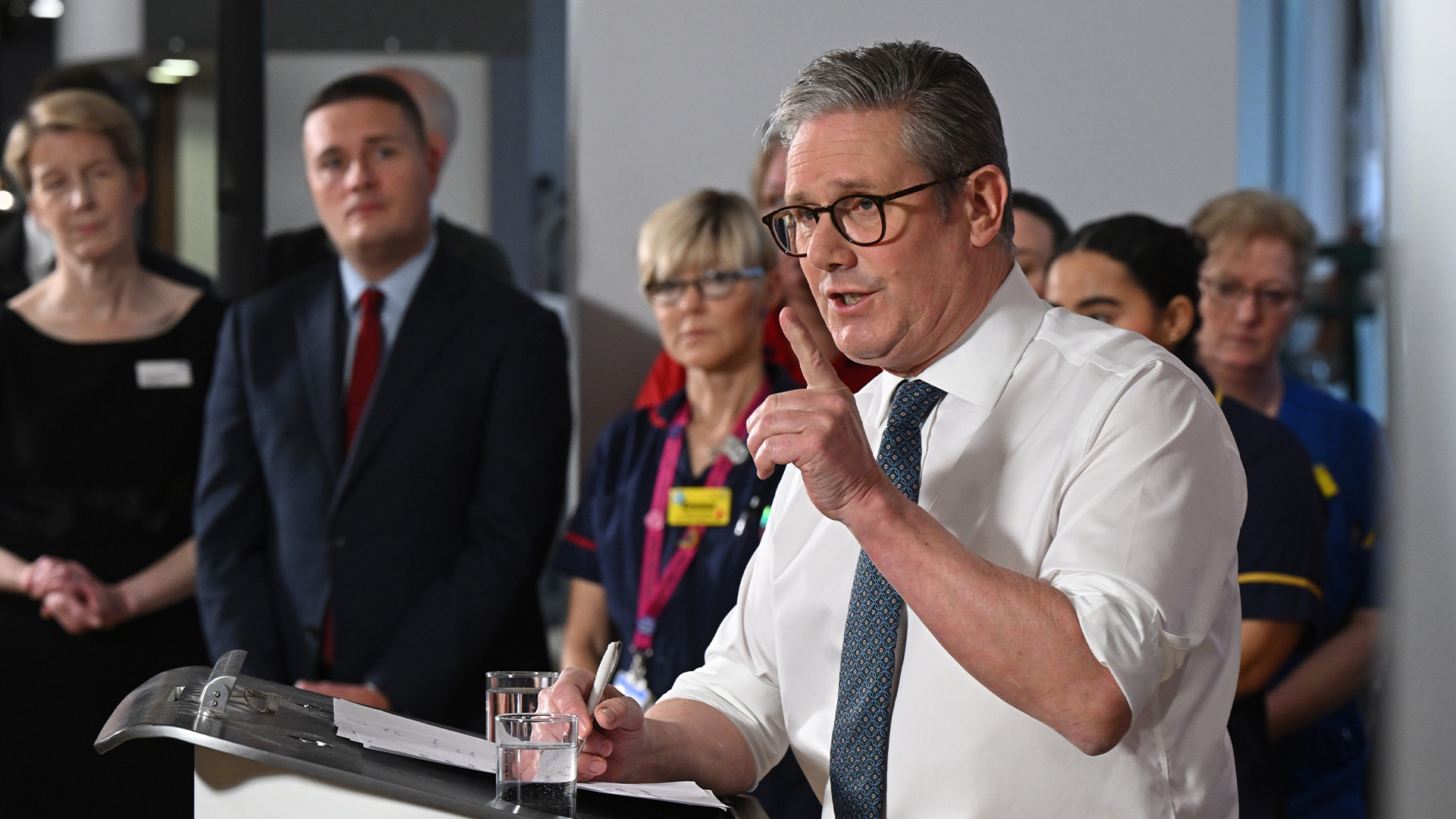

Prime minister Rishi Sunak’s talk with Elon Musk, which was live streamed on the second day of the summit, further emphasized the PR aspect of the entire event. No major revelations emerged from the chat, which saw the PM quiz Musk in a lighthearted manner and touch on pop culture more than policy.

Yi Zeng of the Chinese Academy of Sciences, and chair of the summit roundtable on the risks posed by unpredictable advances in frontier AI capabilities, used his closing remarks to advocate for an international AI safety network.

“This morning we've heard that the UK and also the US will have its version of the AI Safety Institute,” said Yi.

“Actually, we think that we should all have something like an AI Safety Institute for our own country but that's not enough. We need to have an AI safety network, working together just to hold the hope that maybe we can solve some of the unpredicted advances and unpredicted failures.”

The fact that Yi was essentially still lobbying for more global cooperation on AI safety even after the Bletchley Declaration had been signed does not inspire confidence in the potency of the agreements made at the summit.

The attendance of Wu Zhaohui, vice minister of science and technology for the People’s Republic of China, at the AI Safety Summit has been held up by the UK government as a success in its own right. In giving China a seat at the table, and in China’s acceptance of the seat, the UK government allowed for a proper global discussion on AI to take place.

Wu was clear about China’s mindset on AI when it comes to the international community.

“We should increase the representation and voice of developing countries in global AI governance, and bridge the gap in AI and its governance capacity. We call for global collaboration to share AI knowledge and make AI technologies available to the public under open-source terms,” he said.

“AI governance is a common task faced by humanity and bears on the future of humanity. Only with joint efforts of the international community can we ensure AI technology’s safe and reliable development.

“China is willing to enhance dialog and communication in AI safety with all sides, contributing to an international mechanism with broad participation and governance framework based on wide consensus, delivering benefits to the people and building a community with a shared future for mankind.”

Wu and others called for a framework through which safety research could be shared, but by the end of the summit no plans for this were set in stone.

Going it alone

While the event has proved a welcome confirmation that countries are broadly aligned on the need for AI safety, it seems each will continue on its existing trajectory when it comes to AI legislation.

“This declaration isn’t going to have any real impact on how AI is regulated,” said Martha Bennett, VP principal analyst at Forrester.

“For one, the EU already has the AI Act in the works, in the US, President Biden on Oct 30 released an Executive Order on AI, and the G7 “International Guiding Principles” and “International Code of Conduct” for AI, was published on Oct 30, all of which contain more substance than the Bletchley Declaration.”

Open source still left out in the cold

Amanda Brock, CEO of OpenUK, has been supportive of the government’s aims on AI but has called for a broadening of stakeholders to provide better opportunities for the open-source software and open data business communities.

Her argument that open innovation and collaboration will be necessary for stirring progress in the space does not appear to have won out at the summit itself.

For example, Yi summarized the concerns among his group that open-sourcing AI could be a major risk.

“This is a really challenging topic because in my generation when we were starting working on computer science we were getting so much benefit from Linux and BSD.

“Now when we're working on large-scale AI models, we have to think about whether the paradigm for open source can continue, because it raises so many concerns and risks with larger-scale models with very large uncertainties open to everyone. How can we ensure that it is not misused and abused?”

In contrast, Wu called for more encouragement of AI development on an open-source basis, and for giving the public access to AI models through open-source licenses.

His calls are matched by organizations such as Meta, which has called for more open adoption of LLMs and contributed to the field with its free LLM, Llama 2.

Rory Bathgate is Features and Multimedia Editor at ITPro, overseeing all in-depth content and case studies. He can also be found co-hosting the ITPro Podcast with Jane McCallion, swapping a keyboard for a microphone to discuss the latest learnings with thought leaders from across the tech sector.

In his free time, Rory enjoys photography, video editing, and good science fiction. After graduating from the University of Kent with a BA in English and American Literature, Rory undertook an MA in Eighteenth-Century Studies at King’s College London. He joined ITPro in 2022 as a graduate, following four years in student journalism. You can contact Rory at rory.bathgate@futurenet.com or on LinkedIn.

-

Hybrid cloud has hit the mainstream – but firms are still confused about costs

Hybrid cloud has hit the mainstream – but firms are still confused about costsNews How do you know if it's a good investment if you don't have full spending visibility?

-

An executive suggested laid off staff use AI for counseling – I think that's ludicrous

An executive suggested laid off staff use AI for counseling – I think that's ludicrousOpinion In the aftermath of Microsoft layoffs, promoting AI career advice feels supremely cold

-

‘Archaic’ legacy tech is crippling public sector productivity

‘Archaic’ legacy tech is crippling public sector productivityNews The UK public sector has been over-reliant on contractors and too many processes are still paper-based

-

Public sector improvements, infrastructure investment, and AI pothole repairs: Tech industry welcomes UK's “ambitious” AI action plan

Public sector improvements, infrastructure investment, and AI pothole repairs: Tech industry welcomes UK's “ambitious” AI action planNews The new policy, less cautious than that of the previous government, has been largely welcomed by experts

-

UK government trials chatbots in bid to bolster small business support

UK government trials chatbots in bid to bolster small business supportNews The UK government is running a private beta of a new chatbot designed to help people set up small businesses and find support.

-

Rishi Sunak’s stance on AI goes against the demands of businesses

Rishi Sunak’s stance on AI goes against the demands of businessesAnalysis Execs demanding transparency and consistency could find themselves disappointed with the government’s hands-off approach

-

UK aims to be an AI leader with November safety summit

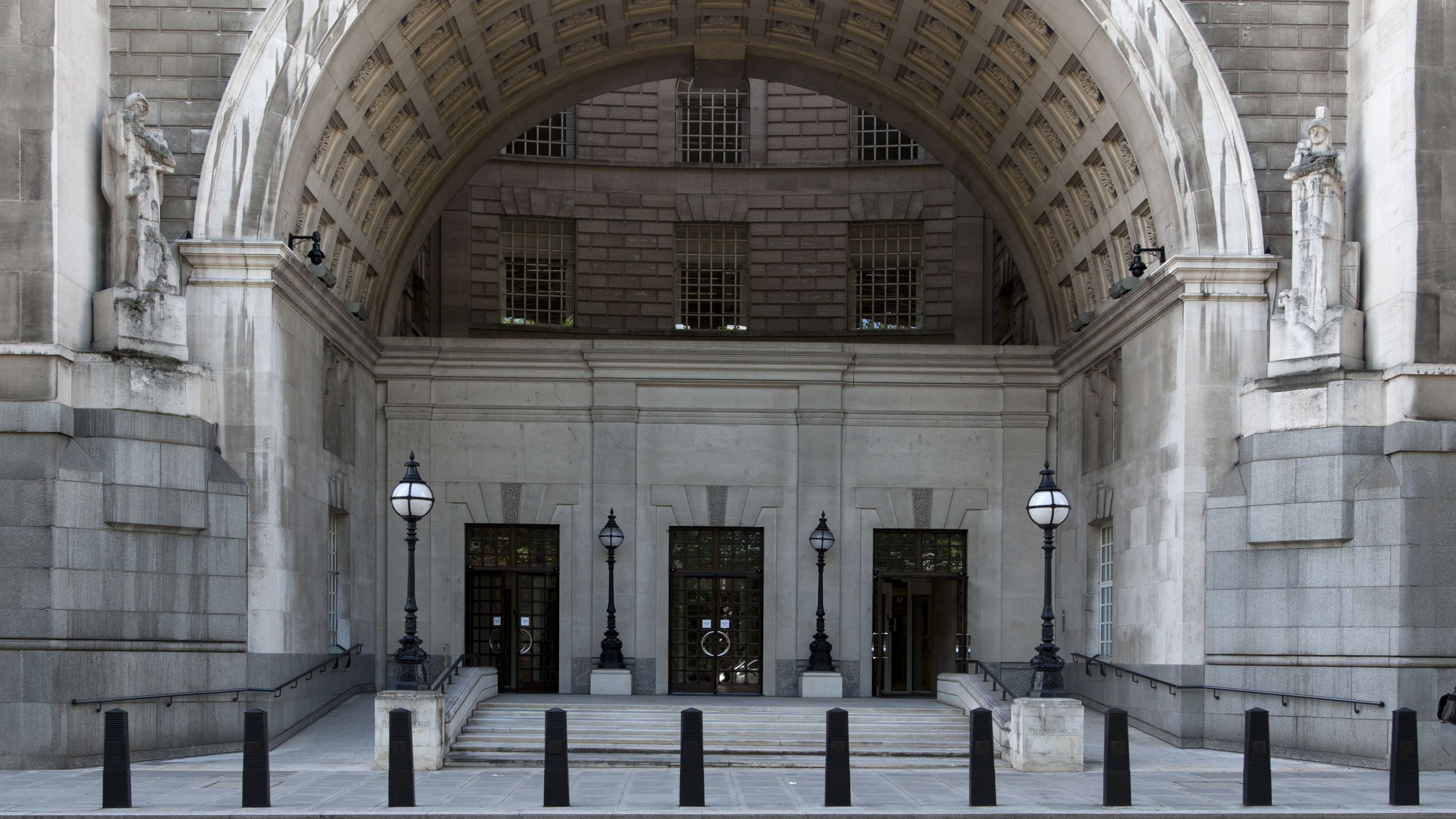

UK aims to be an AI leader with November safety summitNews Bletchley Park will play host to the guests who will collaborate on the future of AI

-

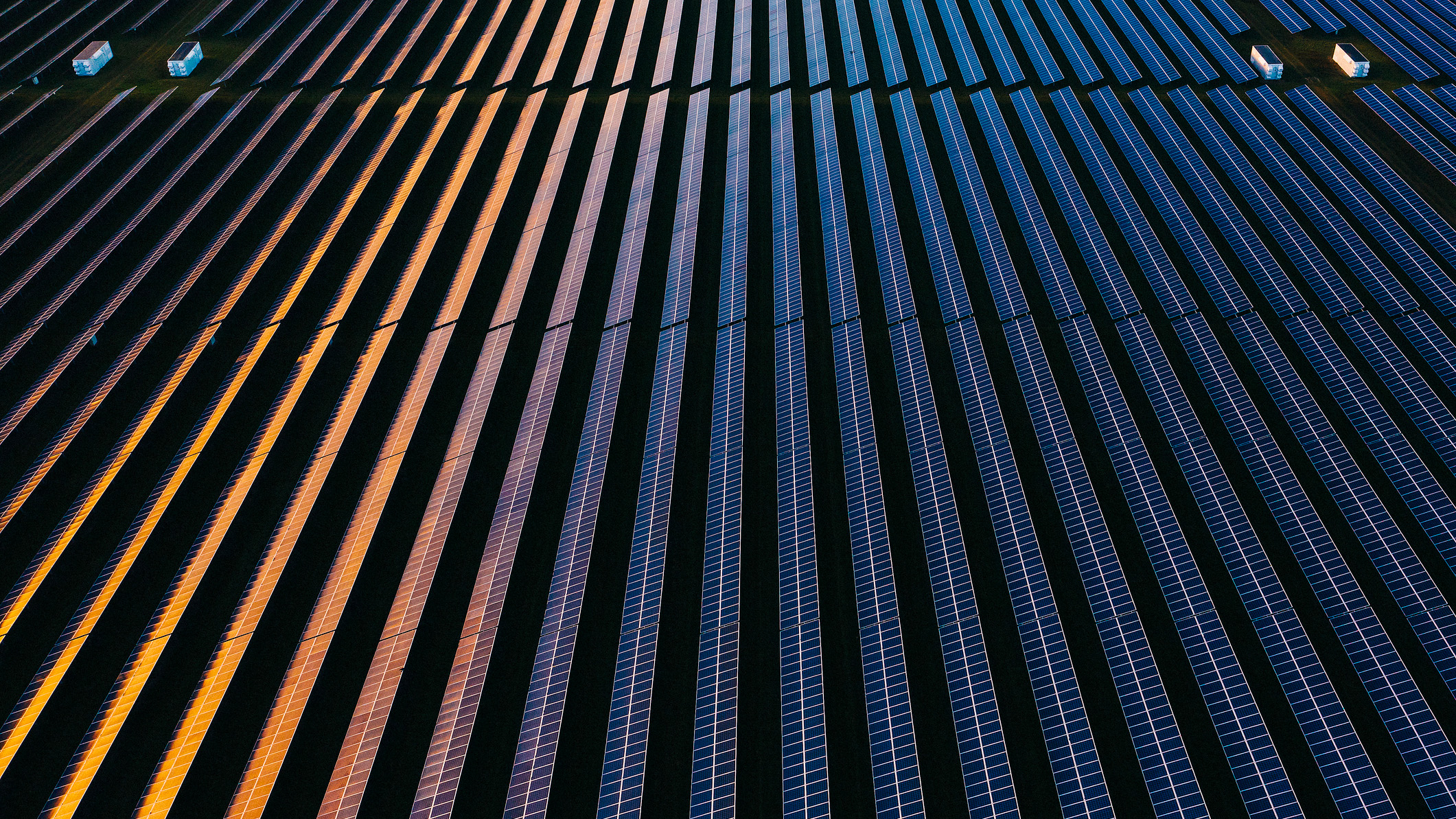

AI-driven net zero projects receive large cash injection from UK gov

AI-driven net zero projects receive large cash injection from UK govNews Funds have been awarded to projects that explore the development of less energy-intensive AI hardware and tech to improve renewables

-

Who is Ian Hogarth, the UK’s new leader for AI safety?

Who is Ian Hogarth, the UK’s new leader for AI safety?News The startup and AI expert will head up research into AI safety

-

MI5 reveals it has been working with an AI non-profit on national security since 2017

MI5 reveals it has been working with an AI non-profit on national security since 2017News The security service has been working with The Alan Turing Institute and has decided to unveil the partnership today to allow the pair to work more closely together